AI Model Benchmarking: The Visual Guide to Performance Metrics for Strategic Selection

Understanding the Benchmarking Landscape for AI Models

I've spent years evaluating AI models across various platforms and configurations. Through this experience, I've learned that effective benchmarking is not just about raw numbers—it's about visualizing performance data in ways that drive strategic decisions.

When I first dove into AI model evaluation, I quickly realized that performance benchmarking isn't just about running tests—it's about creating a strategic framework for model selection that aligns with specific business goals. In today's competitive landscape, finding the perfect balance between speed, cost, and accuracy has become the holy grail of AI implementation.

Standardized evaluation frameworks such as the AIIA DNN Benchmark provide a comprehensive approach to evaluating deep neural networks across various hardware and software configurations. These frameworks measure inference speed, accuracy, and resource utilization, giving us a consistent basis for comparing models.

The true power of benchmarking emerges when we transform complex performance data into visual insights. Through my work with various organizations, I've witnessed how visualization bridges the gap between technical metrics and business value, enabling stakeholders to make informed decisions quickly.

The Benchmarking Ecosystem

The AI model benchmarking ecosystem consists of multiple interconnected components that work together to provide comprehensive evaluation:

flowchart TD
    A[Model Selection] --> B[Performance Metrics]
    B --> C{Evaluation Criteria}
    C --> D[Speed & Efficiency]
    C --> E[Accuracy & Quality]
    C --> F[Cost & Resources]
    D --> G[Visualization]
    E --> G
    F --> G
    G --> H[Decision Making]
    H --> I[Deployment Strategy]
    I --> J[Continuous Monitoring]
    J --> A

Throughout my career developing AI implementation strategies, I've seen how proper model selection based on comprehensive benchmarking can dramatically affect business outcomes. In my experience, companies that invest in robust benchmarking processes tend to achieve faster time-to-value and higher ROI from their AI initiatives.

Essential Performance Metrics for Comprehensive Evaluation

Speed and Efficiency Metrics

When evaluating AI models for production environments, I always start with speed and efficiency metrics. These measurements tell us how quickly a model can process inputs and generate outputs, which is critical for applications with real-time requirements.
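To make these speed metrics concrete, here is a minimal Python sketch of a latency harness. It assumes `predict` is any callable that runs one inference. `benchmark_latency` and `toy_model` are hypothetical names, and the warm-up and repeat counts are illustrative defaults, not recommendations. The harness reports tail percentiles (p95, p99) alongside the mean because a few slow requests can dominate the experience in real-time applications.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark_latency(
    predict: Callable[[object], object],
    inputs: Sequence[object],
    warmup: int = 5,
    repeats: int = 50,
) -> Dict[str, float]:
    """Time single-input inference calls and summarize latency in milliseconds."""
    # Warm-up calls let caches, lazy initialization, and JIT compilation settle
    for i in range(warmup):
        predict(inputs[i % len(inputs)])

    latencies_ms = []
    for i in range(repeats):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        predict(x)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    # 99 cut points for the 1st..99th percentiles; "inclusive" avoids extrapolating past the max
    pct = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    mean_ms = statistics.fmean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "p50_ms": pct[49],
        "p95_ms": pct[94],
        "p99_ms": pct[98],
        # Sequential, batch-size-1 throughput; batching changes this number
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


# Hypothetical stand-in for a real model's inference call
def toy_model(x):
    return sum(v * v for v in x)


stats = benchmark_latency(toy_model, [[float(i)] * 1000 for i in range(10)])
print(stats)
```

In a real benchmark, the hardware, batch size, and input shapes would also be pinned so that results stay comparable across runs.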

[Chart: Inference Time Comparison Across Hardware]

Resource utilization is another critical aspect I consider when benchmarking models. Understanding how a model consumes CPU, GPU, and memory resources helps identify potential bottlenecks and optimization opportunities.

For edge device deployments, I've found that optimization techniques like quantization and pruning can dramatically improve performance. These techniques reduce model size and computational requirements, though they often come with accuracy trade-offs that must be carefully evaluated.
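Pruning is easy to illustrate on a flat list of weights. The sketch below shows unstructured magnitude pruning, which is one common variant: it zeroes out the smallest-magnitude fraction of the weights. `magnitude_prune` is a hypothetical helper, not a library function. Real frameworks prune per layer and usually fine-tune afterward to recover accuracy. The resulting zeros only become speedups when the runtime or hardware can exploit sparsity.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


# Hypothetical layer weights
weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.5]
print(magnitude_prune(weights, sparsity=0.5))
# [0.9, 0.0, 0.3, 0.0, -0.7, 0.0, 0.0, 0.5]
```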

When working with a healthcare client, I used PageOn.ai's data visualization capabilities to create interactive speed trade-off charts that helped executives understand the performance implications of different model architectures across their diverse hardware infrastructure.

Accuracy and Quality Metrics

While speed is important, accuracy often determines whether an AI model delivers business value. The specific metrics I use depend on the task type:

| Task Type | Primary Metrics | When to Use |
|---|---|---|
| Classification | Accuracy, Precision, Recall, F1-score, ROC-AUC | When class distribution matters or false positives/negatives have different costs |
| Regression | MSE, RMSE, MAE, R-squared | When predicting continuous values where error magnitude matters |
| Generative Models | BLEU, ROUGE, Perplexity, FID | When generating text, images, or other creative content |
| Object Detection | mAP, IoU | When identifying objects in images with bounding boxes |

Domain-specific performance indicators often provide the most valuable insights. For example, in healthcare applications, I've found that metrics like diagnostic accuracy and false negative rates are more meaningful than generic accuracy scores.
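In the classification row of the table, every metric except ROC-AUC comes from the four confusion-matrix counts. The same counts give the false-negative rate highlighted above for healthcare. The following is a minimal pure-Python sketch for binary labels, assuming 1 marks the positive class. `classification_metrics` is a hypothetical name. In practice, libraries such as scikit-learn provide tested equivalents.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary classification metrics from confusion-matrix counts (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def safe_div(a: float, b: float) -> float:
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)  # also called sensitivity
    return {
        "accuracy": safe_div(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        # Share of actual positives the model missed (equals 1 - recall)
        "false_negative_rate": safe_div(fn, tp + fn),
    }


# Hypothetical labels: 4 positives, 6 negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75, 'false_negative_rate': 0.25}
```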

[Chart: Classification Model Performance Comparison]

Creating comparative accuracy dashboards with AI Blocks has been one of my most effective strategies for communicating model performance to stakeholders. These visual tools help bridge the gap between technical metrics and business outcomes, making it easier for decision-makers to understand the trade-offs involved in model selection.

As my work with AI assistants has shown, visualizing these metrics helps teams make better decisions about which models to deploy for specific use cases.

Cost Analysis and Resource Optimization

In my experience advising companies on AI strategy, I've found that many organizations overlook the total cost of ownership (TCO) when selecting AI models. Beyond the initial development costs, we need to consider ongoing inference costs, infrastructure requirements, and maintenance expenses.

Training costs can vary dramatically across model architectures. Large language models might require significant upfront investment in compute resources, while simpler models may be trained on more modest hardware. However, inference costs often dominate the TCO over time, especially for models serving high-volume production traffic.
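A few lines of arithmetic are enough to check whether inference costs dominate over time. This sketch assumes a simple cost model with three parts:

- a one-off training cost,
- a blended per-request inference price,
- a flat monthly maintenance figure.

The `CostAssumptions` fields and every number in the example are hypothetical placeholders, not real pricing.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CostAssumptions:
    training_cost: float         # one-off training spend (currency units)
    cost_per_1k_requests: float  # blended inference cost per 1,000 requests
    requests_per_month: float
    monthly_maintenance: float   # monitoring, retraining, on-call time, etc.


def total_cost_of_ownership(c: CostAssumptions, months: int) -> Dict[str, float]:
    """Split TCO over `months` into training, inference, and maintenance."""
    inference = c.cost_per_1k_requests * c.requests_per_month / 1000 * months
    maintenance = c.monthly_maintenance * months
    total = c.training_cost + inference + maintenance
    return {
        "training": c.training_cost,
        "inference": inference,
        "maintenance": maintenance,
        "total": total,
        "inference_share": inference / total if total else 0.0,
    }


# Hypothetical model serving 50M requests per month over two years
tco = total_cost_of_ownership(
    CostAssumptions(training_cost=50_000, cost_per_1k_requests=0.40,
                    requests_per_month=50_000_000, monthly_maintenance=2_000),
    months=24,
)
print({k: round(v, 2) for k, v in tco.items()})
```

With these placeholder inputs, inference accounts for roughly 83% of the two-year total even though training is the most visible line item.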

Cost Impact of Model Optimization Techniques

Quantization and pruning have been game-changers for my clients with resource-constrained deployments. By reducing model precision from 32-bit floating point to an 8-bit format, either 8-bit floating point or 8-bit integer quantization, we've achieved up to a 4x reduction in model size (32 bits down to 8 bits per weight) with minimal accuracy loss. Similarly, pruning unnecessary connections can substantially reduce computational requirements.
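The 4x figure follows directly from storage width: each weight shrinks from 4 bytes to 1. Below is a minimal sketch of symmetric, per-tensor INT8 quantization that uses only Python's standard `array` module. `quantize_int8` and `dequantize` are hypothetical helpers. Production toolchains add calibration data, per-channel scales, and quantized kernels, so this sketch illustrates only the arithmetic, not a deployment recipe.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[array, float]:
    """Symmetric per-tensor INT8 quantization: q = round(w / scale), clipped to [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against an all-zero tensor
    scale = max_abs / 127.0
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q: array, scale: float) -> List[float]:
    """Map INT8 codes back to approximate float values."""
    return [v * scale for v in q]


# 'f' stores C floats (4 bytes on mainstream platforms); 'b' stores signed 8-bit integers
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.87, 0.05, 0.0, -0.21])  # hypothetical weights
q, scale = quantize_int8(list(weights))
recovered = dequantize(q, scale)

max_err = max(abs(a - b) for a, b in zip(weights, recovered))
print(f"FP32 bytes: {len(weights) * weights.itemsize}, INT8 bytes: {len(q) * q.itemsize}")
# Rounding error per weight is at most scale / 2
print(f"max abs error: {max_err:.4f}, bound scale/2: {scale / 2:.4f}")
```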

The cloud versus edge deployment decision requires careful cost-benefit analysis. While cloud deployments offer scalability and simplified infrastructure management, edge deployments can reduce latency and data transfer costs. I typically recommend hybrid approaches that leverage the strengths of both paradigms.

Cloud vs. Edge Deployment Cost Structure

flowchart TD
    A[Deployment Decision] --> B{Cloud or Edge?}
    B -->|Cloud| C[Cloud Costs]
    B -->|Edge| D[Edge Costs]
    C --> C1[Pay-per-use Compute]
    C --> C2[Data Transfer Fees]
    C --> C3[Storage Costs]
    C --> C4[API Management]
    D --> D1[Hardware Acquisition]
    D --> D2[Maintenance]
    D --> D3[Power Consumption]
    D --> D4[Software Updates]
    C1 --> E[Total Cost of Ownership]
    C2 --> E
    C3 --> E
    C4 --> E
    D1 --> E
    D2 --> E
    D3 --> E
    D4 --> E
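A simple break-even calculation turns the cost structure above into a decision: how many months until an up-front edge hardware purchase is paid back by avoided cloud spend? The sketch assumes flat monthly costs and leaves out storage and API management for brevity. All function names and figures are hypothetical.

```python
import math


def cloud_monthly_cost(requests: float, cost_per_1k: float,
                       egress_gb: float, cost_per_gb: float) -> float:
    """Pay-per-use compute plus data-transfer fees (storage and API management omitted)."""
    return requests / 1000 * cost_per_1k + egress_gb * cost_per_gb


def edge_break_even_months(hardware_cost: float, edge_monthly_cost: float,
                           cloud_cost_per_month: float) -> float:
    """Months until the up-front hardware spend is recovered by avoided cloud costs."""
    monthly_savings = cloud_cost_per_month - edge_monthly_cost
    if monthly_savings <= 0:
        return math.inf  # edge never pays back under these assumptions
    return hardware_cost / monthly_savings


# Hypothetical inputs: 20M requests/month and 500 GB of egress in the cloud, versus
# 30,000 units of edge hardware costing 1,500/month to maintain, power, and update
cloud = cloud_monthly_cost(20_000_000, cost_per_1k=0.25, egress_gb=500, cost_per_gb=0.09)
months = edge_break_even_months(30_000, edge_monthly_cost=1_500, cloud_cost_per_month=cloud)
print(f"cloud: {cloud:,.0f} per month; edge break-even after {months:.1f} months")
```

Varying the request volume in this calculation shows the usage level at which a hybrid or edge-first design starts to pay off.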

Using PageOn.ai's visualization tools, I've helped teams create interactive cost-benefit scenarios that dynamically adjust based on expected usage patterns and business constraints. This approach has been particularly valuable for startups that need to optimize their AI investments for maximum ROI.

By focusing on AI productivity gains alongside cost considerations, we can build a more complete picture of the value proposition for each AI model option.

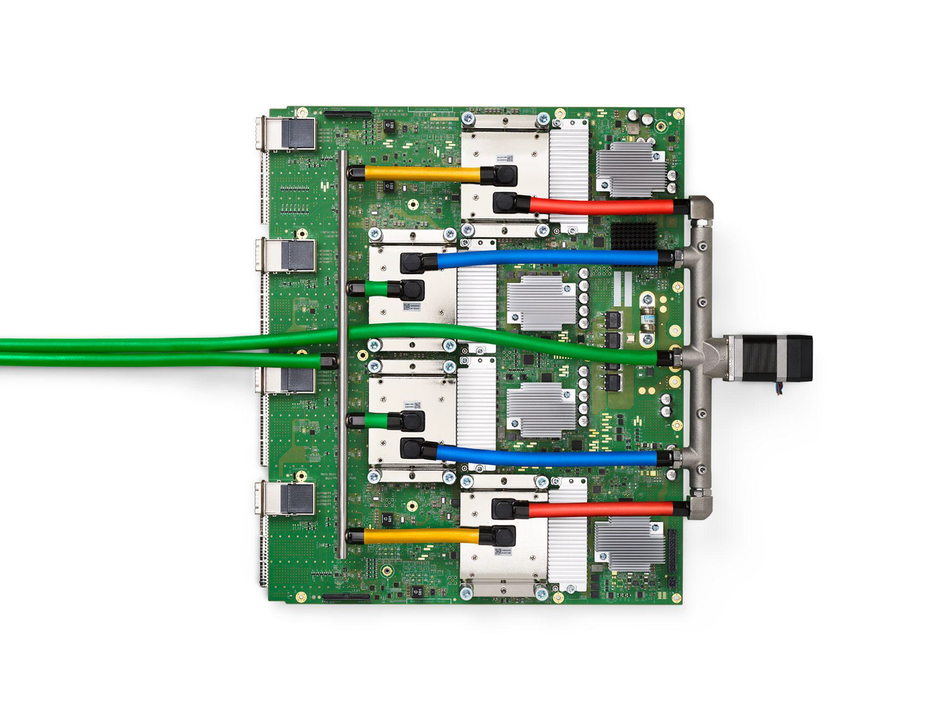

Benchmarking Across Different Hardware and Software Configurations

One of the most challenging aspects of AI model benchmarking is accounting for the vast diversity of hardware and software environments. I've learned that a model's performance can vary dramatically depending on the framework, hardware accelerators, and optimization techniques applied.

Framework Performance Comparison

In my benchmarking work, I've found that framework selection can impact performance by 2-3x for identical models. TensorFlow excels in production deployment scenarios, while PyTorch remains popular for research and experimentation. Converting models to ONNX provides a standardized format that can be optimized for various hardware targets.

Hardware-specific optimizations can yield dramatic performance improvements. Intel's OpenVINO toolkit has helped my clients achieve up to 4x speedup on Intel CPUs, while NVIDIA's TensorRT has provided similar gains on GPU hardware. For specialized workloads, I've even explored custom FPGA implementations that deliver order-of-magnitude improvements in efficiency.

Acceleration Engine Impact on Performance

graph TD
    A[AI Model] --> B[Acceleration Engines]
    B --> C[Hardware-Specific]
    B --> D[Framework-Specific]
    C --> E[CPU: OpenVINO]
    C --> F[GPU: TensorRT]
    C --> G[TPU: TensorFlow]
    C --> H[FPGA: Custom]
    D --> I[Graph Optimization]
    D --> J[Operator Fusion]
    D --> K[Memory Planning]
    E --> L[Optimized Performance]
    F --> L
    G --> L
    H --> L
    I --> L
    J --> L
    K --> L

Multi-environment testing is essential for robust deployment. I recommend creating a comprehensive test matrix that covers all target environments to identify potential performance bottlenecks before production deployment. This approach has saved my clients countless hours of troubleshooting and optimization work.
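A test matrix like this can be generated instead of maintained by hand. The sketch below enumerates hardware, runtime, and batch-size combinations with `itertools.product` and filters out pairings that do not apply. The environment lists and the `is_supported` rules are hypothetical simplifications. Check each runtime's documentation for its actual hardware support.

```python
from itertools import product
from typing import Dict, List

# Hypothetical target environments; substitute your actual deployment fleet
HARDWARE = ["Intel x86 CPU", "NVIDIA GPU", "ARM CPU (edge)"]
RUNTIMES = ["PyTorch", "ONNX Runtime", "TensorRT", "OpenVINO"]
BATCH_SIZES = [1, 8, 32]


def is_supported(hardware: str, runtime: str) -> bool:
    """Simplified compatibility rules for this illustration only."""
    if runtime == "TensorRT":
        return hardware == "NVIDIA GPU"  # TensorRT targets NVIDIA GPUs
    if runtime == "OpenVINO":
        return hardware != "NVIDIA GPU"  # OpenVINO targets Intel (and some ARM) hardware
    return True


def build_test_matrix() -> List[Dict[str, object]]:
    """Every supported (hardware, runtime, batch size) combination to benchmark."""
    return [
        {"hardware": hw, "runtime": rt, "batch_size": bs}
        for hw, rt, bs in product(HARDWARE, RUNTIMES, BATCH_SIZES)
        if is_supported(hw, rt)
    ]


matrix = build_test_matrix()
print(f"{len(matrix)} benchmark configurations")
for cfg in matrix[:3]:
    print(cfg)
```

Each configuration can then go through the same latency and accuracy harness. Storing results keyed by configuration lets a bottleneck be traced to a specific environment.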

Using PageOn.ai's visual tools, I've created interactive comparison matrices that help teams understand the performance implications of different hardware-software combinations. These visualizations make it easier to identify the optimal configuration for specific use cases and constraints.

When comparing AI products, for example evaluating Gemini against other AI assistants or weighing Google's AI search against its alternatives, these benchmarking approaches become especially valuable for making informed decisions.

Building an Effective Benchmarking Strategy

Through years of guiding organizations through AI adoption, I've found that the most successful benchmarking strategies are those that align closely with specific business objectives. Generic benchmarks might look impressive on paper, but they often fail to predict real-world performance for specific use cases.

I always recommend establishing meaningful baselines before diving into benchmarking. These baselines might include current system performance, competitor capabilities, or industry standards. Having clear reference points makes it much easier to evaluate the relative performance of different models.

Continuous Benchmarking Throughout Model Lifecycle

flowchart LR
    A[Data Collection] --> B[Model Training]
    B --> C[Initial Benchmarking]
    C --> D[Optimization]
    D --> E[Re-Benchmarking]
    E --> F[Deployment]
    F --> G[Production Monitoring]
    G --> H[Performance Drift Detection]
    H --> I[Model Retraining]
    I --> B
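The Performance Drift Detection stage in this loop often has to work before ground-truth labels arrive. Teams therefore commonly monitor shifts in input features or model scores as a proxy. Below is a minimal sketch of one such measure, the Population Stability Index (PSI). `psi` is a hypothetical helper, and the ten equal-width bins are an assumption. The often-quoted rule of thumb that PSI above about 0.25 signals a significant shift is a heuristic, not a standard.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference data; out-of-range values go to the end bins
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps the logarithm finite when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 100 for i in range(100)]         # hypothetical scores at deployment time
production = [0.5 + i / 200 for i in range(100)]  # hypothetical scores after a shift
print(f"PSI vs itself: {psi(reference, reference):.3f}")
print(f"PSI after shift: {psi(reference, production):.3f}")
```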

Integrating benchmarking into the MLOps pipeline has been a game-changer for my clients. By automating performance testing at each stage of the model lifecycle, we can catch regressions early and ensure that optimizations actually deliver the expected improvements. This continuous benchmarking approach is particularly valuable for teams working with rapidly evolving models.
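In a CI/CD or MLOps pipeline, this kind of automated check often comes down to comparing a candidate's benchmark results against a stored baseline. The build fails when any metric worsens beyond a tolerance. The sketch below makes three assumptions:

- metrics arrive as a flat dictionary,
- a hypothetical `HIGHER_IS_BETTER` table records each metric's direction,
- a 2% tolerance is acceptable (the value is only illustrative).

A real gate would usually compare distributions from repeated runs rather than single numbers.

```python
from typing import Dict, List

# Hypothetical metric directions: True means higher is better
HIGHER_IS_BETTER = {
    "accuracy": True,
    "f1": True,
    "p95_latency_ms": False,
    "cost_per_1k": False,
}


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.02,  # relative slack allowed before flagging (2%)
) -> List[str]:
    """List the metrics that worsened by more than `tolerance` relative to baseline."""
    problems = []
    for name, higher_is_better in HIGHER_IS_BETTER.items():
        if name not in baseline or name not in candidate:
            continue
        base, new = baseline[name], candidate[name]
        if base == 0:
            continue  # relative change is undefined; check such metrics separately
        change = (new - base) / abs(base)
        worsening = -change if higher_is_better else change
        if worsening > tolerance:
            problems.append(f"{name}: {base} -> {new} ({change:+.1%})")
    return problems


# Hypothetical benchmark results for the current model and a candidate
baseline = {"accuracy": 0.91, "p95_latency_ms": 40.0, "cost_per_1k": 0.30}
candidate = {"accuracy": 0.905, "p95_latency_ms": 52.0, "cost_per_1k": 0.30}

regressions = find_regressions(baseline, candidate)
for msg in regressions:
    print("REGRESSION:", msg)
```

Here the small accuracy dip stays within tolerance, but the 30% latency increase is flagged. A CI step could exit with a non-zero status whenever the returned list is non-empty.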

Strategic Benchmarking Framework

Using PageOn.ai's Vibe Creation tools, I've helped teams transform complex benchmarking strategies into clear visual roadmaps. These visualizations serve as both planning tools and communication aids, ensuring that all stakeholders understand the benchmarking process and its expected outcomes.

A well-designed benchmarking strategy should evolve alongside your AI implementation, continuously adapting to new business requirements, technological advancements, and competitive pressures.

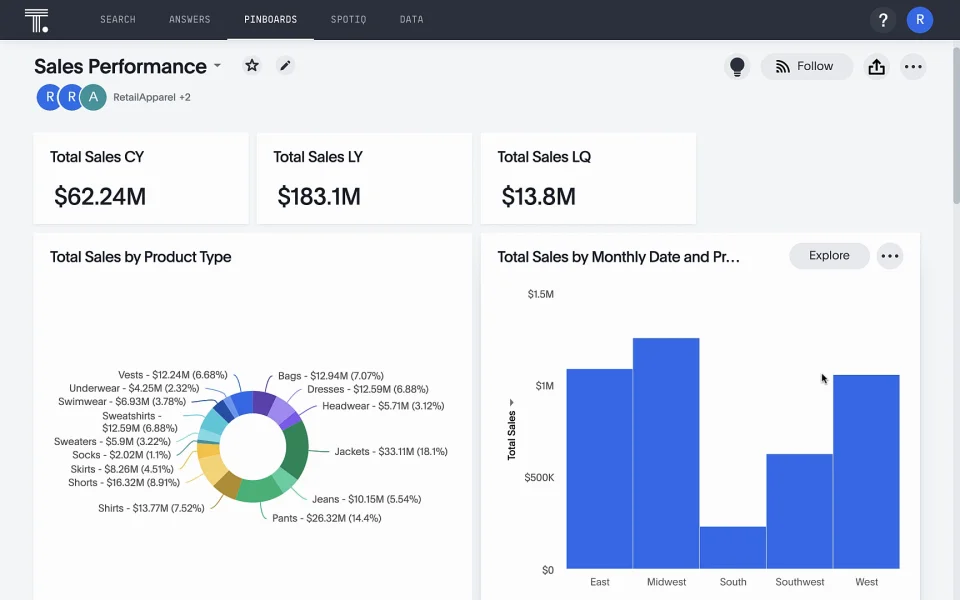

From Metrics to Decision-Making

The ultimate goal of any benchmarking effort is to inform better decision-making. Throughout my career advising organizations on AI strategy, I've developed a systematic approach to translate technical benchmarks into business value metrics that resonate with executive stakeholders.

Aligning model selection with strategic organizational goals requires a deep understanding of both the technical capabilities of different models and the specific business objectives they're meant to serve. I work closely with cross-functional teams to identify the key performance indicators that matter most for each use case.

Technical Metrics to Business Value Translation

flowchart TD
    A[Technical Metrics] --> B{Metric Type}
    B -->|Speed| C[Business Impact]
    B -->|Accuracy| D[Business Impact]
    B -->|Cost| E[Business Impact]
    C --> C1[Customer Experience]
    C --> C2[Throughput Capacity]
    C --> C3[Time-to-Value]
    D --> D1[Error-Related Costs]
    D --> D2[Customer Satisfaction]
    D --> D3[Regulatory Compliance]
    E --> E1[TCO]
    E --> E2[Scalability Economics]
    E --> E3[Resource Allocation]
    C1 --> F[ROI Calculation]
    C2 --> F
    C3 --> F
    D1 --> F
    D2 --> F
    D3 --> F
    E1 --> F
    E2 --> F
    E3 --> F
    F --> G[Decision Framework]
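The final Decision Framework step can be as simple as a weighted scoring matrix. In this sketch, each metric is min-max normalized across the candidate models, flipped for metrics where lower is better, and combined using business-priority weights. The `WEIGHTS` values, metric names, and candidate figures are hypothetical. Choosing the weights is exactly where stakeholder input belongs.

```python
from typing import Dict, List, Tuple

# Hypothetical business-priority weights (summing to 1) and metric directions
WEIGHTS = {"accuracy": 0.5, "p95_latency_ms": 0.3, "cost_per_1k": 0.2}
HIGHER_IS_BETTER = {"accuracy": True, "p95_latency_ms": False, "cost_per_1k": False}


def rank_models(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Weighted sum of min-max-normalized metrics, best model first."""
    scores = {name: 0.0 for name in candidates}
    for metric, weight in WEIGHTS.items():
        values = [m[metric] for m in candidates.values()]
        lo, hi = min(values), max(values)
        for name, m in candidates.items():
            if hi == lo:
                norm = 1.0  # all candidates tie on this metric
            else:
                norm = (m[metric] - lo) / (hi - lo)
                if not HIGHER_IS_BETTER[metric]:
                    norm = 1.0 - norm  # lower latency or cost earns a higher score
            scores[name] += weight * norm
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical benchmark results for three candidate models
candidates = {
    "large": {"accuracy": 0.94, "p95_latency_ms": 180.0, "cost_per_1k": 2.00},
    "medium": {"accuracy": 0.92, "p95_latency_ms": 60.0, "cost_per_1k": 0.60},
    "small": {"accuracy": 0.88, "p95_latency_ms": 15.0, "cost_per_1k": 0.10},
}
for name, score in rank_models(candidates):
    print(f"{name:<7} {score:.3f}")
```

With these numbers, "medium" ranks first at about 0.70, and the most accurate and cheapest models tie at 0.5. Min-max scaling is relative to the candidate set, so adding or removing a model can change how the others rank.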

For commercial AI models, I pay particular attention to conversion rate and business outcome metrics. These metrics directly connect model performance to revenue generation, making it easier to justify investments in more sophisticated (and often more expensive) models when they deliver measurably better business results.

Risk Assessment in Model Selection

Risk assessment is a critical component of the decision-making process. Models with high average performance but high variability may introduce operational risks that more stable models avoid. I help teams quantify these risks and incorporate them into their decision frameworks.
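One simple way to quantify this risk is to benchmark each model over several runs or data slices and penalize the mean score by its spread. The sketch below computes the mean minus a multiple of the standard deviation. `risk_adjusted_score`, its `risk_aversion` parameter, and the per-slice scores are all hypothetical. When distributions are skewed, percentile-based measures such as the score on the worst 5% of slices are a reasonable alternative.

```python
import statistics
from typing import Sequence


def risk_adjusted_score(run_scores: Sequence[float], risk_aversion: float = 1.0) -> float:
    """Mean score minus `risk_aversion` times the sample standard deviation."""
    if len(run_scores) < 2:
        raise ValueError("need at least two runs to estimate variability")
    return statistics.fmean(run_scores) - risk_aversion * statistics.stdev(run_scores)


# Hypothetical accuracy across five evaluation slices
model_a = [0.90, 0.78, 0.95, 0.81, 0.93]  # higher average, erratic
model_b = [0.86, 0.85, 0.87, 0.86, 0.86]  # slightly lower average, stable

for name, scores in [("A", model_a), ("B", model_b)]:
    print(name, f"mean={statistics.fmean(scores):.3f}",
          f"risk-adjusted={risk_adjusted_score(scores):.3f}")
```

Model A wins on average accuracy, but model B comes out ahead once variability is penalized. This is the kind of trade-off a decision framework should surface explicitly.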

Using PageOn.ai's visual decision frameworks, I've created executive-friendly dashboards that present complex benchmarking data in an accessible format. These tools have been instrumental in helping leadership teams make informed decisions about AI model selection and deployment strategies.

Future-Proofing: Adapting Benchmarks for Emerging AI Technologies

The AI landscape is evolving at a breathtaking pace, with new model architectures and capabilities emerging almost daily. Throughout my career, I've learned that effective benchmarking strategies must be adaptable to accommodate these rapid changes.

For generative AI and large language models, traditional metrics like accuracy and precision are often insufficient. I've been exploring alternative evaluation approaches that better capture the nuanced capabilities of these models, including human evaluation protocols, reference-free quality metrics, and task-specific benchmarks.

Evolving Metrics for Generative AI

flowchart TD
    A[Generative AI Evaluation] --> B[Text Generation]
    A --> C[Image Generation]
    A --> D[Code Generation]
    A --> E[Multimodal Generation]
    B --> B1[BLEU/ROUGE Scores]
    B --> B2[Perplexity]
    B --> B3[Human Evaluation]
    B --> B4[Task Completion]
    C --> C1[FID Score]
    C --> C2[Inception Score]
    C --> C3[User Preference]
    C --> C4[CLIP Score]
    D --> D1[Functional Correctness]
    D --> D2[Code Quality]
    D --> D3[Runtime Efficiency]
    E --> E1[Cross-modal Coherence]
    E --> E2[Information Alignment]
    E --> E3[Context Relevance]
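For the Functional Correctness branch of code generation, a widely used metric is pass@k: the probability that at least one of k sampled solutions passes the unit tests. The sketch below implements the unbiased estimator popularized by the HumanEval benchmark, 1 - C(n-c, k) / C(n, k), where n samples are generated and c of them pass. The function names and the example results are hypothetical. A full evaluation averages the estimate over every problem.

```python
from math import comb
from statistics import fmean
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every subset of k samples contains at least one passing sample
    # 1 - P(all k drawn samples fail), sampling without replacement
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given (n, c) pairs."""
    return fmean([pass_at_k(n, c, k) for n, c in results])


# Hypothetical results: 10 samples per problem, with 3, 0, and 10 passing
results = [(10, 3), (10, 0), (10, 10)]
print(f"pass@1 = {mean_pass_at_k(results, 1):.3f}")
print(f"pass@5 = {mean_pass_at_k(results, 5):.3f}")
```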

Responsible AI considerations are becoming increasingly important in benchmarking. Beyond performance, I now routinely evaluate models for fairness, bias, transparency, and explainability. These ethical dimensions are not just regulatory concerns but critical factors in building trustworthy AI systems that deliver sustainable business value.
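Fairness can be benchmarked with the same rigor as accuracy. The sketch below computes two common group-fairness gaps from predictions:

- the difference in selection rates between groups (demographic parity),
- the difference in true-positive rates between groups (equal opportunity).

The function names and toy data are hypothetical. Which definition is appropriate depends on the use case and on applicable regulation.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence


def group_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Per-group selection rate (share predicted positive) and true-positive rate."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += int(p == 1)
        c["pos"] += int(t == 1)
        c["tp"] += int(t == 1 and p == 1)
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],
            # TPR is undefined for a group with no actual positives
            "tpr": c["tp"] / c["pos"] if c["pos"] else math.nan,
        }
        for g, c in counts.items()
    }


def max_gap(rates: Dict[str, Dict[str, float]], key: str) -> float:
    """Largest between-group difference for `key`; 0.0 means parity."""
    values = [r[key] for r in rates.values() if not math.isnan(r[key])]
    return max(values) - min(values) if values else 0.0


# Hypothetical labels, predictions, and group membership
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
print("demographic parity gap:", max_gap(rates, "selection_rate"))  # 0.75 - 0.25
print("equal opportunity gap:", max_gap(rates, "tpr"))             # 1.0 - 0.5
```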

[Chart: AI Sustainability Impact]

As we look toward multimodal AI systems that combine vision, language, and other modalities, benchmarking becomes even more complex. I'm working on integrated evaluation frameworks that can assess cross-modal capabilities while still providing meaningful, actionable insights for decision-makers.

Using PageOn.ai's visualization capabilities, I've created interactive dashboards that track AI technology evolution and performance trends over time. These tools help organizations anticipate future requirements and make strategic investments in AI capabilities that will remain valuable as the technology landscape evolves.

Case Studies: Benchmarking Success Stories

Throughout my career, I've had the privilege of guiding organizations across various industries in their AI benchmarking journeys. These real-world experiences have provided invaluable insights into the practical challenges and opportunities of performance-driven model selection.

Healthcare: Optimizing Diagnostic Models

A leading healthcare provider needed to deploy AI-powered diagnostic tools across facilities with varying computational resources. We developed a custom benchmarking framework that evaluated models not just on accuracy, but also on inference speed across different hardware configurations.

Using quantization and knowledge distillation, we reduced model size by 78% while maintaining diagnostic accuracy within 2% of the original model. This optimization enabled deployment on edge devices in remote clinics, dramatically expanding access to AI-assisted diagnostics.

[Chart: Healthcare Case Study: Performance Impact]

E-commerce: Balancing Recommendation Quality and Latency

An e-commerce platform was struggling with slow recommendation load times during peak shopping periods. Through comprehensive benchmarking, we identified that their sophisticated recommendation model was creating a bottleneck.

We implemented a tiered approach, using a lightweight model for initial recommendations and progressively loading more sophisticated recommendations as users engaged with the page. This strategy reduced initial page load times by 68% while maintaining overall recommendation quality, resulting in a 12% increase in conversion rates.

Financial Services: Optimizing for Regulatory Compliance

A financial institution needed to deploy fraud detection models that balanced accuracy, explainability, and computational efficiency. Traditional benchmarking metrics weren't capturing the regulatory requirements for model transparency.

We developed a custom benchmarking framework that incorporated explainability metrics alongside performance measures. This approach led to the selection of a gradient-boosted tree model that offered the optimal balance between detection accuracy, inference speed, and feature importance transparency required by regulators.

Using PageOn.ai's AI Blocks, we created interactive case study visualizations that helped our clients understand the benchmarking process and results. These visual tools were particularly valuable for communicating complex technical concepts to non-technical stakeholders and building organizational buy-in for AI initiatives.

These success stories demonstrate that effective benchmarking isn't just about measuring performance—it's about aligning technical capabilities with specific business objectives and constraints to deliver maximum value.

Transform Your AI Model Selection with PageOn.ai

Ready to visualize your AI benchmarking data and make more informed model selection decisions? PageOn.ai's powerful visualization tools help you transform complex performance metrics into clear, actionable insights that drive business value.

Conclusion: The Future of AI Model Benchmarking

As we look to the future, I believe that AI model benchmarking will continue to evolve from a purely technical exercise into a strategic business function. The organizations that excel will be those that develop comprehensive, business-aligned benchmarking practices that inform not just model selection, but broader AI strategy.

The growing complexity of AI models and deployment environments makes visual communication of benchmarking results more important than ever. Tools like PageOn.ai that transform complex data into clear, actionable visualizations will play an increasingly crucial role in helping organizations navigate the AI landscape.

Whether you're just beginning your AI journey or looking to optimize existing implementations, I encourage you to invest in robust benchmarking practices that balance speed, cost, and accuracy in alignment with your specific business objectives. The insights you gain will pay dividends in more effective AI deployments and greater business value.

By combining rigorous technical evaluation with strategic business alignment and powerful visualization tools, you can make informed model selection decisions that drive competitive advantage in an increasingly AI-powered world.

You Might Also Like

Transform Your AI Results by Mastering the Art of Thinking in Prompts | Strategic AI Communication

Master the strategic mindset that transforms AI interactions from fuzzy requests to crystal-clear outputs. Learn professional prompt engineering techniques that save 20+ hours weekly.

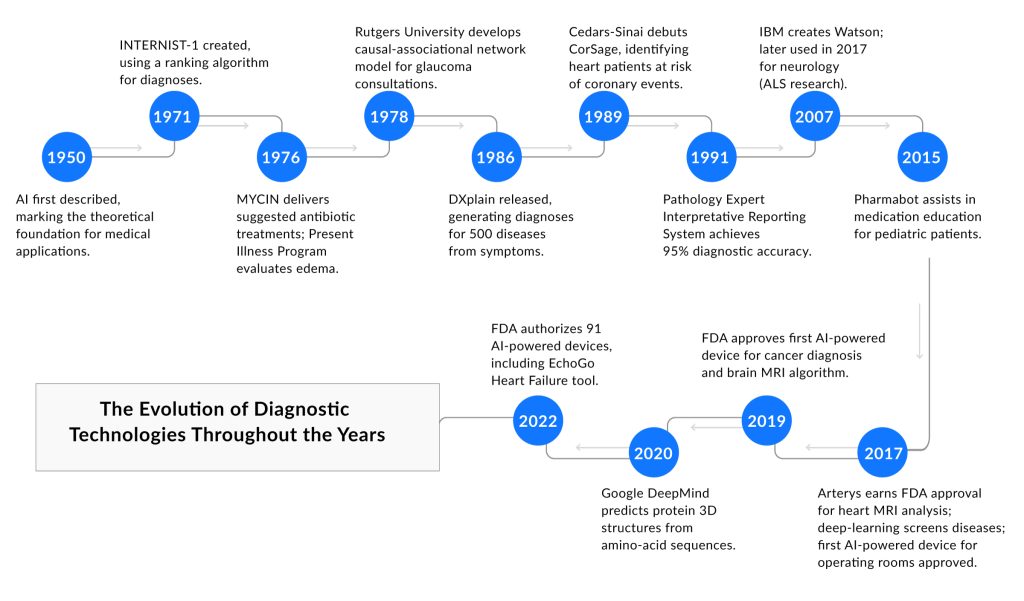

Visualizing the AI Revolution: From AlphaGo to AGI Through Key Visual Milestones

Explore the visual journey of AI evolution from AlphaGo to AGI through compelling timelines, infographics and interactive visualizations that map key breakthroughs in artificial intelligence.

Prompt Chaining Techniques That Scale Your Business Intelligence | Advanced AI Strategies

Master prompt chaining techniques to transform complex business intelligence workflows into scalable, automated insights. Learn strategic AI methodologies for data analysis.

Transforming Marketing Teams: From AI Hesitation to Strategic Implementation Success

Discover proven strategies to overcome the four critical barriers blocking marketing AI adoption. Transform your team from hesitant observers to strategic AI implementers with actionable roadmaps and success metrics.