Demystifying AI Compute: A Visual Guide to Requirements and Costs

Navigate the complex landscape of AI computational demands, infrastructure requirements, and economic considerations

The rapid advancement of artificial intelligence has brought with it an exponential increase in computational requirements and associated costs. Understanding these factors is crucial for organizations and individuals looking to leverage AI effectively while managing resources efficiently. This comprehensive guide breaks down the complex world of AI compute into visual, accessible insights.

Understanding the AI Compute Landscape

The computational requirements for artificial intelligence have grown at a staggering pace, creating both challenges and opportunities for organizations implementing AI solutions. Understanding this landscape is the first step toward making informed decisions about AI investments and infrastructure planning.

The Relationship Between Model Complexity and Compute

As AI models increase in complexity (measured by parameters and layers), their computational requirements grow exponentially rather than linearly. This relationship fundamentally shapes the economics of AI development.

Key AI Computing Terminology

| Term | Definition | Relevance to AI Costs |

|---|---|---|

| FLOPs | Floating Point Operations Per Second - a measure of computational performance | Higher FLOP requirements directly translate to increased hardware costs and energy consumption |

| GPU Hours | Cumulative time spent using GPU resources for AI workloads | Primary billing metric for cloud-based AI services and a key component of total training costs |

| Training vs. Inference | Training builds the model; inference runs the model to make predictions | Training is typically a one-time high cost; inference is an ongoing operational cost |

| Petaflop-days | A unit measuring total computational work (1 petaflop for one day) | Used to compare the computational requirements of different AI models |

How PageOn.ai Transforms Complex AI Compute Concepts

PageOn.ai's visual tools allow you to create clear, engaging representations of complex computational concepts – making it easier to communicate technical requirements to non-technical stakeholders. With intuitive diagram tools, you can transform abstract metrics like FLOPs and parameter counts into accessible visuals that drive understanding.

The Architecture Behind AI Computing Power

Behind every AI system is a sophisticated hardware architecture specifically designed to handle the unique computational patterns of machine learning workloads. Understanding these components is crucial for building efficient AI infrastructure and making informed purchasing decisions.

The Hardware Ecosystem

AI Computing Hardware Ecosystem

flowchart TD

AI[AI System] --> Compute[Computing Units]

AI --> Memory[Memory Systems]

AI --> Infrastructure[Infrastructure]

Compute --> GPU[GPUs]

Compute --> TPU[TPUs]

Compute --> ASIC[AI ASICs]

Compute --> CPU[CPUs]

Memory --> HBM[High Bandwidth Memory]

Memory --> DRAM[System DRAM]

Memory --> Storage[Fast Storage - SSDs]

Infrastructure --> Power[Power Delivery]

Infrastructure --> Cooling[Cooling Systems]

Infrastructure --> Network[Network Fabric]

class AI,Compute,Memory,Infrastructure,GPU,TPU,ASIC,CPU,HBM,DRAM,Storage,Power,Cooling,Network nodeStyle;

classDef nodeStyle fill:#fff,stroke:#FF8000,stroke-width:2px,color:#333;

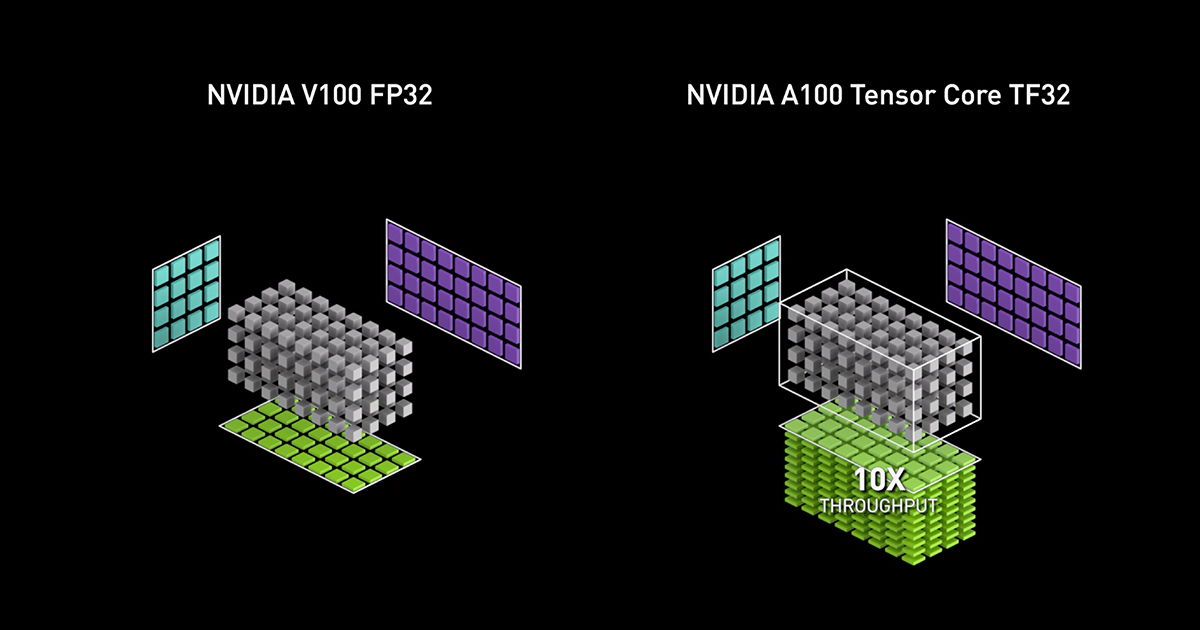

GPUs

Graphics Processing Units have become the backbone of AI computing due to their ability to perform thousands of calculations simultaneously. Modern AI-optimized GPUs like NVIDIA's A100 or H100 contain specialized tensor cores that further accelerate deep learning operations.

TPUs

Tensor Processing Units are Google's custom-developed ASIC chips specifically designed for machine learning workloads. They're optimized for Google AI frameworks like TensorFlow and offer exceptional performance for certain types of neural networks.

Memory Systems

High bandwidth memory (HBM) is critical for AI performance. Large models require extensive memory capacity with fast access times. Memory bottlenecks often become the limiting factor in scaling AI systems effectively.

Infrastructure Requirements

Advanced cooling systems, robust power delivery, and high-speed interconnects are essential infrastructure components that significantly impact total cost of ownership for AI systems.

Consumer vs. Enterprise AI Compute

Visualizing AI Architecture with PageOn.ai

PageOn.ai's AI Blocks feature helps you create clear visual representations of complex AI architectures. Whether you need to explain parallel processing concepts to executives or design a hardware procurement plan, the intuitive visual tools make it simple to communicate technical specifications effectively.

Training Costs Breakdown

Training large AI models represents one of the most significant investments in the AI development process. Understanding the factors that drive these costs is essential for budgeting and resource planning.

Factors Influencing Training Expenses

flowchart TD

Training[Training Costs] --> ModelSize[Model Size]

Training --> DataSize[Dataset Size]

Training --> Iterations[Training Iterations]

Training --> Hardware[Hardware Choice]

ModelSize --> Parameters[Parameter Count]

ModelSize --> Architecture[Model Architecture]

DataSize --> Preprocessing[Preprocessing Costs]

DataSize --> Storage[Storage Costs]

DataSize --> Quality[Data Quality Requirements]

Iterations --> Convergence[Convergence Rate]

Iterations --> Experiments[Experimental Iterations]

Hardware --> Cloud[Cloud vs On-Premise]

Hardware --> Specs[Hardware Specifications]

class Training,ModelSize,DataSize,Iterations,Hardware,Parameters,Architecture,Preprocessing,Storage,Quality,Convergence,Experiments,Cloud,Specs nodeStyle;

classDef nodeStyle fill:#fff,stroke:#FF8000,stroke-width:2px,color:#333;

The primary drivers of training costs include model size (number of parameters), dataset scale, training iterations required for convergence, and hardware selection. As these factors increase, costs can grow exponentially rather than linearly.

Case Studies: Real Training Costs of Landmark Models

The training costs for large foundation models have reached unprecedented levels. GPT-4's training is estimated to have cost tens of millions of dollars, making such development inaccessible to all but the largest organizations. These costs include not only the direct compute expenses but also the engineering resources required to develop and optimize the training process.

Cost Breakdown Components

Creating Cost Visualizations with PageOn.ai

When preparing budget proposals or ROI analyses for AI projects, PageOn.ai's visualization tools can transform complex cost structures into clear, compelling visuals. The platform's intuitive interface allows you to create professional-quality cost breakdowns and comparisons that help stakeholders understand the financial implications of AI investments.

Inference Economics: Running AI in Production

While training costs are often in the spotlight, the ongoing operational expenses of running AI models for inference (making predictions) typically represent the largest portion of total cost of ownership over time. Understanding the economics of inference is crucial for sustainable AI deployments.

Operational Cost Structure for Deployed AI

flowchart TD

Inference[Inference Costs] --> ComputeModel[Compute Resource Model]

Inference --> ProcessingMode[Processing Mode]

Inference --> DeploymentLocation[Deployment Location]

ComputeModel --> OnDemand[On-Demand/Pay-per-use]

ComputeModel --> Reserved[Reserved Capacity]

ComputeModel --> Spot[Spot/Preemptible]

ProcessingMode --> RealTime[Real-time Inference]

ProcessingMode --> Batch[Batch Processing]

DeploymentLocation --> Cloud[Cloud-based]

DeploymentLocation --> Edge[Edge Computing]

DeploymentLocation --> Hybrid[Hybrid Approach]

RealTime --> LowLatency[Low Latency Requirements]

RealTime --> HighAvailability[High Availability Needs]

Batch --> CostEfficiency[Cost Efficiency]

Batch --> TimeTolerance[Time Tolerance]

class Inference,ComputeModel,ProcessingMode,DeploymentLocation,OnDemand,Reserved,Spot,RealTime,Batch,Cloud,Edge,Hybrid,LowLatency,HighAvailability,CostEfficiency,TimeTolerance nodeStyle;

classDef nodeStyle fill:#fff,stroke:#FF8000,stroke-width:2px,color:#333;

On-demand vs. Reserved Resources

On-demand resources provide flexibility but at premium pricing. Reserved instances can reduce costs by 40-60% for predictable workloads but require upfront commitment.

Batch vs. Real-time Processing

Real-time inference requires constant resource availability and typically costs 3-5x more than batch processing. For use cases that can tolerate delay, batch processing offers significant cost savings.

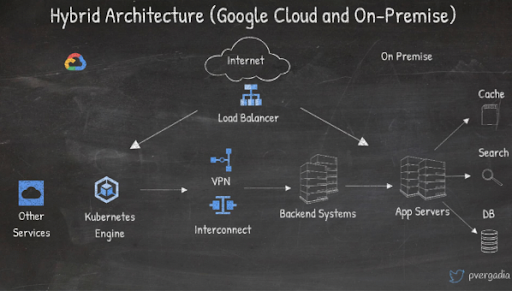

Cloud vs. Edge Deployment

Cloud deployments offer scalability but incur ongoing costs. Edge computing can reduce latency and long-term expenses for certain applications, particularly those used by AI assistants in IoT environments.

Cost Optimization Techniques

Model Optimization Techniques

- Quantization: Reduces model precision from 32-bit to 8-bit or even lower, decreasing memory requirements and increasing throughput.

- Pruning: Removes unnecessary connections in neural networks, reducing model size while maintaining accuracy.

- Distillation: Creates a smaller "student" model that learns from a larger "teacher" model, achieving similar accuracy at lower inference costs.

Operational Optimization Strategies

- Caching: Storing frequent inference results to avoid redundant computation.

- Batching: Grouping multiple inference requests together to maximize hardware utilization.

- Auto-scaling: Dynamically adjusting compute resources based on actual demand patterns.

- Hardware matching: Selecting the optimal hardware for specific model architectures.

Create Deployment Strategy Flowcharts with PageOn.ai

Planning your AI deployment strategy requires careful consideration of numerous factors. PageOn.ai's intuitive visual tools make it simple to create detailed flowcharts and decision trees that guide your inference optimization efforts. Whether you're comparing quantization strategies or mapping out a hybrid cloud-edge architecture, these visual tools help streamline the planning process.

Cloud Provider Comparison for AI Workloads

Choosing the right cloud provider for AI workloads involves evaluating a complex matrix of factors beyond simple hourly rates. Each major platform offers unique advantages, pricing models, and specialized services that can significantly impact both costs and capabilities.

Cost Comparison Across Major Platforms

The raw cost per hour varies significantly based on commitment level and specific instance configurations. Long-term commitments can reduce costs by 60-70% compared to on-demand pricing, making them attractive for stable, predictable AI workloads.

Understanding Hidden Costs and Pricing Models

| Cost Category | Description | Impact on Total Cost |

|---|---|---|

| Data Transfer | Costs for moving data in and out of the cloud environment | Can add 10-20% to total cost for data-intensive applications |

| Storage | Costs for persistent storage of models, datasets, and results | Often overlooked; can be substantial for large datasets |

| Managed Services | Premium for using vendor-specific AI platforms vs. raw compute | Typically adds 15-30% but reduces engineering overhead |

| Support Costs | Enterprise support plans required for mission-critical AI | Can add 10% or more to the monthly bill |

| Idle Resources | Paying for compute resources that aren't fully utilized | Often accounts for 30-45% of cloud spending |

Beyond the advertised instance prices, these hidden costs can significantly impact the total expense of cloud-based AI deployments. Comprehensive cost analysis must include all these components for accurate budgeting.

Platform-Specific Optimization Strategies

flowchart TD

subgraph AWS["AWS Optimization"]

A1[Use Spot Instances] --> A2[60-90% savings]

A3[Reserved Instances] --> A4[40-60% savings]

A5[Savings Plans] --> A6[Flexible commitment]

end

subgraph GCP["Google Cloud Optimization"]

G1[Preemptible VMs] --> G2[70-80% savings]

G3[Sustained Use Discounts] --> G4[Automatic discounts]

G5[Custom Machine Types] --> G6[Right-size resources]

end

subgraph Azure["Azure Optimization"]

M1[Azure Reserved VM] --> M2[Up to 72% savings]

M3[Low Priority VMs] --> M4[60-80% savings]

M5[Azure Hybrid Benefit] --> M6[License savings]

end

classDef awsStyle fill:#fff,stroke:#FF9900,stroke-width:2px,color:#333;

classDef gcpStyle fill:#fff,stroke:#4285F4,stroke-width:2px,color:#333;

classDef azureStyle fill:#fff,stroke:#0078D4,stroke-width:2px,color:#333;

class AWS,A1,A2,A3,A4,A5,A6 awsStyle;

class GCP,G1,G2,G3,G4,G5,G6 gcpStyle;

class Azure,M1,M2,M3,M4,M5,M6 azureStyle;

Each cloud provider offers unique cost-saving mechanisms that can be leveraged for AI workloads. Understanding and implementing these platform-specific strategies can yield substantial savings beyond the basic instance selection.

Generate Custom Cost Comparisons with PageOn.ai

Making the right cloud provider selection requires detailed cost analysis tailored to your specific AI workloads. PageOn.ai's visualization tools allow you to create comprehensive, customized comparison charts that account for your unique usage patterns, discount eligibility, and specific service requirements. These visual aids are invaluable for making data-driven decisions about where to deploy your AI models most cost-effectively.

Building a Cost-Efficient AI Infrastructure

Creating a cost-efficient AI infrastructure requires balancing immediate budget constraints with long-term scalability needs. This section explores the key decision frameworks and best practices for developing sustainable AI computing environments.

Decision Framework: In-House vs. Cloud Computing

On-Premise Advantages

- Lower long-term costs for consistent, high-utilization workloads

- Complete control over hardware selection and optimization

- No data egress fees or bandwidth limitations

- Potential for higher security and compliance control

- Fixed costs that don't fluctuate with usage

Cloud Advantages

- No upfront capital expenditure

- Rapid scaling for variable or growing workloads

- Access to latest hardware without upgrade costs

- Reduced maintenance and operational overhead

- Pay-as-you-go pricing aligns costs with actual usage

- Geographical distribution and redundancy

Scaling Strategies for Growing Compute Needs

flowchart TD

Start[New AI Project] --> Prototype[Prototype Phase]

Prototype --> ProtoCompute[Cloud Compute\nSmall-Scale Testing]

Prototype --> Validation[Validation Phase]

Validation --> ValidationComp[Hybrid Approach\nCloud for peaks\nBasic on-prem]

Validation --> Production[Production Phase]

Production --> EvaluateWorkload{Evaluate Workload\nCharacteristics}

EvaluateWorkload -->|Consistent & Predictable| OnPrem[On-Premise\nPrimary Infrastructure]

EvaluateWorkload -->|Variable & Scaling| Cloud[Cloud-First\nStrategy]

EvaluateWorkload -->|Mixed Requirements| Hybrid[Hybrid Infrastructure]

OnPrem --> OnPremStrategy[Cost Strategy:\n- Bulk hardware purchase\n- 3-5 year amortization\n- Cloud backup]

Cloud --> CloudStrategy[Cost Strategy:\n- Reserved instances\n- Spot/Preemptible VMs\n- Auto-scaling policies]

Hybrid --> HybridStrategy[Cost Strategy:\n- Baseline on-premise\n- Cloud bursting\n- Workload optimization]

class Start,Prototype,ProtoCompute,Validation,ValidationComp,Production,EvaluateWorkload,OnPrem,Cloud,Hybrid,OnPremStrategy,CloudStrategy,HybridStrategy nodeStyle;

classDef nodeStyle fill:#fff,stroke:#FF8000,stroke-width:2px,color:#333;

The optimal infrastructure evolves with your AI projects. Starting with cloud resources for flexibility during experimentation, then potentially shifting to hybrid or on-premise solutions as workloads stabilize and scale can provide the best balance of cost-efficiency and capability.

Total Cost of Ownership Analysis

A comprehensive TCO analysis must account for all direct and indirect costs associated with AI infrastructure. While cloud solutions eliminate many upfront and maintenance costs, the cumulative expenses over time may exceed on-premise solutions for stable, high-utilization workloads. The breakeven point typically occurs between 18-36 months depending on utilization rates and specific requirements.

Creating Infrastructure Planning Documents with PageOn.ai

When planning your AI infrastructure, PageOn.ai's conversational creation features help you develop comprehensive planning documents with embedded visualizations. Whether you're creating TCO comparisons, scaling roadmaps, or decision frameworks, PageOn.ai makes it simple to produce professional-quality documentation that guides your infrastructure strategy. The platform's intuitive tools allow technical and non-technical stakeholders alike to contribute to infrastructure planning discussions.

Future Trends in AI Compute Economics

The economics of AI compute is rapidly evolving, with emerging technologies and methodologies promising to reduce costs and increase accessibility. Understanding these trends is crucial for long-term planning and strategic decision-making.

Emerging Technologies Reducing Computational Demands

Efficient Model Architectures

New architectural approaches like mixture-of-experts (MoE), sparse models, and retrieval-augmented generation (RAG) significantly reduce computational requirements while maintaining or improving capabilities.

Specialized Hardware

Custom AI accelerators, neuromorphic computing, and next-generation TPUs/NPUs are being developed specifically to address AI workloads with order-of-magnitude improvements in computing efficiency.

Software Optimizations

Advancements in compilers, quantization techniques, and neural architecture search are making existing hardware more efficient for AI tasks, extending the useful life of current investments.

Cost Curve Predictions (2023-2028)

Industry projections suggest a rapid decline in both training and inference costs, with particularly dramatic efficiency gains in inference. Hardware performance per dollar is expected to improve by 35-40% annually, following a trend similar to historical improvements in general computing performance.

Sustainability and Carbon Footprint Considerations

The environmental impact of AI compute is becoming an increasingly important consideration. While training large models creates significant one-time emissions, the cumulative impact of inference at scale often exceeds training emissions over time. Sustainable AI practices, including the use of renewable energy sources for data centers and more efficient algorithms, are becoming competitive advantages as organizations face increasing pressure to reduce their carbon footprints.

Creating Future Scenario Visualizations with PageOn.ai

Planning for future AI compute needs requires visualizing complex trends and potential scenarios. PageOn.ai's advanced visualization tools make it easy to create engaging future-focused visuals that help stakeholders understand emerging trends and make forward-looking decisions. Whether you're explaining the impact of new hardware developments or projecting cost curves for budgeting purposes, these visualizations transform abstract concepts into actionable insights.

Practical Guide: Estimating Your AI Project Costs

Translating the concepts covered throughout this guide into practical cost estimates for your specific AI projects requires a structured approach. This section provides frameworks and tools to help you develop accurate budgets and ROI assessments.

Step-by-Step Calculation Framework

flowchart TD

Start[Start Cost Estimation] --> ModelType{What type of\nAI model?}

ModelType -->|NLP/Text| TextModel[Text-Based Model]

ModelType -->|Vision| VisionModel[Vision-Based Model]

ModelType -->|Multi-modal| MultiModal[Multi-modal Model]

TextModel --> TextSize{Model Size?}

VisionModel --> VisionSize{Model Size?}

MultiModal --> MultiSize{Model Size?}

TextSize -->|Small <1B params| TextSmall[Estimate:\n$2K-$10K Training\n$50-$200/mo Inference]

TextSize -->|Medium 1B-10B| TextMed[Estimate:\n$10K-$100K Training\n$500-$5K/mo Inference]

TextSize -->|Large >10B| TextLarge[Estimate:\n$100K-$1M+ Training\n$5K-$50K+/mo Inference]

VisionSize -->|Small| VisionSmall[Estimate:\n$5K-$20K Training\n$100-$500/mo Inference]

VisionSize -->|Medium| VisionMed[Estimate:\n$20K-$200K Training\n$1K-$10K/mo Inference]

VisionSize -->|Large| VisionLarge[Estimate:\n$200K-$2M+ Training\n$10K-$100K+/mo Inference]

MultiSize -->|Small| MultiSmall[Estimate:\n$10K-$50K Training\n$200-$1K/mo Inference]

MultiSize -->|Medium| MultiMed[Estimate:\n$50K-$500K Training\n$2K-$20K/mo Inference]

MultiSize -->|Large| MultiLarge[Estimate:\n$500K-$5M+ Training\n$20K-$200K+/mo Inference]

TextSmall & TextMed & TextLarge & VisionSmall & VisionMed & VisionLarge & MultiSmall & MultiMed & MultiLarge --> Adjust[Adjust Based On]

Adjust --> DataSize[Dataset Size]

Adjust --> TrainingDuration[Training Duration]

Adjust --> UserScale[User Scale]

Adjust --> RegionCosts[Regional Cost Differences]

Adjust --> Optimization[Optimization Techniques]

class Start,ModelType,TextModel,VisionModel,MultiModal,TextSize,VisionSize,MultiSize,TextSmall,TextMed,TextLarge,VisionSmall,VisionMed,VisionLarge,MultiSmall,MultiMed,MultiLarge,Adjust,DataSize,TrainingDuration,UserScale,RegionCosts,Optimization nodeStyle;

classDef nodeStyle fill:#fff,stroke:#FF8000,stroke-width:2px,color:#333;

This framework provides preliminary cost ranges based on model type and size. The actual costs will vary significantly based on specific implementation details, optimization techniques employed, and your choice of infrastructure.

ROI Assessment Model for AI Investments

ROI assessment for AI projects requires tracking both costs (initial and ongoing) and benefits (direct revenue, cost savings, productivity improvements). In this example, the breakeven point occurs around month 12, with significant positive returns afterward. Most enterprise AI projects target a 1-2 year payback period, with some AI automation tools achieving ROI in as little as 3-6 months.

Cost Estimation Variables for Common AI Applications

| Application Type | Key Cost Drivers | Typical Cost Range | ROI Measurement Metrics |

|---|---|---|---|

| Conversational AI / Chatbots | Message volume, response complexity, integration needs | $1K-$10K/mo | Customer service cost reduction, conversion rates |

| Document Processing / OCR | Document volume, complexity, accuracy requirements | $2K-$15K/mo | FTE savings, processing time reduction |

| Recommendation Systems | User base size, catalog size, update frequency | $5K-$50K/mo | Uplift in conversion, average order value |

| Computer Vision / Image Analysis | Image volume, resolution, real-time requirements | $3K-$30K/mo | Error reduction, inspection time savings |

| Educational AI Tools | User count, content complexity, personalization level | $1K-$8K/mo | Learning outcome improvements, completion rates for free AI tools for students |

Different AI applications have distinct cost profiles and ROI metrics. This table provides approximate monthly operational costs (excluding initial development) and suggests metrics to track for measuring return on investment.

Creating Cost Calculators with PageOn.ai

Estimating AI project costs with precision requires considering numerous variables specific to your use case. PageOn.ai's AI Blocks feature allows you to create custom visual calculators that account for your unique requirements. These interactive tools make it easy to explore different scenarios, adjust parameters, and communicate cost projections to stakeholders. Whether you're planning an initial AI deployment or scaling an existing system, these visual calculators provide valuable insight into the financial implications of your decisions.

Transform Your AI Cost Visualizations with PageOn.ai

Creating clear, compelling visualizations of complex AI compute requirements and costs is essential for effective planning and stakeholder communication. PageOn.ai's intuitive tools make it simple to transform technical concepts into accessible visual expressions.

Start Creating with PageOn.ai TodayFinal Thoughts

Understanding the economic aspects of AI compute is becoming increasingly crucial as artificial intelligence continues to transform industries. The ability to accurately estimate, plan for, and optimize these costs can be the difference between AI projects that deliver tremendous value and those that drain resources without adequate returns.

As we've explored throughout this guide, the landscape is complex but navigable with the right frameworks and tools. From evaluating cloud providers to building cost-efficient infrastructures and estimating project costs, a systematic approach can help organizations make informed decisions.

The future of AI compute economics looks promising, with ongoing innovations in hardware, software, and methodologies continuously driving down costs while increasing capabilities. Organizations that stay informed about these trends and leverage visual tools like Bing AI search and PageOn.ai to communicate complex concepts will be well-positioned to maximize the value of their AI investments.

By converting abstract computational concepts into clear visual expressions, technical and business stakeholders can collaborate more effectively to build sustainable, cost-efficient AI solutions that deliver tangible business value.

You Might Also Like

How to Design Science Lesson Plans That Captivate Students

Create science lesson plans that captivate students with hands-on activities, clear objectives, and real-world applications to foster curiosity and critical thinking.

How to Write a Scientific Review Article Step by Step

Learn how to write a review article in science step by step. Define research questions, synthesize findings, and structure your article for clarity and impact.

How to Write a Self-Performance Review with Practical Examples

Learn how to write a self-performance review with examples and tips. Use an employee performance review work self evaluation sample essay to guide your process.

How to Write a Spec Sheet Like a Pro? [+Templates]

Learn how to create a professional spec sheet with key components, step-by-step guidance, and free templates to ensure clarity and accuracy.