Building Your Local AI Powerhouse

Essential Open Source Tools for Development and Deployment

I've been exploring the world of local AI development for years now, and I'm excited to share what I've learned about running powerful AI models on your own hardware. The shift from cloud-only AI to locally-run models represents one of the most significant transformations in the AI landscape, offering unprecedented control, privacy, and often cost savings for developers and organizations alike.

In this guide, I'll walk you through the essential open source tools that form the backbone of local AI development and deployment. Whether you're looking to experiment with AI agent projects from GitHub or build production-ready applications, understanding this ecosystem is crucial for success.

Let's dive into the tools and techniques that will help you build your own local AI powerhouse, with special attention to how visual tools like PageOn.ai can help clarify complex AI architectures and workflows.

The Local AI Revolution: Understanding the Landscape

When I first started working with AI models, running them locally was almost unthinkable due to their enormous resource requirements. Today, that's changed dramatically. The local AI revolution has been driven by more efficient models, better hardware, and a growing ecosystem of tools designed specifically for local deployment.

Cloud vs. Local: Making the Right Choice

The decision between cloud-based and local AI development involves several key factors. I've found that local AI development offers several distinct advantages:

- Complete control over your AI infrastructure and models

- Enhanced privacy since sensitive data never leaves your environment

- No API costs or usage limitations once your system is set up

- Offline capability allowing AI functionality without internet connectivity

- Customization freedom to modify models for specific use cases

However, local AI development also presents unique challenges:

flowchart TB

A[Local AI Challenges] --> B[Hardware Requirements]

A --> C[Technical Complexity]

A --> D[Maintenance Burden]

A --> E[Limited Scalability]

B --> B1[GPU costs]

B --> B2[Memory constraints]

C --> C1[Model optimization]

C --> C2[Environment setup]

D --> D1[Updates & patches]

D --> D2[Performance tuning]

E --> E1[Fixed resource ceiling]

E --> E2[Deployment complexity]

When selecting open source AI tools for your project, I recommend considering these key factors:

- Hardware compatibility - Will it run efficiently on your available hardware?

- Community support - Is there an active community for troubleshooting?

- Documentation quality - Are setup and usage instructions clear?

- Integration capabilities - Will it work with your existing tech stack?

- Development pace - Is the project actively maintained?

Throughout my journey with local AI development, I've found that visual AI tools like PageOn.ai have been invaluable for mapping out complex workflows and architectures. The ability to quickly create visual representations of AI systems helps tremendously with both planning and documentation.

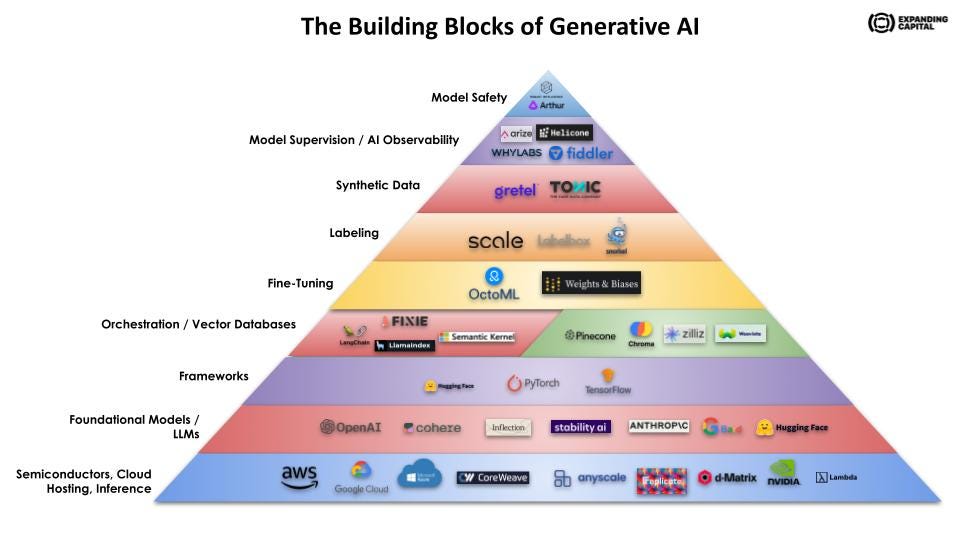

Foundation Models: The Building Blocks of Local AI

Foundation models serve as the core building blocks for local AI development. These pre-trained models can be run and even fine-tuned locally, giving you powerful AI capabilities without relying on external APIs. I've worked with many of these models and can help you understand the landscape.

Popular Open Source Language Models

| Model | Size Options | Min. Hardware | Best For |
|---|---|---|---|
| Llama 2/3 | 7B, 13B, 70B (Llama 2); 8B, 70B (Llama 3) | 16GB RAM (7B/8B), GPU recommended | General-purpose text generation, coding |
| Mistral | 7B, 8x7B (Mixtral) | 16GB RAM, GPU for Mixtral | Efficient reasoning, instruction following |
| Falcon | 7B, 40B | 16GB RAM (7B), GPU recommended | Multilingual capabilities |
| Phi-2 | 2.7B | 8GB RAM | Lightweight applications, low resources |
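To make the hardware column less of a black box, here is a rough back-of-the-envelope sketch of how much memory the weights alone need at different precisions. It assumes weight size is simply parameter count times bytes per parameter; the function name and the precision table are my own for illustration, and the estimate ignores the KV cache, activations, and runtime overhead, so treat it as a lower bound.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Ignores KV cache, activations, and runtime overhead (a lower bound).

BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floats
    "fp16": 2.0,  # 16-bit floats
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Return the approximate weight size in GB (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 1e9


for size in (2.7, 7, 13, 70):
    row = ", ".join(
        f"{precision}: {weight_memory_gb(size, precision):.1f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{size}B -> {row}")
```

By this estimate a 7B model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit, which is why quantized versions are the usual choice on machines with 16GB of RAM.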

Multimodal Options

Beyond text-only models, the open source community has developed impressive multimodal models that can process images, text, and sometimes even audio:

Text-to-Image

- Stable Diffusion (multiple versions)

- ControlNet extensions

- Kandinsky

- Playground v2

Vision + Language

- LLaVA (Large Language and Vision Assistant)

- CogVLM

- MiniGPT-4

- BakLLaVA

When selecting a foundation model, I always consider these technical factors:

Working with these foundation models can be complex, especially when trying to understand their architecture and capabilities. I've found that using PageOn.ai's Deep Search functionality helps me quickly find and compare model architectures, making it easier to select the right one for my specific use case. The visual representation of model components and relationships clarifies what can otherwise be extremely abstract concepts.

Essential Local Execution Environments

Once you've selected a foundation model, you need an environment to run it locally. These execution environments abstract away much of the complexity involved in setting up and running AI models on your local hardware.

Ollama: My Go-To Solution

Ollama has become one of the most popular tools for running large language models locally. It provides a simple interface for downloading, running, and managing models on your personal computer.

flowchart TD

A[Ollama Setup] --> B[Install Ollama]

B --> C[Pull Model]

C --> D[Run Model]

D --> E[Interact via API/CLI]

B --> B1[macOS, Linux, Windows]

B1 --> B2[GPU support where available]

C --> C1[ollama pull mistral]

C --> C2[ollama pull llama2]

C --> C3[ollama pull llava]

D --> D1[ollama run mistral]

D --> D2[ollama run llama2]

E --> E1[CLI interface]

E --> E2[REST API]

E --> E3[Integration with apps]

Setting up Ollama is remarkably simple:

curl -fsSL https://ollama.com/install.sh | sh

ollama pull mistral

ollama run mistral
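Once a model is pulled, you can also talk to it from code through Ollama's REST API. The following is a minimal sketch, assuming the server is listening on its default local address and that you have already pulled `mistral`; the helper names `build_generate_request` and `generate` are mine, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, `print(generate("mistral", "Why run models locally?"))` should print a completion. Setting `stream` to false returns a single JSON object rather than a stream of partial responses, which keeps the parsing simple.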

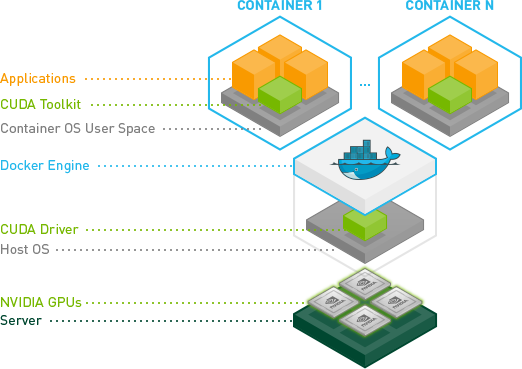

Docker-Based Solutions

Docker provides another excellent approach for running AI models locally, with the added benefit of consistent environments across different systems. Docker-based solutions offer several advantages:

- Environment isolation - Dependencies don't conflict with your system

- Reproducibility - Same setup works across different machines

- Easy versioning - Switch between different model versions

- Simplified deployment - Move from development to production seamlessly

GPU Acceleration Tools

To get the most performance from your local AI setup, GPU acceleration is essential. These tools help optimize model performance on consumer hardware:

CUDA (NVIDIA)

Essential for NVIDIA GPUs, provides direct access to GPU computing capabilities.

ROCm (AMD)

Open platform for AMD GPU acceleration, though with more limited model support.

MPS (Apple)

Metal Performance Shaders for accelerating models on Apple Silicon.
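In practice, most of my code only needs to know which of these backends is available. Here is a small sketch of that check, assuming PyTorch as the framework; the function name `pick_device` is my own, and the code falls back to the CPU if PyTorch is not installed. To my knowledge, ROCm builds of PyTorch report through the same `torch.cuda` interface, but verify that on your own AMD setup.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what PyTorch can see."""
    try:
        import torch  # optional dependency; fall back to CPU if missing
    except ImportError:
        return "cpu"

    # NVIDIA GPUs (and, as far as I know, ROCm builds) report through torch.cuda.
    if torch.cuda.is_available():
        return "cuda"

    # Apple Silicon GPUs are exposed through the MPS backend.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"

    return "cpu"


print(f"Selected device: {pick_device()}")
```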

When working with complex model architectures, I've found that visualizing them can significantly improve understanding. PageOn.ai's AI Blocks feature allows me to create clear, modular diagrams of model components and their relationships, making it much easier to conceptualize how different parts of the system interact.

Development Frameworks and Libraries

Once you have your foundation models running locally, you'll need frameworks and libraries to build applications around them. I've worked with many of these tools and can help you understand which ones might be right for your project.

Code-First Solutions

flowchart TB

A[Code-First Development] --> B[Hugging Face Transformers]

A --> C[TensorFlow/PyTorch]

A --> D[LangChain]

A --> E[LlamaIndex]

B --> B1[Model loading]

B --> B2[Tokenization]

B --> B3[Fine-tuning]

C --> C1[Neural network building]

C --> C2[Training pipelines]

C --> C3[Model optimization]

D --> D1[Chain creation]

D --> D2[Agent building]

D --> D3[Tool integration]

E --> E1[Data connectors]

E --> E2[Query engines]

E --> E3[Knowledge retrieval]

Hugging Face Transformers has become the de facto standard for working with transformer-based models. It provides a unified API for thousands of pre-trained models and makes it easy to:

- Load and run models in just a few lines of code

- Fine-tune models on your own data

- Convert models between different formats

- Optimize models for inference performance

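from transformers import pipeline

# Downloads the model weights on first use, then loads them for local inference
generator = pipeline('text-generation', model='mistralai/Mistral-7B-v0.1')

result = generator(
    "The key benefit of local AI development is",
    max_length=100,  # cap on total tokens (prompt plus completion)
    do_sample=True,  # sample instead of decoding greedily
)

print(result[0]['generated_text'])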

For connecting models with external data sources, LangChain and LlamaIndex have become essential tools in my development stack:

LangChain

Provides a framework for developing applications powered by language models, with a focus on composability and chains of operations.

- Chain multiple operations together

- Create autonomous agents

- Integrate with various tools and APIs

- Implement memory and state management
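The idea of chaining is easier to see in plain Python than in any one framework's API. The sketch below is not LangChain code: it is a library-free illustration in which `fake_llm` is a hypothetical stand-in for a local model call and `make_chain` is my own helper that feeds each step's output into the next.

```python
from typing import Callable, List

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a local model call; it just echoes the prompt."""
    return f"[model output for: {prompt}]"


def make_chain(steps: List[Step]) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


summarize = make_chain([
    str.strip,                                           # clean the raw input
    lambda text: f"Summarize in one sentence: {text}",   # prompt template
    fake_llm,                                            # model call (stubbed)
])

print(summarize("  Local models keep data on your own machine.  "))
```

Frameworks like LangChain add a lot on top of this (agents, tools, memory), but the core composition idea is the same.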

LlamaIndex

Specializes in connecting LLMs with external data sources, enabling knowledge retrieval and question answering over custom data.

- Index documents and data sources

- Create knowledge graphs from text

- Build powerful query engines

- Implement RAG (Retrieval-Augmented Generation)

Low-Code and Visual Options

Not all AI development requires extensive coding. Several tools provide visual interfaces for working with AI models:

Jan Framework stands out as a cross-platform, local-first AI application framework that simplifies the process of building AI-powered applications. It provides:

- A visual interface for model management

- Built-in chat interfaces

- Local model execution

- Extensions for various use cases

Similarly, Tune Studio offers a playground specifically designed for fine-tuning and deploying language models without writing code.

When working with these frameworks, I often use PageOn.ai to transform abstract AI concepts into clear visual workflows. This helps me plan my application architecture and communicate it effectively to team members who might not be familiar with all the technical details. The ability to create visual representations of complex AI workflows has been invaluable for both planning and documentation.

Data Management and Vector Databases

Effective data management is crucial for local AI development. Vector databases have become an essential component for storing and retrieving embeddings efficiently.

Vector Database Options

I've worked with several vector database solutions, each with their own strengths:

| Database | Best For | Deployment | Key Features |
|---|---|---|---|
| Chroma | Simple projects, getting started | Local, embedded | Python-first, easy setup, in-memory option |
| FAISS | Performance-critical applications | Local, embedded | Extremely fast, efficient memory usage |
| Pinecone | Production applications | Cloud, managed | Scalable, managed service, rich feature set |
| Milvus | Enterprise applications | Self-hosted, container | Highly scalable, cloud-native architecture |
| Qdrant | Complex filtering needs | Local or self-hosted | Advanced filtering, payload storage |

Integration Patterns

flowchart TD

A[Document/Data] --> B[Text Chunking]

B --> C[Embedding Generation]

C --> D[Vector Database Storage]

D --> E[Similarity Search]

E --> F[Retrieval]

F --> G[Context Augmentation]

G --> H[LLM Response]

subgraph "RAG Pattern"

B

C

D

E

F

G

end

The Retrieval-Augmented Generation (RAG) pattern has become a standard approach for connecting local models with your own data. This pattern involves:

- Breaking documents into manageable chunks

- Converting text chunks into vector embeddings

- Storing these embeddings in a vector database

- Performing similarity searches when a query comes in

- Retrieving relevant context to augment the LLM's knowledge

- Generating responses based on both the model's knowledge and the retrieved context
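The steps above can be sketched end to end with nothing but the standard library. This is a toy under stated assumptions: the bag-of-words `embed` function stands in for a real embedding model, a Python list stands in for the vector database, and all function names are mine. The final prompt is what you would hand to your local LLM.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_text(text: str, max_words: int = 12) -> List[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Augment the question with the retrieved context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


documents = [
    "Ollama runs large language models on a personal computer.",
    "Chroma stores vector embeddings for similarity search.",
    "Stable Diffusion generates images from text prompts.",
]
chunks = [chunk for doc in documents for chunk in chunk_text(doc)]

question = "Which tool stores embeddings?"
print(build_prompt(question, retrieve(question, chunks, k=1)))
```

In a real system you would swap `embed` for a local embedding model and the list for one of the vector databases in the table above; the control flow stays the same.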

Working with vector databases and embeddings can be conceptually challenging. I've found that using PageOn.ai to visualize complex data relationships and embeddings helps tremendously in understanding how these systems work. Creating visual representations of vector spaces and similarity search processes makes these abstract concepts much more concrete and easier to work with.

Deployment and Production Considerations

Moving from development to production with local AI systems requires careful planning and the right tools. I've deployed numerous AI applications and have learned what works best for different scenarios.

Deployment Platforms

Several platforms have emerged to simplify the deployment of AI models to production:

PoplarML

Enables deployment of production-ready, scalable ML systems with minimal engineering effort.

Datature

All-in-one platform for building and deploying vision AI solutions.

BentoML

Framework for serving, managing, and deploying machine learning models.

Monitoring and Observability

Monitoring your deployed AI systems is crucial for ensuring reliability and performance:

Langfuse has emerged as a popular open-source monitoring platform specifically designed for LLM applications. It helps teams:

- Track model performance and usage

- Debug issues in complex AI pipelines

- Analyze user interactions

- Collaborate on model improvements
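To show what tracking performance and usage means at the smallest scale, here is a library-free sketch. It is not the Langfuse API: `UsageTracker` and `CallRecord` are names I made up, and the wrapper only records character counts and wall-clock latency for each call to whatever model function you pass in.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallRecord:
    prompt_chars: int
    response_chars: int
    latency_s: float


@dataclass
class UsageTracker:
    records: List[CallRecord] = field(default_factory=list)

    def wrap(self, model_fn: Callable[[str], str]) -> Callable[[str], str]:
        """Return a version of model_fn that logs one record per call."""
        def tracked(prompt: str) -> str:
            start = time.perf_counter()
            response = model_fn(prompt)
            elapsed = time.perf_counter() - start
            self.records.append(CallRecord(len(prompt), len(response), elapsed))
            return response
        return tracked

    def average_latency(self) -> float:
        """Mean latency in seconds across all recorded calls."""
        if not self.records:
            return 0.0
        return sum(r.latency_s for r in self.records) / len(self.records)


tracker = UsageTracker()
echo_model = tracker.wrap(lambda prompt: prompt[::-1])  # hypothetical stub model
echo_model("hello")
print(len(tracker.records), f"{tracker.average_latency():.6f}s")
```

A dedicated platform adds persistence, tracing across pipeline steps, and dashboards, but the underlying data looks much like these records.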

Scaling Strategies

Scaling local AI systems for production use presents unique challenges. Some effective strategies include:

flowchart TD

A[Scaling Strategies] --> B[Horizontal Scaling]

A --> C[Model Optimization]

A --> D[Caching]

A --> E[Load Balancing]

B --> B1[Multiple model instances]

B --> B2[Request distribution]

C --> C1[Quantization]

C --> C2[Pruning]

C --> C3[Distillation]

D --> D1[Response caching]

D --> D2[Embedding caching]

E --> E1[Round-robin]

E --> E2[Least connections]

E --> E3[Resource-based]
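Two of these strategies, response caching and round-robin load balancing, fit in a few lines. The sketch below is illustrative only: the class names and backend URLs are hypothetical, and the cache assumes that repeating the same prompt should return the same answer, which is only sensible when generation is deterministic or when you are happy to reuse an earlier sample.

```python
import hashlib
import itertools
from typing import Callable, Dict, List


class ResponseCache:
    """Cache completions keyed by a hash of (model, prompt)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0

    def get_or_compute(self, model: str, prompt: str,
                       compute: Callable[[str], str]) -> str:
        key = hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        result = compute(prompt)  # only runs on a cache miss
        self._store[key] = result
        return result


class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends: List[str]) -> None:
        self._cycle = itertools.cycle(backends)

    def next_backend(self) -> str:
        return next(self._cycle)


# Hypothetical model instances running on two local ports.
balancer = RoundRobinBalancer(["http://localhost:8001", "http://localhost:8002"])
cache = ResponseCache()

for prompt in ["hello", "hello", "goodbye"]:
    backend = balancer.next_backend()
    answer = cache.get_or_compute("mistral", prompt, lambda p: f"reply to {p}")
    print(backend, answer)

print("cache hits:", cache.hits)
```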

Documenting deployment architectures and processes is essential for team collaboration and system maintenance. I've found that PageOn.ai's conversation-based approach to creating visual deployment documentation makes it much easier to capture and share complex deployment workflows. Being able to transform technical deployment details into clear visual representations has helped my team maintain consistent deployment practices across projects.

Specialized Tools for Specific AI Applications

Beyond general-purpose frameworks, the open source community has developed specialized tools for specific AI applications. I've explored many of these and can help you find the right tool for your particular needs.

Text Generation and Chat Interfaces

If you're looking to build chat applications similar to ChatGPT but running locally, several excellent options are available:

Oobabooga Text Generation WebUI

A comprehensive web interface for running text generation models locally with a chat-like interface.

- Supports multiple model backends

- Character templates and personas

- Advanced generation parameters

- Training and fine-tuning capabilities

Jan.AI

A cross-platform desktop application for running AI models locally with a clean, user-friendly interface.

- Built-in model marketplace

- Chat, document Q&A, and image generation

- Extensible plugin system

- Local-first, privacy-focused design

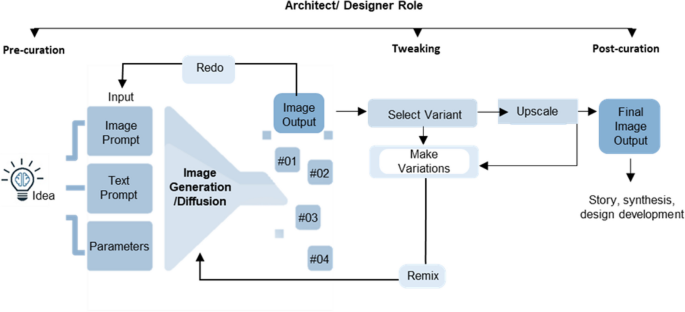

Image Generation

The field of open source AI image generators has exploded in recent years, with several powerful options now available to run locally:

Popular options include:

- Stable Diffusion WebUI - The most feature-rich interface for running Stable Diffusion locally

- ComfyUI - Node-based interface for creating complex image generation workflows

- Fooocus - Simplified interface focused on ease of use

- InvokeAI - Professional-grade interface with advanced features

These tools can be particularly useful for designing logos and other visual assets without relying on cloud services.

Code Assistance Tools

Several open source tools now provide code completion and assistance capabilities similar to GitHub Copilot but running entirely on your local machine.

Working with these specialized tools often involves complex workflows and integration patterns. I've found that using PageOn.ai to prototype and visualize these workflows helps tremendously in planning and implementing AI applications. Being able to create visual representations of how different components interact makes it much easier to design effective systems.

Building a Complete Local AI Stack: Practical Architectures

Putting all these components together into a cohesive local AI stack requires careful planning. I've built several complete local AI systems and can share some reference architectures that work well for different use cases.

Reference Architecture: Personal Assistant

flowchart TD

A[User Interface] --> B[API Layer]

B --> C[Local LLM]

B --> D[Vector Database]

B --> E[Tool Integrations]

C --> C1[Ollama]

C1 --> C2[Mistral 7B]

D --> D1[Chroma]

D1 --> D2[Personal Data]

D1 --> D3[Knowledge Base]

E --> E1[Calendar]

E --> E2[Notes]

E --> E3[Email]

E --> E4[Web Search]

F[LangChain] --> B

G[Local Embedding Model] --> D

This architecture provides a complete personal assistant that runs entirely on your local machine, with access to your personal data while maintaining privacy.

Reference Architecture: Creative Studio

This setup combines text, image, and potentially audio generation capabilities to create a complete creative studio running locally.

Hardware Considerations

| Use Case | Minimum Specs | Recommended Specs | Notes |
|---|---|---|---|
| Basic Text Generation | 16GB RAM, Intel/AMD CPU | 32GB RAM, NVIDIA GPU (8GB+) | Can run small models (7B) on CPU |
| Image Generation | 16GB RAM, NVIDIA GPU (6GB+) | 32GB RAM, NVIDIA GPU (12GB+) | GPU is essential for reasonable speed |
| Full Creative Suite | 32GB RAM, NVIDIA GPU (12GB+) | 64GB RAM, NVIDIA GPU (24GB+) | Multiple models loaded simultaneously |
| Production Server | 64GB RAM, NVIDIA GPU (24GB+) | 128GB+ RAM, Multiple GPUs | Consider cooling and power requirements |

Integration Patterns

When building a complete local AI stack, these integration patterns have proven effective:

- API-First Design - Expose all AI capabilities through consistent REST APIs

- Event-Driven Architecture - Use message queues for asynchronous processing of resource-intensive tasks

- Microservices Approach - Deploy different models as separate services that can be scaled independently

- Unified Authentication - Implement a single authentication system across all components

- Centralized Logging - Collect logs from all components in a single system for easier debugging
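The event-driven pattern from the list above can be sketched with a standard-library queue and a worker thread. This is a single-process stand-in for a real message queue: `fake_generate` is a hypothetical placeholder for a slow model call, and `process_prompts` is my own helper name.

```python
import queue
import threading
from typing import Dict, List


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a slow local model call."""
    return prompt.upper()


def process_prompts(prompts: List[str]) -> Dict[int, str]:
    """Queue prompts as jobs and let a background worker process them."""
    jobs: queue.Queue = queue.Queue()
    results: Dict[int, str] = {}

    def worker() -> None:
        while True:
            item = jobs.get()
            if item is None:  # sentinel: no more work
                jobs.task_done()
                break
            job_id, prompt = item
            results[job_id] = fake_generate(prompt)
            jobs.task_done()

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()

    for job_id, prompt in enumerate(prompts):
        jobs.put((job_id, prompt))  # producers return immediately

    jobs.join()     # wait until every queued job has been processed
    jobs.put(None)  # tell the worker to stop
    thread.join()
    return results


print(process_prompts(["summarize this", "translate that"]))
```

The point of the queue is that the caller is never blocked by the model itself; in production you would replace it with a proper message broker and run the workers as separate services.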

When planning these complex AI stacks, I've found PageOn.ai's structured visual approach invaluable. It allows me to create comprehensive diagrams of the entire system, making it easier to identify potential issues and optimize the architecture. Being able to visualize how all the components fit together has helped me build more reliable and efficient AI systems.

Community Resources and Continuous Learning

The open source AI ecosystem is evolving rapidly, making continuous learning essential. I've found these community resources invaluable for staying updated and solving problems.

Key GitHub Repositories

Model Repositories

- HuggingFace Transformers

- GGML/GGUF Model Collections

- Ollama Model Library

- TheBloke's Quantized Models

Tool Repositories

- LangChain

- LlamaIndex

- Stable Diffusion WebUI

- Awesome Local AI

Community Forums and Discussion Groups

These communities are excellent places to ask questions, share experiences, and learn from others who are working with local AI tools.

Documentation Strategies

Documenting your AI projects is crucial for long-term maintenance and knowledge sharing. I recommend:

- Architecture Diagrams - Visual representations of system components and their relationships

- Model Cards - Detailed documentation of model capabilities, limitations, and performance characteristics

- Setup Guides - Step-by-step instructions for recreating environments

- Performance Benchmarks - Records of system performance under different conditions

- Decision Logs - Documentation of key architectural and design decisions

For students and educators, many free AI tools can help with learning about AI development.

Creating shareable visual explanations of complex AI concepts is where PageOn.ai really shines. I've used it to create diagrams and visual guides that help others understand the systems I've built. These visual explanations have been particularly valuable when onboarding new team members or sharing knowledge with the broader community.


Conclusion: Embracing the Local AI Revolution

Throughout this guide, I've shared the essential open source tools that form the backbone of local AI development and deployment. From foundation models like Llama and Mistral to execution environments like Ollama, and from development frameworks to specialized applications, the open source AI ecosystem offers powerful tools for building AI systems that run entirely on your own hardware.

The benefits of local AI development—enhanced privacy, complete control, and freedom from API costs—make it an increasingly attractive option for developers, researchers, and organizations. While challenges remain, particularly around hardware requirements and technical complexity, the rapid evolution of tools and techniques is making local AI more accessible than ever.

As you embark on your local AI development journey, remember that visualization tools like PageOn.ai can be invaluable for planning, documenting, and communicating complex AI systems. The ability to transform abstract concepts into clear visual representations will help you build more effective AI applications and share your knowledge with others. The local AI revolution is just beginning, and with these tools at your disposal, you're well-equipped to be part of it.
