Language Diffusion Models: Transforming Text Generation Through Progressive Refinement

Discover how iterative refinement is revolutionizing how AI generates and enhances text

The Evolution of Language Generation Models

Language Diffusion Models (LDMs) offer a new approach to natural language generation. Traditional autoregressive models generate text left to right, one token at a time, and never revisit a token once it is emitted. Diffusion models instead start from a fully noised (masked) sequence and refine the whole text over several iterations. This iterative refinement is changing how AI systems produce high-quality text.

The evolution of text generation models from traditional approaches to iterative diffusion-based methods.

Traditional vs. diffusion-based language models: key differences in how these model families approach text generation.

The journey from traditional generation to diffusion-based approaches has been marked by several key milestones:

- 2020: Initial experiments applying diffusion concepts from image generation to text

- 2021: Development of discrete diffusion for language tokens

- 2022: Introduction of masked diffusion approaches for text

- 2025: Scaling to models with billions of parameters, such as LLaDA (8B), with performance rivaling traditional LLMs of similar size, followed by early multimodal extensions

The crossover of diffusion models from computer vision to natural language processing is a significant paradigm shift. Image models such as Stable Diffusion revolutionized visual generation, and applying similar principles to language has opened new frontiers in text generation. The main adaptation is that text consists of discrete tokens: instead of adding Gaussian noise to pixel values, language diffusion models typically corrupt text by masking or replacing tokens.

Core Mechanics of Language Diffusion Models

At their foundation, Language Diffusion Models operate on a principle of controlled corruption and systematic restoration. This process fundamentally differs from the typical autoregressive generation seen in traditional language models.

The Language Diffusion Process

How text gradually emerges through iterative denoising:

```mermaid
flowchart LR
    A[Noise/Masks] -->|Step 1| B[Partial Text]
    B -->|Step 2| C[Refined Text]
    C -->|Step 3| D[Further Refined]
    D -->|Step N| E[Final Output]
    style A fill:#f9d6ff
    style B fill:#ffe6cc
    style C fill:#d9f2e6
    style D fill:#cce6ff
    style E fill:#FF8000,color:white
```

The mathematical foundation of language diffusion models can be described through a series of transformations on the text representation space. The process involves:

- Forward Diffusion: A gradual process of adding noise (or applying masks) to an initial text

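- Learned Denoising: Training the model to reverse this process by predicting a less noisy state (in masked models, the original tokens at the masked positions)

- Sampling: Iteratively applying the denoising process to generate new text from pure noise (in masked models, a fully masked sequence)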
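For the masked (absorbing-state) variant used by models such as LLaDA, the three steps above can be written compactly. In this sketch, $x_0$ is a clean sequence of $L$ tokens, $x_t$ is its corrupted version at masking ratio $t \in [0, 1]$, $\mathrm{M}$ is the mask token, and $p_\theta$ is the model's predicted distribution. The notation is ours, chosen to match the LLaDA formulation as we understand it.

```latex
% Forward process: each token is masked independently with probability t
q\left(x_t^i \mid x_0^i\right) =
\begin{cases}
  1 - t, & x_t^i = x_0^i \\
  t,     & x_t^i = \mathrm{M}
\end{cases}
\qquad t \sim \mathcal{U}[0, 1]

% Training objective: recover the original token at every masked position, weighted by 1/t
\mathcal{L}(\theta) = -\,\mathbb{E}_{t,\, x_0,\, x_t}\!\left[
  \frac{1}{t} \sum_{i=1}^{L} \mathbf{1}\!\left[x_t^i = \mathrm{M}\right]
  \log p_\theta\!\left(x_0^i \mid x_t\right)
\right]
```

Sampling reverses the forward process: it starts from $x_1$, where every token is $\mathrm{M}$, and repeatedly predicts masked tokens while $t$ decreases toward 0. The $1/t$ weight is what separates this objective from ordinary masked language modeling; in the LLaDA paper it makes the loss an upper bound on the model's negative log-likelihood, so the model can be trained as a proper generative model.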

Models like LLaDA implement this with a simple random masking scheme. During pretraining, a masking ratio t is sampled from U[0,1] and each token is masked independently with probability t; during supervised fine-tuning (SFT), the prompt is left intact and only response tokens may be masked.
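The two masking regimes can be sketched in a few lines of Python. This is a minimal illustration, not LLaDA's code: the `MASK` string, whitespace tokenization, and the function names `mask_for_pretraining` and `mask_for_sft` are assumptions, and a real implementation works on batches of token ids.

```python
import random

MASK = "[MASK]"  # hypothetical mask token; real models reserve a token id for this


def mask_for_pretraining(tokens, rng):
    """Draw t ~ U[0, 1) and mask each token independently with probability t."""
    t = rng.random()
    noisy = [MASK if rng.random() < t else tok for tok in tokens]
    return noisy, t


def mask_for_sft(prompt, response, rng):
    """SFT variant: the prompt is never masked; only response tokens can be."""
    t = rng.random()
    noisy_response = [MASK if rng.random() < t else tok for tok in response]
    return list(prompt) + noisy_response, t


rng = random.Random(0)
noisy, t = mask_for_pretraining("the cat sat on the mat".split(), rng)
print(f"pretraining, t={t:.2f}:", " ".join(noisy))

noisy_pair, t = mask_for_sft("what did the cat do ?".split(), "it sat on the mat".split(), rng)
print(f"SFT, t={t:.2f}:", " ".join(noisy_pair))
```

During training, the loss would be computed only at positions holding `MASK`, with each example weighted by 1/t.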

Progressive text generation through the diffusion process, moving from masked tokens to complete text.

The masking strategies in Language Diffusion Models resemble masked language modeling (as in BERT), but they are used differently. BERT-style training masks a fixed fraction of tokens (about 15%) to learn representations; LDMs vary the masking ratio all the way from 0 to 1, so the model learns to reconstruct text even from a fully masked sequence. This turns masking into part of a generative process that supports more controlled, iterative refinement of text.

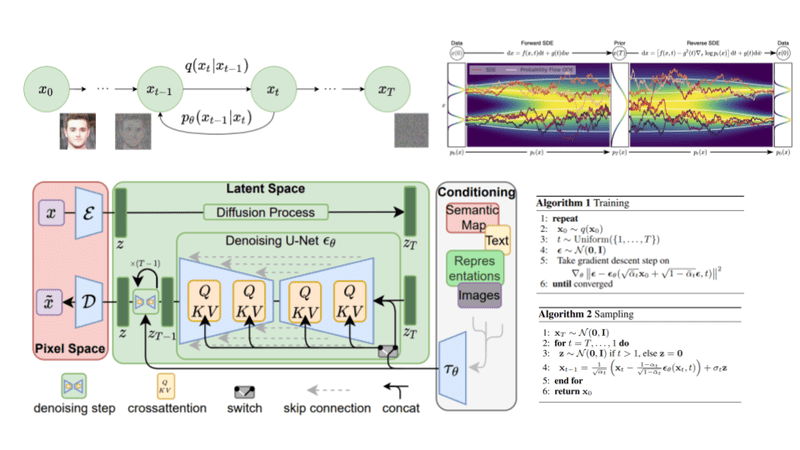

Architectural Elements Unique to Language Diffusion Models

The architecture of Language Diffusion Models introduces several distinctive elements that set them apart from traditional language models. These architectural choices enable the iterative refinement process that is central to their operation.

Architectural overview of a masked diffusion model for language generation.

Key Architectural Innovations

Masked Diffusion Approach

Unlike pixel-based diffusion in images, language diffusion operates on discrete tokens through sophisticated masking strategies that gradually reveal content.

Flexible Remasking

The ability to remask tokens during generation: at each step, low-confidence predictions can be masked again and re-predicted later, allowing more controlled refinement and multiple pathways to the final text.

Parallel Prediction

LDMs can predict all masked tokens simultaneously at each step, enabling more efficient refinement compared to token-by-token approaches.

Diffusion Scheduling

Noise schedules that control how many tokens are masked or revealed at each step, and therefore the pace and pattern of the diffusion process.
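The three innovations above fit together in a short sampling loop. The sketch below assumes a linear schedule (an equal share of tokens revealed at each step) and low-confidence remasking: at every step the model predicts all masked positions in parallel, the most confident predictions are committed, and the rest are remasked for later steps. `toy_predictor` is a hypothetical stand-in for a trained network, and the function names are ours.

```python
MASK = None  # marks a position that is still masked


def toy_predictor(target):
    """Hypothetical stand-in for a trained mask predictor.

    Proposes the token from `target` at every position, with confidence that
    decreases with position so the example is deterministic.
    """
    def predict(seq):
        return [(target[i], 1.0 / (1 + i)) for i in range(len(seq))]
    return predict


def sample(length, steps, predict):
    """Masked-diffusion sampling: linear schedule plus low-confidence remasking."""
    seq = [MASK] * length
    trace = []
    for k in range(steps):
        # Linear schedule: how many positions should remain masked after step k.
        keep_masked = length * (steps - k - 1) // steps
        # Parallel prediction: one call proposes a token for every position.
        preds = predict(seq)
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        # Commit the most confident predictions; the others are remasked for later steps.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[: len(masked) - keep_masked]:
            seq[i] = preds[i][0]
        trace.append(list(seq))
    return seq, trace


target = "language diffusion models refine text step by step".split()
final, trace = sample(len(target), steps=4, predict=toy_predictor(target))
for state in trace:
    print(" ".join("[MASK]" if tok is MASK else tok for tok in state))
```

Because the toy predictor's confidence falls with position, the text here fills in left to right; a trained model's confidences would produce a less regular order. LLaDA also describes a random remasking variant, which would replace the confidence sort with a random choice of positions.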

Impact of model scale on performance: how different parameter sizes affect generation quality.

Scale plays a central role in Language Diffusion Models. LLaDA, with 8B parameters, demonstrates that diffusion-based approaches can achieve performance comparable to strong traditional language models of the same size, such as LLaMA3 8B. As model size increases, generation quality and coherence generally improve, including the model's ability to stay consistent across multiple refinement steps.

Multilingual classroom presentations, for example, can benefit from models that progressively refine content across several languages with greater accuracy.

Practical Applications and Use Cases

The iterative refinement capability of Language Diffusion Models opens up a wide range of practical applications that complement what traditional language models offer. These applications draw on the strengths of diffusion-based approaches: finer control over how text evolves and the chance to improve quality through repeated refinement.

Creative Writing Assistance

LDMs excel at iteratively improving draft content, allowing writers to progressively refine their ideas through guided AI suggestions that maintain the author's voice and intent.

Content Ideation Expansion

Starting with core concepts, diffusion models can progressively expand ideas outward, helping creators develop comprehensive content plans from initial seed thoughts.

Translation Refinement

Beyond simple translation, LDMs can progressively adapt content between languages while preserving contextual nuances and cultural relevance through their iterative improvement process.

Educational Content Creation

Language educators can leverage LDMs to create progressively challenging learning materials that adapt to different proficiency levels through controlled generation complexity.
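The article does not spell out how these tools revise a draft. One plausible mechanism, assuming a masked diffusion model, is infilling: mask only the words to be revised, keep the rest of the draft fixed as context, and run the reverse process on the masked positions alone. In the sketch below, `refine_draft`, `toy_predictor`, and its `suggestions` mapping are hypothetical placeholders for a trained model and its sampler.

```python
MASK = None  # marks a position to be regenerated


def toy_predictor(suggestions):
    """Hypothetical predictor: proposes suggestions[i] for position i, all with equal confidence."""
    def predict(seq):
        return [(suggestions.get(i, "<unk>"), 1.0) for i in range(len(seq))]
    return predict


def refine_draft(draft, revise, steps, predict):
    """Regenerate only the positions in `revise`; all other tokens stay fixed as context."""
    seq = [MASK if i in revise else tok for i, tok in enumerate(draft)]
    n = sum(1 for tok in seq if tok is MASK)  # size of the span actually being revised
    for k in range(steps):
        keep_masked = n * (steps - k - 1) // steps  # linear schedule over the masked span
        preds = predict(seq)
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[: len(masked) - keep_masked]:
            seq[i] = preds[i][0]
    return seq


draft = "the results were very good and very clear".split()
revised = refine_draft(draft, revise={3, 4}, steps=2,
                       predict=toy_predictor({3: "highly", 4: "promising"}))
print(" ".join(revised))
```

Because the model sees the untouched words on both sides of each masked position, the revision can stay consistent with the author's surrounding text, which is the property the writing and translation use cases rely on.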

Content Transformation with PageOn.ai

How rough content drafts become polished visual expressions:

```mermaid
flowchart LR
    A[Rough Draft] -->|Initial Analysis| B[Content Structure]
    B -->|Apply Diffusion Model| C[Refined Text]
    C -->|PageOn Visualization| D[Interactive Visual]
    D -->|User Feedback| E[Final Polished Content]
    style A fill:#f9ebff
    style B fill:#ffe6cc
    style C fill:#e6f7ff
    style D fill:#d9f2e6
    style E fill:#FF8000,color:white
```

PageOn.ai's implementation of diffusion techniques transforms content creation by applying the principles of progressive refinement to both textual content and its visual representation. This approach enables users to start with rough drafts and iteratively improve both the language and visual elements until reaching a polished final product.

PageOn.ai's interface showing the progressive refinement of content from initial draft to visual presentation.

For those generating paper topics, language diffusion models can help develop and refine academic concepts over multiple iterations, making it easier to arrive at topics that are both original and well developed.

Technical Implementation Challenges

Despite their promising capabilities, implementing Language Diffusion Models in practical applications presents several significant technical challenges that must be addressed.

Technical challenge assessment: relative difficulty of overcoming various implementation challenges.

Key Implementation Hurdles

Computational Intensity

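Each generation requires one full forward pass over the entire sequence per denoising step, often dozens of steps or more. Autoregressive models also run one forward pass per generated token, but a key-value cache lets each pass process only the newest token. Standard diffusion models use bidirectional attention, so that cache does not apply directly, and total computation rises significantly.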

Speed vs. Quality Trade-off

More diffusion steps generally yield better quality but at the cost of slower generation. Finding the optimal balance for real-time applications remains challenging.
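A back-of-the-envelope model makes these two hurdles concrete. The sketch below is a simplification under stated assumptions: it counts token positions encoded and sequential forward passes, ignores attention's quadratic cost and hardware parallelism, assumes autoregressive decoding uses a key-value cache, and assumes the diffusion model re-encodes the full sequence at every step. The function name `decoding_cost` is ours.

```python
def decoding_cost(prompt_len, gen_len, steps=None):
    """Approximate decoding cost; steps=None means autoregressive decoding with a KV cache."""
    if steps is None:
        # Roughly one forward pass per generated token; cached positions are not re-encoded.
        return {
            "sequential_passes": gen_len,
            "positions_encoded": prompt_len + gen_len,
        }
    # Masked diffusion without caching: every step re-encodes prompt + full response,
    # but each step can commit several tokens in parallel.
    return {
        "sequential_passes": steps,
        "positions_encoded": steps * (prompt_len + gen_len),
        "tokens_per_step": gen_len / steps,
    }


for steps in (None, 32, 128, 256):
    print(steps, decoding_cost(prompt_len=64, gen_len=256, steps=steps))
```

Under these assumptions, fewer diffusion steps mean fewer sequential passes and less total work, but each step must commit more tokens at once from less context, which is where quality tends to suffer. More steps reverse the balance.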

Training Complexity

Training diffusion models requires specialized approaches to dataset preparation, noise scheduling, and optimization that differ significantly from traditional language model training.

Integration with Existing Pipelines

Incorporating iterative models into systems designed for single-pass generation requires substantial architecture modifications and possibly new API design patterns.

PageOn.ai's AI Blocks framework addresses many of these challenges by providing an abstraction layer that simplifies the integration of diffusion models. This modular approach allows developers to incorporate complex diffusion-based generation without having to manage the underlying computational complexities.

PageOn.ai AI Blocks Integration

Simplified integration architecture for language diffusion models:

```mermaid
graph TD
    A[Client Application] -->|API Request| B[PageOn.ai Gateway]
    B -->|Routing| C[AI Blocks Framework]
    C -->|Model Selection| D{Model Dispatcher}
    D -->|Diffusion Tasks| E[LDM Engine]
    D -->|Standard Tasks| F[Traditional LLM]
    E -->|Results| G[Response Handler]
    F -->|Results| G
    G -->|Formatted Response| B
    B -->|API Response| A
    style C fill:#FF8000,color:white
    style D fill:#FF8000,color:white
    style E fill:#ffe6cc
```

The framework's abstraction layer intelligently manages computational resources, optimizes the number of diffusion steps based on quality requirements, and provides standardized interfaces that make language diffusion models more accessible to developers without specialized expertise in diffusion mechanics.
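The routing and step-budget ideas in the diagram can be sketched as a small dispatcher. Everything here is hypothetical and for illustration only: the task names, quality tiers, step fractions, and the `Request`, `Route`, and `dispatch` names are invented, and nothing in this sketch reflects PageOn.ai's actual API or configuration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical routing table and step budgets, invented for illustration.
DIFFUSION_TASKS = {"refine", "infill", "rewrite"}
STEP_FRACTION = {"draft": 0.25, "standard": 0.5, "high": 1.0}


@dataclass
class Request:
    task: str
    gen_len: int
    quality: str = "standard"


@dataclass
class Route:
    engine: str
    steps: Optional[int]  # None for autoregressive decoding


def dispatch(req: Request) -> Route:
    """Send refinement-style tasks to the diffusion engine with a quality-scaled step budget."""
    if req.task in DIFFUSION_TASKS:
        # More steps for higher quality tiers, never fewer than one.
        steps = max(1, round(req.gen_len * STEP_FRACTION[req.quality]))
        return Route(engine="ldm", steps=steps)
    return Route(engine="autoregressive", steps=None)


print(dispatch(Request(task="refine", gen_len=256, quality="draft")))
print(dispatch(Request(task="summarize", gen_len=256)))
```

Tying the step budget to both output length and a quality tier is one simple way to expose the speed vs. quality trade-off without asking callers to understand diffusion steps directly.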

Comparing Performance with Traditional Models

How do Language Diffusion Models stack up against traditional transformer-based language models? The performance comparison reveals interesting strengths and trade-offs that help determine which approach is best suited for different applications.

Performance metrics for LLaDA vs. LLaMA3 8B, compared across standard language model benchmarks.

| Characteristic | Traditional LLMs | Language Diffusion Models |
|---|---|---|
| Generation Speed | Generally faster (one cached pass per token) | Generally slower (many full-sequence passes) |
| Output Controllability | Limited (tokens are fixed once generated) | High (iterative refinement) |
| Text Diversity | Can require special sampling techniques | Potentially higher through diffusion |
| Coherence | Strong in shorter contexts | Improves with iterations |
| Resource Efficiency | Higher (inference) | Lower (multiple passes) |
| Long-form Content | Coherence can drift over long outputs | Can benefit from progressive refinement |

The comparison between LLaDA and LLaMA3 8B shows a mixed picture rather than a clear winner: reported results are competitive across many standard benchmarks, with each model ahead on some tasks. Beyond benchmarks, language diffusion models are often credited with particular strength in creative tasks and in maintaining coherence across lengthy outputs, although these advantages are less firmly established than the benchmark results.

This performance profile suggests that language diffusion models are particularly valuable for applications where creative quality and refinement are more important than raw generation speed. For language functions lesson planning, the iterative nature of diffusion models can help educators develop materials that progressively build on language concepts with increasing sophistication.

PageOn.ai's Deep Search technology can help users identify which model approach is best suited for their specific content needs, automatically selecting between diffusion-based and traditional models based on the content type and quality requirements.

The Future Landscape of Language Diffusion Models

The field of Language Diffusion Models is rapidly evolving, with several emerging trends and directions poised to shape its future development. Understanding these trajectories helps organizations prepare for the next wave of language AI capabilities.

Conceptual illustration of future multimodal language diffusion models combining text and visual elements.

Emerging Research Directions

Projected research focus areas (2024-2026): distribution of research attention across emerging directions.

Multimodal Convergence

Perhaps the most exciting direction is the convergence of language diffusion with multimodal capabilities. Future models will likely generate and refine text while simultaneously considering visual content, creating more coherent cross-modal experiences. This integration would allow for text that not only reads well but also aligns closely with accompanying visual elements.

Efficiency Breakthroughs

A major focus of current research is addressing the computational intensity of diffusion models. Techniques like distillation, adaptive step sizing, and specialized hardware acceleration promise to make language diffusion models more practical for real-time applications.
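The section names adaptive step sizing without describing it. One simple scheme, shown here as an assumption rather than a specific published method, is confidence-threshold decoding: at each step, commit every masked token whose confidence clears a threshold, and fall back to the single most confident token when none does. Easy stretches of text then finish in fewer steps. The names `threshold_decode` and `toy_predictor` are ours, and the fixed confidences are hypothetical; a trained model's confidences would change as context fills in.

```python
MASK = None  # marks a position that is still masked


def toy_predictor(target, confidences):
    """Hypothetical predictor returning (token, confidence) for every position."""
    def predict(seq):
        return [(target[i], confidences[i]) for i in range(len(seq))]
    return predict


def threshold_decode(length, predict, threshold=0.9):
    """Commit all masked tokens with confidence >= threshold; always commit at least one."""
    seq = [MASK] * length
    steps = 0
    while any(tok is MASK for tok in seq):
        preds = predict(seq)
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        chosen = [i for i in masked if preds[i][1] >= threshold]
        if not chosen:
            # Guarantee progress: take the single most confident masked position.
            chosen = [max(masked, key=lambda i: preds[i][1])]
        for i in chosen:
            seq[i] = preds[i][0]
        steps += 1
    return seq, steps


target = "diffusion models decode many tokens together".split()
conf = [0.99, 0.97, 0.40, 0.95, 0.30, 0.92]
out, steps = threshold_decode(len(target), toy_predictor(target, conf))
print(" ".join(out), f"({steps} steps for {len(target)} tokens)")
```

Raising the threshold moves the decoder toward one token per step (slower, more cautious); lowering it commits more tokens per step, trading some quality for speed.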

Controllable Generation Frameworks

Future developments will likely emphasize even greater control over the generation process, allowing users to guide not just what content is created but how it evolves through each refinement step. This could revolutionize creative writing assistance and content development workflows.

Over the next 2-3 years, we can anticipate several key developments in the language diffusion landscape:

- Models reaching 100B+ parameters with dramatically improved efficiency

- Specialized diffusion models for specific domains like legal, medical, and technical content

- Consumer-friendly applications that hide the complexity while delivering the quality benefits

- Integration with agent frameworks for more sophisticated assistive capabilities

- Novel hybrid approaches combining strengths of diffusion and traditional methods

PageOn.ai's agentic approach positions users to take advantage of these evolving capabilities by providing an abstraction layer that can adapt as the underlying models improve. This future-proof design ensures that as language diffusion technology advances, users can immediately leverage new capabilities without significant workflow changes.

For content that mixes present and past tense, future diffusion models may handle temporal elements in language more carefully, helping keep tense usage consistent throughout generated content.

Building Visual Understanding with Language Diffusion

Complex concepts in language diffusion models become more accessible when visualized effectively. PageOn.ai provides tools specifically designed to transform these abstract ideas into clear, intuitive visual representations.

Visualizing the Language Diffusion Process

A step-by-step representation of how text emerges through diffusion:

```mermaid
graph TD
    subgraph "Forward Process (Adding Noise)"
        A[Original Text] -->|t=0.2| B[Slightly Masked]
        B -->|t=0.5| C[Partially Masked]
        C -->|t=0.8| D[Heavily Masked]
        D -->|t=1.0| E[Fully Masked]
    end
    subgraph "Reverse Process (Denoising)"
        E -->|Step 1| F[Initial Prediction]
        F -->|Step 2| G[Improved Prediction]
        G -->|Step 3| H[Further Refined]
        H -->|Step N| I[Final Generated Text]
    end
    style A fill:#e6f7ff
    style E fill:#f9ebff
    style I fill:#FF8000,color:white
```
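The forward half of this diagram can be reproduced with one random draw per token. In this sketch (our own construction, with a hypothetical `[MASK]` string, whitespace tokens, and a function named `forward_trajectory`), each token gets a threshold u drawn uniformly from [0, 1) and is masked at level t whenever u < t. Every token is then masked with probability t, as the forward process requires, and the masks are nested, so each level in the diagram extends the previous one.

```python
import random

MASK = "[MASK]"  # hypothetical mask token


def forward_trajectory(tokens, levels, seed=0):
    """Return the masked sequence at each noise level t, using shared per-token thresholds."""
    rng = random.Random(seed)
    thresholds = [rng.random() for _ in tokens]  # one u ~ U[0, 1) per token
    return {
        t: [MASK if u < t else tok for u, tok in zip(thresholds, tokens)]
        for t in levels
    }


sentence = "text gradually disappears under the mask".split()
for t, seq in forward_trajectory(sentence, [0.2, 0.5, 0.8, 1.0]).items():
    print(f"t={t}:", " ".join(seq))
```

Sharing one threshold per token is only a convenient way to draw a consistent trajectory for illustration; training needs just a single noise level per example, as in the pretraining masking described earlier.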

PageOn.ai's visualization tools help transform technical concepts into accessible diagrams and interactive experiences. This makes language diffusion models more understandable to audiences without extensive technical backgrounds.

Interactive Step Visualization

PageOn.ai allows users to create interactive presentations where audience members can examine each step of the diffusion process and see how text emerges through progressive denoising.

Visual Features

- Token-by-token emergence visualization

- Heat maps showing prediction confidence

- Comparative views across diffusion steps

- Animated transitions between states

PageOn.ai's interactive diffusion process visualization interface.

Educational Applications

The visualization tools are particularly valuable in educational contexts, where understanding the progression of language models is crucial for student comprehension. Instructors can create interactive lessons that demonstrate:

Concept Progression

How complex language concepts build through iterative steps, much like the diffusion process itself

Error Correction

Visual demonstration of how language errors are progressively identified and corrected

Content Evolution

The transformation of basic ideas into sophisticated, well-structured content

An example educational slide created with PageOn.ai to explain language diffusion models.

By leveraging PageOn.ai's visualization capabilities, complex language diffusion concepts become accessible to wider audiences. This visual approach helps bridge the gap between technical understanding and practical application, enabling more effective communication about these powerful new language models.

Transform Your Visual Expressions with PageOn.ai

Harness the power of language diffusion models to create stunning visual content that communicates complex ideas with clarity and impact. PageOn.ai's intuitive platform makes it easy to transform technical concepts into engaging visual stories.

Embracing the Future of Language Diffusion

As Language Diffusion Models continue to evolve, they represent a significant shift in how we approach text generation and refinement. Their iterative nature provides unprecedented control over the quality and characteristics of generated content, opening new possibilities for content creators across industries.

PageOn.ai stands at the forefront of this transformation, providing tools that not only leverage the capabilities of language diffusion but also make them accessible through intuitive visualization and content creation interfaces. By translating complex diffusion processes into clear visual expressions, PageOn.ai empowers users to communicate sophisticated ideas effectively.

Whether you're an educator explaining language concepts, a content creator developing engaging materials, or a technical professional communicating complex ideas, the combination of language diffusion models and PageOn.ai's visualization tools offers a powerful approach to creating compelling, clear, and impactful content.
