Revolutionizing 3D Graphics Creation: How AI is Transforming Visual Design Workflows

A new era of 3D creation powered by artificial intelligence

I've witnessed firsthand how AI technologies are fundamentally changing the way we approach 3D graphics creation. From game development to architectural visualization, the barriers to creating stunning 3D visuals are falling away as AI tools become more powerful and accessible.

The Evolution of 3D Graphics Creation

I've been fascinated by how 3D graphics creation has transformed over the decades. In the early days, creating 3D models was an extremely technical process requiring specialized knowledge of complex software tools like Maya, 3ds Max, and Blender. Artists would spend countless hours manually placing vertices, creating polygons, and meticulously crafting textures.

From Manual to AI-Assisted

The limitations of traditional approaches were significant:

- High technical barriers to entry

- Extremely time-consuming creation process

- Specialized training requirements

- Limited iteration capabilities

The shift to AI-assisted workflows has democratized 3D creation, making it accessible to creators without technical expertise in modeling.

The 3D Creation Evolution Timeline

```mermaid
timeline
    title Evolution of 3D Graphics Creation
    1980s : Early 3D wireframe graphics
    1990s : Polygon-based modeling tools
          : Rise of specialized 3D software
    2000s : Improved texturing techniques
          : Physics-based rendering
    2010s : Procedural generation tools
          : Real-time rendering advances
    2020s : Text-to-3D AI generation
          : Image-to-3D conversion
          : AI-assisted modeling workflows
    2023+ : Conversation-based 3D creation (PageOn.ai)
          : Democratized 3D creation for non-technical users
```

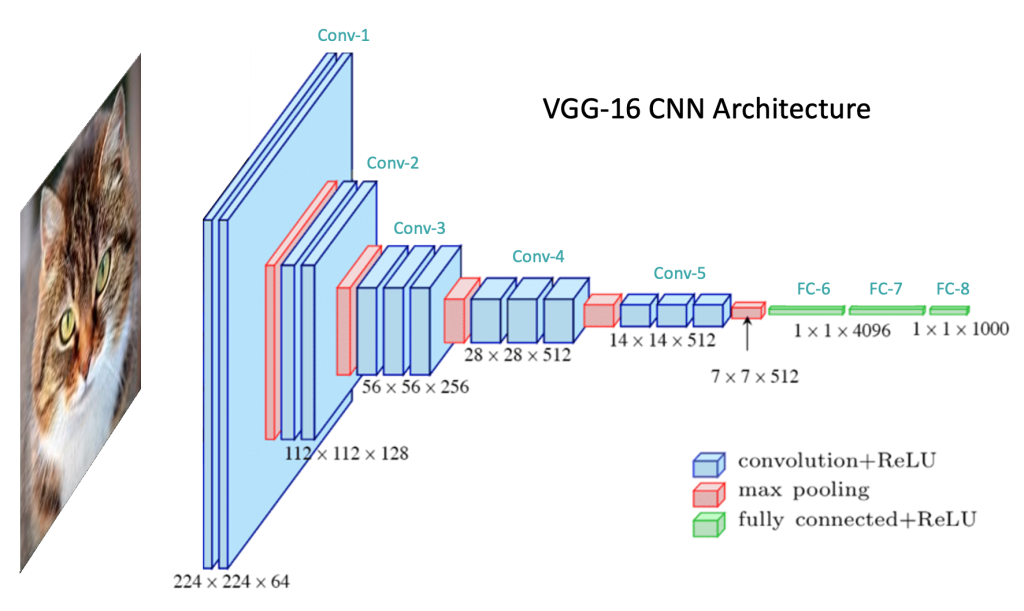

The technological breakthroughs enabling AI-generated 3D graphics have been remarkable. Deep learning techniques such as diffusion models, neural radiance fields (NeRF), and transformer-based networks have made it possible to generate complex 3D assets from simple text prompts or 2D images.

PageOn.ai's conversation-based creation approach represents the next evolution in this journey. Instead of requiring technical prompts or modeling knowledge, I can now simply describe what I want to create in natural language, and the AI helps translate my vision into visual 3D concepts that can be easily understood and shared with others.

Understanding AI-Powered 3D Generation Technologies

Text-to-3D Model Generation

I find text-to-3D generation particularly fascinating. These systems allow me to describe an object or scene in natural language, and the AI interprets my description to create a corresponding 3D model. The technology works by:

- Processing natural language to understand desired shape, texture, and context

- Generating an initial 3D representation with diffusion models, either directly in 3D or by optimizing the shape against a pretrained 2D image diffusion model

- Refining the model through multiple iterations

- Applying textures and materials based on the text description

This technology has made 3D model creation increasingly accessible to non-technical users.

Text-to-3D Generation Process

```mermaid
flowchart LR
    A[Text Prompt] -->|Natural Language Processing| B[Semantic Understanding]
    B --> C[3D Shape Generation]
    B --> D[Material Properties]
    C --> E[Initial Mesh]
    D --> F[Texture Maps]
    E --> G[Refined 3D Model]
    F --> G
    G --> H[Final 3D Asset]
    style A fill:#FF8000,stroke:#333,stroke-width:2px
    style H fill:#FF8000,stroke:#333,stroke-width:2px
```

Image-to-3D Conversion

Image-to-3D conversion, another approach I've used, transforms 2D images into three-dimensional models. This technology is particularly useful when I have reference images but lack the technical skills to model from scratch.

The process typically involves:

- Analyzing the 2D image to infer object shape and structure

- Estimating a depth map that captures spatial relationships

- Generating a 3D mesh based on the interpreted geometry

- Projecting the original image as texture onto the 3D model
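The depth-map, meshing, and texture-projection steps can be sketched in plain Python. This is a minimal illustration under stated assumptions. It assumes a pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`) and a depth map that some monocular depth model has already predicted. The function back-projects each pixel into 3D and joins neighbouring pixels into triangles. It then uses each pixel's normalized image position as its UV, so the source photo maps straight back onto the mesh. Real tools also handle depth discontinuities and occluded regions, which this sketch ignores.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
UV = Tuple[float, float]
Tri = Tuple[int, int, int]


def depth_to_mesh(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> Tuple[List[Vec3], List[UV], List[Tri]]:
    """Back-project a depth map (at least 2x2) into a textured triangle grid.

    Assumes a pinhole camera: focal lengths fx, fy and principal point (cx, cy)
    in pixels. Returns vertices, per-vertex UVs and triangle indices.
    """
    h, w = len(depth), len(depth[0])
    vertices: List[Vec3] = []
    uvs: List[UV] = []
    for v in range(h):
        for u in range(w):
            z = depth[v][u]
            # Pinhole back-projection: pixel (u, v) at depth z -> camera space.
            vertices.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
            # Texture projection: the pixel's normalized position becomes its UV
            # (V flipped so the top image row maps to v = 1).
            uvs.append((u / (w - 1), 1.0 - v / (h - 1)))

    triangles: List[Tri] = []
    for v in range(h - 1):
        for u in range(w - 1):
            i = v * w + u  # top-left vertex of this 2x2 pixel quad
            # Split each quad of neighbouring pixels into two triangles.
            triangles.append((i, i + w, i + 1))
            triangles.append((i + 1, i + w, i + w + 1))
    return vertices, uvs, triangles
```

Using one vertex per pixel keeps the UV mapping trivial. The trade-off is a dense mesh, which usually needs decimation afterwards.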

Recent advances in neural radiance fields (NeRF) have dramatically improved the quality of these conversions, allowing for the creation of highly detailed 3D assets from even single images in some cases.
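At the heart of NeRF is a volume-rendering step. A network predicts a density sigma and a colour c at sample points along each camera ray. The pixel colour is their alpha-composited sum, C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, with T_i = exp(-sum_{j<i} sigma_j * delta_j), where delta_i is the spacing between samples. The sketch below implements only that compositing rule for a single ray. It takes densities and colours as direct inputs; in a real NeRF they would come from a trained network.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(
    sigmas: Sequence[float], colors: Sequence[RGB], deltas: Sequence[float]
) -> RGB:
    """Alpha-composite samples along one ray with NeRF's quadrature rule.

    sigmas: volume density at each sample, ordered front to back
    colors: RGB colour at each sample
    deltas: distance between consecutive samples
    """
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unabsorbed
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # multiply by exp(-sigma * delta)
    return (out[0], out[1], out[2])
```

Because every step is differentiable, a network can be trained by comparing composited colours against the pixels of the input photos.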

Deep Learning for Textures and Materials

The role of deep learning in creating realistic textures and materials has been transformative. Modern AI systems can:

- Generate physically plausible material properties

- Create consistent texture maps (diffuse, normal, roughness, etc.)

- Automatically unwrap UV coordinates

- Simulate material aging and weathering effects
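AI texture tools typically output these maps directly. One classical, non-AI way to keep a normal map consistent with a height (displacement) map is to derive it with finite differences. The sketch below assumes a grayscale height map with values in [0, 1] and a hypothetical `strength` parameter that controls bump intensity. It returns tangent-space normals encoded in the usual 0-255 RGB range.

```python
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def height_to_normal_map(height: List[List[float]], strength: float = 1.0) -> List[List[Pixel]]:
    """Derive an 8-bit tangent-space normal map from a height map in [0, 1].

    Uses central differences and clamps at the borders. The Y component is
    computed in image-row order (y grows downward); engines disagree on the
    green-channel convention (often called OpenGL vs DirectX), so flip it if needed.
    """
    h, w = len(height), len(height[0])

    def sample(x: int, y: int) -> float:
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return height[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    def encode(component: float) -> int:
        # Map a unit-normal component from [-1, 1] to the 0-255 byte range.
        return round((component * 0.5 + 0.5) * 255)

    normal_map: List[List[Pixel]] = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            # Surface slope along x and y, scaled by the bump strength.
            dx = (sample(x + 1, y) - sample(x - 1, y)) * 0.5 * strength
            dy = (sample(x, y + 1) - sample(x, y - 1)) * 0.5 * strength
            # The normal tilts away from the uphill direction: (-dx, -dy, 1), normalized.
            length = math.sqrt(dx * dx + dy * dy + 1.0)
            row.append((encode(-dx / length), encode(-dy / length), encode(1.0 / length)))
        normal_map.append(row)
    return normal_map
```

A flat height map encodes to the familiar light-blue (128, 128, 255). Slopes shift the red and green channels away from 128.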

PageOn.ai's AI Blocks feature helps me visualize these complex 3D concepts without requiring technical knowledge. I can create modular visual explanations of 3D generation processes, material properties, or rendering techniques that make sense to stakeholders across technical and non-technical backgrounds.

By using AI graphic generators, I can quickly illustrate concepts that would otherwise require extensive technical modeling skills.

Practical Applications Across Industries

Game Development: Rapid Prototyping

In my experience working with game developers, AI-generated 3D models have revolutionized the prototyping process. Teams can now:

- Generate placeholder assets in minutes instead of days

- Quickly iterate on environmental designs

- Explore multiple character concepts simultaneously

- Create diverse props and objects without dedicated 3D artists

This allows smaller indie studios to compete with larger teams by reducing the resource requirements for asset creation.

XR (Extended Reality) Content Creation

The immersive nature of XR experiences demands rich, detailed 3D content. AI tools are helping creators meet this demand by:

- Generating environment assets that respond to real-world physics

- Creating interactive objects with appropriate collision properties

- Developing realistic avatars from simple descriptions or photos

- Building entire virtual spaces from conceptual descriptions

These capabilities are particularly valuable for educational XR experiences, where specific environments or objects might be needed to illustrate concepts.

[Chart: Industry Adoption of AI-Generated 3D Content]

3D Printing: From Concept to Physical Object

I've seen AI tools transform the 3D printing workflow by bridging the gap between idea and printable model:

- Converting sketches or descriptions into print-ready 3D models

- Automatically optimizing models for structural integrity

- Suggesting appropriate infill patterns and support structures

- Generating articulated or functional parts based on requirements

This has made custom manufacturing and prototyping accessible to hobbyists and small businesses who previously lacked the technical modeling skills required.

Architectural Visualization and Product Design

In architecture and product design, AI-generated 3D models are streamlining workflows by:

- Creating photorealistic architectural visualizations from floor plans

- Generating multiple design variations for client review

- Producing detailed product mockups from concept sketches

- Simulating different materials and lighting conditions

PageOn.ai's Deep Search functionality helps me integrate industry-specific 3D assets into presentations, making it easy to find the perfect visual reference to illustrate concepts across these different application areas. This is particularly valuable when communicating with stakeholders who may not have technical 3D knowledge but need to understand spatial concepts or design decisions.

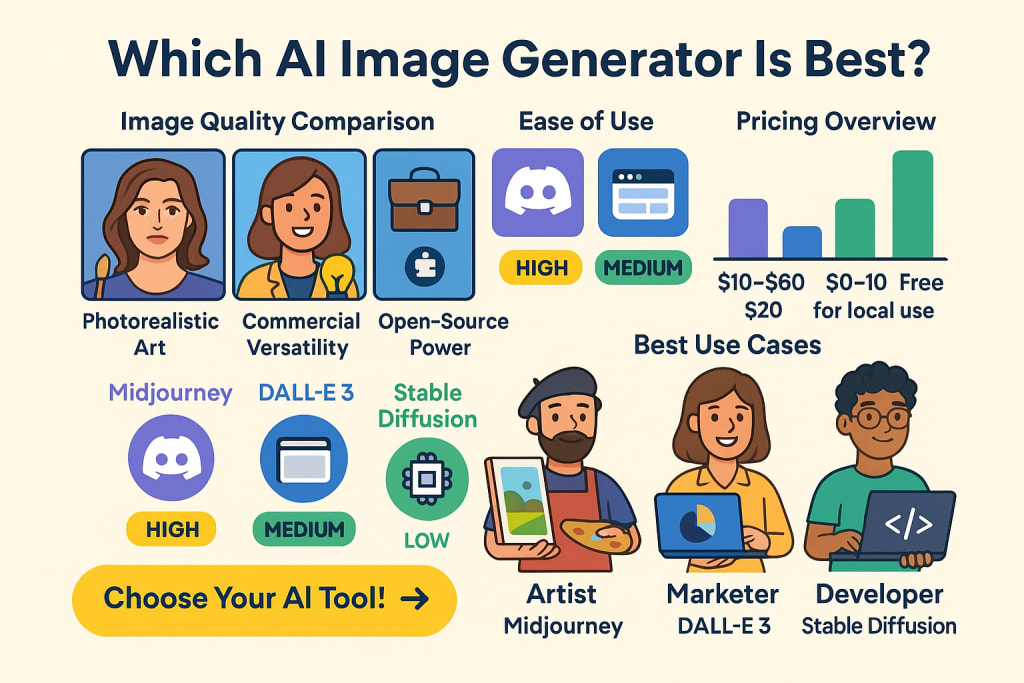

Comparing Leading AI 3D Generation Tools

In my exploration of AI 3D generation tools, I've evaluated various solutions across different categories. Each has unique strengths depending on your specific needs and workflow requirements.

Text-Based 3D Generators

These tools allow you to create 3D models by describing them in natural language:

| Tool | Strengths | Limitations | Best For |
|---|---|---|---|
| Tool A | High fidelity, complex geometry support | Learning curve for prompt engineering | Detailed character models |
| Tool B | Fast generation, intuitive interface | Limited control over fine details | Rapid prototyping |
| Tool C | Export formats for all major platforms | Subscription cost, internet dependency | Production-ready assets |

The field of AI graphic image generation continues to evolve rapidly, with new tools emerging regularly.

Image-Based 3D Conversion Tools

These tools transform 2D images into 3D models:

- Accuracy: Varies based on image quality and complexity

- Use cases: Product visualization, simple object recreation

- Limitations: May struggle with complex geometry or occluded parts

- Advantages: Quick conversion from existing references

[Chart: AI 3D Tool Comparison Across Key Metrics]

Video-to-3D Solutions

This emerging technology creates 3D models from video footage:

- Uses multiple frames to build more complete geometry

- Better captures objects from multiple angles

- Often employs neural radiance fields (NeRF) technology

- Particularly useful for capturing real-world environments

While still developing, these tools show great promise for capturing dynamic objects and environments.

Evaluation Criteria

When assessing AI 3D generation tools, I consider these key factors:

- Cost: Pricing models range from free tiers to subscription-based services

- Feature Set: Available model types, export formats, and customization options

- Ease of Use: Interface design and learning curve for new users

- Output Quality: Fidelity, topology quality, and texture resolution

- Support: Documentation, tutorials, and customer service
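A weighted scoring matrix is one way to turn these criteria into a decision. The sketch below assumes you rate each tool from 1 to 5 on each criterion, where higher is always better (so 5 means cheapest for cost), and choose your own weights. The tool names, ratings, and weights in the usage lines are placeholders, not measurements of any real product.

```python
from typing import Dict, List, Tuple

CRITERIA = ("cost", "features", "ease_of_use", "output_quality", "support")


def rank_tools(
    ratings: Dict[str, Dict[str, float]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (tool, weighted score) pairs sorted best first.

    ratings: tool name -> {criterion: rating on a 1-5 scale, higher is better}
    weights: criterion -> relative importance (normalized here to sum to 1)
    """
    total = sum(weights[c] for c in CRITERIA)
    scores = []
    for tool, r in ratings.items():
        # Weighted average of the per-criterion ratings.
        score = sum(weights[c] * r[c] for c in CRITERIA) / total
        scores.append((tool, round(score, 2)))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Placeholder inputs for illustration only.
ratings = {
    "tool_x": {"cost": 4, "features": 3, "ease_of_use": 5, "output_quality": 3, "support": 4},
    "tool_y": {"cost": 2, "features": 5, "ease_of_use": 3, "output_quality": 5, "support": 3},
}
weights = {"cost": 1, "features": 2, "ease_of_use": 1, "output_quality": 3, "support": 1}
print(rank_tools(ratings, weights))
```

Changing the weights to match your project, for example by raising `cost` for a hobby budget, can reorder the ranking without re-rating any tool.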

PageOn.ai's structured content blocks make it easy to create visual comparisons of these tools, helping teams make informed decisions about which solutions best fit their specific needs and workflows. I can quickly generate comparison tables, feature matrices, and decision trees that clearly communicate the strengths and limitations of different approaches.

Workflow Integration Strategies

Incorporating AI-Generated 3D Models into Design Pipelines

I've found several effective strategies for integrating AI-generated 3D assets into existing workflows:

AI-Enhanced 3D Design Pipeline

```mermaid
flowchart TD
    A[Concept Development] --> B{AI or Manual?}
    B -->|AI Generation| C[Text/Image Input]
    B -->|Traditional| D[Manual Modeling]
    C --> E[AI Model Generation]
    E --> F[Post-Processing]
    D --> F
    F --> G[Integration with Project]
    G --> H[Rendering/Export]
    H --> I[Final Delivery]
    style A fill:#FF8000,stroke:#333,stroke-width:2px
    style E fill:#FF8000,stroke:#333,stroke-width:2px
    style I fill:#FF8000,stroke:#333,stroke-width:2px
```

Key integration points in the workflow include:

- Initial concept development and ideation

- Rapid prototyping of multiple design variations

- Asset creation for background or secondary elements

- Placeholder generation for later refinement

Hybrid Approaches: Combining AI Generation with Manual Refinement

In my experience, the most effective workflows combine AI generation with manual refinement:

- Generate initial model with AI tools

- Import into traditional 3D software

- Clean up topology and optimize mesh

- Enhance textures and materials

- Add details and refine proportions

- Optimize for target platform
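The "clean up topology" step often begins with welding. Meshes exported as triangle soup can contain duplicated vertices along seams, and merging those duplicates can leave zero-area triangles behind. The sketch below covers only that one cleanup step. It assumes a plain list-of-tuples mesh and a hypothetical tolerance `eps`. Desktop tools such as Blender's Merge by Distance do this more robustly and also respect UV seams, which this version ignores.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def weld_vertices(
    vertices: List[Vec3], triangles: List[Tri], eps: float = 1e-5
) -> Tuple[List[Vec3], List[Tri]]:
    """Merge vertices that snap to the same eps-sized grid cell, then drop degenerate triangles.

    Grid snapping is an approximation: two points closer than eps can still
    fall into neighbouring cells and remain unmerged.
    """
    cell_to_new: Dict[Tuple[int, int, int], int] = {}
    remap: List[int] = []
    welded: List[Vec3] = []
    for v in vertices:
        key = (round(v[0] / eps), round(v[1] / eps), round(v[2] / eps))
        if key not in cell_to_new:
            cell_to_new[key] = len(welded)
            welded.append(v)  # keep the first vertex seen in this cell
        remap.append(cell_to_new[key])

    cleaned: List[Tri] = []
    for a, b, c in triangles:
        ta, tb, tc = remap[a], remap[b], remap[c]
        # A triangle that now references the same vertex twice has zero area.
        if ta != tb and tb != tc and ta != tc:
            cleaned.append((ta, tb, tc))
    return welded, cleaned
```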

This approach leverages AI for speed while maintaining quality control through human expertise.

Asset Management for AI-Created 3D Content

Managing the growing library of AI-generated assets requires thoughtful organization:

- Consistent naming conventions for quick identification

- Metadata tagging for searchability (style, purpose, project)

- Version control to track iterations and refinements

- Storage solutions that balance accessibility and security

- Prompt libraries to recreate successful generations
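One way to put the naming, tagging, versioning, and prompt-library practices into effect is a small JSON sidecar record for each asset. The field names and the `project_asset_v###` naming pattern below are hypothetical conventions chosen for illustration. They are not an established standard.

```python
import json
import re
from dataclasses import asdict, dataclass, field
from typing import List

# Hypothetical convention: <project>_<asset>_v<3-digit version>, lowercase.
NAME_PATTERN = re.compile(r"^[a-z0-9]+_[a-z0-9-]+_v\d{3}$")


@dataclass
class AssetRecord:
    name: str  # e.g. "castle_gate-tower_v002"
    tool: str  # generator used, including its version
    prompt: str  # exact prompt, so the result can be regenerated
    tags: List[str] = field(default_factory=list)  # style, purpose, project
    parent: str = ""  # earlier version this one was refined from

    def __post_init__(self) -> None:
        # Reject names that break the convention at creation time.
        if not NAME_PATTERN.match(self.name):
            raise ValueError(f"asset name {self.name!r} breaks the naming convention")

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def next_version(name: str) -> str:
    """Bump the trailing version: castle_gate_v002 -> castle_gate_v003."""
    stem, version = name.rsplit("_v", 1)
    return f"{stem}_v{int(version) + 1:03d}"
```

Storing the prompt next to the mesh means a successful generation can be reproduced or varied later, and the sidecar files can sit under ordinary version control.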

Effective asset management becomes increasingly important as teams scale their use of AI-generated content.

Collaboration Workflows for Teams Using AI 3D Tools

When teams collaborate on AI-generated 3D projects, I recommend these practices:

- Shared prompt libraries with annotations on effective approaches

- Clear documentation of AI tools and settings used

- Defined roles for AI generation vs. manual refinement

- Regular review sessions to maintain quality and style consistency

- Cross-training team members on both AI and traditional tools

PageOn.ai helps teams collaborate on visual 3D concepts without technical barriers by providing a shared workspace where both technical and non-technical team members can contribute to the development of visual ideas. The platform's conversation-based approach means that team members can describe what they want to see, and the AI helps translate those descriptions into visual concepts that everyone can understand and refine together.

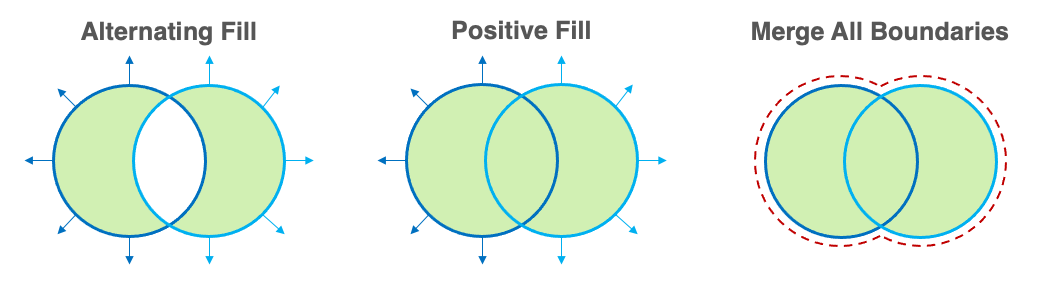

Overcoming Technical Challenges

Common Limitations in AI-Generated 3D Models

Despite impressive advances, AI-generated 3D models often face several limitations:

- Topology issues: Non-optimal edge flow and polygon distribution

- Manifold errors: Non-manifold or non-watertight geometry, such as holes, edges shared by more than two faces, or self-intersecting surfaces

- UV mapping problems: Stretched or inefficient texture coordinates

- Detail inconsistency: Uneven level of detail across the model

- Physical implausibility: Models that couldn't exist in the real world
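A quick way to catch manifold problems is to count how many triangles use each edge. In a closed, manifold triangle mesh, every edge is shared by exactly two faces. An edge used once lies on a hole (a boundary edge), and an edge used three or more times is non-manifold. This sketch checks only that edge condition. It does not detect self-intersections, non-manifold vertices, or inconsistent face orientation, which each need their own tests.

```python
from collections import Counter
from typing import Dict, List, Tuple

Tri = Tuple[int, int, int]


def edge_report(triangles: List[Tri]) -> Dict[str, int]:
    """Classify undirected edges by how many triangles share them."""
    counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1  # undirected edge key
    return {
        "boundary": sum(1 for n in counts.values() if n == 1),  # holes
        "non_manifold": sum(1 for n in counts.values() if n > 2),
        "total": len(counts),
    }


def is_watertight(triangles: List[Tri]) -> bool:
    """True when every edge is shared by exactly two triangles."""
    report = edge_report(triangles)
    return report["boundary"] == 0 and report["non_manifold"] == 0
```

A closed tetrahedron passes. Deleting any one of its faces leaves three boundary edges and fails the check, which is the kind of defect that makes a model unprintable.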

[Chart: Common Issues in AI-Generated 3D Models]

Techniques for Improving Texture and Material Quality

I've developed several approaches to enhance the quality of AI-generated textures:

- Manual touch-up of texture maps in image editing software

- Re-baking textures from high-poly to optimized low-poly models

- Creating custom shader networks for more realistic material behavior

- Using AI upscaling tools specifically designed for texture enhancement

- Combining procedural textures with AI-generated base maps

These techniques can significantly improve the final appearance of AI-generated assets, particularly for close-up views and hero objects.

Optimizing 3D Assets for Different Platforms

AI-generated models often need optimization for specific platforms:

| Platform | Optimization Focus | Typical Requirements |
|---|---|---|
| Mobile VR/AR | Polygon count, texture size | <10K triangles, 1K-2K textures |
| Desktop Games | LOD system, texture streaming | 10K-100K triangles, 2K-4K textures |
| 3D Printing | Watertight mesh, wall thickness | Manifold geometry, minimum 1-2mm walls |
| Film/Pre-rendered | Detail, subdivision capability | High-res meshes, 4K-8K textures |
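These budgets can be encoded as an automated check in an export script. This sketch uses the table's rule-of-thumb figures, with two interpretive assumptions. First, the top of each texture range is treated as the limit (1K-2K as 2048 px, 2K-4K as 4096 px). Second, the triangle figures are treated as per-asset caps. 3D printing and film are left out because their constraints are geometric or open-ended rather than simple numeric caps.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Budget:
    max_triangles: int
    max_texture_px: int  # longest side of any texture, in pixels


# Upper ends of the table's rule-of-thumb ranges (assumed to be per asset).
BUDGETS: Dict[str, Budget] = {
    "mobile_xr": Budget(max_triangles=10_000, max_texture_px=2048),
    "desktop_game": Budget(max_triangles=100_000, max_texture_px=4096),
}


def check_budget(platform: str, triangle_count: int, texture_sizes: List[int]) -> List[str]:
    """Return human-readable problems; an empty list means the asset fits."""
    budget = BUDGETS[platform]
    problems = []
    if triangle_count > budget.max_triangles:
        problems.append(
            f"{triangle_count} triangles exceeds {budget.max_triangles} for {platform}"
        )
    for size in texture_sizes:
        if size > budget.max_texture_px:
            problems.append(f"{size}px texture exceeds {budget.max_texture_px}px for {platform}")
    return problems
```

Run against a freshly generated model, a non-empty result signals that decimation or texture downscaling is needed before the asset ships to that platform.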

For 2D elements such as decals, icons, and UI overlays, AI vector graphics generators can produce resolution-independent assets that scale cleanly across these platforms.

When to Use AI vs. Traditional Modeling Approaches

Based on my experience, here's when each approach makes the most sense:

AI Modeling Excels At:

- Rapid prototyping and concept exploration

- Creating background or secondary assets

- Generating organic forms and natural elements

- Projects with tight deadlines or budget constraints

Traditional Modeling Excels At:

- Highly technical or precise models

- Assets requiring specific engineering constraints

- Hero objects requiring perfect topology

- Stylized designs with specific artistic direction

PageOn.ai helps teams clearly communicate technical requirements to non-technical stakeholders by visualizing these challenges and solutions in an accessible way. For example, I can create interactive diagrams that show the before-and-after of optimization processes, or visually explain the trade-offs between different approaches to 3D asset creation.

Future Directions in AI-Generated 3D Graphics

Emerging Technologies and Research Trends

I'm particularly excited about these cutting-edge developments in AI-generated 3D graphics:

- Neural Radiance Fields (NeRF): Creating photorealistic 3D scenes from images

- Differentiable Rendering: Computing gradients of rendered images with respect to scene parameters, so AI can optimize and manipulate 3D scenes from 2D images

- Physics-Informed Neural Networks: Generating models with realistic physical properties

- Multi-modal Generation: Creating 3D content from combined text, image, and audio inputs

Convergence of Generative AI with Real-Time Rendering

One of the most promising trends I've observed is the integration of generative AI directly into real-time rendering pipelines:

- On-demand asset generation within game engines

- AI-enhanced procedural generation for infinite worlds

- Dynamic content adaptation based on player behavior

- Real-time style transfer for instant visual customization

- AI directors that create environmental storytelling elements

These developments will enable increasingly dynamic and responsive virtual environments that can adapt to user needs and preferences in real-time.

[Chart: AI 3D Technology Adoption Timeline]

Democratization of 3D Creation

The democratization of 3D creation through increasingly intuitive tools is perhaps the most transformative trend:

- Conversation-based interfaces that require no technical knowledge

- AR/VR tools that allow spatial modeling through natural gestures

- Automated rigging and animation systems for non-animators

- Collaborative platforms where teams can co-create in real-time

- Educational systems that teach 3D concepts through AI assistance

These developments are opening 3D creation to entirely new audiences, from creators using AI tools for visual novel creation to people in other creative fields previously limited by technical barriers.

Ethical Considerations

As AI-generated 3D content becomes more prevalent, several ethical considerations emerge:

- Copyright and ownership of AI-generated 3D models

- Potential for creating misleading or deceptive visual content

- Environmental impact of compute-intensive 3D generation

- Accessibility and equity in access to AI creation tools

- Displacement of traditional 3D artists and modelers

Addressing these concerns proactively will be crucial for the healthy development of the field.

PageOn.ai's agentic approach anticipates the future of 3D content creation by focusing on natural language interaction and collaborative workflows. Rather than requiring users to learn complex 3D modeling software or specialized prompt engineering, PageOn.ai allows creators to express their ideas conversationally and receive visual feedback immediately. This aligns perfectly with the broader trend toward democratization of creative tools and makes advanced visualization accessible to everyone, regardless of technical background.

Getting Started: A Practical Guide

Essential Tools for Beginners

If you're just starting with AI-generated 3D graphics, I recommend these beginner-friendly tools:

- Text-to-3D: Accessible platforms with user-friendly interfaces

- Image-to-3D: Tools that can convert reference photos to simple models

- Post-Processing: Basic mesh editing software for refinements

- Visualization: Real-time viewers to examine and share your creations

Step-by-Step Process for Your First AI-Generated 3D Model

Beginner's Workflow for AI 3D Creation

```mermaid
flowchart TD
    A[Define Your Goal] --> B[Choose Input Type]
    B --> C1[Text Description]
    B --> C2[Reference Image]
    C1 --> D[Write Clear Prompt]
    C2 --> E[Prepare Reference]
    D --> F[Generate Initial Model]
    E --> F
    F --> G[Evaluate Results]
    G --> H{Satisfied?}
    H -->|No| I[Refine Prompt/Image]
    I --> F
    H -->|Yes| J[Download & Post-Process]
    J --> K[Final Use/Export]
    style A fill:#FF8000,stroke:#333,stroke-width:2px
    style K fill:#FF8000,stroke:#333,stroke-width:2px
```

Follow these steps for your first AI-generated 3D model:

- Define your goal: Start with a clear idea of what you want to create

- Choose your input method: Text description or reference image

- Prepare your input: Write a detailed prompt or select a good reference image

- Generate your model: Use your chosen AI tool to create the initial 3D asset

- Evaluate and iterate: Assess the result and refine as needed

- Export and post-process: Download your model and make any necessary adjustments

- Use in your project: Integrate the final asset into your intended application
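The generate, evaluate, and refine loop in these steps can be written as a small driver function. This is only a sketch. `generate`, `evaluate`, and `refine` are hypothetical callables that stand in for whatever tool API and review process you use. The attempt cap stops the loop from running, and spending generation credits, indefinitely.

```python
from typing import Callable, List, Optional, Tuple


def iterate_generation(
    prompt: str,
    generate: Callable[[str], str],  # prompt -> path of generated model (hypothetical)
    evaluate: Callable[[str], Tuple[bool, str]],  # model path -> (satisfied?, feedback)
    refine: Callable[[str, str], str],  # (prompt, feedback) -> revised prompt
    max_attempts: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Run the generate/evaluate/refine loop; return the accepted model and prompt history."""
    history: List[str] = []
    for _ in range(max_attempts):
        history.append(prompt)  # every prompt tried, for your prompt library
        model = generate(prompt)
        satisfied, feedback = evaluate(model)
        if satisfied:
            return model, history  # ready to download and post-process
        prompt = refine(prompt, feedback)
    return None, history  # no acceptable result: revisit the goal or input type
```

Returning the prompt history alongside the result makes it easy to keep a record of the wording that finally worked.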

Resources for Learning and Skill Development

To continue developing your AI 3D creation skills, I recommend these resources:

- Online communities dedicated to AI-generated 3D art

- Tutorial channels focusing on prompt engineering for 3D

- Documentation from AI tool providers

- Basic 3D modeling courses to understand fundamentals

- Forums where creators share techniques and workflows

The field is evolving rapidly, so engaging with active communities is one of the best ways to stay current with new techniques and tools.

Building a Portfolio of AI-Assisted 3D Work

As you develop your skills, consider these approaches to building a compelling portfolio:

- Focus on a specific style or niche to develop a recognizable aesthetic

- Document your process, including prompts and refinement steps

- Showcase before-and-after comparisons of AI generation and your enhancements

- Create a series of related models to demonstrate consistency and range

- Include practical applications of your models in real contexts

A thoughtfully curated portfolio can help you stand out in this emerging field, whether you're seeking clients, collaborators, or employment opportunities.

PageOn.ai is an invaluable tool for documenting and presenting your 3D creation process visually. You can create step-by-step visual guides that show your workflow from initial concept to final model, complete with annotations and explanations. This makes it easy to share your process with clients or collaborators who may not understand the technical aspects of 3D creation but need to see how their ideas are being transformed into visual assets.

Transform Your Visual Expressions with PageOn.ai

Ready to create stunning 3D visuals without the technical complexity? PageOn.ai's intuitive, conversation-based approach makes it easy to bring your ideas to life and communicate complex concepts clearly.

Embracing the AI-Powered 3D Future

As we've explored throughout this guide, AI is fundamentally transforming how we create and work with 3D graphics. From rapid prototyping in game development to architectural visualization and beyond, the barriers to creating compelling 3D content are falling away.

The combination of AI-generated foundations with human creativity and refinement represents the most powerful approach available today. By understanding the strengths and limitations of current AI tools, we can leverage them effectively while continuing to apply our unique human perspective and artistic judgment.

As these technologies continue to evolve, the democratization of 3D creation will accelerate, bringing new voices and visions into the world of 3D design. PageOn.ai stands at the forefront of this transformation, making complex visual expression accessible to everyone through intuitive, conversation-based creation.

Whether you're a professional designer looking to streamline your workflow or someone with creative ideas but no technical 3D skills, the AI-powered future of 3D graphics creation is here—and it's more accessible than ever before.
