Beyond the Numbers: A Practical Guide to Selecting AI Tools That Actually Deliver


AI benchmarks dominate headlines but often fail to predict real-world success. This guide shows how to build evaluation frameworks that assess AI tools on practical utility, sustained performance, and genuine impact on your workflow.

The Great Disconnect Between Benchmarks and Reality

Traditional AI benchmarks have created a dangerous illusion of progress. Models achieve impressive scores on standardized tests, yet they often stumble when faced with the messy, unpredictable nature of real-world applications. The result is a crisis of expectations in which highly benchmarked tools regularly underperform in practice.

The fundamental issue lies in how benchmarks are designed. They evaluate AI systems in controlled, sanitized environments that bear little resemblance to the chaotic, context-rich scenarios where these tools must actually function. Recent research highlights the growing obsolescence of traditional benchmarks, emphasizing the need for practical utility assessments that bridge the gap between technical performance and everyday functionality.

Why Benchmarks Mislead Decision Makers

- Marketing-driven metrics prioritize impressive numbers over practical utility
- Controlled testing environments eliminate the variables that matter most in real usage
- Static evaluation criteria fail to capture dynamic, evolving user needs
- Benchmark gaming incentivizes optimization for tests rather than user value

The Performance Reality Gap

How benchmark scores translate to real-world effectiveness

graph TD
A[AI Tool Benchmark Score: 95%] --> B{Real-World Application}
B --> C[Complex Context: 70%]
B --> D[Edge Cases: 45%]
B --> E[User Integration: 60%]
B --> F[Sustained Performance: 55%]
C --> G[Actual Utility: 58%]
D --> G
E --> G
F --> G
style A fill:#ff8000,stroke:#333,stroke-width:2px,color:#fff
style G fill:#e74c3c,stroke:#333,stroke-width:2px,color:#fff
style B fill:#3498db,stroke:#333,stroke-width:2px,color:#fff
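The 58% "Actual Utility" figure in the diagram appears to be the unweighted average of the four real-world factor scores. The diagram does not state its aggregation rule, so the equal weighting in this small Python sketch is an assumption. The function name `actual_utility` is illustrative only.

```python
# Percent scores copied from the diagram above.
factor_scores = {
    "complex_context": 70,
    "edge_cases": 45,
    "user_integration": 60,
    "sustained_performance": 55,
}
benchmark_score = 95


def actual_utility(scores):
    """Unweighted mean of the factor scores (an assumed aggregation rule)."""
    return sum(scores.values()) / len(scores)


utility = actual_utility(factor_scores)
gap = benchmark_score - utility
print(f"utility={utility:.1f}%, gap vs. benchmark={gap:.1f} points")
```

In your own evaluation you would likely weight the factors by how much each one matters to your workflow rather than averaging them equally.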

Consider the case of a highly benchmarked language model that achieved 96% accuracy on reading comprehension tests but struggled to maintain coherent conversations beyond three exchanges in customer service applications. The benchmark measured isolated question-answering capability, but real-world performance required contextual memory, emotional intelligence, and graceful error handling, none of which were evaluated.

Building Your Personal AI Testing Framework

The solution to benchmark inadequacy isn't better benchmarks—it's personalized evaluation frameworks that reflect your specific needs and contexts. Building a robust testing system requires developing task-specific criteria, creating realistic test scenarios, and establishing metrics that correlate with actual outcomes.

Essential Framework Components

- 15-20 real-world test scenarios from your actual workflow
- Baseline performance metrics tied to business outcomes
- Edge case documentation and failure mode analysis
- Integration complexity and learning curve assessments

PageOn.ai Integration Benefits

- Vibe Creation for prototyping tool combinations
- Visual comparison matrices for multiple AI tools
- Dynamic testing scenarios with real-time updates
- Collaborative evaluation with team feedback loops

Your testing framework should evolve continuously. Start with a core set of tasks that represent 80% of your AI tool usage, then gradually expand to cover edge cases and emerging needs. Document not just what works, but why it works and under what conditions it fails. This approach aligns with current AI tool trends for 2025, which emphasize practical utility over theoretical performance.
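One way to make such a framework concrete is a small scenario harness. The Python sketch below is only an illustration: `Scenario`, `evaluate`, and `stub_tool` are hypothetical names, the three scenarios are invented placeholders, and `stub_tool` stands in for whatever AI tool you are actually testing. It assumes the scenario list is non-empty and contains at least one core (non-edge-case) task.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True when the tool's output is acceptable
    is_edge_case: bool = False


def evaluate(tool: Callable[[str], str], scenarios: list) -> dict:
    """Run every scenario and report pass rates plus the failures to document."""
    failures = []
    for s in scenarios:
        try:
            passed = s.check(tool(s.prompt))
        except Exception:
            passed = False  # a crash is also a failure mode worth recording
        if not passed:
            failures.append(s.name)

    core = [s for s in scenarios if not s.is_edge_case]
    core_passed = [s for s in core if s.name not in failures]
    return {
        "pass_rate": (len(scenarios) - len(failures)) / len(scenarios),
        "core_pass_rate": len(core_passed) / len(core),
        "failures": failures,
    }


def stub_tool(prompt: str) -> str:
    """Placeholder for a real AI tool call; it only uppercases the prompt."""
    return prompt.upper()


scenarios = [
    Scenario("summarize ticket", "refund request", lambda out: "REFUND" in out),
    Scenario("draft reply", "late delivery", lambda out: "DELIVERY" in out),
    Scenario("empty input", "", lambda out: len(out) > 0, is_edge_case=True),
]
report = evaluate(stub_tool, scenarios)
print(report)
```

Keeping core tasks and edge cases in the same list, separated by a flag, lets you start with the core set and add edge cases later without changing the harness.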

[Chart: Personal Testing vs. Standard Benchmarks. Accuracy in predicting real-world AI tool performance.]

Leveraging PageOn.ai for Framework Development

PageOn.ai's Vibe Creation feature enables you to prototype and test different AI tool combinations in realistic scenarios. Rather than evaluating tools in isolation, you can create comprehensive testing environments that mirror your actual workflow complexity, leading to more accurate performance predictions.

The Multi-Model Reality: Why No Single Tool Rules Them All

One of the most liberating realizations in AI tool selection is that there is no "best" model—every AI tool excels at different tasks and fails in predictable ways. This understanding shifts the focus from finding the perfect solution to building a diverse toolkit that can adapt to varying requirements and contexts.

This multi-model approach reflects real-world usage patterns where professionals seamlessly switch between different AI writing tools based on task requirements, audience needs, and output formats. The key is mapping tool strengths to specific use cases rather than expecting universal excellence.

| AI Tool Category | Optimal Use Cases | Common Limitations | Integration Strategy |
|---|---|---|---|
| Large Language Models | Complex reasoning, content creation, code generation | Hallucination, outdated information, consistency | Primary for creative tasks, verify factual claims |
| Specialized Coding AI | Code completion, debugging, refactoring | Limited domain knowledge, security oversights | Development acceleration, human code review |
| Visual AI Tools | Image generation, design assistance, visual analysis | Style consistency, text rendering, brand alignment | Concept development, iterate with human designers |
| Data Analysis AI | Pattern recognition, statistical analysis, reporting | Causal interpretation, domain context, bias detection | Exploratory analysis, validate with domain experts |

Building an effective AI toolkit requires understanding these complementary strengths and developing workflows that leverage each tool's optimal performance zone. Visual AI tools, for example, excel at rapid concept generation but require human oversight for brand consistency and strategic alignment.
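The mapping in the table can be written down as a simple lookup so that each task is routed to a tool category along with the human safeguard the table pairs with it. This Python sketch is illustrative: the `TOOLKIT` structure and the `route` function are hypothetical, and the use cases and safeguards are copied from the table.

```python
# Use cases and safeguards are taken from the table above.
TOOLKIT = {
    "large_language_model": {
        "use_cases": {"complex reasoning", "content creation", "code generation"},
        "safeguard": "verify factual claims",
    },
    "specialized_coding_ai": {
        "use_cases": {"code completion", "debugging", "refactoring"},
        "safeguard": "human code review",
    },
    "visual_ai_tool": {
        "use_cases": {"image generation", "design assistance", "visual analysis"},
        "safeguard": "iterate with human designers",
    },
    "data_analysis_ai": {
        "use_cases": {"pattern recognition", "statistical analysis", "reporting"},
        "safeguard": "validate with domain experts",
    },
}


def route(task: str):
    """Return (tool category, safeguard) for a known task, else None."""
    for category, info in TOOLKIT.items():
        if task in info["use_cases"]:
            return category, info["safeguard"]
    return None  # no category claims this task; decide manually


print(route("debugging"))
print(route("tax advice"))
```

Returning `None` for unmapped tasks makes gaps in the toolkit visible instead of silently sending everything to one default tool.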

Integrated AI Tool Workflow

How different AI tools work together in a typical project

flowchart TD
A[Project Brief] --> B[Research AI]
B --> C[Content Planning AI]
C --> D[Writing AI]
D --> E[Visual AI]
E --> F[Code Generation AI]
F --> G[Quality Assurance AI]
G --> H[Final Review]
B --> I[Data Analysis AI]
I --> C
D --> J[Translation AI]
J --> E
style A fill:#3498db,stroke:#333,stroke-width:2px,color:#fff
style H fill:#27ae60,stroke:#333,stroke-width:2px,color:#fff
style B fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style C fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style D fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style E fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style F fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style G fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style I fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
style J fill:#FF8000,stroke:#333,stroke-width:1px,color:#fff
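The workflow above is a dependency graph, so a valid execution order can be derived mechanically. As a minimal sketch, the Python standard library's `graphlib.TopologicalSorter` (Python 3.9+) can order the stages. The edges are copied from the diagram, and the `predecessors` dictionary name is just an illustrative choice.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages that must finish before it starts.
predecessors = {
    "Research AI": {"Project Brief"},
    "Data Analysis AI": {"Research AI"},
    "Content Planning AI": {"Research AI", "Data Analysis AI"},
    "Writing AI": {"Content Planning AI"},
    "Translation AI": {"Writing AI"},
    "Visual AI": {"Writing AI", "Translation AI"},
    "Code Generation AI": {"Visual AI"},
    "Quality Assurance AI": {"Code Generation AI"},
    "Final Review": {"Quality Assurance AI"},
}

order = list(TopologicalSorter(predecessors).static_order())
for step, stage in enumerate(order, start=1):
    print(step, stage)
```

Because the data analysis and translation branches both rejoin the main path, this particular graph has only one valid ordering. `TopologicalSorter` raises `CycleError` if an added edge ever makes the workflow circular.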

Using PageOn.ai's AI Blocks for Hybrid Workflows

PageOn.ai's AI Blocks feature enables you to create hybrid workflows that combine multiple AI tools effectively. Instead of managing separate tools in isolation, you can design integrated processes where each AI component contributes its unique strengths to a cohesive output, dramatically improving overall effectiveness.

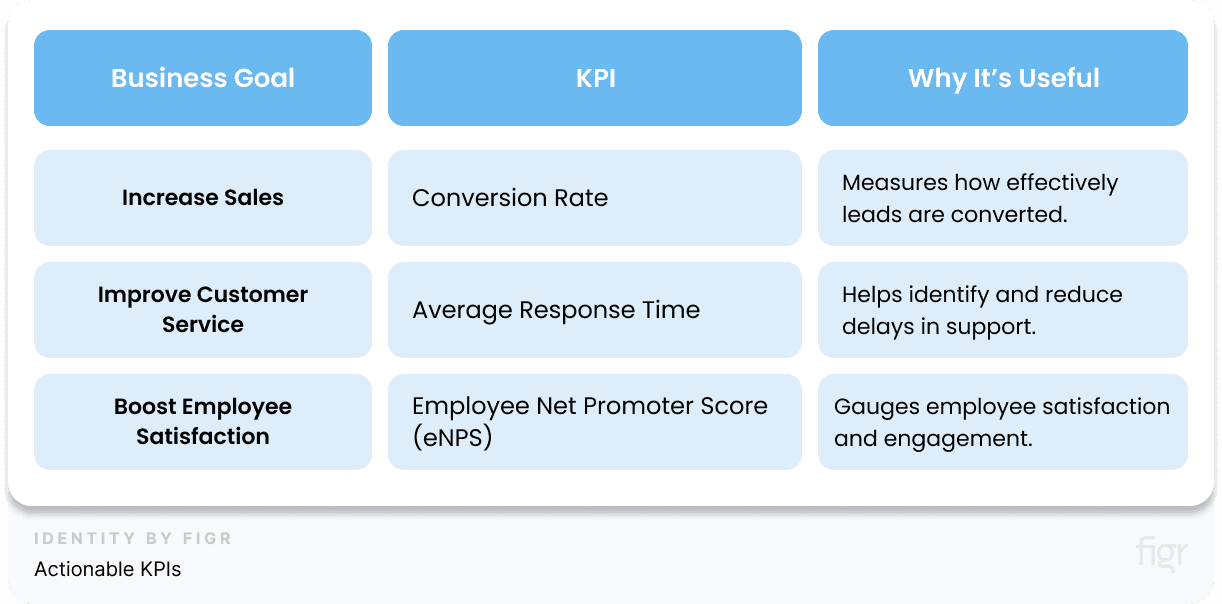

Practical Utility Assessments That Actually Matter

Moving beyond technical performance metrics requires a fundamental shift toward effectiveness measurements that capture real value creation. Practical utility assessments evaluate AI systems based on time saved, quality improvements, user satisfaction, and long-term performance trends rather than isolated task performance.

These assessments bridge the gap between technical capability and business value, focusing on outcomes that stakeholders can understand and measure. They emphasize sustained performance over peak performance, integration ease over raw capability, and user adoption over theoretical potential.

[Chart: Practical vs. Technical Performance Metrics. Correlation with actual business value creation.]

Key Effectiveness Measurements

- Time-to-value: How quickly users achieve meaningful results
- Quality consistency: Performance stability across different contexts
- User satisfaction: Subjective experience and adoption willingness
- Error recovery: Graceful handling of unexpected situations

Long-term Performance Tracking

- Performance degradation patterns over time
- Adaptation to changing user requirements
- Integration compatibility with evolving systems
- Total cost of ownership including hidden expenses
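Two of the measurements above, quality consistency and performance degradation, are straightforward to quantify once you log scores. The Python sketch below is one possible approach, not a prescribed method. It assumes quality scores between 0 and 1, uses the coefficient of variation for consistency, and fits a least-squares line for the trend. The function names and sample numbers are hypothetical.

```python
from statistics import mean, pstdev


def consistency(scores_by_context: dict) -> float:
    """Coefficient of variation across contexts; lower means more consistent."""
    values = list(scores_by_context.values())
    return pstdev(values) / mean(values)


def trend_slope(weekly_scores: list) -> float:
    """Least-squares slope per week; a negative value suggests degradation."""
    xs = list(range(len(weekly_scores)))
    x_bar, y_bar = mean(xs), mean(weekly_scores)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, weekly_scores))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


# Invented sample data for illustration only.
by_context = {"customer_support": 0.9, "internal_docs": 0.7}
weekly = [0.90, 0.88, 0.86, 0.84]

print(f"consistency (CV): {consistency(by_context):.3f}")
print(f"weekly trend: {trend_slope(weekly):+.3f} per week")
```

A slope alone can be noisy over a few weeks, so it is best read alongside the qualitative feedback discussed in this section.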

Creating effective feedback loops requires systematic data collection that captures both quantitative metrics and qualitative insights. Users often develop workarounds for AI tool limitations that aren't reflected in usage statistics, making regular feedback sessions and observational studies crucial for understanding true performance.

Utilizing PageOn.ai's Deep Search for Performance Data

PageOn.ai's Deep Search capability can gather and visualize real user experiences and performance data from across the web, helping you understand how AI tools perform in diverse real-world contexts. This aggregated intelligence provides insights that no single benchmark or internal test can capture.

Real-World Performance Indicators to Track

Effective AI tool evaluation requires tracking indicators that correlate with sustained success rather than initial impressions. These metrics should capture not just what an AI tool can do, but how well it integrates into existing workflows and maintains performance under real-world conditions.

[Chart: Critical Performance Indicators by Priority. Ranked by correlation with long-term AI tool success.]

Adoption Metrics

- Initial user engagement rates
- Sustained usage over 90+ days
- Feature utilization depth
- User-driven expansion to new use cases

Reliability Indicators

- Performance variance across contexts
- Graceful degradation under stress
- Recovery time from failures
- Consistency across user types

Integration Success

- Workflow integration complexity
- System compatibility maintenance
- Data portability and standard compliance
- Update and migration smoothness
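As an example of turning one of these indicators into a number, the Python sketch below estimates sustained usage from each user's first and last recorded activity. It is a simplification under stated assumptions: a user counts as "sustained" if those two dates are at least 90 days apart, which ignores gaps in between. The function name, user names, and dates are invented for illustration.

```python
from datetime import date


def sustained_usage_rate(usage: dict, min_days: int = 90) -> float:
    """Share of users whose first and last use are at least min_days apart."""
    if not usage:
        return 0.0
    sustained = sum(
        1 for first, last in usage.values() if (last - first).days >= min_days
    )
    return sustained / len(usage)


# Hypothetical usage log: user -> (first use, most recent use).
usage = {
    "ana": (date(2025, 1, 6), date(2025, 5, 2)),
    "ben": (date(2025, 1, 6), date(2025, 1, 20)),
    "chi": (date(2025, 2, 1), date(2025, 5, 20)),
}

print(f"sustained usage: {sustained_usage_rate(usage):.0%}")
```

A stricter version would also require activity in each intervening month, which separates steady users from those who tried the tool once and came back much later.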

Cost-effectiveness analysis must include hidden implementation costs that traditional evaluations miss. These include training time, integration effort, ongoing maintenance, and opportunity costs from tool switching. A tool with lower upfront costs but high hidden expenses often proves more expensive than premium alternatives with smoother integration paths.

Total Cost of Ownership Analysis

Visible and hidden costs in AI tool adoption

graph TD
A[AI Tool Selection] --> B[Visible Costs]
A --> C[Hidden Costs]
B --> D[License/Subscription: $50/month]
B --> E[Setup Fee: $200]
C --> F[Training Time: $2,400]
C --> G[Integration Work: $3,200]
C --> H[Maintenance: $800/month]
C --> I[Switching Costs: $5,000]
D --> J[Total 12-month Cost excl. switching: $16,000]
E --> J
F --> J
G --> J
H --> J
style A fill:#3498db,stroke:#333,stroke-width:2px,color:#fff
style B fill:#27ae60,stroke:#333,stroke-width:2px,color:#fff
style C fill:#e74c3c,stroke:#333,stroke-width:2px,color:#fff
style J fill:#FF8000,stroke:#333,stroke-width:2px,color:#fff
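The 12-month arithmetic behind a cost breakdown like this is easy to script, which helps when comparing several tools. The Python sketch below sums the line items shown in the diagram. Switching costs are kept separate because the diagram does not connect them to the total. The variable and function names are illustrative.

```python
MONTHS = 12

# Line items from the diagram, in US dollars.
visible_one_time = {"setup_fee": 200}
visible_monthly = {"license": 50}
hidden_one_time = {"training_time": 2400, "integration_work": 3200}
hidden_monthly = {"maintenance": 800}
switching_cost = 5000  # only incurred if you later move to another tool


def period_cost(one_time: dict, monthly: dict, months: int) -> int:
    """One-time items plus recurring items over the given number of months."""
    return sum(one_time.values()) + months * sum(monthly.values())


visible = period_cost(visible_one_time, visible_monthly, MONTHS)
hidden = period_cost(hidden_one_time, hidden_monthly, MONTHS)
total = visible + hidden

print(f"visible: ${visible:,}")
print(f"hidden:  ${hidden:,}")
print(f"total:   ${total:,}")
print(f"total if you switch away: ${total + switching_cost:,}")
```

With these inputs the hidden costs are 19 times the visible ones, which is the point the surrounding text makes about evaluations that only look at the subscription price.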

Performance consistency across different user types and contexts often reveals more about an AI tool's real-world viability than peak performance metrics. Tools that work well for power users but frustrate casual users typically struggle with broad adoption, regardless of their technical capabilities.

Making Informed Decisions in the Post-Benchmark Era

The post-benchmark era requires decision frameworks that prioritize practical outcomes over theoretical performance. This shift demands new methodologies for evaluation, community-driven feedback systems, and iterative testing processes that evolve with changing needs and contexts.

Building effective decision frameworks requires balancing innovation adoption with proven reliability. Early adoption of cutting-edge tools can provide competitive advantages, but it also introduces risks that must be carefully managed through incremental rollouts and fallback strategies.

Decision Framework Components

Evaluation Criteria

- Task-specific performance validation
- Integration complexity assessment
- Long-term viability indicators
- Community feedback aggregation

Risk Management

- Incremental deployment strategies
- Fallback option preparation
- Performance monitoring systems
- Exit strategy planning
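Incremental deployment with a fallback can be sketched in a few lines. The Python example below is one hedged illustration, not a production design. It assigns each user to a stable bucket by hashing the user ID, sends only the chosen percentage to the new tool, and falls back to the existing process on any error. The names `in_rollout`, `run_with_fallback`, and the stand-in tools are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically place a user in one of 100 buckets; expose `percent` of them."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def run_with_fallback(user_id, task, new_tool, fallback, percent):
    """Return (result, path taken) so monitoring can count each path."""
    if in_rollout(user_id, percent):
        try:
            return new_tool(task), "new_tool"
        except Exception:
            return fallback(task), "fallback_after_error"
    return fallback(task), "fallback"


# Stand-ins for a real new AI tool and the existing process.
def new_tool(task):
    return f"AI draft for {task}"


def broken_tool(task):
    raise RuntimeError("service unavailable")


def existing_process(task):
    return f"manual template for {task}"


print(run_with_fallback("user-42", "weekly report", new_tool, existing_process, 100))
print(run_with_fallback("user-42", "weekly report", broken_tool, existing_process, 100))
print(run_with_fallback("user-42", "weekly report", new_tool, existing_process, 0))
```

Hashing the user ID keeps each user on the same path between sessions, and raising `percent` in steps gives you the incremental rollout while the returned path label feeds the monitoring system.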

Community-driven evaluation networks provide insights that no individual assessment can capture. These networks aggregate experiences across diverse use cases, industries, and user types, revealing patterns that might not be apparent in isolated testing. However, they require careful curation to keep the signal-to-noise ratio high and to prevent gaming.

[Chart: Decision Framework Effectiveness Over Time. Success rate of AI tool selections using different approaches.]

Iterative testing processes that evolve with your needs ensure that evaluation frameworks remain relevant as both AI tools and business requirements change. Regular framework updates, based on lessons learned and emerging patterns, prevent evaluation drift and maintain decision quality over time.

Leveraging PageOn.ai's Agentic Capabilities

PageOn.ai's Agentic capabilities can transform scattered tool evaluations into comprehensive, visual decision-making frameworks. By automatically aggregating performance data, user feedback, and comparative analyses, these agents create dynamic dashboards that evolve with your evaluation criteria and provide real-time insights for informed decision-making.


The Future of AI Tool Evaluation

The movement away from benchmark-driven evaluation represents more than a methodological shift—it's a maturation of the AI industry toward practical utility and sustainable value creation. As organizations develop more sophisticated evaluation frameworks, the gap between AI marketing claims and real-world performance will become increasingly apparent.

This transformation requires tools that can visualize complex performance relationships, aggregate diverse feedback sources, and adapt to evolving requirements. PageOn.ai's platform addresses these needs by providing comprehensive frameworks for AI tool evaluation that prioritize practical outcomes over theoretical benchmarks.

The future belongs to organizations that can effectively navigate the multi-tool reality, building diverse AI capabilities that complement each other while maintaining focus on measurable business outcomes. Success in this environment requires both sophisticated evaluation methodologies and the tools to implement them effectively.

