Visualizing the Scale: Google's 980 Trillion Monthly Tokens

Unpacking the unprecedented scale of AI processing and what it means for the future of information visualization

The Unprecedented Scale of Google's AI Operations

I've been closely following the AI industry for years, and even I was stunned by Google's recent announcement. Processing 980 trillion tokens monthly represents a scale of AI operations that was unimaginable just a few years ago. This isn't just a big number—it's a fundamental shift in how we should think about AI's integration into our digital infrastructure.

To put this in perspective, Google is now processing more tokens each month than are contained in Common Crawl (approximately 100 trillion tokens), one of the primary datasets used to train foundation models. We're witnessing AI systems that process and generate more content than what was used to train them in the first place.

This translates to roughly 380 million tokens processed every second across Google's AI services (980 trillion tokens divided by the roughly 2.6 million seconds in a 30-day month). For context, Google handles roughly 100,000 searches per second globally. If we were to attribute all token processing to search (which isn't accurate but helps visualize the scale), each search would account for about 3,800 tokens of AI processing.
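
The per-second figure can be sanity-checked with a few lines of arithmetic. The sketch below assumes a 30-day month and takes the 980 trillion monthly tokens and 100,000 searches per second quoted above at face value; the constant names are illustrative only.

```python
# Back-of-the-envelope check; the inputs are the figures quoted in the article.
MONTHLY_TOKENS = 980e12                 # 980 trillion tokens per month
SEARCHES_PER_SECOND = 100_000           # rough global search rate
SECONDS_PER_MONTH = 30 * 24 * 60 * 60   # assumption: a 30-day month

tokens_per_second = MONTHLY_TOKENS / SECONDS_PER_MONTH
tokens_per_search = tokens_per_second / SEARCHES_PER_SECOND

print(f"{tokens_per_second / 1e6:.0f} million tokens per second")
print(f"{tokens_per_search:,.0f} tokens per search (if all usage were search)")
```

Under these assumptions the result comes out near 380 million tokens per second, or a little under 4,000 tokens per search.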

Understanding Tokens and Their Significance

When I talk about AI processing "tokens," I'm referring to the fundamental units that AI models use to process language. For models like Gemini, a token is equivalent to about 4 characters, and 100 tokens typically represent about 60-80 English words.

flowchart TD

A[Input Text] --> B[Tokenization Process]

B --> C[Token 1]

B --> D[Token 2]

B --> E[Token 3]

B --> F[...]

B --> G[Token n]

C --> H[AI Processing]

D --> H

E --> H

F --> H

G --> H

H --> I[Output Text]

classDef process fill:#FF8000,color:white,stroke:#FF8000

classDef token fill:#E6F2FF,stroke:#3399FF

classDef io fill:#FFF0E6,stroke:#FF8000

class A,I io

class B,H process

class C,D,E,F,G token

Diagram: How text is processed into tokens for AI computation

Token counts correlate directly with computational resources and operational costs. Processing nearly 1 quadrillion tokens monthly is an industrial-scale AI operation, one that requires massive data centers, specialized hardware, and sophisticated software optimizations.

Token Processing by the Numbers:

- 1 token ≈ 4 characters

- 100 tokens ≈ 60-80 English words

- 1 page of text ≈ 500-800 tokens

- 1 trillion tokens ≈ ~1.5 billion pages of text (about 5 million books, assuming 300 pages per book)

- 980 trillion tokens ≈ processing the equivalent of the entire Library of Congress ~800 times monthly
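
These rules of thumb are easy to turn into a rough estimator. The sketch below is a heuristic based only on the ratios listed above, not a real tokenizer; the function names and the 650 tokens-per-page midpoint are my own assumptions.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb from the list above: 1 token ≈ 4 characters


def estimate_tokens(text: str) -> int:
    """Estimate a token count from character length (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_pages(tokens: float, tokens_per_page: int = 650) -> float:
    """Convert tokens to pages; 650 is the midpoint of the 500-800 range above."""
    return tokens / tokens_per_page


sample = "Google now processes nearly one quadrillion tokens every month."
print(estimate_tokens(sample))
print(f"{estimate_pages(1e12) / 1e9:.2f} billion pages per trillion tokens")
```

Actual counts depend on the model's tokenizer and the language of the text, so treat the output as an order-of-magnitude guide.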

This massive scale changes how we should think about AI integration. We're no longer talking about occasional AI assistance—we're looking at AI systems that are constantly processing vast amounts of information, learning from interactions, and generating new content at unprecedented rates.

The Competitive AI Infrastructure Landscape

Google's token volume significantly outpaces that of its competitors. For comparison, Microsoft Azure reported processing approximately 50 trillion tokens monthly in its Q3 2025 earnings call, roughly 1/20th of Google's current volume. This difference highlights the infrastructure gap that exists even among tech giants.

This advantage stems from Google's decade-long investment in building a complete AI stack. As Sundar Pichai noted during the earnings call, "For the last 10 years, we've deliberately and meticulously built every layer of the AI stack, which has positioned us so well today."

The scale of token processing directly impacts what's possible with AI services. Higher processing capacity enables more complex reasoning tasks, more sophisticated responses, and better handling of multimodal inputs. When I work with PageOn.ai's visual structuring tools, I appreciate how they help organize the increasingly complex outputs that these massive AI systems can generate.

Consumer-Facing Applications Driving Token Growth

The explosion in token processing isn't happening in a vacuum—it's being driven by rapidly expanding consumer adoption. Google's AI Overviews now reaches 2 billion monthly users across 200 countries and territories, supporting 40 languages. This represents massive global penetration for an AI feature that was only introduced relatively recently.

On the enterprise side, Google announced that more than 85,000 businesses are currently using Gemini, representing 35-fold year-over-year growth. This rapid enterprise adoption suggests that businesses are finding tangible value in AI integration across their operations.

The expansion of AI into video generation with tools like Veo 3 is particularly notable, as video processing requires significantly more computational resources than text. When I consider that over 70 million videos have already been generated, I can see how multimodal AI is contributing substantially to the overall token processing volume.

Business Implications of Massive-Scale AI Processing

The scale of Google's AI operations has profound implications for businesses integrating AI into their workflows. We're moving from simple text completion tasks that might use a few hundred tokens to complex reasoning tasks that can consume millions of tokens in a single interaction.

flowchart TD

A[Simple Completion] --> B[100-1,000 tokens]

C[Basic Research] --> D[1,000-10,000 tokens]

E[Complex Reasoning] --> F[10,000-100,000 tokens]

G[Deep Research] --> H[100,000-1,000,000 tokens]

I[Agent Workflows] --> J[1,000,000-10,000,000+ tokens]

classDef simple fill:#E6F2FF,stroke:#3399FF

classDef complex fill:#FFF0E6,stroke:#FF8000

classDef future fill:#FFECE6,stroke:#FF4000

class A,B,C,D simple

class E,F,G,H complex

class I,J future

Token usage by AI task complexity
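
The tiers in the diagram can be expressed as a simple lookup. This is a minimal sketch: the boundaries and labels come from the diagram, while the function name and the choice to assign a boundary value to the lower tier are my own assumptions.

```python
from bisect import bisect_left

# Upper bounds (in tokens) and labels taken from the diagram above.
# Adjacent ranges share a boundary value; this sketch assigns it to the lower tier.
TIER_UPPER_BOUNDS = [1_000, 10_000, 100_000, 1_000_000]
TIER_LABELS = [
    "Simple Completion",
    "Basic Research",
    "Complex Reasoning",
    "Deep Research",
    "Agent Workflows",
]


def classify_task(token_count: int) -> str:
    """Return the diagram tier whose token range contains token_count."""
    if token_count < 0:
        raise ValueError("token_count must be non-negative")
    return TIER_LABELS[bisect_left(TIER_UPPER_BOUNDS, token_count)]


for n in (500, 50_000, 5_000_000):
    print(n, classify_task(n))
```

A lookup like this can help teams budget for a workload before choosing a model or pricing tier.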

For content creators and businesses, this means both opportunities and challenges. On one hand, AI systems can now tackle much more sophisticated problems and generate more comprehensive outputs. On the other hand, managing the complexity of these outputs becomes increasingly difficult without proper tools.

This is where I've found AI productivity tools like PageOn.ai particularly valuable. As AI outputs grow in complexity and volume, having visual organization capabilities becomes essential rather than optional. PageOn.ai's ability to transform complex information into clear visual structures helps teams make sense of the increasingly sophisticated AI-generated content they're working with.

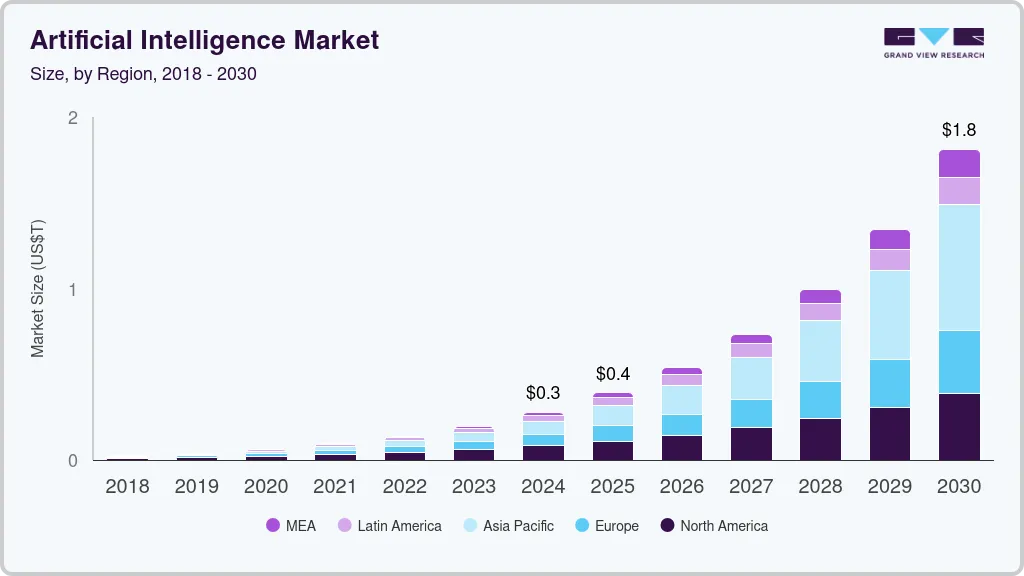

Visualizing AI's Transformation Through Data

Token processing volume provides us with a concrete metric to measure AI adoption and impact. What's particularly striking is that Google's monthly token generation now exceeds the volume of data used to train many foundation models. This represents a fundamental shift in the AI landscape.

This comparison highlights something remarkable: AI-generated content is rapidly approaching, and may soon exceed, the volume of human-generated content online. We're entering an era where AI systems are not just consuming information but are becoming primary producers of digital content.

As this transformation accelerates, the ability to structure and visualize massive amounts of information becomes crucial. I've found that Google AI search tools combined with PageOn.ai's visualization capabilities create a powerful workflow for making sense of the exponentially growing information landscape.

Future Trajectory and Implications

With monthly token processing approaching 1 quadrillion, it's clear that we're still in the early stages of AI adoption and integration. The exponential growth curve suggests that we'll see continued acceleration rather than a plateau in the near term.

Google's significant infrastructure investments position it to maintain leadership in AI processing capabilities, but the US-China AI race and competition from other tech giants ensure continued innovation across the industry.

As AI systems become more capable, they'll be applied to increasingly complex problems that require even more computational resources. This creates a virtuous cycle of investment and innovation that will further accelerate AI adoption across industries. In this environment, PageOn.ai's focus on turning fuzzy thoughts into clear visuals becomes crucial as information complexity increases exponentially.

The Technical Infrastructure Behind the Numbers

Google's AI Hypercomputer serves as the backbone for its massive token processing capability. This system combines specialized hardware like TPUs (Tensor Processing Units) and GPUs with sophisticated software optimizations to achieve unprecedented scale.

flowchart TD

A[User Requests] --> B[Load Balancers]

B --> C[Request Processing Layer]

C --> D[Model Serving Infrastructure]

D --> E[TPU Clusters]

D --> F[GPU Clusters]

D --> G[CPU Servers]

E --> H[Response Generation]

F --> H

G --> H

H --> I[Response Optimization]

I --> J[User Response]

classDef input fill:#E6F2FF,stroke:#3399FF

classDef process fill:#FFF0E6,stroke:#FF8000

classDef hardware fill:#F5F5F5,stroke:#9E9E9E

classDef output fill:#E8F5E9,stroke:#66BB6A

class A input

class J output

class B,C,D,H,I process

class E,F,G hardware

Simplified architecture of Google's AI serving infrastructure

The distributed systems architecture allows for horizontal scaling to meet growing demand. Google's data centers are optimized for AI workloads, with specialized cooling systems, power management, and network infrastructure designed to handle the unique requirements of large-scale AI processing.
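
To make the idea of horizontal scaling concrete, here is a minimal capacity-planning sketch. The function name, the headroom parameter, and every number in the example are hypothetical; none of them reflect Google's actual infrastructure.

```python
import math


def replicas_needed(demand_tps: float, per_replica_tps: float, headroom: float = 0.3) -> int:
    """Replicas required to serve demand_tps tokens per second with spare capacity.

    headroom=0.3 keeps 30% of each replica's throughput in reserve.
    """
    if per_replica_tps <= 0 or not 0 <= headroom < 1:
        raise ValueError("per_replica_tps must be > 0 and headroom in [0, 1)")
    usable_tps = per_replica_tps * (1 - headroom)
    return math.ceil(demand_tps / usable_tps)


# Hypothetical numbers for illustration only, not Google's real figures.
print(replicas_needed(demand_tps=1_000_000, per_replica_tps=5_000))
print(replicas_needed(demand_tps=2_000_000, per_replica_tps=5_000))
```

The point of the sketch is that doubling demand roughly doubles the replica count, which is what lets a horizontally scaled system grow by adding machines rather than redesigning each one.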

Understanding this infrastructure helps contextualize the resources needed for enterprise AI adoption. While few organizations will need Google's scale, the architectural principles of distributed processing, specialized hardware, and optimized software apply at all levels of AI implementation. For businesses looking to adopt AI, tools like Google Document AI provide access to these capabilities without requiring massive infrastructure investments.

Practical Applications for Businesses and Content Creators

Massive token processing enables more sophisticated AI assistance for content creation and analysis. Organizations can leverage these capabilities through APIs and cloud services to enhance their operations without building infrastructure from scratch.

- Content Creation: AI systems can now generate complete articles, reports, and multimedia content with minimal human guidance.

- Data Analysis: Complex datasets can be processed and visualized automatically, surfacing insights that would take humans days or weeks to discover.

- Customer Interaction: AI agents can handle increasingly complex customer queries across multiple languages and domains.

I've found that PageOn.ai's visual organization tools help teams make sense of the exponential growth in AI-generated content. When AI systems are producing vast amounts of information, having tools to structure and visualize that information becomes essential.

Content strategies must adapt to a world where AI systems are processing and generating content at unprecedented scale. This means focusing on higher-level planning and creative direction while leveraging AI for implementation and optimization. For those looking to get started, resources like the Google AI Foundational Course provide an excellent introduction to the capabilities and limitations of current AI systems.

Ethical and Sustainability Considerations

Processing nearly 1 quadrillion tokens monthly raises important questions about energy consumption and environmental impact. The power requirements for this level of AI processing are substantial, making data center efficiency and renewable energy sources critical considerations.

The environmental impact of AI infrastructure must be balanced against productivity benefits. While AI systems consume significant resources, they also enable optimizations in other areas—from more efficient logistics to reduced commuting through remote work tools—that can result in net environmental benefits.

PageOn.ai's efficiency in structuring information can help reduce unnecessary token processing through clearer visualization. When information is well-organized and clearly presented, it reduces the need for repetitive queries and redundant processing, contributing to more sustainable AI usage.

Transform Your Visual Expressions with PageOn.ai

In a world where AI is processing nearly 1 quadrillion tokens monthly, making sense of complex information is more challenging than ever. PageOn.ai helps you transform fuzzy thoughts into crystal-clear visual expressions that communicate your ideas effectively.
