Visualizing the Unseen: How AI is Transforming Bioacoustic Research in Animal Communication

Exploring the revolutionary intersection of artificial intelligence, visualization, and animal language research

I've been fascinated by the rapid evolution of bioacoustics research over the past decade. What was once a field limited by manual analysis and basic recording tools has transformed into a cutting-edge discipline where AI and visualization technologies are revealing the hidden complexities of animal communication. In this article, I'll take you through this remarkable journey and show how modern visualization approaches are reshaping our understanding of the animal kingdom's rich acoustic landscape.

The Evolution of Bioacoustics: From Recording to Understanding

When I first entered the field of bioacoustics, researchers were still largely dependent on handheld recorders and manual spectrogram analysis. The journey from those early days to our current AI-powered approaches has been nothing short of revolutionary.

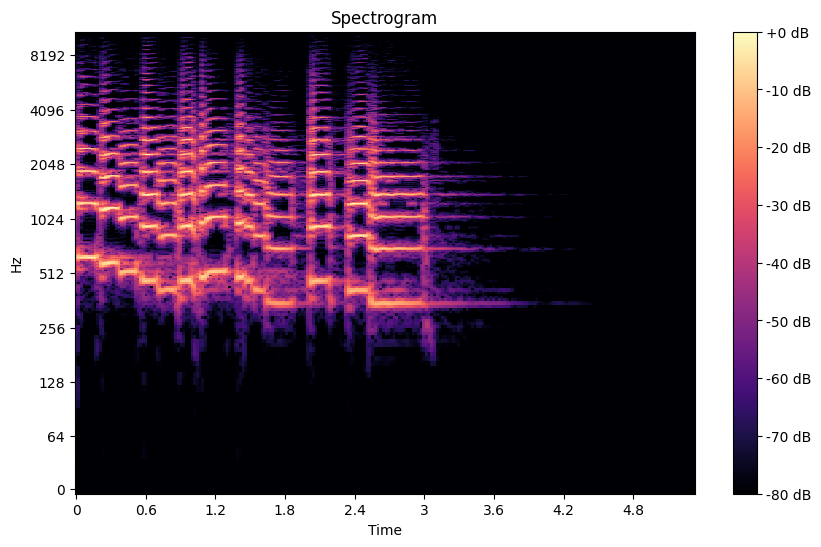

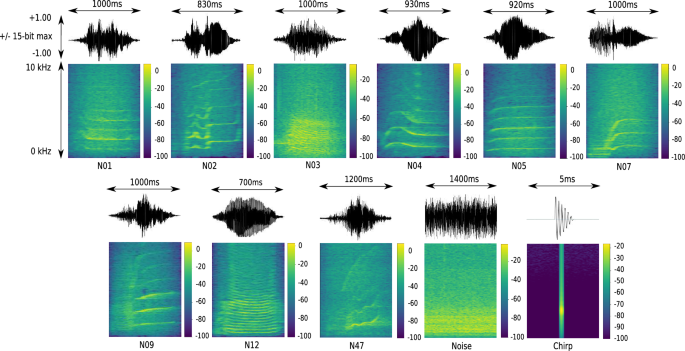

The historical limitations in capturing animal vocalizations were substantial. Early researchers could only record for short periods, often missing crucial communication events. Analysis was painstakingly manual, requiring hours to interpret even short recordings. Visualization was limited to basic waveforms and spectrograms that required significant expertise to interpret.

Today's automated sound recorders (ASRs), also called autonomous recording units (ARUs), have transformed our capabilities. These devices can be deployed in remote locations for months at a time, recording vocalizations from birds, frogs, bats, insects, and marine mammals either continuously or on a programmed schedule. As noted in recent research on multi-modal language models in bioacoustics, these technologies are enabling unprecedented data collection.

However, with this explosion in data collection capability comes a new challenge: how do we effectively visualize and interpret the massive datasets we're now collecting? Traditional visualization tools struggle to represent the nuanced complexity of bioacoustic data in ways that are intuitive and accessible to non-specialists.

[Chart: Evolution of Bioacoustic Research Methods]

The growing need for intuitive visual representations of sound patterns has become increasingly apparent as the field expands beyond academic research into practical conservation applications. I've seen firsthand how effective visualizations can bridge the gap between complex acoustic data and actionable insights for wildlife management.

Just as AI voice generators have changed how we interact with synthetic speech, similar technologies are beginning to transform how we process and understand animal vocalizations. The parallels between human speech synthesis and animal vocalization analysis are striking: both fields draw on similar AI approaches.

Current Challenges in Bioacoustic Data Interpretation

Despite our technological advances, we face significant challenges in making sense of the vast amounts of bioacoustic data we collect. In my work with research teams across different ecosystems, I've consistently encountered several key obstacles.

Information overload is perhaps the most immediate challenge. A single autonomous recording unit deployed in a rainforest can collect terabytes of audio data over a season. Managing, processing, and extracting meaningful patterns from these vast datasets requires sophisticated computational approaches that many research teams lack.
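To make the "terabytes per season" figure concrete, here is a back-of-the-envelope sketch of uncompressed audio volume for a single recorder. The sample rates, bit depth, and 90-day deployment are illustrative assumptions of mine, not figures from a specific study.

```python
def recording_size_bytes(sample_rate_hz, bit_depth, channels, days, duty_cycle=1.0):
    """Uncompressed PCM size for one recorder deployment.

    duty_cycle is the fraction of time the unit is actually recording
    (1.0 = continuous). All inputs here are illustrative assumptions.
    """
    seconds = days * 24 * 60 * 60 * duty_cycle
    bytes_per_second = sample_rate_hz * (bit_depth // 8) * channels
    return int(seconds * bytes_per_second)


# Mono, 48 kHz, 16-bit, continuous for 90 days.
mono = recording_size_bytes(48_000, 16, 1, days=90)
# Stereo, 96 kHz, 16-bit, continuous for 90 days.
stereo_hi = recording_size_bytes(96_000, 16, 2, days=90)

print(f"{mono / 1e12:.2f} TB")       # 0.75 TB
print(f"{stereo_hi / 1e12:.2f} TB")  # 2.99 TB
```

Even one unit lands in the terabyte range at higher sample rates, and a network of units multiplies that figure accordingly.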

flowchart TD
    A[Raw Audio Data] -->|Terabytes| B[Initial Processing]
    B --> C{Pattern Recognition}
    C -->|Species ID| D[Species Catalog]
    C -->|Call Types| E[Behavioral Analysis]
    C -->|Error/Noise| F[Data Cleaning]
    F -->|Feedback Loop| B
    D --> G[Population Metrics]
    E --> G
    G --> H[Conservation Insights]
    classDef challenge fill:#FFE0B2,stroke:#FF8000,stroke-width:2px
    class B,C,F challenge

Complexity is the second obstacle: translating acoustic patterns into meaningful visual representations remains difficult. Traditional spectrograms show detailed time-frequency structure but do not highlight higher-level patterns that might indicate specific behaviors or communications, which makes the data hard for non-specialists to interpret.
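For readers who have never built one, a spectrogram is just a stack of short-time Fourier transforms. The sketch below computes one from scratch with the standard library so every step is visible; it uses a naive DFT for clarity, where real pipelines would typically use an FFT library. The 8 kHz sample rate, 64-sample frames, and synthetic 1 kHz tone are assumptions chosen only to keep the example small.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Magnitude spectrogram: one row per frame, one column per frequency bin."""
    # A Hann window reduces spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        row = []
        # Naive DFT, keeping only the non-negative frequency bins.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            row.append(abs(acc))
        rows.append(row)
    return rows


# Synthetic test signal: a pure 1 kHz tone sampled at 8 kHz.
sample_rate = 8000
frame_size = 64
signal = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]

spec = spectrogram(signal, frame_size=frame_size, hop=32)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(peak_bin * sample_rate / frame_size)  # 1000.0
```

Each row is what a spectrogram image shows as one vertical slice. The representation is faithful, but nothing in it says "alarm call" or "duet", which is exactly the gap described above.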

Pattern recognition in noisy environments presents particular difficulties. Natural soundscapes contain overlapping vocalizations from multiple species, along with abiotic noise from wind, water, or human activities. Identifying species-specific vocalizations in these complex acoustic environments requires sophisticated filtering and pattern recognition capabilities.
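As a toy illustration of picking one signal out of background noise, the sketch below measures energy at a single target frequency using the Goertzel recurrence and flags frames that rise well above the median noise floor. This is a deliberately simple stand-in for the "sophisticated filtering" real systems use; the target frequency, frame size, threshold factor, and synthetic data are all my own assumptions.

```python
import math
import random
import statistics


def goertzel_power(frame, target_hz, sample_rate):
    """Power of the DFT bin nearest target_hz, via the Goertzel recurrence."""
    n = len(frame)
    k = round(n * target_hz / sample_rate)
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in frame:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_frames(samples, target_hz, sample_rate, frame_size=200, snr_factor=100):
    """Indices of frames whose target-band power exceeds snr_factor x median."""
    powers = [goertzel_power(samples[i:i + frame_size], target_hz, sample_rate)
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    noise_floor = statistics.median(powers)
    return [i for i, p in enumerate(powers) if p > snr_factor * noise_floor]


# Synthetic soundscape: broadband noise with a 1 kHz "call" in samples 800-1199.
rng = random.Random(0)
sample_rate = 8000
samples = [rng.uniform(-0.2, 0.2) for _ in range(2000)]
for t in range(800, 1200):
    samples[t] += math.sin(2 * math.pi * 1000 * t / sample_rate)

detected = detect_frames(samples, 1000, sample_rate)
print(detected)  # frame indices covering the inserted call
```

Using the median as the noise floor keeps a few loud frames from dragging the threshold up. Overlapping species that share a band would defeat this approach, which is where learned models come in.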

[Chart: Challenges in Bioacoustic Data Analysis]

There remains a significant disconnect between raw sound data and actionable ecological insights. Turning acoustic recordings into information that can guide conservation decisions or enhance our understanding of animal behavior requires sophisticated interpretation that combines domain expertise with advanced visualization tools.

The limitations of traditional spectrogram analysis are particularly evident when working with non-technical stakeholders like conservation managers, policymakers, or community members. These traditional visualizations often fail to communicate the richness and significance of bioacoustic data in an accessible way. This is where AI speech generators and advanced visualization tools can help bridge the communication gap.

AI-Powered Breakthroughs in Animal Sound Analysis

The integration of artificial intelligence into bioacoustic research has catalyzed remarkable breakthroughs in how we analyze and interpret animal vocalizations. I've been particularly excited about several key developments that are transforming the field.

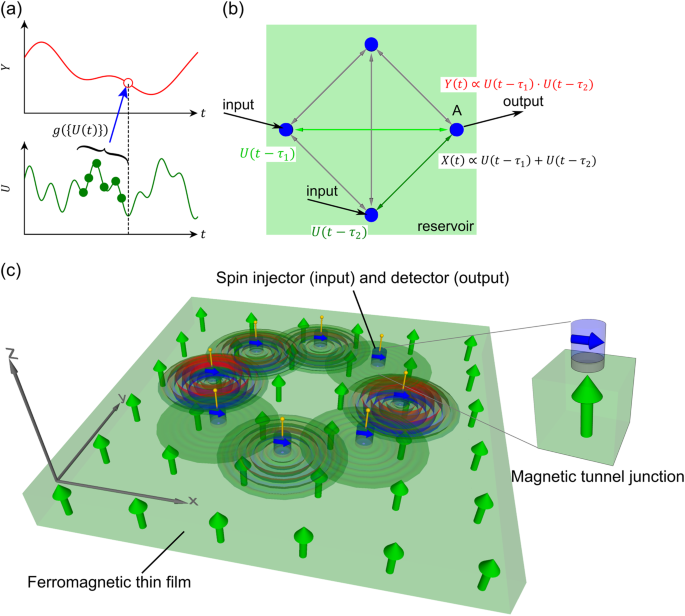

Multi-modal language models represent one of the most promising advances in bioacoustic research. These AI systems can process both acoustic data and visual representations simultaneously, creating powerful new ways to identify patterns across modalities. By bridging the gap between acoustics and visual representation, these models enable researchers to develop more intuitive ways of understanding animal communication.

Zero-shot and transfer learning techniques have proven particularly valuable for species identification. These approaches let models trained on one set of species make useful predictions about vocalizations from species absent from their training data, typically by comparing a new call against learned representations or text descriptions rather than retraining from scratch. This capability can expand our ability to monitor biodiversity in understudied regions without requiring extensive new training data.
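One common way to frame zero-shot labeling is as a nearest-neighbor lookup in a shared embedding space: embed the call, embed each candidate label, and pick the closest. The sketch below shows only that final matching step. The three-dimensional vectors are made-up placeholders; in practice they would come from a pre-trained audio and text encoder, which I'm assuming here rather than implementing.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_label(call_embedding, label_embeddings):
    """Return the label whose embedding is most similar to the call."""
    return max(label_embeddings,
               key=lambda name: cosine(call_embedding, label_embeddings[name]))


# Hypothetical embeddings; real ones would have hundreds of dimensions.
label_embeddings = {
    "tree frog": [0.9, 0.1, 0.0],
    "owl": [0.1, 0.9, 0.1],
    "cicada": [0.0, 0.2, 0.9],
}
unseen_call = [0.2, 0.8, 0.2]

print(zero_shot_label(unseen_call, label_embeddings))  # owl
```

No owl recordings are needed at this step. The quality of the result depends entirely on how well the upstream encoder places related sounds and labels near each other.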

flowchart LR
    A[Raw Audio] --> B[Feature Extraction]
    B --> C[Pre-trained AI Model]
    D[Known Species Data] --> C
    C --> E{Zero-shot Transfer}
    E --> F[New Species Recognition]
    E --> G[Behavioral Context]
    E --> H[Population Density]
    F --> I[Bioacoustic Dictionary]
    G --> I
    I --> J[Cross-Species Patterns]
    J --> K[Communication Networks]
    classDef aiNode fill:#E3F2FD,stroke:#42A5F5,stroke-width:2px
    classDef outputNode fill:#FFF3E0,stroke:#FF8000,stroke-width:2px
    class C,E aiNode
    class I,J,K outputNode

The emergence of bioacoustic "dictionaries" for different animal groups represents another exciting development. These catalogs map specific vocalizations to their meanings or contexts, creating a framework for deeper understanding of animal communication systems. For instance, researchers have begun to decode the specific alarm calls of prairie dogs, the hunting coordination calls of wolves, and the complex songs of humpback whales.

Machine learning approaches are increasingly capable of decoding contextual meaning in animal vocalizations. By correlating acoustic patterns with observed behaviors and environmental conditions, these systems can identify calls associated with specific contexts like mating, territorial defense, danger warnings, or food discovery.
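At its simplest, "correlating calls with context" means counting how often each call type co-occurs with each observed situation. The sketch below turns a list of annotated observations into conditional frequencies. The call types and contexts are invented for illustration, and real studies would need far more data plus controls for observer bias.

```python
from collections import Counter, defaultdict


def context_profile(observations):
    """Map each call type to the observed share of each context.

    observations: iterable of (call_type, context) pairs from field annotation.
    """
    counts = defaultdict(Counter)
    for call_type, context in observations:
        counts[call_type][context] += 1
    return {
        call: {ctx: n / sum(ctxs.values()) for ctx, n in ctxs.items()}
        for call, ctxs in counts.items()
    }


# Hypothetical annotated observations.
observations = [
    ("bark", "predator"), ("bark", "predator"), ("bark", "feeding"),
    ("trill", "mating"), ("trill", "mating"), ("bark", "predator"),
]

profile = context_profile(observations)
print(profile["bark"])   # {'predator': 0.75, 'feeding': 0.25}
print(profile["trill"])  # {'mating': 1.0}
```

A call that appears almost exclusively in one context is a candidate for a context-specific signal. The machine learning systems described above do the same kind of association at much larger scale and with learned features instead of hand labels.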

[Chart: AI Model Accuracy in Species Identification]

Using PageOn.ai's AI Blocks feature, I've found it remarkably easy to transform complex acoustic data into intuitive visual structures. The modular approach allows me to build custom visualization pipelines that process raw sound files, extract meaningful patterns, and present them in ways that highlight the most important aspects of the data. This has been particularly valuable when communicating findings to conservation stakeholders who lack technical expertise in bioacoustics.

These AI breakthroughs are complemented by advances in text-to-speech and other synthetic audio technologies. Techniques developed for human voice synthesis are increasingly being adapted to help us understand, and potentially even replicate, animal vocalizations for research purposes.

Visualizing the Inaudible: New Frontiers in Bioacoustic Representation

Moving beyond traditional spectrograms has been a personal mission in my research. I believe that creating intuitive visual narratives from sound data is essential for advancing both scientific understanding and public engagement with bioacoustics.

Transforming temporal patterns into spatial representations offers significant advantages for human comprehension. Our visual system excels at detecting patterns in space, while temporal patterns can be more difficult to perceive. By mapping time-based acoustic features onto spatial visualizations, we can leverage our visual pattern recognition capabilities to identify relationships that might otherwise remain hidden.
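A simple example of mapping time onto space is folding a long list of detection timestamps into a day-by-hour grid, the data structure behind a typical activity heatmap. This is a minimal sketch with made-up timestamps; the plotting step is left out, and the text rendering at the end is only a stand-in for a real chart.

```python
from datetime import datetime


def activity_grid(timestamps):
    """Fold detection times into rows = calendar days, columns = hour of day."""
    days = sorted({ts.date() for ts in timestamps})
    row_of = {day: i for i, day in enumerate(days)}
    grid = [[0] * 24 for _ in days]
    for ts in timestamps:
        grid[row_of[ts.date()]][ts.hour] += 1
    return days, grid


# Hypothetical detections of one species across two days.
timestamps = [
    datetime(2024, 5, 1, 5, 10),
    datetime(2024, 5, 1, 5, 40),
    datetime(2024, 5, 1, 19, 5),
    datetime(2024, 5, 2, 5, 15),
]

days, grid = activity_grid(timestamps)
for day, row in zip(days, grid):
    # Crude text heatmap: '.' for silence, a digit for the call count.
    print(day, "".join(str(c) if c else "." for c in row))
```

Once the data is in this shape, a dawn chorus shows up as a vertical stripe at the same hour across many days, which is much easier to spot than the same pattern in a raw event list.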

Interactive visualization techniques have opened new possibilities for exploring animal communication networks. Rather than viewing individual calls in isolation, these approaches allow us to visualize the complex web of acoustic interactions within a community. For instance, we can map how alarm calls propagate through a group of monkeys, or how whales coordinate their movements through long-distance vocalizations.
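The network view starts from a very plain data structure: a count of who calls shortly after whom. The sketch below builds that edge list from time-stamped calls, treating a call from a different individual within a short window as a response. The two-second window and the caller IDs are assumptions for illustration, and temporal proximity alone does not prove one call caused the other.

```python
from collections import Counter


def response_network(calls, max_gap_s=2.0):
    """Count directed edges (caller -> responder) from consecutive calls.

    calls: list of (time_in_seconds, caller_id), sorted by time.
    """
    edges = Counter()
    for (t_prev, prev), (t_next, nxt) in zip(calls, calls[1:]):
        if prev != nxt and 0 <= t_next - t_prev <= max_gap_s:
            edges[(prev, nxt)] += 1
    return edges


# Hypothetical sequence of calls from three individuals.
calls = [
    (0.0, "A"), (1.0, "B"), (1.5, "C"),
    (10.0, "A"), (11.2, "B"), (20.0, "C"),
]

edges = response_network(calls)
print(dict(edges))  # {('A', 'B'): 2, ('B', 'C'): 1}
```

These weighted edges are what an interactive graph layout would draw, with edge thickness showing how often one individual follows another.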

graph TD
    A[Raw Acoustic Data] --> B[Multi-dimensional Scaling]
    A --> C[Temporal Pattern Extraction]
    A --> D[Frequency Analysis]
    A --> E[Contextual Annotation]
    B --> F[Spatial Mapping]
    C --> G[Rhythmic Visualization]
    D --> H[Spectral Representation]
    E --> I[Semantic Overlay]
    F --> J[Interactive Network View]
    G --> J
    H --> J
    I --> J
    J --> K[User Exploration Interface]
    J --> L[Pattern Discovery]
    J --> M[Communication Flow Analysis]
    classDef process fill:#E3F2FD,stroke:#42A5F5,stroke-width:2px
    classDef output fill:#FFF3E0,stroke:#FF8000,stroke-width:2px
    class B,C,D,E,F,G,H,I process
    class J,K,L,M output

Using PageOn.ai's Deep Search functionality has been transformative for my research. This tool allows us to integrate contextual environmental data with acoustic patterns, revealing how factors like time of day, weather conditions, or human activity influence animal communication. By visualizing these relationships, we can identify previously hidden patterns that help explain behavioral adaptations.

[Chart: Visualization Approaches for Bioacoustic Data]

Visualization can reveal previously hidden relationships in communication systems. For example, by mapping the acoustic space of a rainforest community, researchers discovered that different species have evolved to occupy distinct frequency bands, minimizing interference. These insights were only possible through advanced visualization techniques that could represent the complex acoustic niche partitioning.
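Niche partitioning can be quantified before it is visualized. The sketch below scores how much two species' calling bands overlap, as a fraction of the narrower band. The frequency ranges are placeholders I made up, not measurements from any real community.

```python
from itertools import combinations


def band_overlap(band_a, band_b):
    """Overlap between two (low_hz, high_hz) bands as a share of the narrower one."""
    low = max(band_a[0], band_b[0])
    high = min(band_a[1], band_b[1])
    overlap = max(0, high - low)
    narrower = min(band_a[1] - band_a[0], band_b[1] - band_b[0])
    return overlap / narrower


# Hypothetical dominant calling bands in Hz.
bands = {
    "frog": (1000, 3000),
    "bird": (2500, 5000),
    "cicada": (4000, 8000),
}

overlaps = {
    (a, b): band_overlap(bands[a], bands[b])
    for a, b in combinations(bands, 2)
}
for pair, score in overlaps.items():
    print(pair, score)
# ('frog', 'bird') 0.25
# ('frog', 'cicada') 0.0
# ('bird', 'cicada') 0.4
```

Low scores across most pairs are consistent with partitioning. A full analysis would also account for when each species calls, since two species can share a band if they use it at different times.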

Advanced visualization tools like those offered by PageOn.ai share a conceptual similarity with text-to-speech voice generators: both transform abstract data (sound or text) into more accessible, engaging forms that facilitate understanding and connection.

Applications Transforming Ecological Research and Conservation

The practical applications of advanced bioacoustic visualization are already transforming ecological research and conservation efforts. I've been privileged to work on several projects that demonstrate the real-world impact of these technologies.

Real-time monitoring systems for biodiversity assessment represent one of the most immediate applications. By deploying networks of acoustic sensors throughout an ecosystem and visualizing the data through intuitive interfaces, conservation teams can track species presence, abundance, and behavior without invasive techniques. These systems are particularly valuable for nocturnal species or those in dense habitats where visual monitoring is challenging.

Early warning systems based on acoustic patterns are helping to identify ecosystem changes before they become visible through other means. For instance, subtle shifts in frog calling patterns can indicate water quality issues, while changes in bird communication may signal responses to environmental stressors like pollution or habitat fragmentation.
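The core of an acoustic early warning check can be as simple as comparing recent activity against a baseline. The sketch below flags nights whose call counts sit more than three standard deviations from the baseline mean. The counts and the threshold are illustrative assumptions; a real system would also need to handle seasonality and weather.

```python
import statistics


def flag_anomalies(baseline, recent, z_threshold=3.0):
    """Return (index, value) for recent values far from the baseline mean."""
    mean = statistics.mean(baseline)
    sd = statistics.stdev(baseline)
    return [(i, x) for i, x in enumerate(recent)
            if abs(x - mean) / sd > z_threshold]


# Hypothetical nightly frog call counts at one pond.
baseline = [120, 130, 125, 118, 127, 122, 131, 124]
recent = [126, 119, 80, 123]

print(flag_anomalies(baseline, recent))  # [(2, 80)]
```

A flagged night is a prompt for someone to look closer, not a diagnosis. The drop could reflect water quality, a cold snap, or a failing microphone.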

[Chart: Conservation Applications of Bioacoustic Monitoring]

Non-invasive population tracking through automated bioacoustic monitoring has become an essential tool for studying endangered species. For example, tracking the distinctive calls of the critically endangered Sumatran rhinoceros has allowed researchers to estimate population size and distribution without stressing the animals through direct observation or capture.

Conservation applications extend to using sound as a tool for protecting endangered species. Acoustic monitoring can detect illegal activities like poaching or logging in protected areas, triggering rapid response from enforcement teams. Additionally, understanding the acoustic needs of species can inform habitat protection efforts, ensuring that noise pollution doesn't disrupt critical communication.

flowchart TD
    A[Acoustic Monitoring Network] -->|Continuous Data| B[AI Processing System]
    B -->|Species Detection| C[Biodiversity Assessment]
    B -->|Anomaly Detection| D[Threat Alerts]
    B -->|Pattern Analysis| E[Behavioral Insights]
    C --> F[Conservation Planning]
    D --> G[Rapid Response Teams]
    E --> H[Habitat Management]
    F --> I[Protected Area Design]
    G --> J[Anti-Poaching Operations]
    H --> K[Restoration Efforts]
    classDef input fill:#E3F2FD,stroke:#42A5F5,stroke-width:2px
    classDef process fill:#FFF3E0,stroke:#FF8000,stroke-width:2px
    classDef output fill:#E8F5E9,stroke:#66BB6A,stroke-width:2px
    class A,B input
    class C,D,E,F,G,H process
    class I,J,K output

Climate change impact assessment through long-term acoustic monitoring provides valuable data on how warming temperatures and changing precipitation patterns affect animal behavior and community composition. By establishing acoustic baselines and tracking changes over time, researchers can document shifts in phenology, such as earlier breeding calls or altered migration timing.
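Documenting a phenology shift usually comes down to fitting a trend through yearly dates. The sketch below fits an ordinary least-squares slope to the day of year on which a species' first breeding call was detected. The five data points are invented, and a series this short would not support a real conclusion.

```python
def trend_per_year(years, values):
    """Ordinary least-squares slope of values against years."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return sxy / sxx


# Hypothetical day of year of the first detected breeding call.
years = [2018, 2019, 2020, 2021, 2022]
first_call_day = [100, 98, 97, 95, 92]

slope = trend_per_year(years, first_call_day)
print(f"{slope:.1f} days per year")  # -1.9 days per year
```

A negative slope means calling starts earlier each year. This is the kind of number a long-term acoustic baseline makes possible.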

Creating compelling conservation narratives has been dramatically enhanced using PageOn.ai's Vibe Creation features. These tools allow us to transform complex acoustic data into engaging stories that resonate with diverse audiences, from policymakers to local communities. By making the invisible world of animal sounds visually accessible, we can build stronger emotional connections to conservation issues.

The integration of AI speech synthesis tools with bioacoustic research opens exciting possibilities. These technologies can help create educational materials that make animal communication more accessible to the public, furthering conservation awareness and support.

The Future Landscape: Merging AI, Visualization, and Bioacoustics

As I look toward the horizon of bioacoustic research, I see several transformative developments emerging that will fundamentally change our relationship with animal communication systems.

The emergence of animal language "translation" systems represents perhaps the most exciting frontier. While true translation in the human sense may remain elusive, AI systems are increasingly capable of mapping specific vocalizations to their contextual meanings. For instance, research on prairie dog alarm calls has identified distinct "words" for different predator types, sizes, and colors. As these systems become more sophisticated, we may develop interfaces that provide real-time insights into animal communication.

The potential for interspecies communication interfaces, while still largely speculative, is beginning to move from science fiction toward scientific possibility. Early experiments with dolphins and great apes have demonstrated limited two-way communication using symbolic languages or computer interfaces. As our understanding of animal cognition and communication deepens, more sophisticated interfaces may emerge.

[Chart: Future Research Directions in Bioacoustics]

Ethical considerations in decoding and potentially influencing animal communications are becoming increasingly important. As our technologies advance, questions arise about the implications of human intervention in animal communication systems. Should we use our understanding to manipulate animal behavior, even for conservation purposes? What privacy considerations might apply to intelligent species? These questions require thoughtful engagement from scientists, ethicists, and conservation practitioners.

Collaborative possibilities between biologists, AI specialists, and visualization experts represent a crucial pathway forward. The most significant advances in this field are likely to emerge from interdisciplinary teams that combine deep domain knowledge with technical expertise and creative approaches to data representation. Creating spaces for these collaborations will be essential for future progress.

flowchart TD
    A[Bioacoustic Research] --> B[AI Model Development]
    A --> C[Visualization Techniques]
    A --> D[Field Data Collection]
    B --> E[Neural Language Processing]
    B --> F[Pattern Recognition]
    C --> G[Interactive Interfaces]
    C --> H[Immersive Visualization]
    D --> I[Autonomous Recording Networks]
    D --> J[Contextual Data Integration]
    E --> K[Translation Systems]
    F --> K
    G --> L[Communication Interfaces]
    H --> L
    I --> M[Global Monitoring Network]
    J --> M
    K --> N[Interspecies Understanding]
    L --> N
    M --> O[Conservation Applications]
    classDef foundation fill:#E3F2FD,stroke:#42A5F5,stroke-width:2px
    classDef development fill:#FFF3E0,stroke:#FF8000,stroke-width:2px
    classDef application fill:#E8F5E9,stroke:#66BB6A,stroke-width:2px
    class A,B,C,D foundation
    class E,F,G,H,I,J development
    class K,L,M,N,O application

PageOn.ai's agentic approach offers particularly exciting possibilities for transforming raw bioacoustic data into actionable conservation strategies. By combining automated analysis with intuitive visualization and contextual integration, these tools can help bridge the gap between scientific understanding and practical application. I've found that this approach significantly reduces the time from data collection to implementation of conservation measures.

The role of citizen scientists in expanding bioacoustic research through accessible visualization tools is hard to overstate. Projects like BirdNET, developed by the Cornell Lab of Ornithology together with Chemnitz University of Technology, are democratizing access to bioacoustic analysis, allowing anyone with a smartphone to contribute to our collective understanding of animal communication. As visualization tools become more intuitive and accessible, this citizen science component will likely grow in importance.

Conclusion: The Sound of the Future

As we've explored throughout this article, the future of bioacoustics in animal language research stands at an exciting crossroads where artificial intelligence, advanced visualization, and biological understanding converge. The technologies and approaches we've discussed are not just academic curiosities—they represent powerful tools for conservation, ecological understanding, and potentially even interspecies communication.

The transformation of bioacoustic research from simple recording to sophisticated AI-powered analysis and visualization has opened new windows into the rich acoustic world of animals. By making this invisible realm visible through intuitive, engaging visualizations, we can foster deeper connections between humans and the natural world.

I believe that tools like PageOn.ai will play a crucial role in this ongoing revolution. By democratizing access to sophisticated visualization capabilities, these platforms enable researchers, conservation practitioners, and citizen scientists to transform raw acoustic data into meaningful insights and compelling stories. This accessibility is essential for translating scientific understanding into effective conservation action.

As we look to the future, the convergence of AI, visualization technologies, and bioacoustic research promises to deepen our understanding of animal communication and strengthen our capacity to protect the remarkable acoustic diversity of our planet. I'm excited to be part of this journey and to see how these tools continue to evolve in service of science, conservation, and our fundamental connection to the natural world.
