Building Intelligent Applications with Anthropic and Fal.ai APIs

A comprehensive guide for developers creating next-generation AI-powered applications

The Evolution of AI-Powered Application Development

I've been watching the AI development landscape transform dramatically over the past few years. The recent advancements in API capabilities from Anthropic and Fal.ai are revolutionizing how we build intelligent applications. What used to require extensive infrastructure and specialized expertise is now accessible through powerful, developer-friendly APIs.

```mermaid
flowchart TD
    A[Traditional App Development] --> B[Basic AI Integration]
    B --> C[Sophisticated AI Agents]
    C --> D[Multi-modal Intelligence]
    style A fill:#f9f9f9,stroke:#ccc
    style B fill:#fff4e6,stroke:#ffbb77
    style C fill:#ffe9d9,stroke:#ff9955
    style D fill:#ffdfc8,stroke:#ff7733
```

We're witnessing a paradigm shift from basic AI integration to sophisticated, agentic applications with specialized capabilities. These APIs now enable developers to create applications that can process, analyze, and generate content with minimal infrastructure requirements.

The combination of Anthropic's language understanding and reasoning capabilities with Fal.ai's real-time visual processing opens up entirely new possibilities for application development. Let's explore how these powerful APIs can work together to create truly intelligent applications.

Understanding Anthropic's Claude API Capabilities

Core Features of Claude's API Ecosystem

Claude's API offers capabilities that form the foundation for advanced AI applications. Its large context windows (up to 200,000 tokens) allow applications to process extensive documents and maintain lengthy conversations without losing context, which is a game-changer for complex applications.

Anthropic trains Claude using its Constitutional AI approach, which is designed to make responses helpful, honest, and harmless. That emphasis matters for enterprise applications where trust and reliability are paramount. The API also offers multiple Claude models optimized for different use cases, so you can balance performance needs against cost.

New Developer-Focused Capabilities

I'm particularly excited about the new developer-focused capabilities that Anthropic has recently introduced. The code execution tool transforms Claude from a code-writing assistant into a full-fledged data analyst, capable of running Python code for analysis and visualization directly within API calls.

MCP Connector

Connect Claude to any remote Model Context Protocol server without writing custom client code. Simply add a server URL to your API request.

Files API

Upload documents once and reference them repeatedly across conversations, streamlining workflows for applications working with large document sets.

Code Execution Tool

Run Python code in a sandboxed environment for data analysis and visualization directly within API calls.

Extended Prompt Caching

Choose between the default 5-minute TTL and an extended 1-hour TTL. Prompt caching can reduce costs by up to 90% on long, repeated prompts, which adds up quickly in long-running agent workflows.

The MCP connector is another game-changer, enabling seamless integration with third-party tools without the need for custom client code. Combined with the Files API for efficient document storage and the extended prompt caching option, these features create a powerful ecosystem for building sophisticated AI applications with minimal infrastructure overhead.
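To make two of these options concrete, the sketch below only assembles the keyword arguments for a Messages API call and sends nothing. The field shapes follow Anthropic's documentation from when these features launched in beta:

- `cache_control` with a `ttl` of `"5m"` or `"1h"` on a system-prompt block
- an `mcp_servers` list of `{"type": "url", ...}` entries

Beta features may also need a beta header, so treat the exact fields as an assumption to confirm against the current API reference. The model ID, server URL, and server name are placeholders. Caching only takes effect once the prompt exceeds a minimum length.

```python
from typing import Any, Optional

# Cache lifetimes described in Anthropic's announcement: the default 5 minutes or 1 hour.
ALLOWED_TTLS = {"5m", "1h"}


def build_request(
    model: str,
    system_prompt: str,
    user_text: str,
    cache_ttl: str = "5m",
    mcp_server_url: Optional[str] = None,
    mcp_server_name: str = "remote-tools",
) -> dict[str, Any]:
    """Assemble keyword arguments for a Messages API call; nothing is sent here."""
    if cache_ttl not in ALLOWED_TTLS:
        raise ValueError(f"cache_ttl must be one of {sorted(ALLOWED_TTLS)}")
    request: dict[str, Any] = {
        "model": model,
        "max_tokens": 1024,
        # Mark the long, stable system prompt as a cacheable prefix.
        "system": [
            {
                "type": "text",
                "text": system_prompt,
                "cache_control": {"type": "ephemeral", "ttl": cache_ttl},
            }
        ],
        "messages": [{"role": "user", "content": user_text}],
    }
    if mcp_server_url is not None:
        # MCP connector: Claude connects to the remote server itself, no client code needed.
        request["mcp_servers"] = [
            {"type": "url", "url": mcp_server_url, "name": mcp_server_name}
        ]
    return request
```

In the Python SDK, you would pass a dict like this to the beta Messages endpoint (for example `client.beta.messages.create(**request, betas=[...])`), taking the beta names from the docs.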

Fal.ai's Complementary API Infrastructure

Real-time AI Processing Capabilities

While Anthropic excels at language understanding and reasoning, Fal.ai provides specialized capabilities for image and video processing with minimal latency. This complementary approach allows developers to build truly multi-modal applications.

```mermaid
flowchart LR
    A[User Input] --> B{Input Type}
    B -->|Text| C[Anthropic Claude API]
    B -->|Image/Video| D[Fal.ai API]
    C --> E[Text Processing]
    D --> F[Visual Processing]
    E --> G[Response Generation]
    F --> G
    style A fill:#f9f9f9,stroke:#ccc
    style B fill:#f0f0f0,stroke:#ccc
    style C fill:#ffe9d9,stroke:#ff9955
    style D fill:#e6f7ff,stroke:#66b3ff
    style E fill:#fff4e6,stroke:#ffbb77
    style F fill:#e6f0ff,stroke:#99c2ff
    style G fill:#f9f9f9,stroke:#ccc
```

Fal.ai's serverless architecture automatically scales with your application needs, removing the burden of infrastructure management. Their developer-friendly SDKs make integration straightforward, even for teams without extensive AI expertise.

How Fal.ai Enhances Anthropic's Capabilities

By combining text intelligence (Claude) with visual processing (Fal.ai), developers can create applications that understand and process both textual and visual content. This multi-modal approach enables more comprehensive data analysis and user interactions.

For example, an application could use Claude to understand a user's natural language query about an image, then use Fal.ai to process and analyze the image, and finally use Claude again to generate a comprehensive response that incorporates both textual and visual information.
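The three-step flow above can be sketched as a small orchestrator. To keep it testable and avoid guessing at SDK details, the Claude and Fal.ai calls are injected as plain functions. `ask_claude` and `analyze_image` are hypothetical adapters. In a real app they might wrap `client.messages.create` from the Anthropic SDK and `fal_client.subscribe` with a fal model endpoint of your choice. The prompt wording is illustrative only.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical adapter signatures; in a real app these would wrap the SDK calls.
AskClaude = Callable[[str], str]                     # prompt -> reply text
AnalyzeImage = Callable[[str, str], dict[str, Any]]  # (image_url, task) -> analysis


@dataclass
class ImageQuestionPipeline:
    ask_claude: AskClaude
    analyze_image: AnalyzeImage

    def answer(self, question: str, image_url: str) -> str:
        # Step 1: Claude turns the user's question into a concrete instruction for a vision model.
        task = self.ask_claude(
            f"Rewrite this question as a short instruction for an image model: {question}"
        )
        # Step 2: a Fal.ai-hosted model processes the image for that task.
        analysis = self.analyze_image(image_url, task)
        # Step 3: Claude combines the question and the visual analysis into the final answer.
        return self.ask_claude(
            f"Question: {question}\nImage analysis: {analysis}\n"
            "Answer the question using the analysis."
        )
```

Injecting the two calls also makes it easy to swap the Fal.ai model or add caching around either step without touching the orchestration logic.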

Designing Intelligent App Features with Combined APIs

Architectural Patterns for API Integration

When designing applications that leverage both Anthropic and Fal.ai APIs, I've found several architectural patterns to be particularly effective. Event-driven architectures work exceptionally well for responsive, real-time AI features, allowing different components to communicate asynchronously.

```mermaid
flowchart TD
    A[Client Request] --> B[API Gateway]
    B --> C{Request Type}
    C -->|Text Analysis| D[Anthropic Service]
    C -->|Image Analysis| E[Fal.ai Service]
    C -->|Combined| F[Orchestration Service]
    F --> D
    F --> E
    D --> G[Response Aggregator]
    E --> G
    F --> G
    G --> H[Client Response]
    style A fill:#f9f9f9,stroke:#ccc
    style B fill:#f0f0f0,stroke:#ccc
    style C fill:#f0f0f0,stroke:#ccc
    style D fill:#ffe9d9,stroke:#ff9955
    style E fill:#e6f7ff,stroke:#66b3ff
    style F fill:#f5f5f5,stroke:#ccc
    style G fill:#f5f5f5,stroke:#ccc
    style H fill:#f9f9f9,stroke:#ccc
```

Middleware implementation strategies are crucial for seamless API coordination. I recommend creating an abstraction layer that handles the complexities of working with multiple APIs, providing your application with a unified interface.
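One way to build that abstraction layer assumes every backend is wrapped in an adapter with a single `handle(payload)` method. The application talks only to a `Gateway`, which dispatches by request type as in the architecture diagram. `Gateway`, `AIService`, and `StubService` are hypothetical names, and the stub just echoes its input so the routing can be tested offline.

```python
from typing import Any, Protocol


class AIService(Protocol):
    """The one method every backend adapter (Anthropic, Fal.ai, ...) must provide."""

    def handle(self, payload: dict[str, Any]) -> dict[str, Any]: ...


class Gateway:
    """Hypothetical middleware: a single entry point that dispatches by request type."""

    def __init__(self, services: dict[str, AIService]) -> None:
        self._services = services

    def dispatch(self, request_type: str, payload: dict[str, Any]) -> dict[str, Any]:
        service = self._services.get(request_type)
        if service is None:
            raise ValueError(f"unsupported request type: {request_type!r}")
        return service.handle(payload)


class StubService:
    """Stand-in adapter for local testing; a real one would call the provider's SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, payload: dict[str, Any]) -> dict[str, Any]:
        return {"handled_by": self.name, **payload}
```

An orchestration service for combined requests can be registered as just another adapter under a `"combined"` key. The rest of the application then never needs to know which provider answered.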

Effective caching strategies are essential to optimize performance and reduce costs. By caching responses to frequent, identical requests, you can minimize redundant API calls while keeping your application responsive. Anthropic's prompt caching works at a different level: it caches a repeated prompt prefix on Anthropic's side. The new extended option keeps that prefix cached for up to an hour, so repeated calls that share it cost less.
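Here is a minimal sketch of the application-level response cache described above, assuming an in-memory, single-process store. Requests are keyed by a SHA-256 hash of their canonical JSON, and entries expire after a TTL. This is separate from Anthropic's server-side prompt caching. `ResponseCache` and `get_or_call` are illustrative names, and the injectable `clock` exists only so expiry can be tested without waiting.

```python
import hashlib
import json
import time
from typing import Any, Callable


class ResponseCache:
    """Application-level cache for identical AI requests, with a time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}

    @staticmethod
    def key_for(request: dict[str, Any]) -> str:
        # Canonical JSON so dict key order does not change the cache key.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request: dict[str, Any], call: Callable[[dict[str, Any]], Any]) -> Any:
        key = self.key_for(request)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # fresh hit: skip the API call
        result = call(request)  # miss or expired: call the API and store the result
        self._entries[key] = (now, result)
        return result
```

Only cache requests whose answers should be identical for identical inputs. For multi-instance deployments, a shared store would replace the in-process dict.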

I've found that PageOn.ai's AI Blocks help visualize and structure these complex API relationships, making it easier to design and implement sophisticated multi-API applications.

Practical Implementation Approaches

Authentication & Security Best Practices

- Store API keys securely using environment variables or a secrets manager

- Implement API key rotation policies

- Use middleware to validate requests before forwarding to AI services

- Consider implementing request signing for additional security
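For the request-signing item, a common pattern is an HMAC over a timestamp plus the request body. Middleware verifies it before anything is forwarded to Claude or Fal.ai. This sketch uses Python's standard `hmac` module. The `timestamp.body` message format and the 5-minute freshness window are assumptions. The shared secret would come from an environment variable or secrets manager, as the list recommends.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign_request(secret: bytes, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over "<timestamp>.<body>" (one common convention, assumed here)."""
    message = str(timestamp).encode("ascii") + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(
    secret: bytes,
    body: bytes,
    timestamp: int,
    signature: str,
    max_age_seconds: int = 300,
    now: Optional[int] = None,
) -> bool:
    """Reject stale or tampered requests before they reach any AI service."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > max_age_seconds:
        return False  # outside the freshness window: possible replay
    expected = sign_request(secret, body, timestamp)
    # Constant-time comparison avoids leaking how much of the signature matched.
    return hmac.compare_digest(expected, signature)
```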

Managing rate limits and optimizing token usage across services requires careful planning. I recommend implementing queuing mechanisms for high-traffic applications and designing your prompts efficiently to minimize token usage without sacrificing quality.
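A client-side token bucket is one simple way to stay under provider rate limits before requests ever leave your service. This is a sketch, not either provider's published limiter. `rate` and `capacity` are values you would derive from your own account limits. If you want to budget tokens rather than requests, set `cost` to an estimated token count instead of 1. Callers that are refused can enqueue the request and retry later.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows `rate` units per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity  # start full so an initial burst is allowed
        self._last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # caller should queue or delay this request
```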

Building resilient applications means implementing proper error handling and fallback mechanisms. When working with multiple AI APIs, it's important to gracefully handle situations where one service might be temporarily unavailable or return unexpected results.
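The retry-and-fallback idea can be sketched as a helper that tries each provider in order, with exponential backoff and jitter. The provider callables, delay values, and `AllProvidersFailed` exception are assumptions for illustration. The official SDKs already retry some transient errors on their own. In practice, this layer mainly adds the cross-provider fallback, and it should catch only retryable errors such as timeouts, rate-limit responses, and 5xx errors.

```python
import random
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


class AllProvidersFailed(Exception):
    """Raised with the list of collected errors when every provider has failed."""


def call_with_fallback(
    providers: Sequence[Callable[[], T]],
    retries_per_provider: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try each provider in order, retrying with exponential backoff plus jitter."""
    errors: list[Exception] = []
    for provider in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return provider()
            except Exception as exc:  # in real code, catch only transient errors
                errors.append(exc)
                if attempt < retries_per_provider:
                    # 0.5 s, 1 s, 2 s, ... plus up to 100 ms of random jitter
                    sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.1))
    raise AllProvidersFailed(errors)
```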

For finding and integrating relevant visual assets into your workflow, PageOn.ai's Deep Search functionality is invaluable. It helps locate and incorporate the right visual elements to complement your AI-generated content, creating a more engaging user experience.

Real-World Application Scenarios

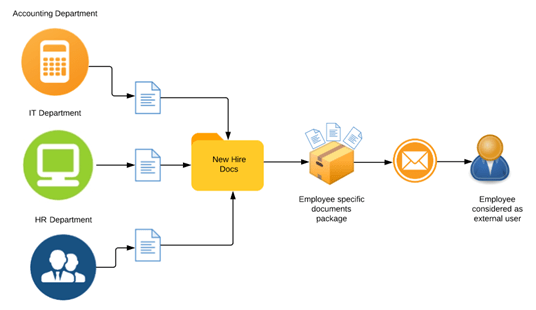

Intelligent Document Processing Systems

I've helped build several intelligent document processing systems that leverage Claude's massive context window and Files API to handle complex documents. These applications can process hundreds of pages, extract key insights, and generate comprehensive summaries without losing context.

For example, a legal tech application we developed uses Claude to analyze lengthy contracts and legal documents, identifying key clauses, potential risks, and obligations. The Files API allows the system to maintain access to these documents across multiple analysis sessions without requiring re-uploads.
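Once a document has been uploaded through the Files API, later requests can reference it by ID instead of re-sending its contents. The sketch below builds such a message. The `document` block with a `{"type": "file", "file_id": ...}` source follows Anthropic's Files API documentation from its beta launch, so confirm the shape (and any required beta header) against the current docs. The upload call itself is omitted, and `file_id` stands for whatever ID your upload returned.

```python
from typing import Any


def document_question(file_id: str, question: str) -> list[dict[str, Any]]:
    """Build a user message that references a previously uploaded file by its ID."""
    return [
        {
            "role": "user",
            "content": [
                # The document block points at the stored file instead of re-sending bytes.
                {"type": "document", "source": {"type": "file", "file_id": file_id}},
                {"type": "text", "text": question},
            ],
        }
    ]
```

Each analysis session can reuse the same `file_id` with a different question, which is what makes multi-session contract review practical.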

Visualizing document relationships and hierarchies is simplified with PageOn.ai's visual structuring capabilities, making it easier for users to understand complex document sets and their interrelationships.

Advanced Data Analysis Applications

Combining Claude's code execution capabilities with Fal.ai's visual processing creates powerful applications that can analyze both textual and visual data. I recently worked on a retail analytics platform that uses this combination to analyze customer behavior, product placement effectiveness, and sales data.

The application uses Fal.ai to process in-store camera footage, identifying customer movement patterns and product interactions. Claude then analyzes this visual data alongside textual sales reports, generating comprehensive insights that would be impossible with either API alone.

When working with AI agents, PageOn.ai's visualization tools help transform fuzzy data concepts into clear visual representations, making complex insights accessible to non-technical stakeholders.

Conversational Agents with Enhanced Capabilities

```mermaid
sequenceDiagram
    participant User
    participant Agent
    participant Claude as Claude API
    participant Fal as Fal.ai API
    participant Tools as External Tools
    User->>Agent: Question with image
    Agent->>Claude: Process text query
    Agent->>Fal: Analyze image
    Fal-->>Agent: Image analysis results
    Agent->>Claude: Combine text and image context
    Claude->>Agent: Generate initial response
    Agent->>Tools: Request additional data via MCP
    Tools-->>Agent: Tool execution results
    Agent->>Claude: Refine response with tool data
    Claude-->>Agent: Final comprehensive response
    Agent->>User: Deliver enhanced response
```

Claude's extended caching options make AI assistants that maintain context over extended interactions more practical. Each turn still resends the conversation history, but a cached prefix is cheaper and faster to process. As a result, these agents can carry on meaningful conversations across many turns without losing track of earlier context or letting costs climb as steeply.
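For multi-turn agents, Anthropic's prompt-caching docs describe moving the cache breakpoint to the newest turn on every request, so the whole conversation so far is cached for the next call. This sketch assumes that pattern and the `ttl` field from the extended caching launch. `next_request_messages` is a hypothetical helper. It removes older breakpoints because each request may use only a small number of them.

```python
import copy
from typing import Any

Message = dict[str, Any]


def next_request_messages(history: list[Message], user_text: str, ttl: str = "1h") -> list[Message]:
    """Return messages for the next turn, with the cache breakpoint moved to the end."""
    messages = copy.deepcopy(history)  # leave the caller's history untouched
    # Remove breakpoints from earlier turns; the API caps how many a request may use.
    for message in messages:
        if isinstance(message["content"], list):
            for block in message["content"]:
                block.pop("cache_control", None)
    # The breakpoint caches the prefix up to and including this block for the next turn.
    messages.append(
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": user_text,
                    "cache_control": {"type": "ephemeral", "ttl": ttl},
                }
            ],
        }
    )
    return messages
```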

Integrating third-party tools via the MCP connector expands these agents' capabilities significantly. For instance, a customer support agent we developed can access order management systems, knowledge bases, and ticketing systems, providing comprehensive assistance without switching contexts.

By integrating Fal.ai's capabilities, these conversational agents can also process and discuss visual content. This is particularly valuable for applications in fields like healthcare, where agents might need to discuss medical images, or in e-commerce, where visual product information is crucial.

Performance Optimization and Cost Management

Balancing Performance and Expenses

I've found that choosing Claude models strategically based on task complexity can significantly reduce costs without sacrificing quality. For instance, I use Claude Haiku for initial query classification and simpler tasks, and reserve Claude Opus for complex reasoning or sensitive content generation.

Tiered processing has proven effective in many applications. The system first attempts to handle each request with a simpler, less expensive model and escalates to a more powerful model only when necessary. In my experience this can reduce costs by 40-60% while maintaining high-quality results. The actual savings depend on how many requests the cheaper tier resolves on its own.
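The escalation logic can be sketched in a few lines. The tier names are hypothetical placeholders for real Claude model IDs, and `call_model` and `is_good_enough` are injected functions you would supply. How to judge whether an answer is good enough is an application-specific assumption: a validator, a schema check, or a self-reported confidence score could all work.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical tier labels, cheapest first; map them to real Claude model IDs.
DEFAULT_TIERS = ("fast-cheap-tier", "balanced-tier", "most-capable-tier")


@dataclass
class TierResult:
    tier: str
    answer: str


def answer_with_escalation(
    prompt: str,
    call_model: Callable[[str, str], str],
    is_good_enough: Callable[[str], bool],
    tiers: Sequence[str] = DEFAULT_TIERS,
) -> TierResult:
    """Try the cheapest tier first; escalate only when the quality check fails."""
    if not tiers:
        raise ValueError("at least one tier is required")
    answer = ""
    for tier in tiers:
        answer = call_model(tier, prompt)
        if is_good_enough(answer):
            return TierResult(tier, answer)
    # No tier passed the check: fall back to the most capable tier's answer.
    return TierResult(tiers[-1], answer)
```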

Optimizing prompt design is another crucial strategy for reducing token usage. By carefully crafting prompts that provide clear instructions without unnecessary verbosity, you can minimize token consumption while maintaining response quality. This becomes especially important when working with large context windows.

Monitoring and Analytics

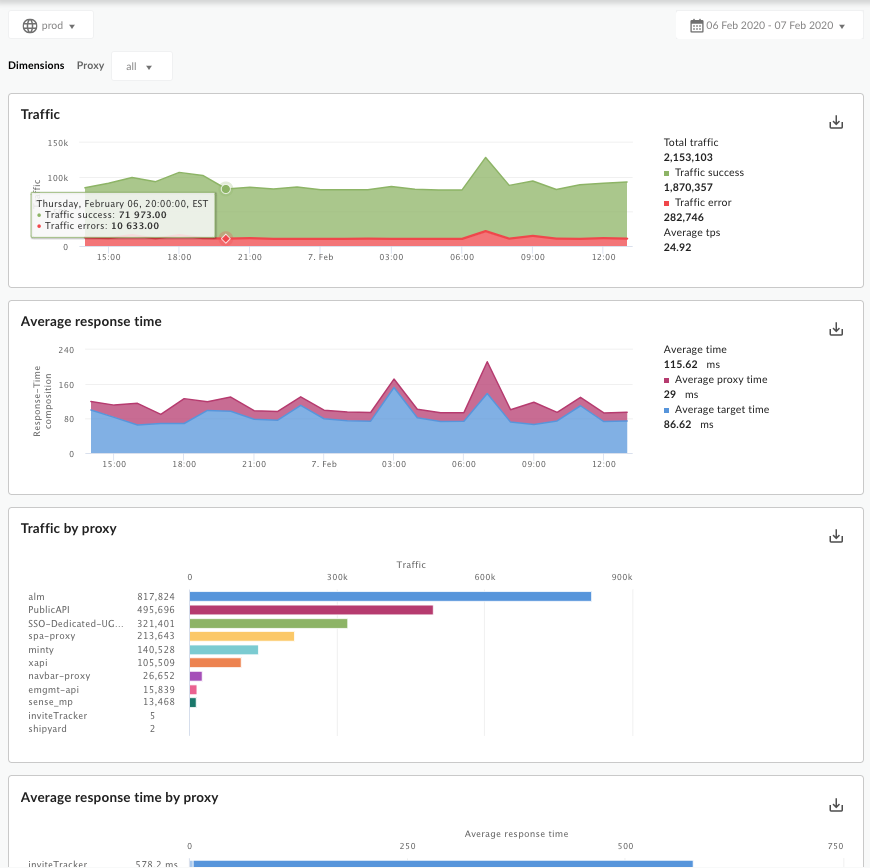

Tracking API usage patterns is essential for identifying optimization opportunities. I recommend implementing comprehensive logging and analytics to monitor token usage, response times, and error rates across different API endpoints and models.
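A lightweight starting point for that logging assumes in-memory aggregation: record each call's model, token usage, latency, and whether it failed. Anthropic's Messages responses include `usage.input_tokens` and `usage.output_tokens`, which is what `record` reads; for other providers you would adapt that part. `UsageTracker` is an illustrative name. A production system would export these numbers to a metrics backend instead of keeping them in a dict.

```python
from collections import Counter, defaultdict
from typing import Any, Optional


class UsageTracker:
    """In-memory per-model totals for calls, errors, tokens, and latency."""

    def __init__(self) -> None:
        self.totals: dict[str, Counter] = defaultdict(Counter)

    def record(self, model: str, usage: Optional[Any], latency_s: float, error: bool = False) -> None:
        t = self.totals[model]
        t["calls"] += 1
        t["errors"] += int(error)
        t["latency_s"] += latency_s
        if usage is not None:
            # Anthropic Messages responses expose usage.input_tokens and usage.output_tokens.
            t["input_tokens"] += usage.input_tokens
            t["output_tokens"] += usage.output_tokens

    def summary(self, model: str) -> dict[str, float]:
        t = self.totals.get(model, Counter())
        calls = t["calls"]
        return {
            "calls": calls,
            "error_rate": t["errors"] / calls if calls else 0.0,
            "avg_latency_s": t["latency_s"] / calls if calls else 0.0,
            "total_tokens": t["input_tokens"] + t["output_tokens"],
        }
```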

For multi-API applications, implementing observability solutions becomes even more critical. Tools like distributed tracing can help identify bottlenecks and performance issues across your entire application stack, not just within individual API calls.

PageOn.ai's visualization capabilities are particularly valuable for monitoring performance metrics and identifying bottlenecks. By creating clear visual representations of your application's performance data, you can quickly spot patterns and optimization opportunities that might otherwise go unnoticed.

Future-Proofing Applications with Flexible API Integration

Designing for API Evolution

```mermaid
flowchart TD
    A[Application Layer] --> B[API Abstraction Layer]
    B --> C[Feature Flag Service]
    C --> D{API Selection}
    D -->|Current| E[Anthropic API: current features]
    D -->|New Feature| F[Anthropic API: new beta features]
    D -->|Fallback| G[Alternative API]
    style A fill:#f9f9f9,stroke:#ccc
    style B fill:#fff4e6,stroke:#ffbb77
    style C fill:#e6f7ff,stroke:#66b3ff
    style D fill:#f0f0f0,stroke:#ccc
    style E fill:#ffe9d9,stroke:#ff9955
    style F fill:#ffe9d9,stroke:#ff9955
    style G fill:#e6ffe6,stroke:#66cc66
```

Creating abstraction layers to accommodate API changes and updates has been invaluable in my experience. By designing a flexible interface between your application and the underlying AI APIs, you can adapt to new features or API versions without significant code changes.

I strongly recommend implementing feature flags for gradual adoption of new capabilities. This approach allows you to test new API features with a subset of users or requests before fully committing to them, reducing risk and ensuring a smooth transition.
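Percentage rollouts are often implemented by hashing a stable user ID into one of 100 buckets, so each user consistently sees the same variant. The sketch below assumes that approach. The flag name, the 10% rollout, and the backend labels are hypothetical, and a hosted feature-flag service would normally own the percentages.

```python
import hashlib


def in_rollout(flag: str, user_id: str, percent: int) -> bool:
    """Deterministically place a user in a 0-99 bucket for this flag."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def choose_backend(user_id: str) -> str:
    # Hypothetical flag and percentage: send 10% of users down the new code path.
    if in_rollout("code-execution-tool", user_id, 10):
        return "new-api-path"
    return "current-api-path"
```

Including the flag name in the hash means different flags select different 10% slices of users, rather than the same early adopters every time.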

Building modular systems that can incorporate emerging AI features ensures your application remains adaptable. For example, when Anthropic introduced the code execution tool, applications with a modular design could quickly integrate this capability without major refactoring.

When building applications that integrate multiple AI services such as Anthropic and Fal.ai, plan for how your AI-powered integration strategy will need to evolve over time.

Scaling Considerations

As your user base and processing needs grow, architectural approaches for handling increased load become crucial. I recommend designing your application with horizontal scalability in mind from the start, using microservices or serverless architectures that can scale independently based on demand.

Load balancing strategies across multiple AI services can help distribute traffic efficiently and provide redundancy. For instance, you might implement a routing layer that directs requests to different AI providers based on current load, response times, or specific capability requirements.
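Here is a sketch of such a routing layer. It assumes each provider advertises a set of capabilities and that you measure latency per call. The router picks the healthy provider with the lowest recent average latency. Provider names and the 20-sample window are illustrative assumptions, and health status would come from your own checks or circuit breakers.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Provider:
    name: str
    capabilities: set[str]
    healthy: bool = True
    # Keep only the most recent measurements so routing reacts to current conditions.
    latencies_s: deque = field(default_factory=lambda: deque(maxlen=20))

    def record(self, latency_s: float) -> None:
        self.latencies_s.append(latency_s)

    def avg_latency(self) -> float:
        # Unmeasured providers score 0.0 so they receive traffic and get measured.
        if not self.latencies_s:
            return 0.0
        return sum(self.latencies_s) / len(self.latencies_s)


def route(providers: list[Provider], capability: str) -> Provider:
    """Pick the healthy provider with the lowest recent latency for a capability."""
    candidates = [p for p in providers if p.healthy and capability in p.capabilities]
    if not candidates:
        raise LookupError(f"no healthy provider offers {capability!r}")
    return min(candidates, key=lambda p: p.avg_latency())
```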

PageOn.ai's agentic capabilities are particularly useful for transforming complex scaling requirements into visual action plans. By visualizing your scaling strategy, you can more easily communicate technical requirements to stakeholders and ensure all team members understand the approach.

Getting Started: Implementation Roadmap

Development Environment Setup

Required Dependencies

```bash
# Python: the Anthropic SDK and the fal client library
pip install anthropic fal-client

# Node.js
npm install @anthropic-ai/sdk @fal-ai/client
```

Setting up your development environment is the first step toward building with these powerful APIs. After installing the required dependencies, you'll need to configure authentication for both Anthropic and Fal.ai. I recommend using environment variables to store your API keys securely.
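A small startup check can confirm both keys are present before the first request. The Anthropic Python SDK reads `ANTHROPIC_API_KEY` by default, and the fal client reads `FAL_KEY`. The helper names here are hypothetical.

```python
import os
from typing import Mapping

# Environment variables read by the Anthropic SDK and the fal client, respectively.
REQUIRED_KEYS = ("ANTHROPIC_API_KEY", "FAL_KEY")


def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required key variables that are unset or empty."""
    return [name for name in REQUIRED_KEYS if not env.get(name)]


def require_keys(env: Mapping[str, str] = os.environ) -> None:
    """Fail fast at startup instead of on the first API call."""
    missing = missing_keys(env)
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
```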

For testing, I suggest creating a separate environment with limited API quotas to avoid unexpected charges during development. This approach allows you to experiment freely without worrying about costs.

First Integration Steps

Basic Claude API Call (Python)

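```python
import anthropic

# The client reads your key from the ANTHROPIC_API_KEY environment variable.
client = anthropic.Anthropic()

report_text = "..."  # placeholder: load the quarterly report text here

message = client.messages.create(
    model="claude-3-5-sonnet-20240620",  # swap in a current model ID as needed
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": f"Analyze this quarterly report and highlight key insights.\n\n{report_text}",
        }
    ],
)

# message.content is a list of content blocks; print only the text blocks.
print("".join(block.text for block in message.content if block.type == "text"))
```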

Starting with simple API calls helps you understand the basics before moving on to more complex integrations. I recommend building small proof-of-concept applications that demonstrate key capabilities, such as code execution or MCP connections.

For developers interested in exploring OpenAI Assistants API features alongside Anthropic's offerings, comparing the approaches can provide valuable insights into different implementation strategies.

Resources for Continued Learning

Anthropic Resources

- Official API Documentation

- Claude Cookbook

- Developer Forum

- Sample Projects Repository

Fal.ai Resources

- API Reference

- Quickstart Guides

- Model Documentation

- Example Applications

Staying current with API updates and new features is essential in the rapidly evolving AI landscape. I recommend subscribing to both Anthropic's and Fal.ai's developer newsletters and joining their respective community forums to keep up with the latest developments.

Remember that building with these powerful APIs is an iterative process. Start small, experiment frequently, and gradually incorporate more advanced features as you become comfortable with the basics. With the right approach, you can create truly intelligent applications that leverage the best of both Anthropic's language capabilities and Fal.ai's visual processing prowess.


Conclusion: The Future of Intelligent Applications

As we've explored throughout this guide, the combination of Anthropic's Claude API and Fal.ai's visual processing capabilities opens up remarkable possibilities for intelligent application development. These powerful APIs enable developers to create sophisticated applications with minimal infrastructure overhead.

The recent additions to Anthropic's API ecosystem—code execution, MCP connector, Files API, and extended prompt caching—further expand what's possible, allowing for more complex, capable, and cost-effective AI applications.

By adopting the architectural patterns and implementation approaches we've discussed, you can build applications that not only leverage these powerful APIs effectively but also remain adaptable to future advancements in AI technology.

I'm excited to see what you'll build with these tools. Whether you're creating intelligent document processors, advanced data analysis applications, or conversational agents with enhanced capabilities, the combination of Anthropic and Fal.ai APIs provides the foundation you need to bring your vision to life.
