Foundation Model Selection: Visualizing the Differences Between GPT, Llama, and Mistral

Understanding Foundation Models in Today's AI Landscape

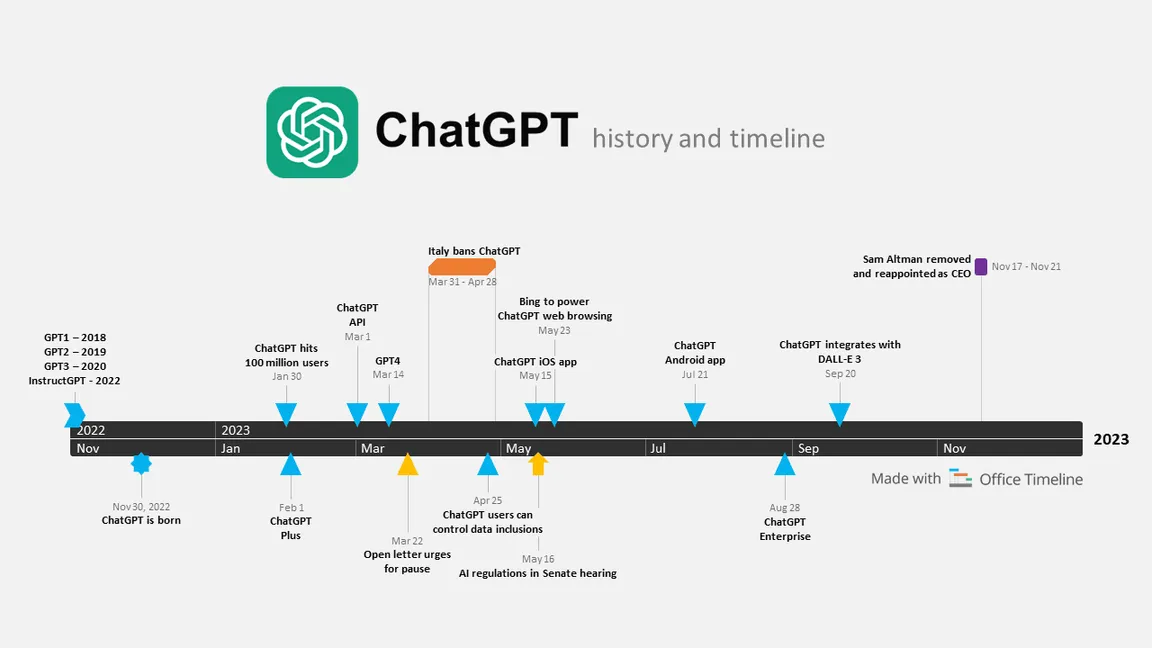

I've been fascinated by the rapid evolution of large language models (LLMs) over the past few years. These foundation models have fundamentally transformed how we approach AI applications, from simple chatbots to complex enterprise solutions. As someone who works closely with these technologies, I've observed firsthand how the competitive landscape between leading models has intensified in 2025.

When I talk to clients about foundation models, I often find they're confused about the differences between proprietary solutions like GPT and open-source alternatives like Llama and Mistral (strictly speaking, Llama's weights are released under Meta's own community license rather than a standard open-source license). The distinctions go well beyond licensing and extend to architecture, parameter count, and practical implementation considerations.

Foundation Model Landscape 2025

Model Architecture Comparison

flowchart TD

subgraph "Foundation Models"

GPT["GPT Models\n(Proprietary)"]

Llama["Llama Models\n(Open Source)"]

Mistral["Mistral Models\n(Efficient Design)"]

end

subgraph "Key Characteristics"

P["Parameter Count"]

A["Architecture"]

L["Licensing"]

R["Resource Requirements"]

C["Customization Options"]

end

GPT --> P

Llama --> P

Mistral --> P

GPT --> A

Llama --> A

Mistral --> A

GPT --> L

Llama --> L

Mistral --> L

GPT --> R

Llama --> R

Mistral --> R

GPT --> C

Llama --> C

Mistral --> C

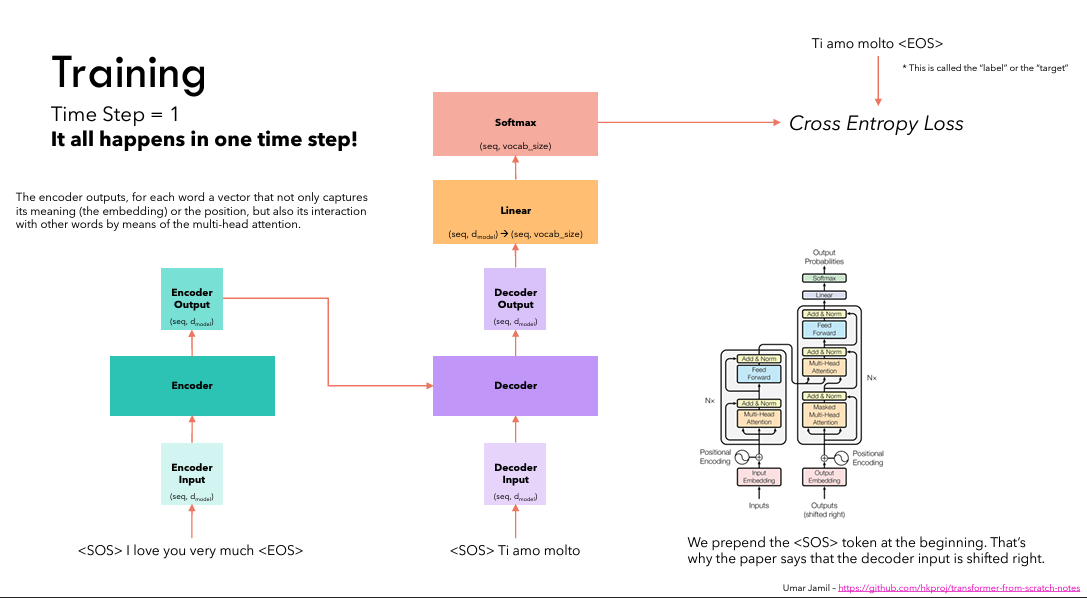

As an AI professional, I've found that visualization tools are invaluable when helping decision-makers understand these complex differences. When I need to explain the nuances between transformer architectures or parameter efficiency, I use ChatGPT to draft initial explanations, then use visual tools to turn these concepts into clear, actionable insights.

Throughout this guide, I'll share the visual frameworks I've developed to help organizations make informed decisions about which foundation model best suits their specific needs, constraints, and ambitions.

GPT Models: Capabilities and Considerations

In my work with enterprise clients, I've seen OpenAI's GPT models evolve from impressive but limited tools to sophisticated AI systems capable of handling incredibly complex tasks. The evolution from GPT-3 to the latest iterations has been remarkable, with each generation bringing significant improvements in understanding, reasoning, and specialized capabilities.

GPT Evolution Timeline

One of the most striking aspects of GPT models is their sheer scale. When I explain the computational requirements to clients, many are surprised by the infrastructure needed to train and run these models at full capacity. This visualization helps illustrate the parameter size and computational demands:

Parameter Size Comparison

In my experience, GPT models excel in natural language understanding and generation tasks. When I've implemented these models for clients in customer service applications, they've consistently outperformed other options in handling nuanced queries and generating human-like responses. However, this performance comes with trade-offs.

GPT Models: Key Considerations

- Proprietary Nature: Closed source with limited customization options

- Cost Structure: Usage-based pricing that can scale significantly with volume

- API Access: Simple integration but potential rate limiting and dependency concerns

- Data Privacy: Potential concerns about data usage for model improvement

- Versioning: Limited control over model updates and changes
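
The rate-limiting concern in the list above is usually handled on the client side with retries. The following is a minimal sketch of exponential backoff with jitter, not any provider's official client: `RateLimitError` and `call_with_backoff` are hypothetical names introduced for illustration, and a real integration would catch whatever error type the chosen SDK actually raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's rate-limit error."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            # Wait base_delay * 2^attempt, plus random jitter so that many
            # clients do not all retry at the same instant.
            delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)
    raise RuntimeError("unreachable")
```

The `sleep` parameter is injectable only so the wrapper can be tested without real waiting. Backoff softens rate limits but does not remove the dependency concern: if the API is unavailable for longer than the retry budget, the call still fails.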

When comparing GPT to traditional search approaches, I've found that its contextual understanding provides significant advantages for certain use cases. For more details on this comparison, I've written about the differences between ChatGPT and traditional search engines and how they complement each other in information retrieval workflows.

Llama: Meta's Open-Source Alternative

In my work with startups and research teams, I've seen growing interest in Meta's Llama models. What fascinates me about Meta's approach is their strategic decision to embrace open-source AI development while still producing highly competitive models. This has created exciting possibilities for organizations that need more control over their AI infrastructure.

Llama's Architecture Overview

One of the most impressive aspects of Llama models is their parameter efficiency. When I've deployed these models for specialized applications, I've been able to achieve performance comparable to much larger proprietary models while using significantly fewer computational resources. According to recent model comparisons, Llama models are closing the performance gap with proprietary options while offering much more flexibility.

Deployment Options Comparison

In my experience implementing Llama models, I've found they offer significant advantages for specialized industry applications. For example, when I worked with a healthcare organization that needed to process sensitive patient data, we were able to customize and deploy a Llama model on-premises, ensuring complete data sovereignty while still providing sophisticated AI capabilities.

Llama Self-Hosting Requirements

flowchart TD

Start["Self-Hosting Llama"] --> Hardware

Hardware --> CPU["CPU Options"]

Hardware --> GPU["GPU Requirements"]

Hardware --> Memory["Memory Needs"]

CPU --> CPUMin["Minimum: 8+ Core\nModern CPU"]

CPU --> CPURec["Recommended: 16+ Core\nServer-Grade CPU"]

GPU --> GPUMin["Minimum: 1x RTX 3090\nor Equivalent (24GB VRAM)"]

GPU --> GPURec["Recommended: Multiple\nA100/H100 GPUs"]

Memory --> MemMin["Minimum: 32GB RAM"]

Memory --> MemRec["Recommended: 64GB+\nRAM for 70B Model"]

Software --> Quantization["Quantization Options:\n- GGUF Format\n- 4-bit, 5-bit, 8-bit"]

Software --> Frameworks["Deployment Frameworks:\n- llama.cpp\n- vLLM\n- Hugging Face"]

Software --> API["API Layer:\n- Text Generation UI\n- LangChain\n- Custom REST API"]

Start --> Software

Costs --> ComputeCost["Compute Costs:\n$2K-$10K+ Initial\n+ Ongoing Power"]

Costs --> MaintenanceCost["Maintenance:\nUpdates, Security,\nScaling"]

Start --> Costs
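
To make the GPU and quantization figures in the diagram more concrete, here is a back-of-the-envelope sketch of how much memory the model weights alone occupy at different precisions. It assumes memory is simply parameter count times bits per weight; it ignores the KV cache, activations, and framework overhead, so real requirements will be higher. The function name is illustrative, not part of any library.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes.

    Assumption: every parameter is stored at the same precision, with no
    overhead for KV cache, activations, or quantization metadata.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for label, size in [("7B", 7), ("70B", 70)]:
        for bits in (16, 8, 4):
            gb = estimate_weight_memory_gb(size, bits)
            print(f"{label} model at {bits}-bit: ~{gb:.1f} GB for weights")
```

Under these assumptions a 7B model needs about 14 GB at 16-bit, which fits on a single 24GB card, while a 70B model needs about 35 GB even at 4-bit, which is why the larger models call for multiple GPUs or more aggressive quantization.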

For organizations considering implementing their own foundation model infrastructure, I recommend creating a comprehensive MCP implementation roadmap that accounts for both the technical and organizational aspects of deployment. This structured approach has helped my clients avoid common pitfalls when transitioning to self-hosted AI solutions.

Mistral: The Efficient French Contender

I've been particularly impressed by Mistral AI's rapid rise in the foundation model space. As someone who works with organizations that have varying computational resources, I appreciate how Mistral has prioritized efficiency without sacrificing performance. Their approach has made sophisticated AI capabilities accessible to a much broader range of organizations.

Mistral's Efficiency Breakthrough

What makes Mistral particularly noteworthy is how it achieves competitive performance with significantly fewer parameters than many rivals. When I first deployed Mistral models for clients with limited GPU resources, I was skeptical about whether they could handle complex tasks. The results were impressive—Mistral Large 2, with its 123B parameters, performed at a level comparable to models twice its size.

Efficiency Comparison: Performance vs. Resource Requirements

In my consulting work, I've found that Mistral models are particularly well-suited for organizations that need to balance sophisticated AI capabilities with practical resource constraints. The licensing is another advantage—Mistral offers both open models under Apache 2.0 and proprietary options with commercial support, giving organizations flexibility as their needs evolve.

Mistral Models: Key Advantages

- Remarkable Efficiency: Competitive performance with fewer parameters

- Flexible Deployment: Options for both cloud API access and self-hosting

- Lower Resource Requirements: Smaller models can run on consumer-grade hardware

- Mixed Licensing: Both open and commercial options available

- Strong Technical Support: Growing ecosystem and community resources

When evaluating Mistral against other options, I often create detailed SWOT analysis templates to help clients understand the strategic implications of their model choice. This structured approach helps identify where Mistral's efficiency advantages align with specific organizational needs and constraints.

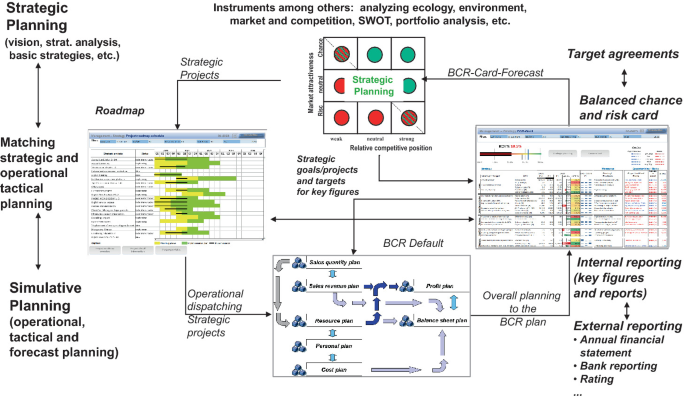

Decision Framework: Selecting the Right Foundation Model

Based on my experience implementing foundation models across different organizations, I've developed a visual decision framework to guide the selection process. This framework helps my clients navigate the complex trade-offs between performance, cost, flexibility, and other critical factors.

Foundation Model Decision Tree

flowchart TD

Start["Foundation Model Selection"] --> Q1{"Data Privacy\nRequirements?"}

Q1 -->|"High (On-Premises Required)"| Q2{"Available\nCompute Resources?"}

Q1 -->|"Standard (Cloud OK)"| Q3{"Budget\nConstraints?"}

Q2 -->|"Enterprise-Grade\n(Multiple GPUs)"| A1["Consider: Llama 2 70B\nor Mistral Large 2"]

Q2 -->|"Limited\n(Single GPU)"| A2["Consider: Mistral 7B\nor Llama 2 7B"]

Q3 -->|"Minimal Concerns"| Q4{"Performance\nPriority?"}

Q3 -->|"Cost-Sensitive"| Q5{"Customization\nNeeded?"}

Q4 -->|"Maximum Performance"| A3["Consider: GPT-4\nor Claude 3 Opus"]

Q4 -->|"Balanced Performance"| A4["Consider: GPT-3.5\nor Mistral Large API"]

Q5 -->|"High Customization"| A5["Consider: Mistral API\nor Fine-tuned Llama"]

Q5 -->|"Minimal Customization"| A6["Consider: GPT-3.5\nor Mistral Medium"]

subgraph "Key Factors"

F1["Privacy & Data Sovereignty"]

F2["Available Computing Resources"]

F3["Budget Allocation"]

F4["Performance Requirements"]

F5["Customization Needs"]

end
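
The decision tree above can also be written as a small function, which makes the branching explicit and easy to adapt. This is a sketch that mirrors the diagram's questions and answers one-to-one; the `Requirements` fields and the `recommend` function are hypothetical names, and the returned strings are the diagram's own suggestions rather than a definitive recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    on_premises_required: bool        # Q1: high data privacy requirements?
    multi_gpu: bool = False           # Q2: enterprise-grade compute available?
    cost_sensitive: bool = False      # Q3: budget constraints?
    max_performance: bool = False     # Q4: is maximum performance the priority?
    high_customization: bool = False  # Q5: is heavy customization needed?


def recommend(req: Requirements) -> str:
    """Walk the decision tree and return the diagram's suggestion."""
    if req.on_premises_required:
        if req.multi_gpu:
            return "Llama 2 70B or Mistral Large 2"
        return "Mistral 7B or Llama 2 7B"
    if not req.cost_sensitive:
        if req.max_performance:
            return "GPT-4 or Claude 3 Opus"
        return "GPT-3.5 or Mistral Large API"
    if req.high_customization:
        return "Mistral API or fine-tuned Llama"
    return "GPT-3.5 or Mistral Medium"


if __name__ == "__main__":
    clinic = Requirements(on_premises_required=True, multi_gpu=False)
    print(recommend(clinic))
```

Encoding the tree this way keeps the questions in a fixed order, so a change in priorities (for example, asking about budget before privacy) becomes a visible code change rather than an unstated assumption.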

When I work with clients on model selection, I emphasize that there's rarely a one-size-fits-all solution. Instead, I help them evaluate their specific requirements across several dimensions:

| Selection Factor | GPT Models | Llama Models | Mistral Models |
|---|---|---|---|
| Resource Requirements | API only (no self-hosting) | High (24-80GB VRAM for larger models) | Moderate (8-24GB VRAM for efficient models) |
| Cost Structure | Pay-per-token API pricing | Upfront hardware + ongoing operation | Mixed (API or self-hosted options) |
| Customization | Limited (fine-tuning options only) | Extensive (full model access) | Moderate to Extensive |
| Data Privacy | External API (some privacy options) | Full control (self-hosted) | Full control (self-hosted) or API |
| Implementation Complexity | Low (simple API integration) | High (infrastructure setup required) | Moderate (varies by deployment) |

I've found that industry-specific requirements often play a crucial role in model selection. For example, when I worked with healthcare organizations, data privacy and regulatory compliance were non-negotiable, making self-hosted models like Llama or Mistral the only viable options despite their higher implementation complexity.

For organizations evaluating alternatives to their current knowledge management systems, I often recommend exploring how foundation models can be integrated with Notion alternatives to create more intelligent, context-aware information systems. This hybrid approach can combine the strengths of structured knowledge management and AI-powered insights.

Performance Benchmarks: Visual Comparisons

When advising clients on model selection, I find that abstract discussions about model capabilities are far less effective than concrete performance comparisons. That's why I've compiled benchmark data across standard evaluation metrics as well as real-world tasks that matter to businesses.

Standard Benchmark Performance

While academic benchmarks provide useful standardized comparisons, I've learned that real-world task performance often matters more to organizations. Based on my implementation experience across different industries, I've compiled this practical performance comparison:

Real-World Task Performance

Another critical factor I consider when advising clients is the cost efficiency of different models. This analysis helps organizations understand the performance-to-cost ratio they can expect:

Cost Efficiency Analysis
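
One simple way to reason about cost efficiency is a break-even estimate between pay-per-token API pricing and self-hosting. The sketch below shows only the arithmetic. Every number in the example (token volume, price per million tokens, hardware and operating costs) is a made-up placeholder, not a quoted price, and the function names are illustrative.

```python
from typing import Optional


def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Monthly spend under usage-based pricing."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def breakeven_months(
    hardware_cost: float,
    monthly_operating_cost: float,
    monthly_api: float,
) -> Optional[float]:
    """Months until upfront hardware pays for itself versus the API.

    Returns None when self-hosting never breaks even, i.e. when the monthly
    operating cost is at least as high as the API bill.
    """
    monthly_saving = monthly_api - monthly_operating_cost
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


if __name__ == "__main__":
    # Placeholder inputs for illustration only.
    api = monthly_api_cost(tokens_per_month=200_000_000, price_per_million_tokens=10.0)
    months = breakeven_months(hardware_cost=10_000, monthly_operating_cost=300, monthly_api=api)
    print(f"API cost per month: ${api:,.0f}")
    print("No break-even" if months is None else f"Break-even after {months:.1f} months")
```

With these placeholder inputs the API bill is $2,000 per month and the hardware pays for itself in roughly 5.9 months. At low volumes the function returns `None`, which reflects the common case where an API remains cheaper than owning hardware. The estimate leaves out staff time and maintenance, which can dominate in practice.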

In my experience, the most effective approach is to evaluate models based on the specific tasks that matter most to your organization. For example, if your primary use case is customer support automation, focusing on benchmarks related to contextual understanding and response quality will be more valuable than general coding ability metrics.
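
A task-focused evaluation like this can start very small. The following sketch scores candidate models against a handful of prompts drawn from the target use case, using a crude keyword check as the quality signal. The test cases and the two stub "models" are invented for illustration; in practice each stub would be replaced by a call to a real model, and the keyword check by a rubric or human review.

```python
from typing import Callable

Model = Callable[[str], str]  # any function that maps a prompt to a response


def keyword_score(model: Model, cases: list[tuple[str, str]]) -> float:
    """Fraction of cases whose response contains the expected keyword."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(
        1 for prompt, keyword in cases
        if keyword.lower() in model(prompt).lower()
    )
    return hits / len(cases)


# Hypothetical customer-support cases: (prompt, keyword a good answer mentions).
SUPPORT_CASES = [
    ("How do I reset my password?", "reset link"),
    ("Can I get a refund?", "refund policy"),
]


def stub_model_a(prompt: str) -> str:
    if "password" in prompt:
        return "We will email you a reset link."
    return "Please contact us."


def stub_model_b(prompt: str) -> str:
    if "password" in prompt:
        return "Use the reset link we send you."
    return "See our refund policy for details."


if __name__ == "__main__":
    for name, model in [("model_a", stub_model_a), ("model_b", stub_model_b)]:
        print(name, keyword_score(model, SUPPORT_CASES))
```

Because every candidate is just a prompt-to-response function, the same cases can be rerun unchanged whenever a new model is considered.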

Implementation Considerations and Future-Proofing

When I guide organizations through foundation model implementation, I emphasize that the technical infrastructure is just one piece of the puzzle. Successful deployment requires careful planning across multiple dimensions, from hardware requirements to integration strategies.

Infrastructure Requirements Comparison

One of the most challenging aspects of foundation model implementation is planning for scalability. I've created this visual framework to help my clients understand how their infrastructure needs will evolve as usage grows:

Scaling Considerations Framework

flowchart TD

Start["Foundation Model\nImplementation"] --> Planning

Planning --> ResourcePlanning["Resource Planning"]

Planning --> IntegrationStrategy["Integration Strategy"]

Planning --> ScalingStrategy["Scaling Strategy"]

ResourcePlanning --> ComputeResources["Compute Resources"]

ResourcePlanning --> StorageNeeds["Storage Requirements"]

ResourcePlanning --> NetworkCapacity["Network Capacity"]

IntegrationStrategy --> APILayer["API Layer Design"]

IntegrationStrategy --> SecurityModel["Security & Authentication"]

IntegrationStrategy --> MonitoringSystem["Monitoring & Observability"]

ScalingStrategy --> HorizontalScaling["Horizontal Scaling"]

ScalingStrategy --> VerticalScaling["Vertical Scaling"]

ScalingStrategy --> LoadBalancing["Load Balancing"]

ComputeResources --> GPUCluster["GPU Cluster (Self-Hosted)"]

ComputeResources --> CloudResources["Cloud Provider Resources"]

ComputeResources --> HybridApproach["Hybrid Approach"]

HorizontalScaling --> MultipleInstances["Multiple Model Instances"]

HorizontalScaling --> ShardingStrategy["Request Sharding"]

VerticalScaling --> LargerGPUs["Larger/More GPUs"]

VerticalScaling --> OptimizedInference["Optimized Inference"]

VerticalScaling --> ModelQuantization["Model Quantization"]

Integration complexity is another critical factor I consider when advising clients. Based on my experience implementing different models across various organizational contexts, I've developed this assessment framework:

Integration Complexity Assessment

Future-proofing is another critical consideration I discuss with clients. The foundation model landscape is evolving rapidly, and organizations need strategies to maintain flexibility as new models and capabilities emerge. I recommend creating a visual implementation timeline that includes regular reassessment points:

Implementation Timeline with Reassessment Points

In my experience, the most successful implementations are those that build in flexibility from the beginning. By designing systems with modular components and clear APIs between layers, organizations can more easily swap out foundation models as better options emerge without disrupting their entire AI infrastructure.
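
One way to get this kind of modularity is to have application code depend on a narrow interface rather than on a specific vendor client. The sketch below uses a Python `Protocol` for that purpose. `TextModel`, the two stub backends, and `SupportAssistant` are all hypothetical names, and the stubs return canned strings instead of calling a real model.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only contract the application layer relies on."""

    def generate(self, prompt: str) -> str: ...


class HostedModelStub:
    """Stand-in for a backend that would call a hosted API."""

    def generate(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class SelfHostedModelStub:
    """Stand-in for a backend that would call a self-hosted model."""

    def generate(self, prompt: str) -> str:
        return f"[self-hosted] {prompt}"


class SupportAssistant:
    """Application logic that never names a concrete model."""

    def __init__(self, model: TextModel) -> None:
        self._model = model

    def answer(self, question: str) -> str:
        return self._model.generate(f"Customer question: {question}")


if __name__ == "__main__":
    # Swapping the backend is a one-line change at construction time.
    for backend in (HostedModelStub(), SelfHostedModelStub()):
        print(SupportAssistant(backend).answer("Where is my order?"))
```

The design choice here is that prompt construction lives in the application layer and transport lives in the backend, so replacing one foundation model with another does not touch business logic.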

Case Studies: Foundation Models in Action

Throughout my consulting work, I've had the opportunity to help organizations across various industries implement foundation models. These real-world examples illustrate how different models can be applied to solve specific business challenges.

Healthcare: Patient Support System

A major healthcare provider needed to enhance their patient support capabilities while maintaining strict data privacy. We implemented a self-hosted Llama model that processed sensitive patient queries without exposing data to external APIs.

Financial Services: Document Analysis

A financial institution needed to process thousands of complex financial documents daily. GPT-4's advanced reasoning capabilities proved ideal for extracting structured data from unstructured text.

Manufacturing: Technical Support

A manufacturing company needed to provide 24/7 technical support for their equipment. Mistral's efficient models allowed them to deploy AI assistance even at remote sites with limited connectivity.

Education: Personalized Learning

An educational technology company needed to provide personalized learning experiences at scale. They implemented a hybrid approach using both GPT and Mistral models for different aspects of their platform.

ROI Comparison Across Case Studies

One of the most valuable lessons I've learned from these implementations is the importance of clear visual documentation throughout the process. By using PageOn.ai to create detailed diagrams of the implementation architecture, data flows, and integration points, we were able to ensure all stakeholders had a shared understanding of the system.

Before/After Workflow Comparison

These case studies demonstrate that the "right" foundation model depends entirely on the specific requirements and constraints of each organization. By carefully assessing needs and aligning them with model capabilities, we've consistently achieved impressive results across diverse use cases.

Conclusion: Creating Your Foundation Model Strategy

After working with dozens of organizations on foundation model implementation, I've found that success depends on developing a clear, visually documented strategy that balances current needs with future flexibility. The foundation model landscape will continue to evolve rapidly, and organizations need to position themselves to take advantage of new capabilities as they emerge.

Decision Framework Template

flowchart TD

Start["Your Foundation\nModel Strategy"] --> Assess

Assess --> UseCase["Identify Primary\nUse Cases"]

Assess --> Resources["Evaluate Available\nResources"]

Assess --> Constraints["Document Key\nConstraints"]

UseCase --> UC1["Content Generation"]

UseCase --> UC2["Data Analysis"]

UseCase --> UC3["Customer Interaction"]

UseCase --> UC4["Creative Tasks"]

UseCase --> UC5["Code Generation"]

Resources --> R1["Budget"]

Resources --> R2["Technical Expertise"]

Resources --> R3["Infrastructure"]

Resources --> R4["Timeline"]

Constraints --> C1["Data Privacy"]

Constraints --> C2["Performance Needs"]

Constraints --> C3["Customization Requirements"]

Constraints --> C4["Integration Points"]

Assess --> ModelSelection["Model Selection"]

ModelSelection --> GPT["GPT Family"]

ModelSelection --> Llama["Llama Family"]

ModelSelection --> Mistral["Mistral Family"]

ModelSelection --> Hybrid["Hybrid Approach"]

ModelSelection --> Implementation["Implementation Plan"]

Implementation --> Pilot["Pilot Project"]

Implementation --> Evaluation["Evaluation Metrics"]

Implementation --> Scaling["Scaling Strategy"]

Implementation --> Reassessment["Regular Reassessment"]

Based on my experience, I recommend these key steps for developing your foundation model strategy:

Strategy Development Framework

- Conduct a thorough needs assessment: Document specific use cases, performance requirements, and constraints

- Create a visual decision framework: Map your requirements to model capabilities

- Consider hybrid approaches: Different models may be optimal for different tasks

- Build in flexibility: Design systems with modular components that can be updated

- Establish clear metrics: Define how you'll measure success

- Plan for regular reassessment: Schedule quarterly reviews of model performance and new options

- Document everything visually: Ensure all stakeholders share a common understanding

For organizations considering hybrid approaches, I've found that maintaining clear visual documentation of the overall architecture is essential. PageOn.ai has been an invaluable tool for my clients, helping them create and maintain comprehensive visual documentation of their AI strategy that can be easily updated as requirements evolve and new models emerge.

Visual Reassessment Timeline

By following this approach, organizations can make informed decisions about which foundation model—GPT, Llama, Mistral, or a combination—best suits their specific needs while maintaining the flexibility to adapt as the AI landscape continues to evolve.
