Running Open-Source Image AI Models Locally

A Complete Guide for Visual Creators

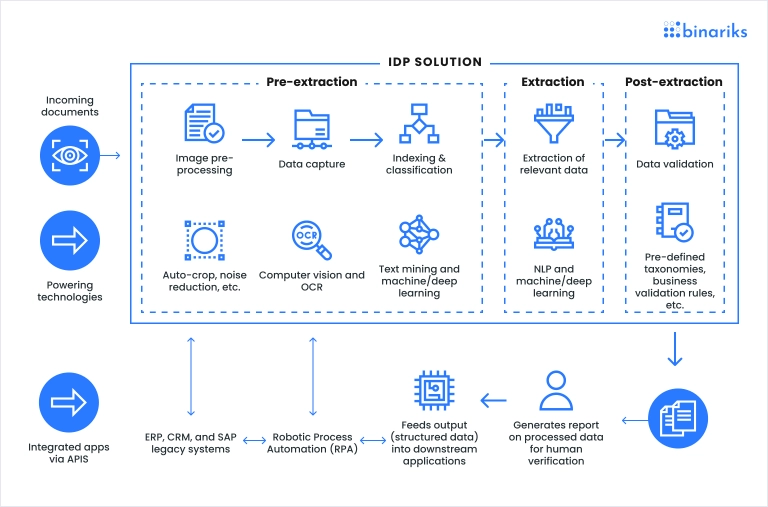

Understanding the Power of Local Image AI Processing

I've been exploring the world of AI image generation for years, and I can confidently say that running these models locally on my own computer has completely transformed my creative workflow. When I first started, I relied heavily on cloud-based solutions, but the limitations quickly became apparent.

Key Benefits of Local Processing

- Complete Data Privacy - My images and prompts never leave my computer

- No Subscription Fees - One-time hardware investment instead of ongoing costs

- Full Customization - Freedom to modify models for my specific needs

- Offline Functionality - Create anywhere without internet dependency

Cloud vs. Local: Making the Right Choice

When comparing cloud-based and local image AI solutions, I've found that each has its place depending on your creative needs. Cloud solutions offer convenience and require minimal setup, but local processing gives you unparalleled control and privacy.

    flowchart TD
        A[Image Generation Need] --> B{Hardware Available?}
        B -->|Yes: Powerful GPU| C[Local Processing]
        B -->|Limited or No GPU| D[Cloud Processing]
        C --> E[Complete Control & Privacy]
        C --> F[One-time Investment]
        C --> G[Customization Options]
        D --> H[Accessibility Anywhere]
        D --> I[No Hardware Requirements]
        D --> J[Subscription Costs]

I've found that organizing my locally generated assets can become challenging as my collection grows. This is where I use PageOn.ai to create visual organizational structures that help me track different styles, prompts, and outputs. The visual nature of PageOn makes it perfect for creative workflows where seeing relationships between elements matters.

Hardware Requirements for Smooth Image Generation

From my experience, the hardware you use dramatically impacts your local AI image generation experience. I've tested various setups and found that while you don't need a supercomputer, certain specifications make the process much more enjoyable.

[Chart: Hardware Component Importance for AI Image Generation]

Essential Components for Efficient AI Image Generation

| Component | Entry Level | Recommended | Professional |
|---|---|---|---|
| GPU | NVIDIA RTX 3050 (6GB VRAM) | NVIDIA RTX 3070/4060 (8GB+ VRAM) | NVIDIA RTX 4080/4090 (16GB+ VRAM) |
| CPU | Intel i5/Ryzen 5 (recent gen) | Intel i7/Ryzen 7 | Intel i9/Ryzen 9 |
| RAM | 16GB DDR4 | 32GB DDR4/DDR5 | 64GB+ DDR5 |
| Storage | 500GB SSD | 1TB NVMe SSD | 2TB+ NVMe SSD |
| Cooling | Stock + Good Airflow | Enhanced Air Cooling | Liquid Cooling |

I started with a modest setup similar to the entry-level configuration and was able to run smaller models effectively. As I became more invested in AI image creation, upgrading my GPU made the biggest difference in both speed and the size of models I could run.

Budget-Friendly Tip

If you're on a tight budget, I recommend prioritizing GPU VRAM over other specs. Even an older CPU with a decent modern GPU (minimum 6GB VRAM) will outperform a cutting-edge CPU with integrated graphics for AI image generation tasks.

When working with locally generated images, I've found that organizing my workflows visually helps me maximize my hardware's potential. Open source AI image generators can produce thousands of variations quickly, and I use PageOn.ai's AI Blocks to create visual maps of which parameter combinations work best on my specific hardware configuration.

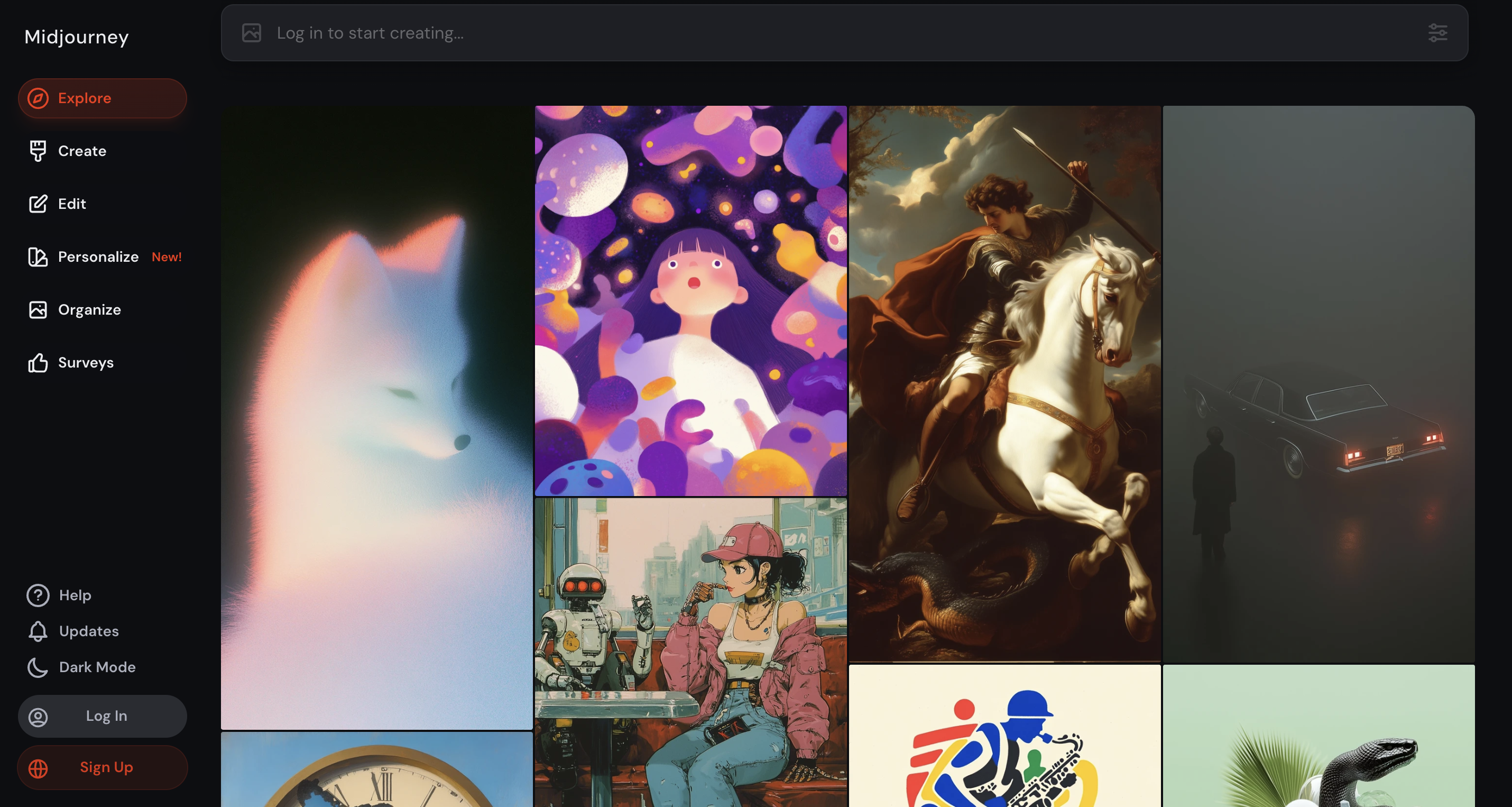

Popular Open-Source Image Models Worth Exploring

The landscape of open-source image AI models is constantly evolving. I've experimented with dozens of models over the past few years, and I'm continually impressed by how quickly the technology advances. Here's my overview of the most impactful models you should consider for your local setup.

The Stable Diffusion Ecosystem

Stable Diffusion has been the backbone of the open-source image generation community. I've watched it evolve from the groundbreaking SD 1.x releases through multiple iterations, each bringing significant improvements.

What I find most valuable about the SD ecosystem is the vibrant community that has created specialized versions for different artistic styles and use cases. From photorealistic renders to anime-style illustrations, there's likely a fine-tuned SD model that excels at your preferred aesthetic.

Flux.1 and Emerging Alternatives

As Stability AI has faced challenges, new models like Flux.1 have emerged as powerful alternatives. Flux.1 comes from Black Forest Labs, a company founded by researchers who worked on the original Stable Diffusion, and it brings a fresh approach to image generation.

In my testing, I've found that open source AI tools like Flux.1 often excel at different types of imagery compared to Stable Diffusion. For example, some newer models handle complex scenes with multiple subjects more coherently, while others might specialize in particular artistic styles.

[Chart: Model Capability Comparison]

Finding the Right Balance: Model Size vs. Quality

One of the most important decisions I've had to make when running models locally is choosing between full-size and quantized (compressed) versions. This choice directly impacts both image quality and generation speed.

| Model Type | VRAM Usage | Quality Impact | Best For |
|---|---|---|---|
| Full Size (FP32/FP16) | 8GB-12GB+ | Maximum Quality | Professional work, high-end GPUs |
| Pruned (No EMA) | 6GB-8GB | Slight Reduction | Mid-range GPUs, balanced approach |
| Quantized (8-bit) | 4GB-6GB | Moderate Reduction | Entry-level GPUs, laptops |
| Quantized (4-bit) | 2GB-4GB | Significant Reduction | Integrated graphics, testing |
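The VRAM ranges above follow roughly from simple arithmetic: parameter count times bytes per weight. Here's a back-of-the-envelope sketch of that calculation. The function names, the 0.8 headroom factor, and the example parameter count are my own illustrative assumptions, and real usage will be higher because activations, the VAE, and text encoders also need memory.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: memory = parameters * bits_per_weight / 8 bytes. Activations,
# text encoders, and the VAE add overhead that is not modeled here.

BITS_PER_WEIGHT = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in GiB."""
    bits = BITS_PER_WEIGHT[precision]
    return num_params * bits / 8 / (1024 ** 3)


def fits_in_vram(num_params: float, precision: str, vram_gib: float,
                 headroom: float = 0.8) -> bool:
    """Check whether the weights fit within a fraction of the available VRAM.

    `headroom` is a hypothetical safety factor that leaves room for activations.
    """
    return weight_memory_gib(num_params, precision) <= vram_gib * headroom


if __name__ == "__main__":
    params = 2.6e9  # illustrative parameter count, not tied to a specific model
    for precision in BITS_PER_WEIGHT:
        print(f"{precision}: {weight_memory_gib(params, precision):.2f} GiB")
```

Halving the bits per weight halves the weight memory, which is why each step down the table frees up roughly half the VRAM of the row above it.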

When I'm looking for reference images to guide my AI generation prompts, I use PageOn.ai's Deep Search functionality to find and integrate perfect examples. This visual approach to prompt engineering has dramatically improved my results, especially when working with more specialized models that require precise guidance.

Setting Up Your Local Environment

Setting up your local environment for AI image generation can seem daunting at first, but I've developed a streamlined process after numerous installations across different systems. Here's my step-by-step approach that works reliably across operating systems.

Installation Workflow

    flowchart TD
        A[Prepare System] -->|Install Dependencies| B[Install Python & Git]
        B --> C[Install CUDA & GPU Drivers]
        C --> D[Clone UI Repository]
        D --> E{Choose UI}
        E -->|Option 1| F[Automatic1111]
        E -->|Option 2| G[ComfyUI]
        E -->|Option 3| H[Forge]
        F & G & H --> I[Configure Model Storage]
        I --> J[Download Base Models]
        J --> K[Test Installation]

Operating System-Specific Installation Tips

Windows

- Install latest NVIDIA drivers

- Use Python 3.10.x (not 3.11+)

- Install Git for Windows

- Use PowerShell as administrator

- Consider Windows WSL2 for Linux-based UIs

macOS

- Use Homebrew for dependencies

- M1/M2 Macs: Use MPS acceleration

- Install Xcode Command Line Tools

- Use Python virtual environments

- Consider smaller models for Apple Silicon

Linux

- Install CUDA toolkit for your distro

- Use distribution package manager

- Check GPU compatibility with nvidia-smi

- Consider Docker containers

- Set up proper VRAM allocation
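Several of the checks in these lists (Python version, Git availability, GPU detection with nvidia-smi) can be scripted before you start installing anything. The sketch below is one way to do it with only the standard library. The function names are my own, and the 3.10 requirement is taken from the Windows tip above, so adjust it if your chosen UI documents a different version. On machines without an NVIDIA GPU, such as Macs, the GPU query simply returns an empty list.

```python
import shutil
import subprocess
import sys


def python_version_ok(version=None, required=(3, 10)) -> bool:
    """True when the interpreter's major.minor matches the required pair."""
    major, minor = (version or sys.version_info)[:2]
    return (major, minor) == required


def parse_nvidia_smi(csv_text: str) -> list[tuple[str, int]]:
    """Parse `name, memory.total` CSV rows into (gpu_name, vram_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact.
        name, _, mem = line.rpartition(",")
        if name and mem.strip().isdigit():
            gpus.append((name.strip(), int(mem.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Ask nvidia-smi for GPU names and total VRAM; empty list if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True, timeout=10)
    except (subprocess.SubprocessError, OSError):
        return []
    return parse_nvidia_smi(result.stdout)


if __name__ == "__main__":
    print("Python 3.10.x:", python_version_ok())
    print("git on PATH:", shutil.which("git") is not None)
    for name, mib in query_gpus():
        print(f"{name}: {mib / 1024:.1f} GiB VRAM")
```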

Managing Model Storage Efficiently

One of the biggest challenges I faced when I started using multiple interfaces was managing model storage efficiently. AI image models can be massive—some exceeding 10GB—and duplicating them across different UI folders wastes valuable SSD space.

My solution was to create a centralized model repository structure that all UIs can access. This approach has saved me hundreds of gigabytes of storage and made updating models much simpler.
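Before consolidating, it helps to know which model files are actually duplicated across UI folders. Here's a minimal sketch that groups identical files by size and then by SHA-256 hash. The function names and the list of file extensions are my own assumptions, not part of any UI, and symlinked files will show up as duplicates of their targets.

```python
import hashlib
from collections import defaultdict
from pathlib import Path

# Extensions commonly used for model weights (an assumption; extend as needed).
MODEL_SUFFIXES = {".safetensors", ".ckpt", ".pt", ".pth"}


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte models are never fully in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        while chunk := handle.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicate_models(roots: list[Path]) -> list[list[Path]]:
    """Group model files with identical content found under the given folders."""
    by_size = defaultdict(list)
    for root in roots:
        for path in root.rglob("*"):
            if path.is_file() and path.suffix.lower() in MODEL_SUFFIXES:
                by_size[path.stat().st_size].append(path)

    duplicates = []
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue  # a unique size cannot have a duplicate, so skip hashing
        by_hash = defaultdict(list)
        for path in same_size:
            by_hash[file_digest(path)].append(path)
        duplicates.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return duplicates
```

Comparing sizes first avoids hashing most files, which matters when each checkpoint is several gigabytes.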

Example Shared Folder Structure

    /AI_Models
        /checkpoints
            /stable-diffusion
            /flux
        /loras
        /embeddings
        /controlnet
        /upscalers
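If you'd rather script this layout than create it by hand, a short sketch like the following works. It assumes the stable-diffusion and flux folders sit under checkpoints, which is my reading of the structure above, and the function name is hypothetical.

```python
from pathlib import Path

# Sub-folders mirroring the example layout; adjust names to taste.
MODEL_FOLDERS = [
    "checkpoints/stable-diffusion",
    "checkpoints/flux",
    "loras",
    "embeddings",
    "controlnet",
    "upscalers",
]


def create_model_tree(base: Path) -> list[Path]:
    """Create the shared folder layout under `base` and return the folders."""
    created = []
    for relative in MODEL_FOLDERS:
        folder = base / relative
        folder.mkdir(parents=True, exist_ok=True)  # safe to re-run
        created.append(folder)
    return created
```

Calling `create_model_tree(Path("D:/AI_Models"))` would build the tree on a Windows data drive. Because existing folders are left untouched, the script can be re-run after adding new entries to the list.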

To visualize my model organization structure, I use offline AI image generators with PageOn.ai to create interactive diagrams. This helps me track which models I'm using for different projects and identify which ones I might need to update or replace as newer versions become available.

User-Friendly Interfaces for Local Image Generation

After experimenting with numerous interfaces for local image generation, I've found that different UIs excel at different aspects of the creative process. Rather than committing to just one, I now use multiple interfaces depending on my specific needs for each project.

[Chart: Interface Comparison]

Interface Breakdown: Choosing the Right Tool

Automatic1111

The most widely used interface with an extensive community and plugin ecosystem. I rely on it for straightforward image generation tasks.

Best For:

- Beginners getting started with AI image generation

- Quick iterations and batch processing

- Extensive extension ecosystem

- Stable, well-documented experience

Limitations:

- Less flexible for complex workflows

- Interface can become cluttered with extensions

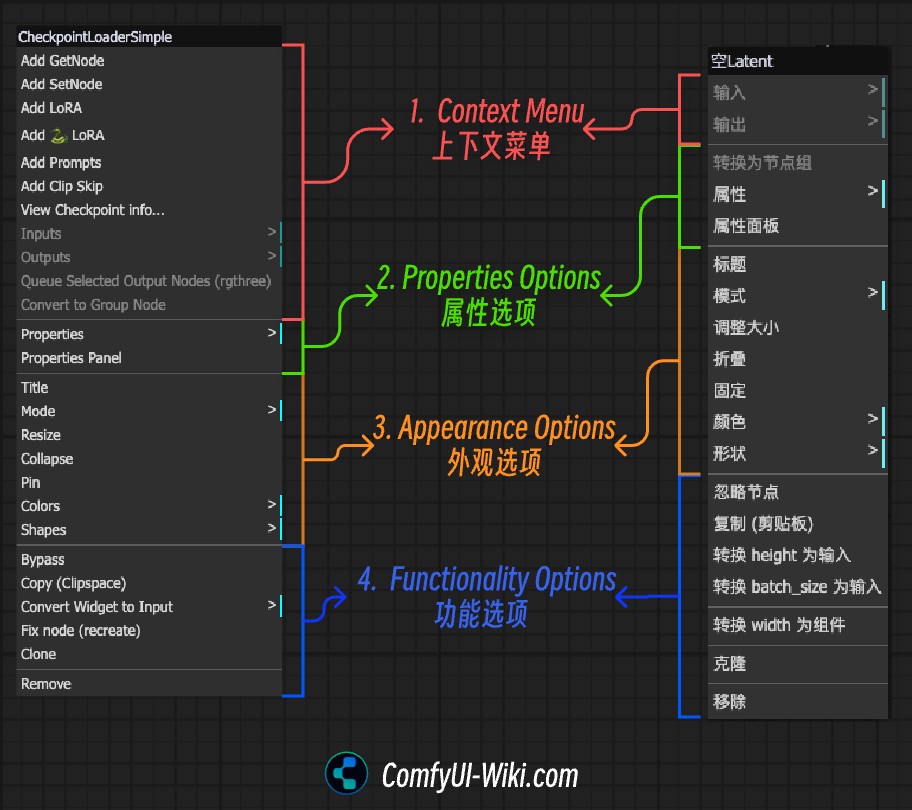

ComfyUI

My go-to for complex workflows. The node-based interface gives unparalleled control over the generation process.

Best For:

- Advanced users who need precise control

- Complex multi-stage generation workflows

- Visual programming approach

- Maximum customization and experimentation

Limitations:

- Steeper learning curve

- Can be overwhelming for beginners

Forge

A newer interface that I find strikes a good balance between ease of use and powerful features, with a clean, modern UI design.

Best For:

- Users who want a modern, clean interface

- Balance of simplicity and features

- Newer hardware compatibility

- More intuitive workflow organization

Limitations:

- Smaller community than Automatic1111

- Fewer extensions and custom resources

Setting Up Shared Model Folders

To efficiently use multiple interfaces without duplicating models, I've set up a system of shared model folders. This approach has saved me hundreds of gigabytes of storage space and simplified my workflow considerably.

Configuration Examples

For Forge (edit webui-user.bat):

    set COMMANDLINE_ARGS=--ckpt-dir D:\AI_Models\checkpoints --lora-dir D:\AI_Models\loras --embeddings-dir D:\AI_Models\embeddings --port 9000

These flags come from the Automatic1111-style launcher that Forge builds on. Exact names can vary by version, so confirm them against your install.

For ComfyUI (edit extra_model_paths.yaml):

    comfyui:
        base_path: D:/AI_Models/
        checkpoints: checkpoints/
        loras: loras/
        vae: vae/
        controlnet: controlnet/

To document my custom image generation workflows, I use free AI tools for generating images alongside PageOn.ai. The visual documentation approach helps me remember complex node setups in ComfyUI or extension configurations in Automatic1111, making it much easier to reproduce successful results later.

Creating Efficient Workflows Between Tools

I've found that each interface has its strengths, so I often use them in combination. For example, I might:

- Prototype and explore ideas quickly in Automatic1111

- Build complex multi-stage workflows in ComfyUI

- Use Forge for final high-resolution renders

Advanced Techniques for Local Image Model Customization

Once you're comfortable with basic image generation, customizing models to your specific needs is where the true power of local AI processing shines. I've spent countless hours experimenting with different customization techniques, and the results have been transformative for my creative projects.

Fine-tuning Models with Personal Datasets

Fine-tuning allows you to customize a model to generate images in a specific style or with particular subjects. I've created several custom models that perfectly match my artistic vision by training them on carefully curated datasets.

The key to successful fine-tuning is creating a high-quality training set. I've found that 20-30 high-quality images with consistent style and subject matter can produce excellent results without requiring excessive training time or computational resources.

Fine-tuning Process Overview

    flowchart TD
        A[Prepare Training Images] -->|20-30 consistent images| B[Preprocess Images]
        B -->|Resize & caption| C[Choose Training Method]
        C -->|For styles & concepts| D[Textual Inversion]
        C -->|For subjects & characters| E[LoRA Training]
        C -->|For complete model changes| F[Dreambooth/Custom Diffusion]
        D & E & F --> G[Set Training Parameters]
        G -->|GPU-intensive process| H[Monitor Training]
        H -->|Evaluate results| I{Results Satisfactory?}
        I -->|No| J[Adjust & Retrain]
        J --> G
        I -->|Yes| K[Save & Use Custom Model]
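Before the preprocessing step, a quick sanity check of the training folder can catch an undersized dataset or missing captions. The sketch below assumes the common convention of one same-named .txt caption file per image. Your training tool may expect something different, and the function name and default thresholds (taken from the 20-30 image guideline above) are illustrative.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def check_dataset(folder: Path, min_images: int = 20, max_images: int = 30) -> dict:
    """Summarize a training folder: image count and images lacking a caption.

    Assumes a same-named .txt caption per image (cat_01.png + cat_01.txt).
    """
    images = sorted(p for p in folder.iterdir()
                    if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)
    missing = [p.name for p in images if not p.with_suffix(".txt").exists()]
    return {
        "image_count": len(images),
        "count_in_range": min_images <= len(images) <= max_images,
        "missing_captions": missing,
    }
```

Running it on a folder returns a small report dictionary, so it can gate a training script: stop early when `count_in_range` is false or `missing_captions` is non-empty.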

Customization Methods Compared

| Method | Training Time | VRAM Required | Best For | File Size |
|---|---|---|---|---|
| Textual Inversion | 1-2 hours | 6GB+ | Styles, concepts, simple objects | ~5KB |
| LoRA | 2-4 hours | 8GB+ | Characters, subjects, specific styles | 50-200MB |
| Dreambooth | 4-8+ hours | 12GB+ | Complete model fine-tuning | 2-7GB |
| Hypernetworks | 3-5 hours | 8GB+ | Artistic styles, textures | ~20MB |

Optimizing Prompts for Local Models

I've discovered that prompt engineering for local models differs significantly from cloud-based services. Local models often respond better to more detailed, structured prompts with specific emphasis on technical parameters.

My Prompt Structure for Local Models

- Main subject description (what you want to see)

- Style keywords (artistic influence, medium)

- Technical specifications (lighting, camera details)

- Qualifiers (high quality, detailed, etc.)

- Negative prompt (what to avoid)

I maintain a personal prompt library organized by style and subject to ensure consistent results across projects.
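A prompt library like this is easier to keep consistent when the five parts of the structure are stored separately and joined at generation time. Here's a minimal sketch of that idea. The `PromptSpec` class and its field names are hypothetical, chosen to mirror the list above, and the comma-separated output format is an assumption that suits most Stable Diffusion-style UIs.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """One library entry, split into the parts of the prompt structure."""
    subject: str
    style: list[str] = field(default_factory=list)
    technical: list[str] = field(default_factory=list)
    qualifiers: list[str] = field(default_factory=list)
    negative: list[str] = field(default_factory=list)

    def positive_prompt(self) -> str:
        """Join subject, style, technical, and qualifier parts in that order."""
        parts = [self.subject, *self.style, *self.technical, *self.qualifiers]
        return ", ".join(p.strip() for p in parts if p.strip())

    def negative_prompt(self) -> str:
        """Join the terms to avoid into a single negative prompt string."""
        return ", ".join(p.strip() for p in self.negative if p.strip())


spec = PromptSpec(
    subject="lighthouse on a cliff at dusk",
    style=["oil painting"],
    technical=["soft rim lighting", "35mm lens"],
    qualifiers=["highly detailed"],
    negative=["blurry", "watermark"],
)
print(spec.positive_prompt())
# lighthouse on a cliff at dusk, oil painting, soft rim lighting, 35mm lens, highly detailed
print(spec.negative_prompt())
# blurry, watermark
```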

When refining my prompt strategy, I use free AI image animation generators alongside PageOn.ai's Vibe Creation feature. This conversational approach to prompt development helps me explore different aesthetic directions without getting bogged down in technical parameters. I can simply describe what I'm looking for in natural language, and PageOn helps me structure it for optimal results with my local models.

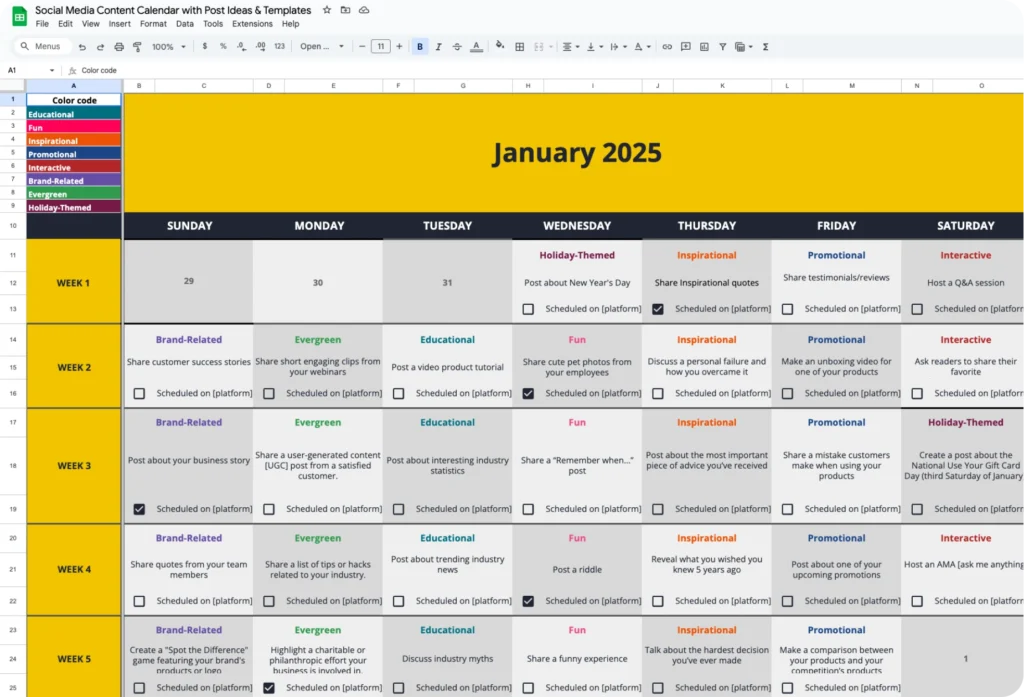

Practical Applications and Creative Workflows

After mastering the technical aspects of running image AI models locally, I've integrated them into various professional and personal creative workflows. The flexibility and control of local generation have opened up possibilities I never had with cloud-based services.

Real-World Use Cases

Professional Design

I use local AI models to rapidly generate concept art, mood boards, and design elements for client projects. The privacy of local generation means client information never leaves my system.

Key benefit: Complete control over iterations without usage limits or subscription costs.

Marketing Content

I create consistent, branded visual content for social media and marketing campaigns. Using custom-trained models ensures brand consistency across all generated images.

Key benefit: Rapid production of on-brand visuals without hiring multiple designers.

Artistic Exploration

I use local models to explore new artistic styles and concepts that inform my traditional artwork. The ability to run unlimited generations encourages experimentation.

Key benefit: Freedom to explore without usage constraints or costs per image.

Integration with Other Creative Tools

I've developed efficient workflows that combine AI generation with traditional design software. This hybrid approach leverages the strengths of both AI and conventional tools.

Integrated Creative Workflow

    flowchart LR
        A[Concept Development] --> B[Initial AI Generation]
        B --> C{Image Review}
        C -->|Needs Refinement| D[Prompt Adjustment]
        D --> B
        C -->|Base Image Acceptable| E[Export to Photoshop]
        E --> F[Manual Refinement]
        F --> G[Final Composition]
        G --> H[Client Presentation]
        subgraph "Local AI Process"
            B
            C
            D
        end
        subgraph "Traditional Design Process"
            E
            F
            G
        end

Batch Processing Strategies

For projects requiring multiple related images, I've developed efficient batch processing workflows:

- Create a base prompt template with placeholders

- Prepare a CSV file with variations for each image

- Use script extensions to automate generation

- Process results in bulk using Adobe Bridge

- Apply consistent editing presets across the batch
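The first two steps of this list, a template with placeholders plus a CSV of variations, can be sketched with the standard library alone. The function name, the `$placeholder` syntax (from Python's `string.Template`), and the sample columns are my own choices for illustration. How the resulting prompts are fed into your UI depends on the script extension you use.

```python
import csv
import io
from string import Template


def expand_prompts(template_text: str, csv_text: str) -> list[str]:
    """Fill a $placeholder template once per CSV row (header row = field names)."""
    template = Template(template_text)
    rows = csv.DictReader(io.StringIO(csv_text))
    # substitute() raises KeyError if a placeholder has no matching column,
    # which surfaces typos before a long batch run starts.
    return [template.substitute(row) for row in rows]


template_text = "$subject, $style, product photo, studio lighting"
csv_text = "subject,style\nceramic mug,minimalist\nleather wallet,vintage\n"

for prompt in expand_prompts(template_text, csv_text):
    print(prompt)
# ceramic mug, minimalist, product photo, studio lighting
# leather wallet, vintage, product photo, studio lighting
```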

To maintain consistency across AI-generated assets, I create visual style guides using PageOn.ai. These guides document my prompt structures, parameter settings, and post-processing steps, ensuring that I can reproduce successful results months later, even as models and tools evolve.

Troubleshooting and Community Resources

Running AI models locally inevitably comes with technical challenges. Over time, I've encountered and resolved numerous issues, and I've found that the vibrant community around open-source AI is an invaluable resource for troubleshooting.

Common Issues and Solutions

| Issue | Possible Causes | Solutions |
|---|---|---|
| CUDA Out of Memory | Model too large for GPU, resolution too high | |
| Black/Blank Images | Negative prompt too restrictive, VAE issues | |
| Slow Generation | Inefficient settings, background processes | |
| Installation Fails | Python version mismatch, CUDA issues | |

Essential Community Resources

Forums & Discussion

- Reddit: r/StableDiffusion

- Reddit: r/LocalLLaMA

- Hugging Face forums

- GitHub discussions for specific projects

- Civitai forums

Discord Communities

- Stable Diffusion Discord

- ComfyUI Official

- AUTOMATIC1111 Community

- Civitai Discord

- Flux Discord

When I encounter complex issues, I document my troubleshooting process visually using PageOn.ai. This helps me track what solutions I've already tried and share my findings with the community in a clear, structured way. This visual approach to problem-solving has helped me resolve issues much faster than text-only documentation.

Staying Updated

The open-source AI landscape evolves incredibly quickly. I make it a habit to check key GitHub repositories weekly and follow prominent developers on Twitter/X to stay informed about new models, techniques, and optimizations.

Future-Proofing Your Local AI Setup

The pace of development in AI image generation is breathtaking. Models that were state-of-the-art just six months ago are now considered outdated. I've developed strategies to keep my local setup relevant and adaptable as the technology continues to evolve.

Upcoming Trends in Open-Source Image AI

Based on recent developments, I see several important trends emerging in the open-source image AI space:

- More Efficient Models - Newer architectures that require less VRAM while maintaining quality

- Multi-Modal Integration - Combined text, image, and video generation capabilities

- Enhanced Control Methods - More precise ways to guide generation beyond text prompts

- Animation Capabilities - Static image models evolving to support motion

- Specialized Domain Models - Models fine-tuned for specific industries or use cases

Strategic Hardware Planning

Based on the trends I'm observing, here's how I approach hardware planning to stay ahead of model requirements:

Hardware Upgrade Priority

I've found that GPU VRAM capacity remains the primary bottleneck for running newer models. When planning upgrades, I prioritize VRAM capacity over raw compute performance, as many models simply won't load if you don't have sufficient VRAM, regardless of how fast your GPU is otherwise.

Creating a Sustainable Workflow

To ensure my creative process remains efficient despite the rapidly evolving model landscape, I've developed these sustainable workflow practices:

Model Management Strategy

- Maintain a "core models" folder with proven performers

- Use a separate "testing" folder for evaluating new models

- Document model strengths and ideal use cases

- Archive older models rather than deleting them

- Use version control for custom training projects

Output Organization

- Create project-based folder structures

- Use metadata tools to embed generation parameters

- Implement consistent naming conventions

- Regularly back up your generated assets

- Tag images with style and content descriptors
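Two of these habits, consistent naming and keeping generation parameters with each image, are easy to automate. Many UIs can embed parameters in the image file itself. The sketch below swaps in a simpler technique, a sidecar JSON file next to each image, because it needs only the standard library. The naming scheme and function names are my own assumptions.

```python
import json
import re
from datetime import date
from pathlib import Path


def build_filename(project: str, style: str, seed: int, day: date) -> str:
    """Build a name like 2024-05-01_client-x_watercolor_seed42.png."""
    def slug(text: str) -> str:
        # Lowercase and collapse anything that isn't a letter or digit to "-".
        return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return f"{day.isoformat()}_{slug(project)}_{slug(style)}_seed{seed}.png"


def write_sidecar(image_path: Path, params: dict) -> Path:
    """Save generation parameters next to the image as <name>.json."""
    sidecar = image_path.with_suffix(".json")
    sidecar.write_text(json.dumps(params, indent=2, sort_keys=True),
                       encoding="utf-8")
    return sidecar
```

Putting the date first means files sort chronologically in any file browser, and the sidecar keeps the prompt, seed, and sampler settings readable even if an editing tool strips the image's metadata.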

PageOn.ai's AI Blocks approach has been instrumental in helping me adapt to changing model capabilities. I use it to create visual documentation of my workflows that focuses on the creative intent rather than specific technical parameters. This abstraction layer means I can swap out models or tools without disrupting my creative process.

Transform Your Visual Expressions with PageOn.ai

Running open-source image AI models locally gives you unprecedented creative freedom, but organizing and documenting your workflows can be challenging. PageOn.ai helps you create clear visual documentation of your AI image generation processes, making it easier to reproduce successful results and adapt to new models and techniques.

Conclusion: Embracing the Power of Local AI Image Generation

Throughout my journey with local AI image generation, I've discovered that the combination of powerful open-source models and personal computing resources creates unprecedented creative possibilities. While there's certainly a learning curve and some technical hurdles to overcome, the benefits far outweigh the challenges.

Running these models locally has transformed my creative workflow by providing:

- Complete privacy and control over my data and creative assets

- Freedom from subscription costs and usage limitations

- The ability to customize models to my specific aesthetic preferences

- A deeper understanding of how AI image generation actually works

- The flexibility to experiment without constraints

As the technology continues to evolve at a rapid pace, I'm excited to see what new capabilities will emerge in the open-source community. By establishing solid workflows and documentation practices now, I'm prepared to incorporate these advancements smoothly into my creative process.

Whether you're a professional designer, a digital artist, or simply someone curious about AI image creation, I encourage you to explore the world of local image generation. The initial setup may take some time, but the creative freedom you'll gain is truly transformative.
