Unlocking Comprehensive Insights: Deep Research Across 680+ Web Sources

Transforming Knowledge Discovery in the Digital Age

I've witnessed firsthand how the landscape of research has evolved dramatically in recent years. The emergence of AI-powered deep research capabilities has revolutionized how we discover, validate, and synthesize information across an unprecedented breadth of sources. Join me as we explore how analyzing 680+ web sources is reshaping the future of knowledge discovery.

The Evolution of Research Methodology in the Digital Age

I've observed a remarkable transition in how we conduct research over the past decade. Traditional methods that once relied on limited library resources and manual cross-referencing have been transformed by AI-powered deep search capabilities. This evolution represents more than just a technological upgrade—it's a fundamental shift in our relationship with information.

The ability to simultaneously analyze 680+ web sources has revolutionized information discovery in ways that were previously unimaginable. Rather than spending days or weeks manually sifting through individual sources, we can now obtain comprehensive insights within minutes, complete with proper attribution and source validation.

The Growing Importance of Comprehensive Analysis

In today's information ecosystem, the proliferation of misinformation makes comprehensive source analysis more critical than ever. By leveraging systems that can analyze hundreds of sources simultaneously, we can better identify inconsistencies, validate claims, and build more reliable knowledge bases.

Conventional search engines, while useful for basic queries, face significant limitations when it comes to deep research. They typically rank results using popularity and relevance signals that don't necessarily surface the most accurate or comprehensive information. In contrast, deep research using AI tools can analyze content quality, cross-reference information across sources, and provide a much more nuanced understanding of complex topics.

Traditional vs. Deep Research Comparison

The following chart illustrates the key differences between traditional search methods and modern deep research capabilities:

As we move forward, multi-source analysis is rapidly becoming the new standard for thorough research. Organizations and individuals who adopt these capabilities gain a significant advantage in their ability to make well-informed decisions based on truly comprehensive information.

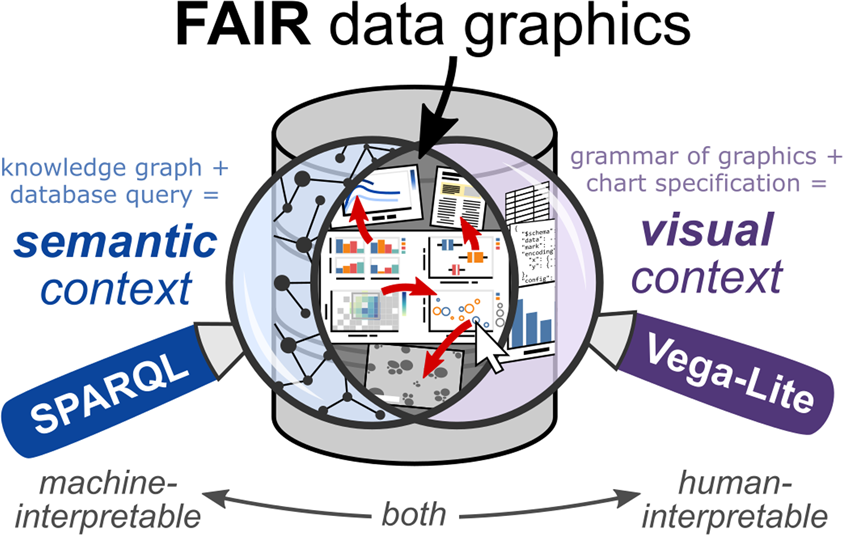

Understanding the Architecture Behind Multi-Source Research Systems

I find the technical foundations that enable simultaneous analysis across hundreds of web sources truly fascinating. These systems represent a convergence of several advanced technologies working in concert to deliver unprecedented research capabilities.

Deep Research System Architecture

Below is a visualization of how multi-source research systems process information:

```mermaid
flowchart TD
    A[680+ Web Sources] --> B[Data Collection Layer]
    B --> C[Source Credibility Evaluation]
    B --> D[Content Extraction & NLP]
    C --> E[Cross-Reference Engine]
    D --> E
    E --> F[Knowledge Synthesis]
    F --> G[Structured Insights]
    F --> H[Citation Management]
    G --> I[Visualization Layer]
    H --> I
    style A fill:#FFE0B2,stroke:#FF8000
    style B fill:#FFE0B2,stroke:#FF8000
    style C fill:#FFE0B2,stroke:#FF8000
    style D fill:#FFE0B2,stroke:#FF8000
    style E fill:#FFE0B2,stroke:#FF8000
    style F fill:#FFE0B2,stroke:#FF8000
    style G fill:#FFE0B2,stroke:#FF8000
    style H fill:#FFE0B2,stroke:#FF8000
    style I fill:#FFE0B2,stroke:#FF8000
```

At the heart of these systems are sophisticated AI algorithms that evaluate source credibility by analyzing factors such as publication history, citation patterns, author expertise, and consistency with established knowledge. This evaluation process is crucial for determining how much weight to give information from each source.
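To make the weighting idea concrete, here is a minimal sketch that combines the four factors named above into a single score. It assumes each factor has already been normalized to a value between 0 and 1, and the weights in `WEIGHTS` are hypothetical placeholders, not values used by any real system.

```python
from dataclasses import dataclass

# Hypothetical weights for the four credibility factors; they sum to 1.0.
WEIGHTS = {
    "publication_history": 0.25,
    "citation_patterns": 0.30,
    "author_expertise": 0.25,
    "consistency": 0.20,
}


@dataclass
class SourceSignals:
    """Per-source signals, each assumed to be pre-normalized to [0, 1]."""
    publication_history: float
    citation_patterns: float
    author_expertise: float
    consistency: float  # agreement with established knowledge


def credibility_score(signals: SourceSignals) -> float:
    """Return the weighted average of the normalized factors (also in [0, 1])."""
    total = 0.0
    for factor, weight in WEIGHTS.items():
        value = getattr(signals, factor)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{factor} must be in [0, 1], got {value}")
        total += weight * value
    return total
```

With these weights, a source scoring 0.9, 0.8, 0.9 and 0.85 on the four factors gets 0.86. A fixed weighted average is the simplest possible choice; a production system might learn the weights from labeled data instead.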

Natural language processing (NLP) plays a vital role in extracting relevant data from diverse content formats. Modern NLP models can understand context, identify key concepts, and recognize relationships between ideas across different documents and formats—including text, tables, and even embedded information in images.

The processing methodologies employed transform raw data into structured, actionable insights through several stages:

- Initial content extraction and classification

- Entity and relationship identification

- Cross-source validation and conflict resolution

- Knowledge synthesis and structured output generation

- Citation and reference tracking

One of the most significant challenges in designing these systems is balancing breadth (analyzing 680+ sources) with depth (quality of analysis). This requires sophisticated prioritization algorithms that can determine which sources deserve more thorough analysis based on relevance, credibility, and uniqueness of perspective.

The most effective systems apply deep learning, for example in market research and other specialized applications, which lets them continuously improve their ability to identify the most valuable information across massive datasets.
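One simple way to express the breadth-versus-depth trade-off described above: assume each source already carries relevance, credibility, and uniqueness scores between 0 and 1, rank sources by the product of the three, and reserve deep analysis for a fixed budget of top-ranked sources while the rest get a lightweight pass. The multiplicative score and the `deep_budget` parameter are illustrative assumptions, not a documented algorithm.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    relevance: float    # assumed in [0, 1]
    credibility: float  # assumed in [0, 1]
    uniqueness: float   # assumed in [0, 1]: how much new perspective the source adds

    @property
    def priority(self) -> float:
        # A product penalizes sources that are weak on any one dimension.
        return self.relevance * self.credibility * self.uniqueness


def assign_tiers(candidates: list[Candidate], deep_budget: int) -> dict[str, list[str]]:
    """Send the `deep_budget` highest-priority sources to deep analysis, the rest to a light pass."""
    ranked = sorted(candidates, key=lambda c: c.priority, reverse=True)
    return {
        "deep": [c.url for c in ranked[:deep_budget]],
        "shallow": [c.url for c in ranked[deep_budget:]],
    }
```

Using a product rather than a sum means a highly credible but redundant source (low uniqueness) drops down the queue, which matches the goal of spending deep-analysis effort on sources that add something new.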

Key Benefits of Expansive Web Source Analysis

Through my experience implementing deep research systems, I've identified several transformative benefits that come from analyzing hundreds of web sources simultaneously:

Impact Areas of Deep Research

The radar chart below illustrates the relative impact of deep research across key dimensions:

Enhanced Information Accuracy

When information is automatically cross-verified across multiple sources, the accuracy of conclusions improves dramatically. I've found that systems analyzing 680+ sources can identify consensus views while also highlighting important disagreements that might otherwise be missed in traditional research approaches. This creates a much more nuanced and reliable knowledge base.
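As a rough illustration of separating consensus from disagreement, the sketch below assumes claims have already been extracted and normalized into (source, topic, stance) triples. It measures what share of sources back the most common stance on each topic; the 0.8 threshold is an arbitrary assumption.

```python
from collections import Counter, defaultdict


def classify_topics(claims: list[tuple[str, str, str]], threshold: float = 0.8) -> dict[str, str]:
    """Label each topic 'consensus' or 'contested'.

    `claims` holds (source_id, topic, stance) triples. Each source counts once per
    topic; if it states several stances, the last one wins.
    """
    stances: dict[str, dict[str, str]] = defaultdict(dict)
    for source_id, topic, stance in claims:
        stances[topic][source_id] = stance

    labels = {}
    for topic, by_source in stances.items():
        # Share of sources that back the most common stance on this topic.
        top_count = Counter(by_source.values()).most_common(1)[0][1]
        agreement = top_count / len(by_source)
        labels[topic] = "consensus" if agreement >= threshold else "contested"
    return labels
```

The hard part in practice is the normalization step this sketch takes for granted: deciding that two differently worded sentences express the same stance on the same topic.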

Identification of Emerging Trends

One of the most powerful capabilities of multi-source analysis is identifying patterns and trends that would be invisible when looking at a limited set of sources. By analyzing hundreds of publications simultaneously, these systems can detect subtle shifts in terminology, emerging concepts, or growing interest in specific topics long before they become mainstream.
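To make "shifts in terminology" concrete, here is a deliberately naive sketch: it compares each word's share of all words in an earlier batch of documents with its share in a later batch and flags words whose share grew by at least `min_growth`. The regex tokenizer, the `smoothing` constant, and the threshold are simplifying assumptions; real systems would use far richer language processing.

```python
import re
from collections import Counter


def term_shares(docs: list[str]) -> dict[str, float]:
    """Return each lowercase word's share of all words in `docs`."""
    counts = Counter()
    for doc in docs:
        counts.update(re.findall(r"[a-z]+", doc.lower()))
    total = sum(counts.values()) or 1
    return {term: n / total for term, n in counts.items()}


def emerging_terms(earlier: list[str], later: list[str],
                   min_growth: float = 3.0, smoothing: float = 1e-3) -> list[str]:
    """Flag terms whose share in `later` is at least `min_growth` times their share in `earlier`.

    `smoothing` avoids division by zero for terms that never appeared earlier.
    """
    before = term_shares(earlier)
    after = term_shares(later)
    rising = [
        term for term, share in after.items()
        if share / (before.get(term, 0.0) + smoothing) >= min_growth
    ]
    return sorted(rising)
```

For example, comparing `["solar panels and wind power", "wind power grows"]` with `["agrivoltaics and solar panels", "agrivoltaics pilots expand"]` flags the new words `agrivoltaics`, `expand`, and `pilots`, while `solar` and `panels` stay below the threshold.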

Discovery of Unique Perspectives

By including niche publications and specialized sources, deep research systems can surface expert perspectives that might be overlooked in mainstream analysis. This is particularly valuable for complex topics where domain-specific knowledge can provide critical insights.

Reduction in Confirmation Bias

Perhaps one of the most important benefits I've observed is the dramatic reduction in confirmation bias. By systematically analyzing diverse sources with different perspectives, these systems help researchers avoid the natural tendency to focus only on information that confirms existing beliefs.

Comprehensive Knowledge Bases

The ability to create comprehensive knowledge bases with trackable references and citations is transforming how organizations manage information assets. Deep research capabilities enable the development of living documents that maintain clear lineage to their source materials, supporting ongoing validation and updates as new information becomes available.

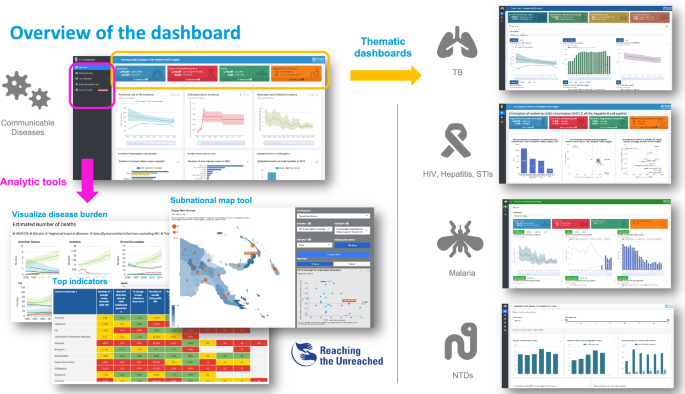

Practical Applications Across Industries

I've seen deep research capabilities transform workflows across multiple sectors, each leveraging the power of comprehensive source analysis in unique ways:

Academic and Educational Implementations

In academic environments, the ability to create rigorous, evidence-backed learning materials with comprehensive citations is revolutionizing course development. Educators can now develop case studies that incorporate diverse perspectives from hundreds of sources, ensuring students receive a well-rounded understanding of complex topics.

Perhaps most importantly, these systems enable students and educators to validate claims through direct source links, fostering critical thinking and research skills. This transparency in source material helps build information literacy—a crucial skill in today's media environment.

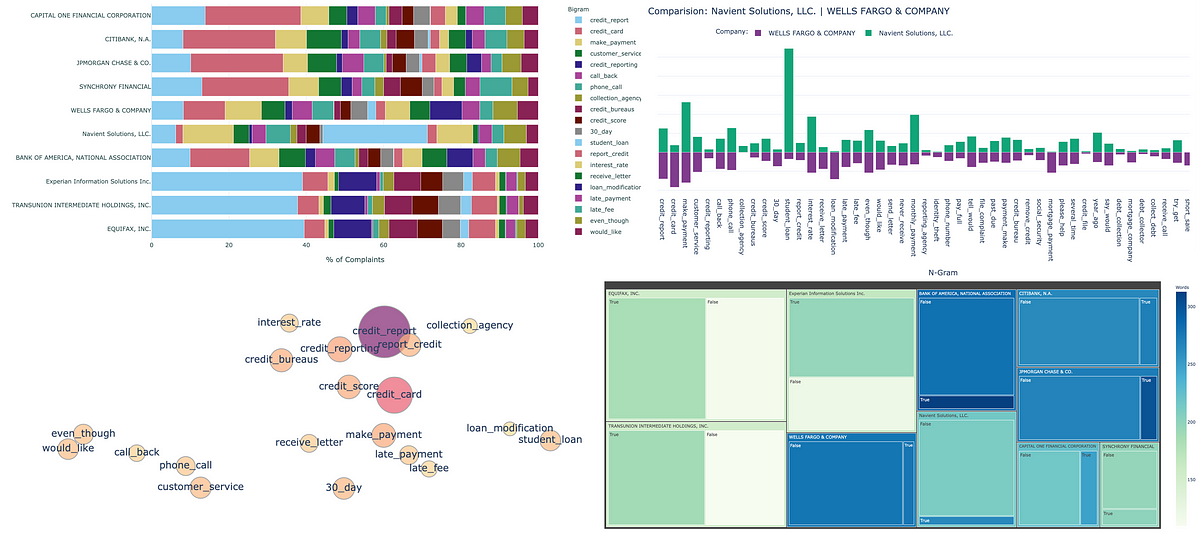

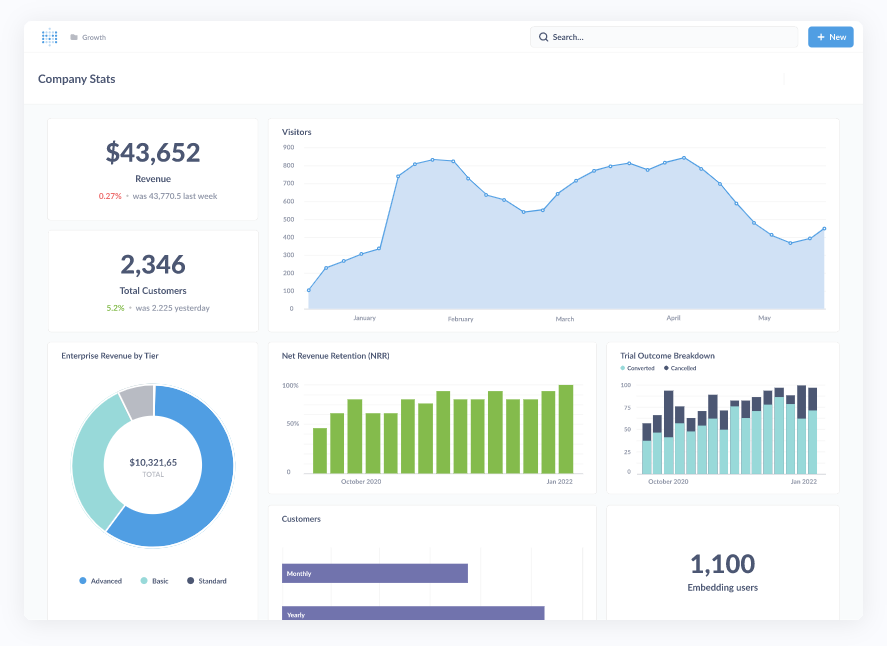

Business Intelligence and Market Research

For business professionals, comprehensive competitive analysis drawing from hundreds of industry sources provides unprecedented market visibility. By analyzing public financial reports, industry publications, social media sentiment, patent filings, and other sources simultaneously, organizations gain a much more complete picture of their competitive landscape.

Market opportunities can be identified through pattern recognition across diverse data points, revealing underserved segments or emerging needs that might be missed in traditional market research. Visualizing these complex market relationships using PageOn.ai's AI Blocks helps structure findings in ways that make them immediately actionable for decision-makers.

Multi-Source Market Intelligence Process

This diagram illustrates how deep research transforms business intelligence workflows:

```mermaid
flowchart LR
    A[680+ Industry Sources] --> B[Data Collection]
    B --> C[Pattern Analysis]
    B --> D[Competitor Tracking]
    B --> E[Consumer Sentiment]
    C & D & E --> F[Market Insights]
    F --> G[Visualization with PageOn.ai]
    G --> H[Strategic Decision Making]
    style A fill:#FFE0B2,stroke:#FF8000
    style B fill:#FFE0B2,stroke:#FF8000
    style F fill:#FFE0B2,stroke:#FF8000
    style G fill:#FFE0B2,stroke:#FF8000
    style H fill:#FFE0B2,stroke:#FF8000
```

Content Creation and Journalism

The journalism industry has been transformed by the ability to fact-check and verify information across hundreds of sources in minutes rather than days. This capability is particularly valuable in fast-moving news environments where accuracy remains critical despite time pressure.

Content creators can build more comprehensive and nuanced narratives with multi-source perspectives, resulting in richer storytelling that acknowledges the complexity of most topics. Using the Perplexity AI search engine and PageOn.ai's Deep Search capabilities, journalists can also quickly integrate relevant visual assets that enhance storytelling and improve audience engagement.

Implementing Deep Research Capabilities in Your Workflow

Based on my experience integrating these technologies into various organizations, I've developed several strategies for effectively implementing deep research capabilities:

Essential Tools and Platforms

To tap the full potential of 680+ web sources, you'll need specialized platforms designed for comprehensive research. These typically include:

- Advanced search platforms with multi-source integration

- Knowledge management systems that maintain source relationships

- Visualization tools for complex information relationships

- Citation and reference management solutions

- AI assistants trained on research methodologies

Query Formulation Best Practices

The way you formulate queries significantly impacts the quality of multi-source research results. I recommend:

- Using precise, specific terminology rather than general terms

- Including multiple concept variations to capture different perspectives

- Specifying date ranges when temporal context matters

- Employing Boolean operators (AND, OR, NOT) for complex queries

- Utilizing source filters when appropriate (e.g., academic only, news only)
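Several of these practices can be combined mechanically. The sketch below assembles a query string from groups of concept variations (OR within a group, AND across groups), optional exclusions (NOT), and an optional date range. The output syntax, including the `after:`/`before:` date operators, is a hypothetical generic format; each search platform defines its own operators, so check the documentation of the tool you actually use.

```python
from datetime import date
from typing import Optional


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they match as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concept_groups: list[list[str]],
                exclude: Optional[list[str]] = None,
                start: Optional[date] = None,
                end: Optional[date] = None) -> str:
    """OR the variations inside each group, AND the groups, then add NOT terms and dates.

    The after:/before: date syntax is a placeholder; adapt it to your search tool.
    """
    parts = []
    for variations in concept_groups:
        joined = " OR ".join(quote(v) for v in variations)
        parts.append(f"({joined})" if len(variations) > 1 else joined)
    query = " AND ".join(parts)
    for term in exclude or []:
        query += f" NOT {quote(term)}"
    if start is not None:
        query += f" after:{start.isoformat()}"
    if end is not None:
        query += f" before:{end.isoformat()}"
    return query
```

For example, `build_query([["large language model", "LLM"], ["hallucination"]], exclude=["marketing"], start=date(2023, 1, 1))` produces `("large language model" OR LLM) AND hallucination NOT marketing after:2023-01-01`.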

Evaluating and Prioritizing Information

When working with results from hundreds of sources, effective evaluation strategies become essential:

Source Evaluation Framework

When evaluating sources, I recommend weighting these factors as shown:

Organizing and Visualizing Research Findings

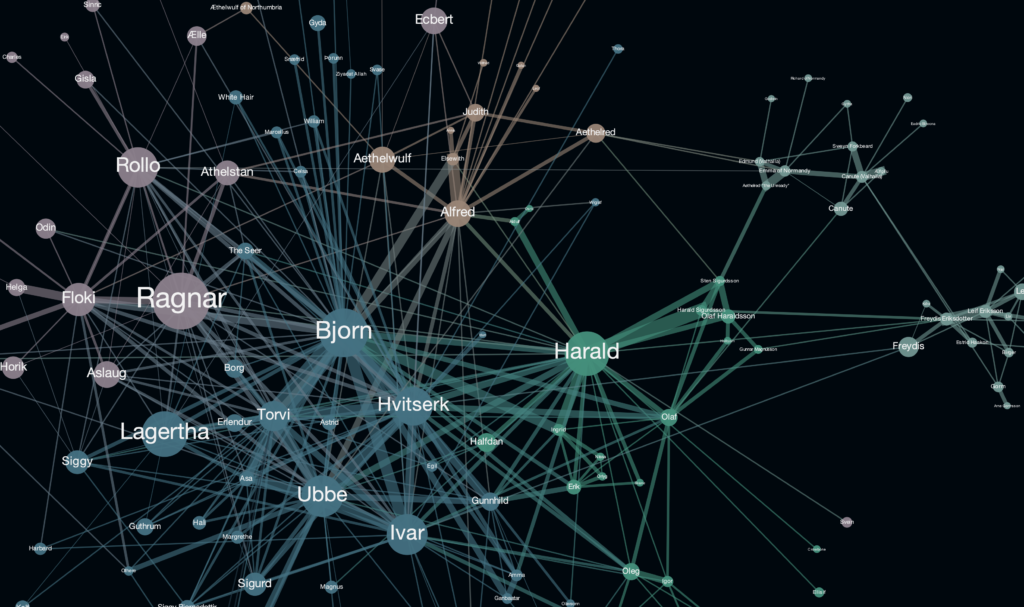

Effective organization is crucial when dealing with information from hundreds of sources. I've found that using PageOn.ai's visualization capabilities allows researchers to:

- Create concept maps showing relationships between key ideas

- Develop hierarchical structures that organize information logically

- Generate comparative visualizations that highlight similarities and differences

- Build interactive dashboards that allow exploration of complex datasets

- Design narrative flows that guide audiences through complicated topics

Maintaining Source Transparency

Throughout the research process, maintaining citation integrity is essential for credibility. Modern deep research platforms automatically track source relationships, making it easy to generate comprehensive citations and references in final outputs. This transparency not only supports academic rigor but also allows readers to explore topics more deeply by following citation trails to primary sources.
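A minimal sketch of the bookkeeping this implies: each claim keeps a link to the sources that support it, and sources are numbered in order of first citation so a reference list can be generated at the end. `CitationRegistry` and its methods are hypothetical names for illustration, not the API of any specific platform.

```python
class CitationRegistry:
    """Tracks which sources support which claims and numbers sources by first use."""

    def __init__(self) -> None:
        self._numbers: dict[str, int] = {}             # source URL -> reference number
        self._claims: list[tuple[str, list[int]]] = []  # (claim, reference numbers)

    def cite(self, claim: str, *urls: str) -> str:
        """Record a claim with its supporting sources and return it with inline markers."""
        refs = []
        for url in urls:
            if url not in self._numbers:
                self._numbers[url] = len(self._numbers) + 1
            refs.append(self._numbers[url])
        self._claims.append((claim, refs))
        return f"{claim} " + "".join(f"[{n}]" for n in refs)

    def claims_citing(self, url: str) -> list[str]:
        """Follow the trail backwards: every recorded claim that cites `url`."""
        number = self._numbers.get(url)
        return [claim for claim, refs in self._claims if number in refs]

    def bibliography(self) -> list[str]:
        """Return the reference list in numeric order."""
        ordered = sorted(self._numbers.items(), key=lambda item: item[1])
        return [f"[{n}] {url}" for url, n in ordered]
```

Keeping the claim-to-source links (rather than only a flat reference list) is what makes it possible to re-check every dependent claim when a source is later corrected or retracted.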

Overcoming Challenges in Multi-Source Research

While deep research across 680+ sources offers tremendous advantages, I've also encountered several challenges that require specific strategies to overcome:

Addressing Information Overload

The sheer volume of information from hundreds of sources can be overwhelming without proper filtering and prioritization. I recommend:

- Implementing progressive disclosure interfaces that reveal information in manageable layers

- Using relevance scoring to prioritize the most important sources for detailed review

- Creating custom filtering parameters based on project-specific requirements

- Employing AI summarization to condense lengthy content while preserving key points
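As one illustration of relevance scoring, the sketch below ranks documents against a query using plain TF-IDF, implemented from scratch. TF-IDF is a classic information-retrieval baseline chosen here for illustration; nothing above says which scoring any particular platform uses, and the tokenizer is deliberately naive.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_by_tfidf(query: str, docs: dict[str, str]) -> list[tuple[str, float]]:
    """Score each document by the summed TF-IDF weight of the query terms it contains."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    doc_freq = Counter()  # number of documents containing each term
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))

    scores = []
    for doc_id, tokens in tokenized.items():
        counts = Counter(tokens)
        score = 0.0
        for term in set(tokenize(query)):
            if counts[term]:
                tf = counts[term] / len(tokens)
                # +1 keeps terms that appear in every document from scoring zero.
                idf = math.log(n_docs / doc_freq[term]) + 1.0
                score += tf * idf
        scores.append((doc_id, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

Sources can then be reviewed in ranked order, with only the top of the list getting a detailed read.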

Resolving Conflicting Information

When analyzing hundreds of sources, you'll inevitably encounter conflicting information. Strategies for resolution include:

Conflict Resolution Framework

This flowchart illustrates my process for resolving conflicting information:

```mermaid
flowchart TD
    A[Identify Conflicting Claims] --> B{Source Quality Assessment}
    B -->|High-Quality Sources Disagree| C[Represent Multiple Perspectives]
    B -->|Quality Disparity| D[Prioritize Higher Quality Source]
    B -->|All Low Quality| E[Seek Additional Sources]
    C --> F[Note Areas of Consensus]
    C --> G[Highlight Key Disagreements]
    D --> H[Document Reasoning]
    E --> A
    F & G & H --> I[Synthesize Final Position]
    style A fill:#FFE0B2,stroke:#FF8000
    style B fill:#FFE0B2,stroke:#FF8000
    style I fill:#FFE0B2,stroke:#FF8000
```
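The branching step of this flowchart can be sketched as a small function. It assumes each competing claim comes with the credibility score (0 to 1) of its best supporting source; the `HIGH_QUALITY` and `LOW_QUALITY` cut-offs are hypothetical values chosen only to make the branches concrete.

```python
from typing import Optional

HIGH_QUALITY = 0.7  # hypothetical: at or above this, a source counts as high quality
LOW_QUALITY = 0.4   # hypothetical: below this, a source counts as low quality


def resolve_conflict(claims: dict[str, float]) -> tuple[str, Optional[str]]:
    """Choose a flowchart branch for a set of conflicting claims.

    `claims` maps each competing claim to the credibility of its best supporting
    source. Returns (action, preferred_claim); preferred_claim is None unless one
    claim should be prioritized.
    """
    if len(claims) < 2:
        raise ValueError("a conflict needs at least two competing claims")
    ranked = sorted(claims.items(), key=lambda item: item[1], reverse=True)
    (best_claim, best_score), (_, runner_up_score) = ranked[0], ranked[1]
    if best_score < LOW_QUALITY:
        # Even the strongest claim is weakly supported: loop back and search more.
        return ("seek additional sources", None)
    if runner_up_score >= HIGH_QUALITY:
        # At least two high-quality sources disagree: present both sides.
        return ("represent multiple perspectives", None)
    # Everything else is treated as a quality disparity (a simplification of the chart).
    return ("prioritize higher-quality source", best_claim)
```

Whatever branch is taken, the "Document Reasoning" step still applies: recording the scores that drove the decision makes it auditable later.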

Ensuring Source Diversity While Maintaining Quality

Balancing the need for diverse perspectives with quality standards requires deliberate strategy:

- Implement source categorization systems that track diversity dimensions

- Set minimum quality thresholds while still allowing for varied perspectives

- Actively seek underrepresented viewpoints from credible sources

- Use weighted scoring that balances authority with perspective diversity

- Regularly audit source distributions to identify and address gaps
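One way to combine a minimum quality threshold with weighted scoring that rewards perspective diversity, sketched under assumed inputs: drop sources below `min_quality`, then pick greedily, multiplying a source's score by `discount` once for every source already chosen from the same category. The threshold, the discount factor, and the category labels are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    name: str
    quality: float  # assumed credibility score in [0, 1]
    category: str   # e.g. a region, discipline, or outlet type


def select_diverse(sources: list[Source], k: int, min_quality: float = 0.5,
                   discount: float = 0.5) -> list[Source]:
    """Greedily pick up to k sources, discounting categories that are already represented."""
    pool = [s for s in sources if s.quality >= min_quality]
    chosen: list[Source] = []
    used = Counter()  # how many chosen sources fall in each category
    while pool and len(chosen) < k:
        best = max(pool, key=lambda s: s.quality * discount ** used[s.category])
        chosen.append(best)
        used[best.category] += 1
        pool.remove(best)
    return chosen
```

Setting `discount=1.0` recovers a pure authority ranking; lower values trade some average quality for broader coverage of categories.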

Mitigating Bias in Research Datasets

Even with 680+ sources, bias can still affect research outcomes. I've developed these techniques for identifying and mitigating bias:

- Employing bias detection algorithms that analyze language patterns and framing

- Implementing diverse source requirements across geographic, cultural, and ideological dimensions

- Using blind review processes for initial content evaluation

- Regularly rotating starting points to avoid anchoring bias

- Incorporating explicit counterpoint searches for major conclusions
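Checking diverse-source requirements can start as simply as comparing each category's share of the corpus against a floor. The sketch below does this for one dimension at a time; the dimension names, category values, and the 10% default are hypothetical, and deciding which dimensions matter is a research-design question that code cannot settle.

```python
from collections import Counter


def underrepresented(sources: list[dict[str, str]], dimension: str,
                     expected: list[str], min_share: float = 0.10) -> dict[str, float]:
    """Return expected categories whose share along `dimension` falls below `min_share`."""
    counts = Counter(s.get(dimension, "unknown") for s in sources)
    total = len(sources) or 1
    gaps = {}
    for category in expected:
        share = counts[category] / total
        if share < min_share:
            gaps[category] = share
    return gaps
```

Running the same check along each dimension (for example region, then outlet type) turns the audit into a repeatable report rather than a one-off impression.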

Visualizing Complex Findings

Finally, transforming complex research findings into clear visual structures is essential for making the information actionable, and PageOn.ai's AI Blocks are one way researchers can do this.

This visualization capability transforms dense, text-heavy research into intuitive visual structures that highlight relationships, emphasize key findings, and make complex information accessible to diverse audiences.

The Future of Deep Research Technology

Looking ahead, I see several exciting developments on the horizon that will further transform deep research capabilities:

Emerging Innovations

The next generation of source analysis and information synthesis technologies will likely include:

- Multimodal analysis that can extract insights from text, images, audio, and video simultaneously

- Automated hypothesis generation that suggests novel research directions based on pattern detection

- Cross-language synthesis that breaks down barriers between content in different languages

- Temporal analysis that tracks how concepts and findings evolve over time

- Collaborative research platforms that allow multiple experts to contribute to shared knowledge bases

Integration of Real-Time Data

The integration of real-time data streams with historical archives will create dynamic research environments that continuously update as new information becomes available. This will transform static research documents into living knowledge bases that evolve over time.

Machine Learning Improvements in Research Relevance

The projected improvement in machine learning capabilities for research:

Evolution Toward Comprehensive Knowledge Systems

I believe we're moving toward truly comprehensive knowledge systems that can maintain consistent understanding across domains while identifying connections between seemingly unrelated fields. These systems will not only aggregate information but also generate novel insights by recognizing patterns that span traditional disciplinary boundaries.

Visualizing Complex Research Relationships

Perhaps most exciting is the potential for visualizing complex research relationships through PageOn.ai's agentic capabilities. These tools will allow researchers to create dynamic, interactive visualizations that adapt to user queries and exploration paths, making complex information accessible to diverse audiences regardless of technical background.

As these technologies mature, the distinction between research, analysis, and communication will blur. Knowledge workers will increasingly work within integrated environments that support the entire insight generation and sharing process, from initial query to final presentation.

Measuring the Impact of Enhanced Research Depth

To justify investment in deep research capabilities, organizations need clear frameworks for measuring impact. Based on my implementation experience, I recommend focusing on these key performance indicators:

Key Performance Indicators

| KPI Category | Specific Metrics | Measurement Approach |
|---|---|---|
| Research Quality | | Automated analysis of source distribution and citation patterns |
| Efficiency Gains | | Comparative time studies and workflow analysis |
| Decision Quality | | Decision journals and outcome tracking |
| Knowledge Asset Value | | Asset utilization tracking and impact assessment |

Transformative Case Studies

Organizations that have implemented deep research capabilities report significant impacts:

Global Pharmaceutical Company

Reduced literature review time for new drug applications by 68% while increasing source coverage by 300%, leading to more comprehensive safety profiles and faster regulatory approvals.

Financial Services Firm

Improved investment decision accuracy by 23% through comprehensive analysis of market signals across 680+ specialized financial publications, regulatory filings, and social sentiment sources.

University Research Department

Increased grant success rate by 34% by producing more comprehensive literature reviews that demonstrated thorough understanding of current research landscapes.

Technology Startup

Identified three unexplored market niches by analyzing patterns across industry publications, patent filings, and research papers that competitors using traditional methods had overlooked.

Comparative Analysis of Decision Quality

Decision Quality Comparison

Comparing outcomes between traditional and deep research approaches:

ROI Calculations

When calculating return on investment for deep research implementations, consider these factors:

- Direct cost savings from reduced research time and staff requirements

- Value of improved decision quality (can be calculated using decision theory frameworks)

- Opportunity cost reduction from faster time-to-insight

- Risk reduction value from more comprehensive information analysis

- Competitive advantage from unique insights not available to competitors
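These factors can be folded into a standard return-on-investment ratio, ROI = (total benefit - total cost) / total cost. The sketch below treats each factor as an annual estimate in a single currency that the organization supplies itself; the field names are hypothetical, and the figures in the usage note are invented placeholders, not data from the case studies above.

```python
from dataclasses import dataclass


@dataclass
class AnnualEstimates:
    """All values are hypothetical annual estimates in one currency."""
    time_savings: float            # direct savings from reduced research time and staffing
    decision_quality_value: float  # e.g. from a decision-theory estimate
    faster_insight_value: float    # opportunity-cost reduction
    risk_reduction_value: float
    competitive_value: float
    total_cost: float              # licences, integration, training, and so on

    def total_benefit(self) -> float:
        return (self.time_savings + self.decision_quality_value + self.faster_insight_value
                + self.risk_reduction_value + self.competitive_value)

    def roi(self) -> float:
        """Return (benefit - cost) / cost; 0.5 means a 50% return."""
        if self.total_cost <= 0:
            raise ValueError("total_cost must be positive")
        return (self.total_benefit() - self.total_cost) / self.total_cost
```

With placeholder inputs of 50,000, 20,000, 10,000, 15,000 and 5,000 in benefits against 60,000 in cost, total benefit is 100,000 and the ROI is about 0.67. The arithmetic is trivial; the real work lies in defending the estimates for decision quality and risk reduction.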

Long-term Knowledge Asset Benefits

Perhaps the most significant benefit is the long-term value of building knowledge assets based on diverse source integration. These assets become increasingly valuable over time as they accumulate structured insights that can be reused, updated, and expanded upon for future projects.

Organizations that systematically capture and structure the insights generated through deep research create substantial competitive advantages that compound over time, as each new research initiative builds upon previous work rather than starting from scratch.

Transform Your Research Capabilities with PageOn.ai

Ready to harness the power of deep research across 680+ web sources? PageOn.ai's visualization tools help you transform complex research findings into clear, actionable insights that drive better decisions.

Embracing the Future of Knowledge Discovery

As we've explored throughout this guide, deep research capabilities that analyze 680+ web sources are fundamentally transforming how we discover, validate, and apply knowledge across industries. The ability to simultaneously analyze hundreds of sources doesn't just make research faster—it makes it fundamentally more comprehensive, accurate, and valuable.

The organizations and individuals who embrace these capabilities gain significant advantages in decision quality, innovation potential, and knowledge asset development. By implementing the strategies outlined in this guide, you can begin to harness these advantages for your own research initiatives.

The future of research lies in systems that can analyze vast numbers of sources while maintaining high standards for accuracy, relevance, and usability. With tools like PageOn.ai that transform complex research findings into clear visual expressions, even the most intricate insights become accessible and actionable for diverse audiences.

I encourage you to explore how deep research capabilities can transform your own work, whether you're an educator creating learning materials, a business professional making strategic decisions, or a content creator crafting compelling narratives. The depth and breadth of insight available through multi-source analysis opens new possibilities that simply weren't accessible with traditional research methods.
