Visualizing the Five Core Primitives That Power Modern AI Connectivity

The Foundation: Understanding AI Primitives in the Connected Ecosystem

In today's rapidly evolving AI landscape, understanding the fundamental building blocks that enable AI systems to communicate and function together is essential for creating powerful, flexible solutions.

When I first began exploring the world of modern AI systems, I quickly realized that understanding the foundational building blocks—what we call "primitives"—was essential to grasping how these complex systems actually communicate and function together.

AI primitives serve as the fundamental, composable components that enable AI systems to connect, share information, and work together coherently. Unlike high-level frameworks that abstract away complexity, primitives offer granular control over system architecture, data flow, and performance optimization.

The five core primitives that form the "digital nervous system" of modern AI architectures

We've witnessed a remarkable evolution in AI architecture over the past few years. What began as isolated, single-purpose models has transformed into richly interconnected AI ecosystems that require specialized connectivity solutions. These primitives essentially serve as the "digital nervous system" that allows information to flow between components.

I've found that AI diagrams are particularly helpful when explaining these concepts. Turning connectivity concepts into visual workflows with PageOn.ai's AI Blocks feature makes it easier to see how these primitives interact and support each other.

Primitives vs. Frameworks Comparison

Below I've created a visualization that highlights the key differences between using AI primitives and high-level frameworks:

flowchart TD

subgraph "AI Primitives Approach"

P1[Memory Stores] -.-> PC[Custom Integration]

P2[Context Management] -.-> PC

P3[Tool APIs] -.-> PC

P4[Data Movement] -.-> PC

P5[Agent Coordination] -.-> PC

PC --> PO[Optimized Solution]

end

subgraph "Framework Approach"

F[High-Level Framework] --> FA[Abstracted Solution]

end

style P1 fill:#FF8000,color:#fff

style P2 fill:#FF8000,color:#fff

style P3 fill:#FF8000,color:#fff

style P4 fill:#FF8000,color:#fff

style P5 fill:#FF8000,color:#fff

style PC fill:#f9f9f9,stroke:#ccc

style PO fill:#f5f5f5,stroke:#ccc

style F fill:#cccccc,stroke:#999

style FA fill:#f5f5f5,stroke:#ccc

The choice between primitives and frameworks matters most in sensitive domains like cybersecurity, where fine-tuned optimization and rigorous controls are essential. When working with primitives, you have both the freedom and the responsibility to audit every component and data flow.

Memory Stores: The Persistent Knowledge Layer

In my experience building AI systems, I've found that memory primitives are absolutely essential for creating agents that feel natural and contextually aware. Without them, every interaction becomes isolated—like talking to someone with severe amnesia.

Memory stores enable AI systems to retain and recall information across interactions, creating a persistent knowledge layer that informs future responses and actions. This capability transforms simple query-response systems into sophisticated assistants that can maintain coherent conversations and build upon previous interactions.

Types of AI Memory Stores

flowchart TD

M[Memory Primitives] --> STM[Short-Term Memory]

M --> EM[Episodic Memory]

M --> SM[Semantic Memory]

M --> PM[Procedural Memory]

M --> VDB[Vector Databases]

STM --> STM1[Working Context Window]

STM --> STM2[Recent Interactions]

EM --> EM1[Conversation History]

EM --> EM2[User Preferences]

SM --> SM1[Facts & Knowledge]

SM --> SM2[Domain Expertise]

PM --> PM1[Function Execution]

PM --> PM2[Process Workflows]

VDB --> VDB1[Similarity Search]

VDB --> VDB2[Semantic Retrieval]

style M fill:#FF8000,color:#fff

style STM fill:#FFA040,color:#fff

style EM fill:#FFA040,color:#fff

style SM fill:#FFA040,color:#fff

style PM fill:#FFA040,color:#fff

style VDB fill:#FFA040,color:#fff

I've implemented several types of memory stores in my AI projects, each serving different purposes:

- Short-term memory: Maintains the immediate conversation context, typically limited by the model's context window (measured in tokens).

- Episodic memory: Stores specific interactions and events, allowing the AI to reference past conversations.

- Semantic memory: Holds factual knowledge and conceptual understanding independent of specific experiences.

- Procedural memory: Contains information about how to perform specific tasks or functions.

Vector databases have emerged as a specialized form of memory primitive that's particularly well-suited for AI applications. These databases store information as high-dimensional vectors, enabling efficient similarity search and semantic retrieval—operations that are fundamental to many AI interactions.
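To make similarity search concrete, here is a minimal sketch of the idea in plain Python. It is not how a production vector database works internally: `InMemoryVectorStore` is a hypothetical toy class that scans every item linearly, and the three-dimensional vectors are hand-written stand-ins for embeddings that a real system would get from an embedding model.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class InMemoryVectorStore:
    """Hypothetical toy store: (text, vector) pairs searched by linear scan."""
    items: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, vector: list[float]) -> None:
        self.items.append((text, vector))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k stored texts most similar to the query vector."""
        scored = [(text, cosine_similarity(query, vec)) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Hand-written vectors stand in for real embeddings (an assumption for illustration).
store = InMemoryVectorStore()
store.add("refund policy", [1.0, 0.0, 0.0])
store.add("shipping times", [0.0, 1.0, 0.0])
store.add("return an item", [0.9, 0.1, 0.0])
results = store.search([1.0, 0.0, 0.0], k=2)
```

Real vector databases typically replace the linear scan with an approximate nearest-neighbor index, trading a little accuracy for much faster lookups on large collections.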

Case study: Enterprise AI assistant architecture with integrated memory store primitives

In enterprise environments, I've seen how memory primitives enable context-aware AI assistants to deliver significantly more value than their context-free counterparts. For example, a customer service AI with properly implemented memory stores can recall previous customer issues, track resolution status, and maintain awareness of customer preferences—all without requiring the customer to repeat information.

When working on complex memory architectures, I've found that visualizing different memory store structures and their connections using PageOn.ai's structural visualization tools helps tremendously in planning and communicating these systems to stakeholders.

Context Management: Maintaining Coherence Across AI Interactions

Context management primitives are closely related to memory stores, but they serve a more specialized purpose: preserving conversation history and situational awareness throughout an interaction. I've found that implementing robust context management is crucial for creating AI experiences that feel natural and coherent.

The technical approaches to context management have evolved significantly as AI systems have become more sophisticated. We now have several techniques at our disposal:

Context Management Techniques Comparison

In my work with latency-sensitive applications, I've discovered that context management has a significant impact on AI performance. When implementing context primitives, we must carefully balance several competing factors:

- Context window size: Larger windows preserve more history but increase computational demands and latency.

- Compression techniques: Methods like summarization can reduce context size while preserving key information.

- Prioritization algorithms: Not all context is equally valuable—some systems prioritize recent interactions or emotionally charged content.

- Privacy considerations: Context retention must be balanced with user privacy expectations and regulatory requirements.
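The window-size and compression trade-offs above can be sketched as a small budgeting function. This is an illustrative sketch under stated assumptions: `Turn`, `estimate_tokens`, and `fit_to_budget` are hypothetical names, word counting stands in for a real tokenizer, and the summary line is a placeholder where a real system would call a summarization step.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str
    text: str


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_budget(turns: list[Turn], max_tokens: int) -> list[Turn]:
    """Keep the most recent turns that fit; collapse older ones into one summary turn."""
    kept: list[Turn] = []
    used = 0
    # Walk backwards so the newest turns are prioritized.
    for turn in reversed(turns):
        cost = estimate_tokens(turn.text)
        if used + cost > max_tokens:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    dropped = len(turns) - len(kept)
    if dropped:
        # Placeholder summary; its own (small) cost is not counted against the budget here.
        kept.insert(0, Turn("system", f"[summary of {dropped} earlier turns]"))
    return kept


history = [
    Turn("user", "a b c d"),
    Turn("assistant", "e f g"),
    Turn("user", "h i"),
]
trimmed = fit_to_budget(history, max_tokens=5)
```

Prioritizing by recency is only one policy. The same loop could rank turns by any other score before filling the budget.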

Context Flow in Multi-Turn Conversations

sequenceDiagram

participant User

participant CM as Context Manager

participant LLM as AI Model

participant Memory as Memory Store

User->>CM: Initial Query

CM->>LLM: Query + Empty Context

LLM->>CM: Response

CM->>Memory: Store Interaction

CM->>User: Display Response

User->>CM: Follow-up Query

CM->>Memory: Retrieve Context

CM->>LLM: Query + Previous Context

LLM->>CM: Contextual Response

CM->>Memory: Update Interaction

CM->>User: Display Response

User->>CM: Ambiguous Query

CM->>Memory: Retrieve Extended Context

CM->>LLM: Query + Rich Context

LLM->>CM: Disambiguated Response

CM->>Memory: Update Interaction

CM->>User: Display Response

Creating dynamic context flow diagrams that illustrate information preservation across AI conversations has been invaluable in my work. These visualizations help stakeholders understand how context is maintained and why it matters for user experience.
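The retrieve, query, store loop from the sequence diagram can also be sketched in a few lines of code. This is a simplified illustration, not a reference design: `ContextManager` and `echo_model` are hypothetical names, the memory store is a plain dictionary, and the model is any callable that accepts a query plus prior context.

```python
from typing import Callable


class ContextManager:
    """Hypothetical manager that mirrors the diagram: retrieve, query, store."""

    def __init__(self, model: Callable[[str, list[str]], str]) -> None:
        self.model = model
        self.memory: dict[str, list[str]] = {}  # session id -> stored interaction lines

    def handle(self, session_id: str, query: str) -> str:
        # Retrieve whatever context exists for this session (empty on the first turn).
        context = list(self.memory.get(session_id, []))
        # Send the query together with the previous context to the model.
        response = self.model(query, context)
        # Store the interaction so the next turn can see it.
        self.memory.setdefault(session_id, []).extend(
            [f"user: {query}", f"assistant: {response}"]
        )
        return response


def echo_model(query: str, context: list[str]) -> str:
    """Stand-in for a real model call; reports how much context it received."""
    return f"{query} (seen {len(context)} prior lines)"


manager = ContextManager(echo_model)
first = manager.handle("s1", "hello")
second = manager.handle("s1", "and again")
```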

One of the most challenging aspects of context management is finding the right balance between computational efficiency and contextual richness. In my experience, this balance varies significantly based on the specific application and user expectations. For example, a customer service AI might need to retain detailed product information across a conversation, while a creative writing assistant might benefit from broader thematic context.

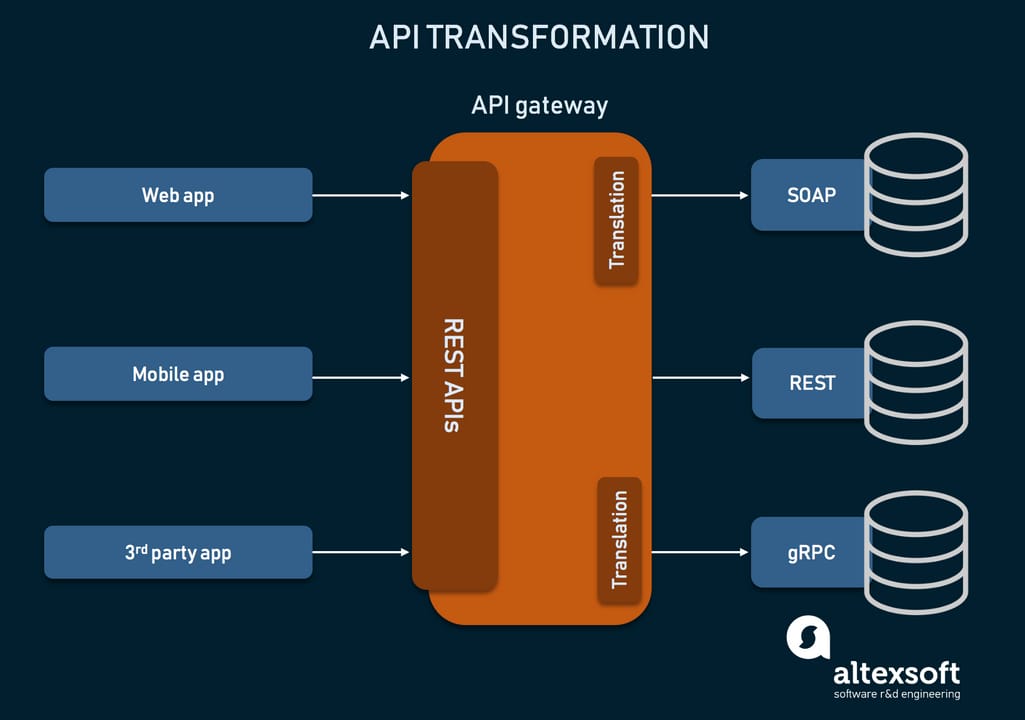

Tool APIs: Extending AI Capabilities Through Connectivity

Tool API primitives have revolutionized what AI systems can accomplish by enabling them to interact with external services and data sources. In my work building integrated AI solutions, I've seen how these primitives transform theoretical language models into practical, action-oriented systems.

At their core, tool APIs allow AI systems to extend beyond their internal knowledge and capabilities by connecting to specialized services. This connectivity enables actions like retrieving real-time data, performing calculations, executing transactions, and controlling external systems.

Standardized tool API architecture showing function calling protocols

The standardization of tool calling protocols has been a significant advancement in the AI field. Major AI providers have developed consistent approaches to function calling that enable:

- Structured parameter validation

- Type-safe function execution

- Error handling and retry mechanisms

- Authentication and authorization flows

- Cross-platform interoperability
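As a rough illustration of the first three items, the sketch below validates parameters against a declared schema and retries failed calls. It does not reproduce any provider's function-calling API: `ToolRegistry`, `ToolError`, and the name-to-type schema format are assumptions made up for this example.

```python
import time
from typing import Any, Callable, Optional


class ToolError(Exception):
    """Raised when a tool call is rejected or keeps failing."""


class ToolRegistry:
    """Hypothetical registry mapping tool names to functions and parameter types."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[Callable[..., Any], dict[str, type]]] = {}

    def register(self, name: str, func: Callable[..., Any], params: dict[str, type]) -> None:
        self._tools[name] = (func, params)

    def call(self, name: str, args: dict[str, Any], retries: int = 2, delay: float = 0.0) -> Any:
        if name not in self._tools:
            raise ToolError(f"unknown tool: {name}")
        func, params = self._tools[name]
        # Structured parameter validation: exact parameter names, then types.
        if set(args) != set(params):
            raise ToolError(f"expected parameters {sorted(params)}, got {sorted(args)}")
        for key, expected in params.items():
            if not isinstance(args[key], expected):
                raise ToolError(f"{key} must be {expected.__name__}")
        # Error handling with a fixed number of retries.
        last_error: Optional[Exception] = None
        for attempt in range(retries + 1):
            try:
                return func(**args)
            except Exception as exc:
                last_error = exc
                if attempt < retries:
                    time.sleep(delay)
        raise ToolError(f"{name} failed after {retries + 1} attempts") from last_error


registry = ToolRegistry()
registry.register("add", lambda a, b: a + b, {"a": int, "b": int})
total = registry.call("add", {"a": 2, "b": 3})
```

Validating before the first attempt means malformed model output is rejected immediately and never retried, while transient failures inside the tool get a bounded number of extra attempts.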

When implementing tool connectivity in enterprise environments, I've had to carefully consider security implications. Some key security considerations include:

Security Considerations for Tool API Implementation

flowchart TD

AI[AI System] --> Auth[Authentication]

AI --> Perm[Permission Control]

AI --> Valid[Input Validation]

AI --> Audit[Audit Logging]

AI --> Rate[Rate Limiting]

Auth --> Auth1[OAuth/OIDC]

Auth --> Auth2[API Keys]

Auth --> Auth3[JWT Tokens]

Perm --> Perm1[Least Privilege]

Perm --> Perm2[Scoped Access]

Perm --> Perm3[User Attribution]

Valid --> Valid1[Schema Validation]

Valid --> Valid2[Sanitization]

Valid --> Valid3[Type Checking]

Audit --> Audit1[Who Called What]

Audit --> Audit2[Parameter Logging]

Audit --> Audit3[Response Tracking]

Rate --> Rate1[Request Quotas]

Rate --> Rate2[Throttling]

Rate --> Rate3[Cost Controls]

style AI fill:#FF8000,color:#fff

style Auth fill:#FFA040,color:#fff

style Perm fill:#FFA040,color:#fff

style Valid fill:#FFA040,color:#fff

style Audit fill:#FFA040,color:#fff

style Rate fill:#FFA040,color:#fff
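Three of the controls in the diagram above (scoped access, rate limiting, and audit logging) can be combined in one small gate placed in front of every tool call. This is a hedged sketch rather than a hardened implementation: `ToolGate` is a hypothetical class, the sliding-window limiter is one of several possible throttling strategies, and authentication is assumed to have happened before `check` is called.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class ToolGate:
    """Hypothetical guard: scope check, sliding-window rate limit, audit log."""

    def __init__(self, scopes: dict[str, set[str]], max_calls: int, window_seconds: float) -> None:
        self.scopes = scopes  # caller -> tools it may use (least privilege)
        self.max_calls = max_calls
        self.window = window_seconds
        self.calls: dict[str, deque] = defaultdict(deque)  # caller -> recent call times
        self.audit_log: list[tuple[float, str, str, str]] = []

    def check(self, caller: str, tool: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        recent = self.calls[caller]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if tool not in self.scopes.get(caller, set()):
            decision = "denied:scope"
        elif len(recent) >= self.max_calls:
            decision = "denied:rate"
        else:
            recent.append(now)
            decision = "allowed"
        # Record who called what, and the outcome, whether or not it was allowed.
        self.audit_log.append((now, caller, tool, decision))
        return decision == "allowed"


gate = ToolGate({"agent": {"search"}}, max_calls=2, window_seconds=10.0)
decisions = [
    gate.check("agent", "search", now=0.0),
    gate.check("agent", "search", now=1.0),
    gate.check("agent", "search", now=2.0),   # third call inside the window
    gate.check("agent", "delete", now=3.0),   # tool outside the caller's scope
    gate.check("agent", "search", now=11.0),  # earlier calls have aged out
]
```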

I've implemented tool API primitives across various domains, each with unique requirements:

| Domain | Tool API Examples | Key Requirements |

|---|---|---|

| Financial Analysis | Market data retrieval, portfolio analysis, transaction execution | Ultra-low latency, strict security, audit trails |

| Healthcare Diagnostics | EHR integration, medical image analysis, treatment recommendation | HIPAA compliance, explainability, high reliability |

| Manufacturing | Equipment monitoring, quality control, supply chain optimization | Real-time responsiveness, OT/IT integration, fault tolerance |

When documenting tool API implementations, I've found that using PageOn.ai's Deep Search to integrate relevant API documentation visuals into explanatory materials significantly improves comprehension and adoption rates among development teams.

The future of tool API primitives is moving toward even greater standardization and interoperability. I'm particularly excited about the more sophisticated orchestration capabilities that AI tool trends in 2025 may bring to these connectivity primitives.

Data Movement Primitives: Optimizing Information Flow

In my experience building high-performance AI systems, I've found that data movement primitives are often the unsung heroes of the architecture. These primitives focus on optimizing how information flows between components, with special attention to speed, reliability, and efficiency.

High-speed, low-latency networks are critical for AI. AI models continuously ingest and process data from many sources, so without optimized connectivity, training takes longer and real-time applications struggle to meet their latency targets.

Impact of Network Latency on AI Performance

I've implemented several techniques for reducing data bottlenecks in AI pipelines:

- Data preprocessing optimization: Moving preprocessing closer to data sources to reduce transfer volumes.

- Compression strategies: Using domain-specific compression algorithms to reduce bandwidth requirements.

- Batch optimization: Finding the optimal batch sizes for different network conditions and model architectures.

- Edge computing: Deploying inference capabilities closer to data sources to minimize round-trip latency.

- Asynchronous processing: Implementing non-blocking data pipelines that can continue processing while waiting for network operations.
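Batching and asynchronous processing from the list above combine naturally. The sketch below uses Python's standard `asyncio` library. `fetch` is a hypothetical stand-in for a network call, with a short sleep simulating latency, and the batch size is an arbitrary illustrative value.

```python
import asyncio


async def fetch(item: int) -> int:
    """Stand-in for a network call; the sleep simulates I/O latency."""
    await asyncio.sleep(0.01)
    return item * 2


def batched(items: list[int], size: int) -> list[list[int]]:
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


async def run_pipeline(items: list[int], batch_size: int) -> list[int]:
    results: list[int] = []
    for batch in batched(items, batch_size):
        # Requests within a batch overlap instead of running one after another.
        results.extend(await asyncio.gather(*(fetch(i) for i in batch)))
    return results


output = asyncio.run(run_pipeline(list(range(5)), batch_size=2))
```

`asyncio.gather` returns results in the order the awaitables were passed in, so the output order matches the input even though the calls inside each batch run concurrently.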

Global AI Data Movement Architecture

flowchart TD

DC1[Data Center 1] <--> |High Speed Link| DC2[Data Center 2]

DC1 <--> |High Speed Link| DC3[Data Center 3]

DC2 <--> |High Speed Link| DC3

DC1 --> Edge1[Edge Location 1]

DC1 --> Edge2[Edge Location 2]

DC2 --> Edge3[Edge Location 3]

DC2 --> Edge4[Edge Location 4]

DC3 --> Edge5[Edge Location 5]

DC3 --> Edge6[Edge Location 6]

Edge1 --> |Low Latency| User1[User Region 1]

Edge2 --> |Low Latency| User2[User Region 2]

Edge3 --> |Low Latency| User3[User Region 3]

Edge4 --> |Low Latency| User4[User Region 4]

Edge5 --> |Low Latency| User5[User Region 5]

Edge6 --> |Low Latency| User6[User Region 6]

style DC1 fill:#FF8000,color:#fff

style DC2 fill:#FF8000,color:#fff

style DC3 fill:#FF8000,color:#fff

style Edge1 fill:#FFA040,color:#fff

style Edge2 fill:#FFA040,color:#fff

style Edge3 fill:#FFA040,color:#fff

style Edge4 fill:#FFA040,color:#fff

style Edge5 fill:#FFA040,color:#fff

style Edge6 fill:#FFA040,color:#fff

Global connectivity considerations become particularly important for distributed AI teams and applications. In my work with multinational organizations, I've had to design systems that account for:

- Regional data sovereignty requirements

- Varying network infrastructure quality across regions

- Time zone differences affecting synchronization windows

- Redundancy and failover across geographic locations

Private cloud environments with dedicated connectivity have proven particularly valuable for AI workloads. These environments eliminate congestion issues that can plague shared infrastructure and ensure consistent performance for data-hungry AI applications.

When explaining complex data flow patterns and identifying potential bottlenecks, I've found PageOn.ai's structural visualization capabilities to be invaluable. These tools allow me to create clear visual representations that help stakeholders understand where optimization efforts should be focused.

Agent Coordination Primitives: Orchestrating Multi-Agent Systems

As AI systems have grown more complex, I've increasingly found myself working with multi-agent architectures. These systems, composed of multiple specialized AI components working together, require sophisticated coordination primitives to function effectively.

Coordination primitives enable multiple AI agents to work together coherently by managing communication, task allocation, conflict resolution, and shared knowledge. Without these primitives, multi-agent systems quickly devolve into chaos—with agents working at cross-purposes or duplicating efforts.

Multi-agent system with central coordination primitive managing communication flow

Communication protocols and message passing systems form the backbone of agent coordination. In my experience, several approaches have proven effective:

Agent Communication Protocol Comparison

Agent-to-data connection mapping is another critical aspect of coordination primitives. This mapping defines how different agents access and interact with shared data resources, preventing conflicts and ensuring data consistency.

Agent Coordination Patterns

flowchart TD

subgraph "Hierarchical Coordination"

HC[Coordinator] --> HA1[Agent 1]

HC --> HA2[Agent 2]

HC --> HA3[Agent 3]

end

subgraph "Peer-to-Peer Coordination"

PA1[Agent 1] <--> PA2[Agent 2]

PA1 <--> PA3[Agent 3]

PA2 <--> PA3

end

subgraph "Hybrid Coordination"

HYC[Coordinator] --> HYA1[Agent 1]

HYC --> HYA2[Agent 2]

HYA1 <--> HYA2

end

style HC fill:#FF8000,color:#fff

style HA1 fill:#FFA040,color:#fff

style HA2 fill:#FFA040,color:#fff

style HA3 fill:#FFA040,color:#fff

style PA1 fill:#FFA040,color:#fff

style PA2 fill:#FFA040,color:#fff

style PA3 fill:#FFA040,color:#fff

style HYC fill:#FF8000,color:#fff

style HYA1 fill:#FFA040,color:#fff

style HYA2 fill:#FFA040,color:#fff
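The hierarchical pattern above can be sketched as a coordinator that routes each task to the agent registered for the matching skill and remembers finished tasks so that work is not duplicated. `Coordinator` and the skill names are hypothetical, and the agents here are plain functions. A real system would put message queues or network calls behind the same interface.

```python
from typing import Callable

Agent = Callable[[str], str]


class Coordinator:
    """Hypothetical central coordinator for the hierarchical pattern."""

    def __init__(self) -> None:
        self.agents: dict[str, Agent] = {}    # skill -> agent
        self.completed: dict[str, str] = {}   # task id -> result

    def register(self, skill: str, agent: Agent) -> None:
        self.agents[skill] = agent

    def dispatch(self, task_id: str, skill: str, payload: str) -> str:
        # Return the stored result if this task was already handled.
        if task_id in self.completed:
            return self.completed[task_id]
        if skill not in self.agents:
            raise LookupError(f"no agent registered for skill {skill!r}")
        result = self.agents[skill](payload)
        self.completed[task_id] = result
        return result


call_count = {"summarize": 0}


def summarizer(text: str) -> str:
    """Toy agent: returns the first word of the payload and counts invocations."""
    call_count["summarize"] += 1
    return text.split()[0]


coordinator = Coordinator()
coordinator.register("summarize", summarizer)
first_result = coordinator.dispatch("t1", "summarize", "alpha beta gamma")
repeat_result = coordinator.dispatch("t1", "summarize", "alpha beta gamma")
```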

One of the most challenging aspects of agent coordination is balancing autonomy and oversight. In my experience, different use cases call for different approaches:

- High autonomy: Best for exploratory tasks, creative work, and situations where diverse approaches are beneficial.

- Tight coordination: Essential for mission-critical systems, financial transactions, and safety-sensitive applications.

- Hybrid approaches: Most practical for real-world applications, with varying levels of autonomy based on context and risk.

Creating agent interaction diagrams showing communication flows between system components has been invaluable in my work. These diagrams help stakeholders understand the complex relationships between agents and identify potential bottlenecks or single points of failure.

As multi-agent systems continue to evolve, I expect to see more sophisticated coordination primitives emerge, particularly in areas like consensus mechanisms, reputation systems, and adaptive task allocation. These advancements will enable even more complex collaborative behaviors among AI agents.

Implementation Strategies: Building with AI Connectivity Primitives

When I approach a new AI project, one of the first strategic decisions I face is whether to build with low-level primitives or leverage high-level frameworks. This decision has far-reaching implications for development speed, system flexibility, and long-term maintenance.

To guide this decision, I've developed a framework that considers several key factors:

Decision Framework: Primitives vs. Frameworks

flowchart TD

Start[Start Decision] --> Q1{Custom Security Requirements?}

Q1 -->|Yes| P1[Consider Primitives]

Q1 -->|No| Q2{Performance Critical?}

Q2 -->|Yes| P1

Q2 -->|No| Q3{Need Fine Control?}

Q3 -->|Yes| P1

Q3 -->|No| Q4{Rapid Development Priority?}

Q4 -->|Yes| F1[Consider Frameworks]

Q4 -->|No| Q5{Team Expertise?}

Q5 -->|Deep AI Knowledge| P1

Q5 -->|General Development| F1

P1 --> Eval1[Evaluate Required Primitives]

F1 --> Eval2[Evaluate Framework Options]

Eval1 --> Impl[Implementation Strategy]

Eval2 --> Impl

style Start fill:#FF8000,color:#fff

style P1 fill:#FFA040,color:#fff

style F1 fill:#FFA040,color:#fff

style Impl fill:#FF8000,color:#fff
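The decision flow above translates directly into a small function. The function name and its boolean parameters are hypothetical labels for the questions in the flowchart. The logic follows the diagram's branches in order and adds nothing beyond them.

```python
def recommend_approach(
    custom_security: bool,
    performance_critical: bool,
    fine_control: bool,
    rapid_development: bool,
    deep_ai_expertise: bool,
) -> str:
    """Walk the flowchart's questions in order and return 'primitives' or 'frameworks'."""
    # Any of the first three questions answered "yes" points to primitives.
    if custom_security or performance_critical or fine_control:
        return "primitives"
    # Otherwise, a rapid-development priority points to frameworks.
    if rapid_development:
        return "frameworks"
    # Final tie-breaker: team expertise.
    return "primitives" if deep_ai_expertise else "frameworks"


choice = recommend_approach(
    custom_security=False,
    performance_critical=False,
    fine_control=False,
    rapid_development=True,
    deep_ai_expertise=True,
)
```

In this example the result is `"frameworks"`: none of the first three questions applies, so the rapid-development priority decides before team expertise is considered.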

Security and compliance considerations are particularly important when implementing custom AI connectivity. In my experience, several areas require special attention:

- Data privacy: Ensuring that sensitive information is properly protected throughout the AI pipeline.

- Authentication and authorization: Implementing robust identity verification and access control for all system components.

- Audit trails: Maintaining comprehensive logs of all system actions for accountability and troubleshooting.

- Regulatory compliance: Adhering to industry-specific regulations and standards (GDPR, HIPAA, etc.).

- Vulnerability management: Regularly assessing and addressing security vulnerabilities in all system components.

Implementation roadmap for integrating AI connectivity primitives

For latency-sensitive AI applications, I've developed several performance optimization techniques:

| Technique | Application | Impact |

|---|---|---|

| Connection Pooling | Database and API connections | Reduces connection establishment overhead |

| Caching Strategies | Frequently accessed data and responses | Minimizes redundant computations and queries |

| Parallel Processing | Independent operations and data processing | Improves throughput and utilization |

| Asynchronous I/O | Network and disk operations | Prevents blocking on I/O-bound operations |
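As one example from the table, a caching strategy can be as simple as a time-to-live (TTL) cache in front of an expensive lookup. This is a minimal sketch, assuming a single-threaded caller: `TTLCache` is a hypothetical class, and the injectable `clock` parameter exists only so expiry can be demonstrated without waiting.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Hypothetical cache that recomputes a value once its entry is older than the TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}  # key -> (stored_at, value)

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        # Serve from cache while the entry is still fresh.
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]
        # Otherwise compute, store, and return the new value.
        value = compute()
        self._entries[key] = (now, value)
        return value


fake_time = {"now": 0.0}
lookups = {"count": 0}


def expensive_lookup() -> str:
    """Stand-in for a slow query; counts how often it actually runs."""
    lookups["count"] += 1
    return f"result-{lookups['count']}"


cache = TTLCache(ttl_seconds=5.0, clock=lambda: fake_time["now"])
a = cache.get_or_compute("profile", expensive_lookup)  # computed
b = cache.get_or_compute("profile", expensive_lookup)  # served from cache
fake_time["now"] = 6.0
c = cache.get_or_compute("profile", expensive_lookup)  # expired, recomputed
```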

Future-proofing is another critical consideration in my implementation strategy. I design connectivity architectures that can evolve with AI capabilities by:

- Adopting modular designs that allow components to be replaced or upgraded independently

- Implementing standard interfaces that can accommodate new implementations

- Building in telemetry and observability to identify bottlenecks and optimization opportunities

- Planning for increasing scale and complexity as AI capabilities grow
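The first two points, modular design and standard interfaces, can be illustrated with Python's `typing.Protocol`. The `MemoryStore` interface and `DictStore` implementation below are hypothetical. The point of the sketch is only that calling code depends on the interface, so a different backend can be swapped in without changing it.

```python
from typing import Optional, Protocol


class MemoryStore(Protocol):
    """Hypothetical standard interface that any memory backend can satisfy."""

    def put(self, key: str, value: str) -> None: ...

    def get(self, key: str) -> Optional[str]: ...


class DictStore:
    """Simplest possible backend; a database-backed class could replace it later."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)


def remember_preference(store: MemoryStore, user: str, preference: str) -> Optional[str]:
    """Depends only on the interface, not on any concrete backend."""
    store.put(f"pref:{user}", preference)
    return store.get(f"pref:{user}")


saved = remember_preference(DictStore(), "ada", "dark mode")
```

Because `Protocol` uses structural typing, `DictStore` does not need to inherit from `MemoryStore`. Any class with matching `put` and `get` methods is accepted by a type checker.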

When communicating implementation concepts to stakeholders, I've found that transforming these ideas into clear visual roadmaps using PageOn.ai's visualization tools significantly improves understanding and alignment.

Future Horizons: Emerging Primitives and Connectivity Innovations

As I look toward the future of AI connectivity, I'm excited about several emerging primitives and innovations that promise to transform how AI systems communicate and collaborate.

Looking ahead to AI tool trends in 2025 and beyond, I see several next-generation AI connectivity primitives emerging:

Emerging AI Connectivity Primitives

flowchart TD

Future[Future Primitives] --> Fed[Federated Learning Primitives]

Future --> Quantum[Quantum-Compatible Connectors]

Future --> Cross[Cross-Modal Primitives]

Future --> Trust[Trust & Verification Primitives]

Future --> Edge[Edge Intelligence Primitives]

Fed --> Fed1[Secure Aggregation]

Fed --> Fed2[Differential Privacy]

Quantum --> Q1[Quantum State Transfer]

Quantum --> Q2[Hybrid Classical-Quantum]

Cross --> C1[Vision-Text Bridging]

Cross --> C2[Audio-Text-Vision Fusion]

Trust --> T1[Zero-Knowledge Proofs]

Trust --> T2[Provenance Tracking]

Edge --> E1[Adaptive Compression]

Edge --> E2[Intermittent Connectivity]

style Future fill:#FF8000,color:#fff

style Fed fill:#FFA040,color:#fff

style Quantum fill:#FFA040,color:#fff

style Cross fill:#FFA040,color:#fff

style Trust fill:#FFA040,color:#fff

style Edge fill:#FFA040,color:#fff

Quantum computing could significantly change AI connectivity requirements. If quantum systems mature, we will likely need new primitives to handle:

- Quantum-classical data exchange with minimal decoherence

- Distributed quantum processing across geographic locations

- Quantum-resistant security protocols for AI communications

- Hybrid architectures that leverage both quantum and classical computing resources

Cross-Modal Primitive Adoption Forecast

Cross-modal primitives are particularly exciting because they enable seamless transitions between text, vision, and audio modalities. These primitives will transform how AI systems perceive and interact with the world, enabling more natural and comprehensive understanding.

Standardization will play a crucial role in advancing AI primitive interoperability. I expect to see industry consortia and standards bodies develop frameworks that ensure connectivity primitives from different vendors can work together seamlessly, similar to how networking standards evolved.

Emerging technology relationships and dependencies in the AI connectivity ecosystem

As these emerging primitives develop, I find that visualizing technology relationships and dependencies with PageOn.ai's structural mapping tools helps me stay ahead of trends and plan for future integration needs.

One area where I see particular promise is in AI homework assistance and educational applications. As cross-modal primitives mature, AI systems will be able to process textbooks, lectures, diagrams, and student questions across multiple modalities, providing more comprehensive and personalized educational support.

Conclusion: The Connected Future of AI

As I reflect on the five core primitives that power modern AI connectivity—memory stores, context management, tool APIs, data movement, and agent coordination—I'm struck by how these fundamental building blocks enable the sophisticated AI systems we're beginning to take for granted.

The future of AI will be defined not just by more powerful models, but by more sophisticated connectivity between components. As these primitives continue to evolve and new ones emerge, we'll see AI systems that can collaborate, learn, and interact in increasingly natural and powerful ways.

For those looking to stay at the forefront of AI development, understanding these connectivity primitives is essential. They form the foundation upon which the next generation of AI applications will be built, enabling everything from more personalized digital assistants to complex multi-agent systems that can tackle previously impossible challenges.

As we navigate this exciting frontier, tools like PageOn.ai will be invaluable for visualizing, understanding, and communicating these complex concepts. By transforming abstract technical ideas into clear visual expressions, we can accelerate innovation and ensure that the benefits of advanced AI connectivity are accessible to all.
