Building Secure AI Agents: A Multi-Layered Approach to Safety

Visualizing comprehensive security architecture for autonomous AI systems

In today's rapidly evolving AI landscape, securing autonomous agents requires sophisticated, multi-layered approaches that go beyond traditional security measures. I'll guide you through the essential frameworks and implementation strategies needed to build truly secure AI agent systems.

Understanding the AI Agent Security Landscape

AI agents represent a fundamental shift in how software interacts with the world. Unlike traditional applications, these autonomous systems make decisions, take actions, and adapt over time, often without direct human oversight. This autonomy creates security challenges that traditional approaches were not designed to address.

Why Traditional Security Falls Short

Traditional security models operate on predictable, deterministic principles. They assume systems follow predetermined paths and can be secured through static permissions and boundaries. AI agents, however, introduce several challenges that break these assumptions:

- Autonomy: Agents make independent decisions that can't be fully predicted at design time

- Learning capabilities: They evolve behaviors based on experience and feedback

- Complex interactions: They often work in ecosystems with other agents, creating emergent behaviors

- Access requirements: Many require broad system permissions to perform their intended functions

AI Agent Security Challenges vs. Traditional Systems

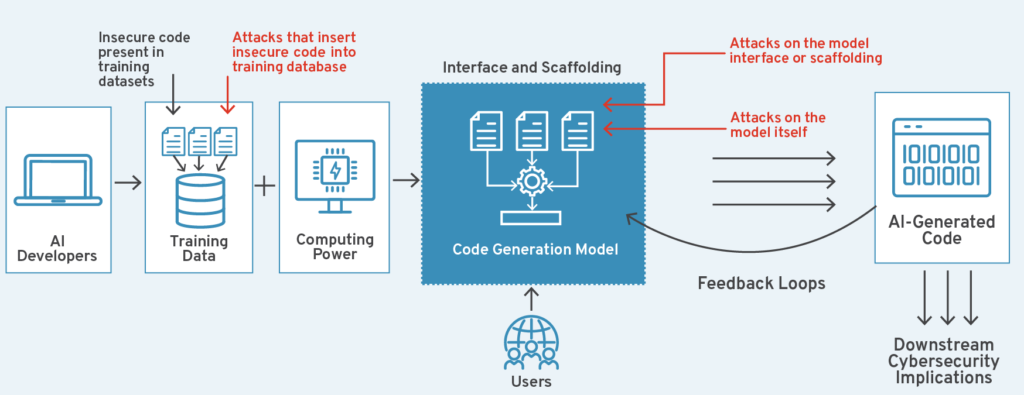

The Rising Threat Landscape

As AI agents become more prevalent in critical systems, they face increasingly sophisticated threats. These include:

- Prompt injection attacks that manipulate agent behavior through carefully crafted inputs

- Data poisoning that corrupts learning processes

- Model extraction attacks that attempt to steal proprietary capabilities

- Adversarial examples designed to trick perception systems

- Supply chain vulnerabilities in foundation models or dependencies

The business implications of these vulnerabilities can be severe: compromised data, damaged reputation, regulatory penalties, and potential harm to users or systems. This is why comprehensive security must be integrated from the earliest design phases rather than added as an afterthought.

To address these challenges effectively, we need to turn abstract security concepts into clear, actionable frameworks. Visual frameworks for AI safety tooling help teams understand, implement, and maintain complex security architectures across the entire AI agent lifecycle.

The MAESTRO Framework: Visualizing Seven Layers of AI Security

The MAESTRO (Multi-Agent Environment, Security, Threat, Risk, and Outcome) framework provides a comprehensive approach to securing AI agent systems through seven interconnected layers. I've found this structured approach invaluable for systematically addressing the full spectrum of security considerations across the entire AI lifecycle.

```mermaid
flowchart TD
    A[Foundation Models] --> B[Agent Core]
    B --> C[Tool Integration]
    C --> D[Operational Context]
    D --> E[Multi-Agent Interaction]
    E --> F[Deployment Environment]
    F --> G[Agent Ecosystem]
    style A fill:#FF8000,stroke:#333,stroke-width:1px,color:white
    style B fill:#FF9124,stroke:#333,stroke-width:1px,color:white
    style C fill:#FFA347,stroke:#333,stroke-width:1px,color:white
    style D fill:#FFB56B,stroke:#333,stroke-width:1px,color:black
    style E fill:#FFC78E,stroke:#333,stroke-width:1px,color:black
    style F fill:#FFD9B2,stroke:#333,stroke-width:1px,color:black
    style G fill:#FFEBD5,stroke:#333,stroke-width:1px,color:black
```

Each layer of the MAESTRO framework addresses specific security concerns while building upon the protections established in previous layers. Let me break down these layers and explain how they interconnect:

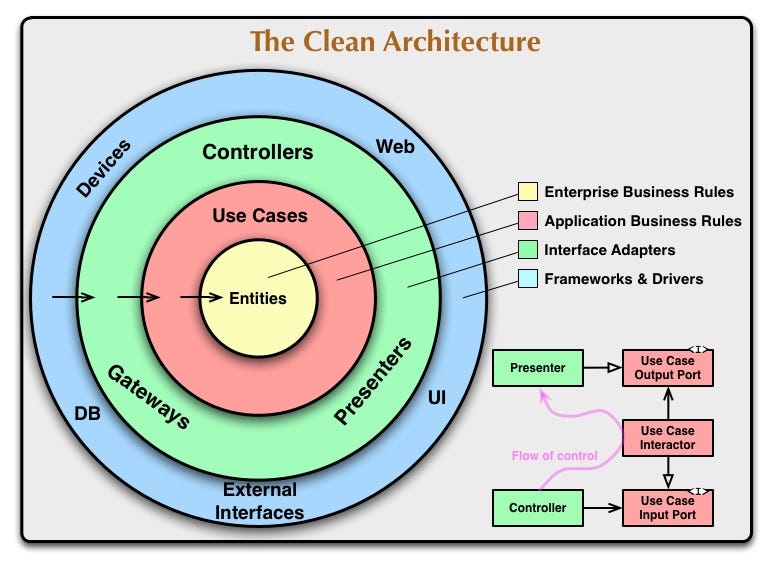

Layer 1: Foundation Models

Security begins at the foundation model level, where we establish core model safeguards and baseline protections. This includes implementing techniques like:

- Model alignment protocols to ensure behavior matches intended goals

- Robust input sanitization and validation

- Output filtering mechanisms to prevent harmful content generation

- Formal verification of critical model properties where possible
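The sketch below shows one minimal way to implement the input validation and output filtering bullets for a plain-text interface. The length limit, the regex patterns, and the names `sanitize_input` and `filter_output` are all hypothetical. Pattern matching is easy to evade, so treat this as one thin layer and not as a complete defense against prompt injection.

```python
import re
import unicodedata

# Hypothetical limits and patterns, for illustration only.
MAX_INPUT_CHARS = 4000
SUSPICIOUS_INPUT = [
    re.compile(r"ignore (all )?previous instructions", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]
# Rough detectors for credentials that should never appear in model output.
SECRET_OUTPUT = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                    # shape of an AWS access key ID
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
]


def sanitize_input(text: str) -> str:
    """Normalize and validate user input; raise ValueError if it is rejected."""
    # NFKC folds many look-alike characters into canonical forms.
    text = unicodedata.normalize("NFKC", text)
    # Drop control/format characters (e.g. zero-width spaces), keeping newline and tab.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input too long")
    for pattern in SUSPICIOUS_INPUT:
        if pattern.search(text):
            raise ValueError(f"input matched suspicious pattern: {pattern.pattern}")
    return text


def filter_output(text: str) -> str:
    """Redact spans of model output that look like credentials."""
    for pattern in SECRET_OUTPUT:
        text = pattern.sub("[REDACTED]", text)
    return text
```

For example, `filter_output("key=AKIAABCDEFGHIJKLMNOP")` returns `"key=[REDACTED]"`.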

Layer 2: Agent Core

The agent core layer focuses on the decision-making components that transform foundation models into autonomous agents. Security at this layer includes:

- Action manifests that define permissible behaviors

- Runtime policy enforcement mechanisms

- Decision logging and verification systems

- Deterministic safety constraints
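An action manifest can be represented as plain data. In this sketch, each action declares its allowed parameters and a set of deterministic checks, and anything undeclared is denied. `ActionManifest`, `validate_call`, and the `send_email` example with its domain rule are hypothetical illustrations, not a standard format.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ActionManifest:
    """Declares what an action may do; anything not declared is denied."""
    name: str
    allowed_params: frozenset[str]
    # Deterministic checks: each returns True if the parameters are acceptable.
    constraints: tuple[Callable[[dict[str, Any]], bool], ...] = ()


def validate_call(manifest: ActionManifest, params: dict[str, Any]) -> list[str]:
    """Return a list of violations; an empty list means the call conforms."""
    violations = []
    unexpected = set(params) - manifest.allowed_params
    if unexpected:
        violations.append(f"unexpected params: {sorted(unexpected)}")
    for i, check in enumerate(manifest.constraints):
        if not check(params):
            violations.append(f"constraint {i} failed")
    return violations


# Hypothetical manifest: email may only go to the company domain, at most 5 recipients.
SEND_EMAIL = ActionManifest(
    name="send_email",
    allowed_params=frozenset({"to", "subject", "body"}),
    constraints=(
        lambda p: all(addr.endswith("@example.com") for addr in p.get("to", [])),
        lambda p: len(p.get("to", [])) <= 5,
    ),
)
```

Because the checks are deterministic, the same call always gets the same verdict. This makes the verdicts easy to log and audit.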

Layer 3: Tool Integration

As agents gain access to tools and external systems, this layer secures these interactions through:

- Granular permission systems for tool access

- Sandboxed execution environments

- Request validation and rate limiting

- Tool-specific security constraints
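This sketch combines granular tool permissions with a token-bucket rate limiter. It assumes permissions are granted per agent as `tool:operation` strings. `ToolGateway`, the grant format, and the limits are hypothetical, and sandboxed execution of the tool itself is out of scope here.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Token bucket: refills `rate` tokens per second and holds at most `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity          # start full so short bursts are allowed
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ToolGateway:
    """Checks per-agent grants, then per-agent rate limits, before any tool call runs."""

    def __init__(self, grants: dict[str, set[str]], rate: float, burst: int) -> None:
        self.grants = grants                 # agent_id -> {"tool:operation", ...}
        self.rate = rate
        self.burst = burst
        self.buckets: dict[str, TokenBucket] = {}

    def check(self, agent_id: str, tool: str, operation: str) -> tuple[bool, str]:
        if f"{tool}:{operation}" not in self.grants.get(agent_id, set()):
            return False, "permission denied"    # deny by default
        bucket = self.buckets.get(agent_id)
        if bucket is None:
            bucket = self.buckets[agent_id] = TokenBucket(self.rate, self.burst)
        if not bucket.allow():
            return False, "rate limited"
        return True, "ok"
```

The permission check runs before the rate limit. As a result, an agent without a grant cannot drain tokens or probe how much capacity is left.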

Layer 4: Operational Context

This layer addresses how agents interact with their operational environment, including:

- Context-aware security policies that adapt to different situations

- Environmental monitoring systems

- Boundary enforcement mechanisms

- Real-time security assessment
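A context-aware policy can return different decisions for the same action depending on where and when it runs. This sketch assumes the runtime supplies an environment label, a data-sensitivity label, and whether a human is on call. The risk tiers, labels, and the `decide` function are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    DENY = "deny"


@dataclass(frozen=True)
class Context:
    environment: str        # e.g. "staging" or "production"
    data_sensitivity: str   # e.g. "public", "internal", "restricted"
    human_on_call: bool


# Hypothetical base risk tiers for actions.
ACTION_RISK = {"read_report": 1, "update_record": 2, "delete_record": 3}


def decide(action: str, ctx: Context) -> Decision:
    risk = ACTION_RISK.get(action)
    if risk is None:
        return Decision.DENY                  # unknown actions are never allowed
    if ctx.data_sensitivity == "restricted":
        risk += 1                             # sensitive data raises the bar
    if ctx.environment == "production":
        risk += 1
    if risk <= 2:
        return Decision.ALLOW
    if risk <= 3 and ctx.human_on_call:
        return Decision.REVIEW                # escalate only if someone can respond
    return Decision.DENY
```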

Layer 5: Multi-Agent Interaction

When multiple agents work together, additional security concerns emerge:

- Secure communication protocols between agents

- Authority and trust models for collaborative decision-making

- Conflict resolution mechanisms

- Collective behavior monitoring
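One simple authority model for collaborative decisions is a quorum with veto. Only agents at or above an authority threshold get a vote, each agent counts once, and any eligible rejection blocks the action. The numeric authority levels, the veto rule, and `resolve` are hypothetical choices. A real system would also authenticate each vote.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    agent_id: str
    approve: bool


def resolve(votes: list[Vote], authority: dict[str, int],
            min_authority: int, quorum: int) -> str:
    """Return "approved", "rejected", or "pending" for a proposed collective action."""
    eligible: dict[str, bool] = {}
    for vote in votes:
        # Unknown agents default to authority 0 and are ignored.
        if authority.get(vote.agent_id, 0) >= min_authority:
            eligible.setdefault(vote.agent_id, vote.approve)  # first vote counts; duplicates ignored
    if any(not ok for ok in eligible.values()):
        return "rejected"        # conservative conflict resolution: any eligible veto wins
    if sum(eligible.values()) >= quorum:
        return "approved"
    return "pending"             # not enough eligible approvals yet
```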

Layer 6: Deployment Environment

This layer focuses on securing the infrastructure where agents operate:

- Secure deployment pipelines

- Infrastructure hardening

- Network segmentation and isolation

- Comprehensive logging and monitoring

Layer 7: Agent Ecosystem

The outermost layer addresses the broader ecosystem in which agents exist:

- Governance frameworks and policies

- Compliance monitoring and reporting

- Incident response plans

- Continuous security assessment

Visualizing these interconnected layers helps teams understand how security measures build on each other. Agentic workflow visualization tools such as PageOn.ai's AI Blocks can represent these complex relationships clearly, which makes gaps or weaknesses in the security architecture easier to spot.

Implementing Hybrid Defense Strategies

Effective AI agent security requires a hybrid approach that combines traditional deterministic controls with AI reasoning-based defense mechanisms. I've found this balanced strategy essential for addressing the unique challenges of autonomous systems.

```mermaid
flowchart LR
    A[Agent Request] --> B{Runtime Policy Engine}
    B -->|Approved| C[Action Execution]
    B -->|Rejected| D[Denial]
    B -->|Uncertain| E[AI Security Analyzer]
    E -->|Approved| C
    E -->|Rejected| D
    E -->|Human Review| F[Security Team]
    F -->|Approved| C
    F -->|Rejected| D
```
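The flow in the diagram above can be sketched as a three-stage router. This assumes the deterministic engine returns "approved", "rejected", or "uncertain", and that the AI analyzer returns a risk score between 0 and 1. `route`, the score thresholds, and the callables are hypothetical stand-ins. In practice the analyzer would be a separate security model.

```python
from typing import Callable


def route(request: dict,
          policy_engine: Callable[[dict], str],
          ai_analyzer: Callable[[dict], float],
          human_review: Callable[[dict], bool],
          low: float = 0.2, high: float = 0.8) -> str:
    """Deterministic rules first; only uncertain cases reach the analyzer and humans."""
    verdict = policy_engine(request)
    if verdict in ("approved", "rejected"):
        return verdict                   # the AI layer can never override a hard rule
    if verdict != "uncertain":
        return "rejected"                # fail closed on unexpected engine output
    risk = ai_analyzer(request)          # 0.0 = benign, 1.0 = clearly malicious
    if risk < low:
        return "approved"
    if risk > high:
        return "rejected"
    # Ambiguous risk: escalate to the security team.
    return "approved" if human_review(request) else "rejected"
```

The key design choice is ordering: the cheap, predictable checks run first. The probabilistic analyzer only narrows the uncertain middle, and humans see only what the analyzer cannot settle.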

Layer 1: Deterministic Controls

The first layer of defense uses traditional, rule-based security measures to establish clear boundaries for agent behavior:

- Runtime policy enforcement engines that monitor and control agent actions before execution

- Action manifests that explicitly define security properties of permitted actions

- Hard constraints on resource usage, data access, and external communications

- Predefined kill switches and circuit breakers for emergency containment
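Kill switches and circuit breakers can be sketched as follows, assuming the agent runtime calls `guard()` before each action and `record()` after it. The failure threshold, the cool-down, and the class itself are hypothetical choices. The breaker opens after repeated failures and allows one trial action after the cool-down. The kill switch stays engaged until an operator resets it.

```python
import time
from typing import Optional


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; a kill switch overrides everything."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 60.0) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.failures = 0
        self.opened_at: Optional[float] = None
        self.killed = False

    def kill(self) -> None:
        """Emergency stop: block all actions until an operator calls reset()."""
        self.killed = True

    def reset(self) -> None:
        self.killed = False
        self.failures = 0
        self.opened_at = None

    def guard(self) -> bool:
        """Return True if the agent may act right now."""
        if self.killed:
            return False
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.cooldown_s:
                return False
            # Half-open: allow one trial action; a single further failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        return True

    def record(self, success: bool) -> None:
        if success:
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = time.monotonic()
```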

Runtime Policy Enforcement Architecture

A robust runtime policy enforcement system includes several key components:

- Action manifest registry that catalogs all permitted actions with their security properties

- Policy evaluation engine that checks requests against defined policies

- Authentication and authorization verification for all actions

- Resource and rate limiting to prevent abuse

- Comprehensive logging of all decisions and actions
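The components listed above can be combined into a single enforcement path. This sketch makes several assumptions: an in-memory manifest registry, agent identities that were already authenticated upstream and carry a set of roles, and a per-agent sliding-window rate limit. `PolicyEngine`, the manifest fields, and the limits are hypothetical. Every decision, including denials, is written to an audit log as structured JSON.

```python
import json
import logging
import time
from collections import defaultdict, deque

logging.basicConfig(level=logging.INFO, format="%(message)s")  # demo configuration
audit_log = logging.getLogger("policy.audit")


class PolicyEngine:
    def __init__(self, manifests: dict[str, dict], max_calls: int, window_s: float) -> None:
        self.manifests = manifests          # action manifest registry: name -> properties
        self.max_calls = max_calls
        self.window_s = window_s
        self.calls: dict[str, deque] = defaultdict(deque)

    def evaluate(self, agent_id: str, roles: set[str], action: str) -> bool:
        allowed, reason = self._check(agent_id, roles, action)
        # Log every decision, allowed or denied, for later audit.
        audit_log.info(json.dumps({"ts": time.time(), "agent": agent_id,
                                   "action": action, "allowed": allowed, "reason": reason}))
        return allowed

    def _check(self, agent_id: str, roles: set[str], action: str) -> tuple[bool, str]:
        manifest = self.manifests.get(action)
        if manifest is None:
            return False, "action not in manifest registry"
        if not roles & set(manifest["allowed_roles"]):
            return False, "role not authorized"
        # Sliding-window rate limit: drop timestamps older than the window.
        now = time.monotonic()
        window = self.calls[agent_id]
        while window and now - window[0] > self.window_s:
            window.popleft()
        if len(window) >= self.max_calls:
            return False, "rate limit exceeded"
        window.append(now)
        return True, "ok"
```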

[Chart: Policy Enforcement Components]

Layer 2: AI Reasoning-Based Defenses

The second layer leverages AI's own reasoning capabilities to enhance security:

- Adversarial training that exposes agents to potential attack patterns during development

- Specialized security analyst models that evaluate requests for malicious intent

- Self-reflection mechanisms that question the safety of planned actions

- Anomaly detection systems that identify unusual patterns in agent behavior
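As one concrete instance of the anomaly detection bullet, this sketch flags an agent whose action count in the current interval deviates from its own history by more than a z-score threshold. It is a simple statistical stand-in, not a reasoning-based model. The threshold and the minimum history length are hypothetical defaults.

```python
import statistics


def is_anomalous(history: list[int], current: int,
                 threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag `current` if it lies more than `threshold` std devs from the mean of `history`."""
    if len(history) < min_history:
        return False                        # not enough baseline data to judge
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return current != mean              # perfectly stable baseline: any change is unusual
    return abs(current - mean) / stdev > threshold
```

For example, an agent that usually issues 9 to 12 tool calls per minute and suddenly issues 40 would be flagged. Flagged cases could then be passed to a security analyst model or a human.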

This hybrid approach creates overlapping layers of protection. Deterministic controls provide clear, predictable boundaries, while AI reasoning-based defenses add the flexibility to handle novel situations and evolving threats. When visualizing these defense strategies, mapping the AI agent tool chain helps trace workflows that span multiple systems.

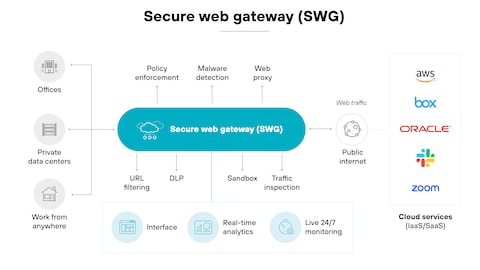

Securing the Agent Communication Ecosystem

As AI agents increasingly work together in collaborative environments, securing their communications becomes critical. I've found that implementing specialized protocols for agent-to-agent interaction helps maintain security across the entire ecosystem.

Agent-to-Agent (A2A) Security Protocols

A2A security protocols establish secure communication channels between autonomous systems:

- Mutual authentication requirements before communication begins

- Encrypted message channels with perfect forward secrecy

- Message integrity verification

- Rate limiting and anomaly detection

- Standardized communication formats with schema validation

```mermaid
sequenceDiagram
    participant A as Agent A
    participant Auth as Auth Service
    participant B as Agent B
    A->>Auth: Request communication token
    Auth->>Auth: Verify Agent A identity & permissions
    Auth->>A: Issue signed token
    A->>B: Initiate communication with token
    B->>Auth: Validate token
    Auth->>B: Confirm token validity
    B->>A: Establish encrypted channel
    A->>B: Send encrypted message
    B->>B: Validate message format
    B->>A: Send encrypted response
```
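The token and integrity steps in the sequence above can be sketched with signed, short-lived tokens and per-message HMAC tags. This sketch assumes a symmetric key held only by the auth service, plus a per-session key shared by the two agents. It omits encryption and mutual authentication, and it does not follow the wire format of any published agent-to-agent specification. `issue_token`, `validate_token`, the token layout, and the message schema are hypothetical. A production system would more likely use mutual TLS and asymmetric signatures.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical secret held only by the auth service (use a secrets manager in practice).
AUTH_KEY = b"demo-only-auth-service-key"
# Hypothetical minimal message schema: required field -> expected type.
MESSAGE_SCHEMA = {"type": str, "content": str}


def _sign(key: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 tag, base64url-encoded so it can travel inside text tokens."""
    return base64.urlsafe_b64encode(hmac.new(key, data, hashlib.sha256).digest())


def issue_token(agent_id: str, audience: str, ttl_s: int = 300) -> str:
    """Auth service: sign claims naming the sender, the intended peer, and an expiry."""
    claims = {"sub": agent_id, "aud": audience, "exp": int(time.time()) + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return f"{body.decode()}.{_sign(AUTH_KEY, body).decode()}"


def validate_token(token: str, expected_audience: str) -> Optional[dict]:
    """Auth service: return the claims only if signature, audience, and expiry check out."""
    parts = token.split(".")
    if len(parts) != 2:
        return None
    body, sig = parts[0].encode(), parts[1].encode()
    if not hmac.compare_digest(sig, _sign(AUTH_KEY, body)):   # constant-time comparison
        return None
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["aud"] != expected_audience or claims["exp"] < time.time():
        return None
    return claims


def tag_message(session_key: bytes, message: dict) -> dict:
    """Sender: attach an integrity tag so the receiver can detect tampering."""
    payload = json.dumps(message, sort_keys=True)
    return {"payload": payload, "tag": _sign(session_key, payload.encode()).decode()}


def verify_message(session_key: bytes, envelope: dict) -> Optional[dict]:
    """Receiver: check the integrity tag, then validate the message format."""
    expected = _sign(session_key, envelope["payload"].encode())
    if not hmac.compare_digest(expected, envelope["tag"].encode()):
        return None
    message = json.loads(envelope["payload"])
    if not all(isinstance(message.get(k), t) for k, t in MESSAGE_SCHEMA.items()):
        return None
    return message
```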

Secure Multi-party Computation (MPC)

When multiple agents need to collaborate on sensitive tasks, MPC protocols provide additional security guarantees:

- Secure computation across multiple agents without revealing individual inputs

- Distributed decision-making with consensus requirements

- Cryptographic guarantees for data privacy during collaboration

- Verifiable computation results
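The first bullet, "secure computation without revealing individual inputs", can be made concrete with additive secret sharing over a prime field, one of the simplest MPC building blocks. This sketch computes a sum. It assumes honest-but-curious agents, private channels between them, and a true total smaller than the modulus. Real MPC protocols add defenses against malicious parties and verifiable results. The modulus and function names are illustrative.

```python
import secrets

PRIME = 2**61 - 1   # a Mersenne prime; all arithmetic is modulo this value


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n uniformly random shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def secure_sum(private_inputs: list[int]) -> int:
    """Simulate n agents jointly computing the sum of their private inputs."""
    n = len(private_inputs)
    # Agent i splits its input and sends share j to agent j.
    all_shares = [share(x, n) for x in private_inputs]
    # Agent j adds the shares it received; any single share looks uniformly random.
    partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n)]
    # Publishing only the partial sums reveals the total, not the individual inputs.
    return sum(partial_sums) % PRIME


print(secure_sum([12, 30, 7]))  # → 49
```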

Visualizing Security Boundaries

Creating clear visual representations of agent interaction boundaries and permissions helps teams understand and maintain security constraints. These visualizations can show:

- Which agents can communicate with each other

- What types of data can be shared between agents

- Required authentication mechanisms for different interaction types

- Containment boundaries for agent groups

By mapping these relationships visually, teams can more easily identify potential security gaps or unintended communication paths. This is where visualization tools become invaluable for security planning and maintenance, especially when dealing with complex multi-agent systems where AI assistants interact with each other and with human users.
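The same relationships can also be checked programmatically. This sketch treats the allowed agent-to-agent links as a directed graph and uses breadth-first search to find indirect paths between agents that should never communicate. It assumes the allowlist is available as a dictionary and that intermediaries may forward messages. The agent names are hypothetical.

```python
from collections import deque
from typing import Optional


def find_path(allowed: dict[str, set[str]], src: str, dst: str) -> Optional[list[str]]:
    """Breadth-first search over allowed links; returns the shortest path or None."""
    parents: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            current: Optional[str] = node
            while current is not None:       # walk back to the source
                path.append(current)
                current = parents[current]
            return path[::-1]
        for nxt in allowed.get(node, set()):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def audit(allowed: dict[str, set[str]],
          forbidden: list[tuple[str, str]]) -> dict[tuple[str, str], list[str]]:
    """Report every forbidden pair that is still reachable, with the path that reaches it."""
    violations = {}
    for src, dst in forbidden:
        path = find_path(allowed, src, dst)
        if path is not None:
            violations[(src, dst)] = path
    return violations


# Hypothetical topology: no direct web -> db link, but the summarizer bridges them.
ALLOWED = {"web_agent": {"summarizer"}, "summarizer": {"db_agent"}, "db_agent": set()}
```

Here `audit(ALLOWED, [("web_agent", "db_agent")])` reports the path `web_agent -> summarizer -> db_agent`. That is exactly the kind of unintended path a diagram of direct links can hide.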

Threat Modeling for AI Agent Deployments

Effective security begins with comprehensive threat modeling. For AI agents, this process must account for their unique characteristics and potential vulnerabilities. I've found that systematic approaches yield the most thorough protection.

Systematic Vulnerability Identification

Threat modeling for AI agents should consider several key dimensions:

- Input vulnerabilities (prompt injection, data poisoning)

- Model vulnerabilities (extraction attacks, adversarial examples)

- Runtime vulnerabilities (resource exhaustion, side-channel attacks)

- Integration vulnerabilities (API abuse, privilege escalation)

- Ecosystem vulnerabilities (communication interception, trust exploitation)

```mermaid
flowchart TD
    A[Threat Actor] --> B{Attack Vector}
    B --> C[Input Manipulation]
    B --> D[Model Exploitation]
    B --> E[Runtime Abuse]
    B --> F[Integration Attacks]
    B --> G[Ecosystem Attacks]
    C --> H[Prompt Injection]
    C --> I[Data Poisoning]
    D --> J[Model Extraction]
    D --> K[Adversarial Examples]
    E --> L[Resource Exhaustion]
    E --> M[Side-Channel Attacks]
    F --> N[API Abuse]
    F --> O[Privilege Escalation]
    G --> P[Communication Interception]
    G --> Q[Trust Exploitation]
```

Creating Visual Attack Trees

Attack trees provide a structured way to visualize potential threat scenarios. For each critical asset or function, we can map out:

- Potential attack goals (what an attacker might want to achieve)

- Attack paths (sequences of actions that could lead to compromise)

- Required capabilities (what an attacker would need)

- Existing mitigations (security measures already in place)

- Residual risks (vulnerabilities that remain despite mitigations)
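An attack tree can be encoded with AND/OR nodes and evaluated against current mitigations to see which goals remain reachable and through which leaf steps. This sketch assumes each leaf is an attacker step that a mitigation either blocks completely or not at all. Real analyses often attach costs or probabilities instead. The example tree and its step names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kind: str = "leaf"              # "leaf", "and" (all children needed), or "or" (any child)
    children: list["Node"] = field(default_factory=list)


def feasible(node: Node, mitigated: set[str]) -> bool:
    """True if the attacker can still achieve `node` given the mitigated leaf steps."""
    if node.kind == "leaf":
        return node.name not in mitigated
    results = [feasible(child, mitigated) for child in node.children]
    return all(results) if node.kind == "and" else any(results)


def residual_paths(node: Node, mitigated: set[str]) -> list[list[str]]:
    """Enumerate unmitigated leaf combinations that still reach the goal (residual risk)."""
    if node.kind == "leaf":
        return [] if node.name in mitigated else [[node.name]]
    child_paths = [residual_paths(c, mitigated) for c in node.children]
    if node.kind == "or":
        return [p for paths in child_paths for p in paths]
    combos: list[list[str]] = [[]]
    for paths in child_paths:        # AND: combine one path from every child
        combos = [c + p for c in combos for p in paths]
    return combos


# Hypothetical tree for one critical asset.
EXAMPLE_GOAL = Node("exfiltrate customer data", "or", [
    Node("prompt injection via email", "and", [
        Node("send crafted email"), Node("agent reads inbox"), Node("agent has export tool"),
    ]),
    Node("steal API key", "or", [Node("key in logs"), Node("key in repo")]),
])
```

With the key-handling steps mitigated, the only residual path is the email injection chain. Adding a mitigation for "agent has export tool" closes it.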

Mapping Threats Across MAESTRO Layers

By mapping potential threats across the seven layers of the MAESTRO framework, we can ensure comprehensive coverage:

| MAESTRO Layer | Example Threats | Mitigation Strategies |
|---|---|---|
| Foundation Models | Model poisoning, backdoor insertion, training data manipulation | Secure training pipelines, data validation, model verification |
| Agent Core | Prompt injection, goal manipulation, reasoning exploitation | Input sanitization, goal validation, reasoning guardrails |
| Tool Integration | Tool abuse, privilege escalation, command injection | Least privilege access, sandboxing, command validation |
| Operational Context | Context manipulation, environment poisoning | Context validation, environment monitoring, boundary enforcement |
| Multi-Agent Interaction | Communication interception, trust exploitation, collusion | Encrypted channels, trust verification, interaction monitoring |
| Deployment Environment | Infrastructure attacks, network vulnerabilities, resource exhaustion | Infrastructure hardening, network segmentation, resource limiting |
| Agent Ecosystem | Governance bypass, compliance violations, ecosystem manipulation | Strong governance, continuous monitoring, ecosystem verification |

Visual threat models transform abstract security concepts into actionable guidance. By creating clear representations of potential vulnerabilities and attack paths, teams can prioritize security efforts and ensure comprehensive coverage across all aspects of AI agent systems.

[Chart: AI Agent Attack Surface Distribution]

Enterprise Integration and Governance

Successfully integrating secure AI agents into enterprise environments requires clear governance frameworks and organizational responsibility models. I've found that establishing these structures early helps prevent security gaps during deployment.

Organizational Responsibility Models

Effective AI agent security requires clear roles and responsibilities:

- Executive sponsorship and oversight

- Security architecture and design teams

- Development teams with security training

- Dedicated AI safety specialists

- Operations and monitoring personnel

- Compliance and audit functions

```mermaid
flowchart TD
    A[Executive Leadership] --> B[AI Governance Committee]
    B --> C[Security Architecture]
    B --> D[Development Teams]
    B --> E[AI Safety Team]
    B --> F[Operations]
    B --> G[Compliance]
    C --> H[Security Requirements]
    C --> I[Threat Modeling]
    C --> J[Security Design]
    D --> K[Secure Development]
    D --> L[Testing]
    D --> M[Implementation]
    E --> N[Safety Monitoring]
    E --> O[Incident Response]
    E --> P[Continuous Assessment]
    F --> Q[Deployment]
    F --> R[Operational Monitoring]
    F --> S[Maintenance]
    G --> T[Policy Compliance]
    G --> U[Regulatory Alignment]
    G --> V[Auditing]
```

Compliance Frameworks and Regulatory Requirements

AI agent deployments must align with various compliance requirements:

- Industry-specific regulations (finance, healthcare, etc.)

- Data protection laws (GDPR, CCPA, etc.)

- Emerging AI-specific regulations

- Internal governance policies

- Ethical AI frameworks and standards

Security Documentation and Communication

Clear documentation helps stakeholders understand security measures:

- Security architecture diagrams

- Threat models and risk assessments

- Compliance mapping documents

- Incident response playbooks

- Security policies and procedures

Monitoring and Governance Dashboards

Visual dashboards provide real-time insights into security status:

- Agent activity monitoring

- Security incident tracking

- Compliance status indicators

- Risk level visualizations

- Performance against security KPIs

By transforming complex governance requirements into accessible visual guides, teams can more easily understand and implement appropriate security measures. This visual approach is particularly valuable when communicating with stakeholders who may not have deep technical expertise in AI security but need to understand the overall security posture.

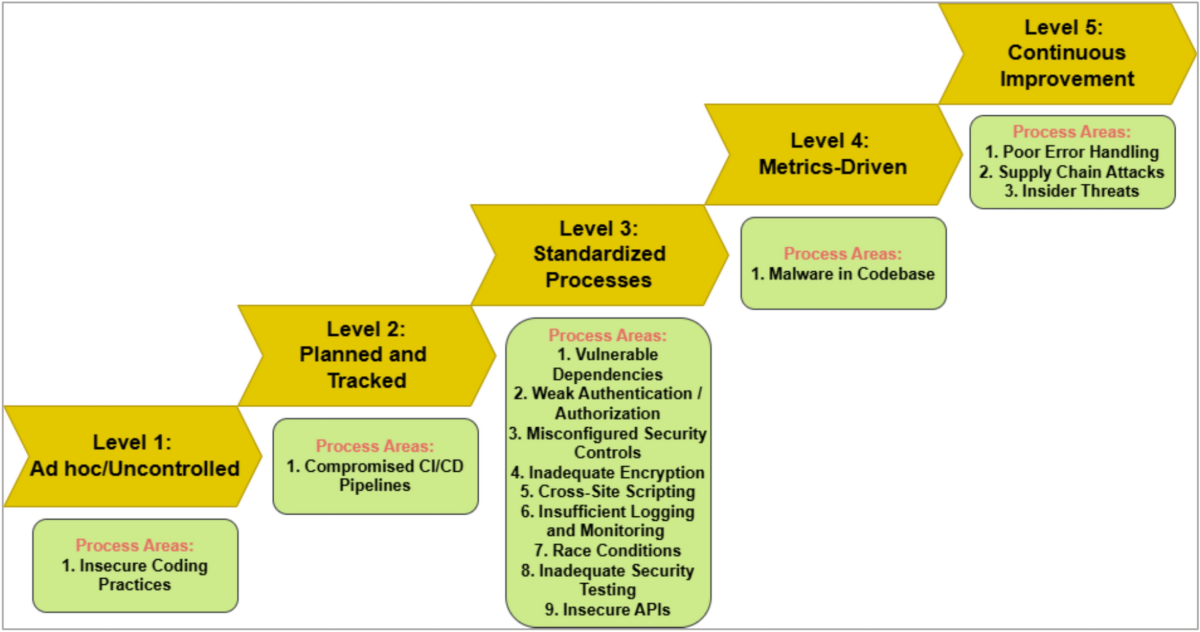

Future-Proofing Agent Security Architecture

As AI capabilities continue to advance rapidly, security architectures must be designed to evolve alongside them. I've found that forward-thinking approaches help maintain protection even as new challenges emerge.

Anticipating Emerging Threats

Future-oriented security planning should consider:

- Increasing agent autonomy and its security implications

- More sophisticated attack techniques targeting AI systems

- Emergent behaviors in complex multi-agent systems

- New capabilities that may introduce unforeseen vulnerabilities

- Evolving regulatory landscapes and compliance requirements

[Chart: Emerging AI Security Challenges]

Designing Adaptive Security Protocols

Security architectures should incorporate flexibility to adapt to changing conditions:

- Modular security components that can be updated independently

- Continuous learning systems that adapt to new threat patterns

- Configurable policy engines that can implement new rules without code changes

- Regular security architecture reviews and updates

- Scenario planning for potential future threats
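The "configurable policy engines" bullet can be sketched by loading rules from data, so that new rules ship as configuration and not as code. This sketch assumes a hypothetical JSON rule schema with a field, an operator from a fixed allowlist, a value, and an effect. Rules are evaluated in order, and anything that matches no rule is denied. Dedicated engines such as Open Policy Agent take this idea much further.

```python
import json
import operator

# Operators a rule may use; anything else is rejected at load time.
OPS = {
    "eq": operator.eq,
    "ne": operator.ne,
    "lt": operator.lt,
    "gt": operator.gt,
    "in": lambda field_value, allowed: field_value in allowed,
}


def load_rules(text: str) -> list[dict]:
    """Parse and validate rules so a bad config fails at load time, not at request time."""
    rules = json.loads(text)
    for rule in rules:
        if rule["op"] not in OPS or rule["effect"] not in ("allow", "deny"):
            raise ValueError(f"invalid rule: {rule}")
    return rules


def evaluate(rules: list[dict], request: dict) -> str:
    """First matching rule wins; requests that match no rule are denied."""
    for rule in rules:
        value = request.get(rule["field"])
        if value is not None and OPS[rule["op"]](value, rule["value"]):
            return rule["effect"]
    return "deny"


# Hypothetical rule set; updating this text changes behavior without a code change.
RULES_JSON = """
[
  {"field": "action", "op": "eq", "value": "delete_record", "effect": "deny"},
  {"field": "amount", "op": "gt", "value": 1000, "effect": "deny"},
  {"field": "action", "op": "in", "value": ["read_report", "create_invoice"], "effect": "allow"}
]
"""
```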

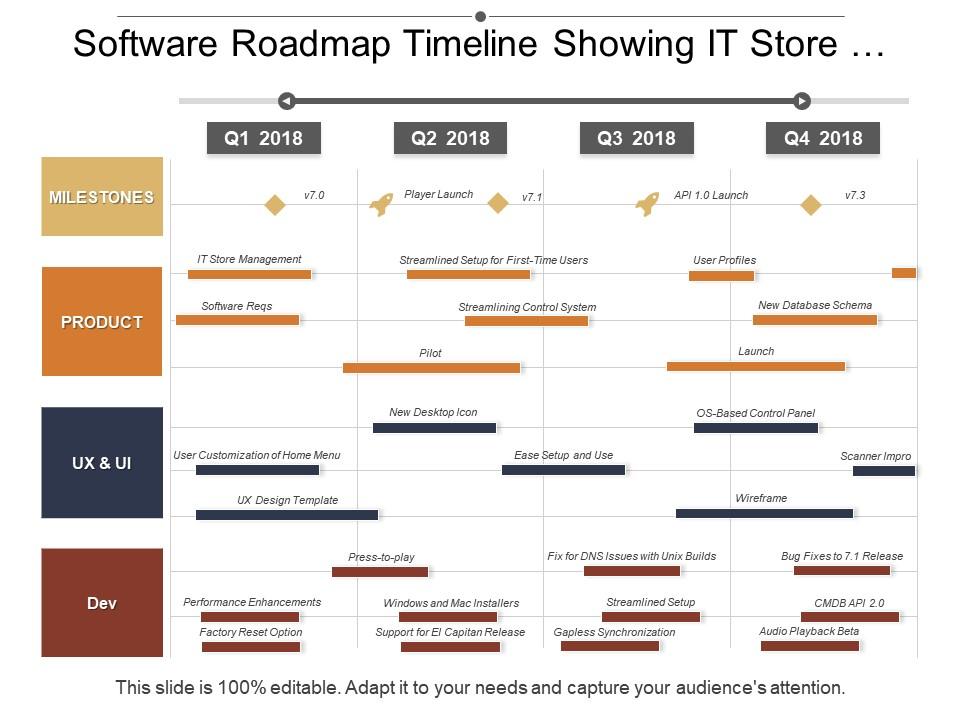

Creating Visual Security Roadmaps

Visual roadmaps help organizations plan security evolution over time:

- Current security capabilities and maturity levels

- Planned enhancements and their implementation timelines

- Dependencies between different security components

- Alignment with broader technology roadmaps

- Key milestones and decision points

By maintaining living documentation of security architecture as standards evolve, organizations can ensure their protection strategies remain effective. This approach requires regular reassessment of threats, capabilities, and security measures to identify gaps and opportunities for improvement.

Practical Implementation Guide

Implementing comprehensive security for AI agents requires a structured approach across all development stages. I've found that following a clear implementation roadmap helps teams maintain security focus throughout the process.

Step-by-Step Security Implementation

```mermaid
flowchart TD
    A[1. Security Requirements] --> B[2. Threat Modeling]
    B --> C[3. Security Architecture Design]
    C --> D[4. Foundation Model Security]
    D --> E[5. Agent Core Security]
    E --> F[6. Tool Integration Security]
    F --> G[7. Operational Security]
    G --> H[8. Multi-Agent Security]
    H --> I[9. Deployment Security]
    I --> J[10. Ecosystem Governance]
    J --> K[11. Testing & Validation]
    K --> L[12. Monitoring & Maintenance]
    L --> M[13. Continuous Improvement]
```

Let's break down each implementation phase:

- Security Requirements: Define security goals, constraints, and compliance needs

- Threat Modeling: Identify potential vulnerabilities and attack vectors

- Security Architecture Design: Create the overall security framework

- Foundation Model Security: Implement base model protections

- Agent Core Security: Secure decision-making components

- Tool Integration Security: Protect external system access

- Operational Security: Secure the runtime environment

- Multi-Agent Security: Implement communication safeguards

- Deployment Security: Secure infrastructure and deployment pipelines

- Ecosystem Governance: Establish policies and oversight

- Testing & Validation: Verify security measures work as intended

- Monitoring & Maintenance: Implement ongoing security operations

- Continuous Improvement: Regularly update security measures

Common Pitfalls and How to Avoid Them

| Common Pitfall | Prevention Strategy |
|---|---|
| Security as an afterthought | Integrate security from the initial design phase |
| Insufficient threat modeling | Conduct comprehensive threat assessments for all components |
| Overreliance on foundation model safety | Implement multiple security layers beyond model safety |
| Neglecting agent-to-agent security | Design explicit communication security protocols |
| Inadequate monitoring | Implement comprehensive logging and real-time monitoring |
| Unclear security responsibilities | Establish explicit ownership for each security component |
| Static security approaches | Design adaptive security systems that evolve with threats |

Tools and Resources

Several tools and frameworks can support AI agent security implementation:

- Security assessment frameworks specific to AI systems

- Runtime policy enforcement engines

- AI safety testing tools

- Visualization platforms for security architecture

- Monitoring and logging solutions for AI systems

- Governance and compliance management tools

By transforming complex security documentation into clear visual guidance, teams can better understand implementation requirements and maintain security focus throughout development. Visual representations help bridge communication gaps between technical and non-technical stakeholders, ensuring everyone understands the security architecture and their role in maintaining it.

Transform Your AI Security Visualization

Creating clear, comprehensive visualizations of complex AI security architectures is essential for effective implementation. PageOn.ai provides the tools you need to transform abstract security concepts into actionable visual frameworks that all stakeholders can understand.

Building truly secure AI agent systems requires a comprehensive approach that addresses the unique challenges of autonomous systems. By implementing multi-layered security architectures, leveraging visual tools for better understanding, and maintaining adaptive security practices, organizations can unlock the tremendous potential of AI agents while ensuring they remain trustworthy and safe.

The future of AI agent security will continue to evolve, but the fundamental principles of layered protection, comprehensive threat modeling, and clear visual communication will remain essential components of effective security strategies.
