Revolutionizing Data Analysis: How AI Agents Transform Decade-Long Datasets into Instant Insights

I've witnessed a remarkable transformation in how we process and analyze large datasets. Analyses that once took weeks or months of batch processing can now often be completed in seconds or minutes with the help of AI agents. In this guide, I'll explore how these systems are changing the way organizations work with decade-spanning datasets.

The Evolution of Real-Time Data Analysis

I've been in the data analysis field for years, and I've seen firsthand how the landscape has transformed. Historically, processing large datasets spanning multiple years was an exercise in patience and resource management. Organizations would dedicate substantial computing power to batch processing tasks that could take days or even weeks to complete.

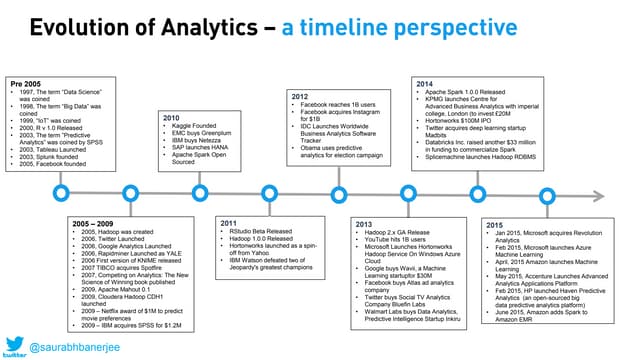

The evolution of data processing capabilities over the past three decades

The computational limitations were significant. Traditional systems simply weren't designed to handle the volume, velocity, and variety of data that modern businesses generate. When dealing with decade-long datasets, analysts often had to make compromises—either analyze a sample of the data or accept that comprehensive analysis would take considerable time.

Today, AI agents are fundamentally changing our expectations of data processing timelines. These intelligent systems can distribute workloads across massive parallel computing resources, identify patterns that would take humans years to discover, and deliver insights in near real-time—even when processing 10 years of complex data.

The Data Processing Evolution

flowchart TD

A[Historical Data] --> B[Traditional Batch Processing]

B -->|Days/Weeks| C[Analysis Results]

A --> D[Modern AI Agents]

D -->|Seconds/Minutes| E[Real-Time Insights]

style D fill:#FF8000,stroke:#333,stroke-width:1px

style E fill:#FF8000,stroke:#333,stroke-width:1px

We're witnessing a paradigm shift from batch processing to continuous, instantaneous analysis of decade-spanning information. This transformation isn't just about speed—it's about fundamentally changing how organizations can leverage their historical data for competitive advantage and strategic decision-making.

Core Technologies Enabling 10-Year Data Processing

The ability to process decade-long datasets in real-time isn't magic—it's the result of several breakthrough technologies working in concert. I've had the opportunity to work with many of these systems, and their capabilities continue to amaze me.

Advanced Neural Network Architectures

Modern AI systems employ specialized neural networks designed specifically for temporal data patterns. These architectures—including Transformer models, Long Short-Term Memory (LSTM) networks, and Temporal Convolutional Networks (TCNs)—excel at identifying patterns across extended time periods.

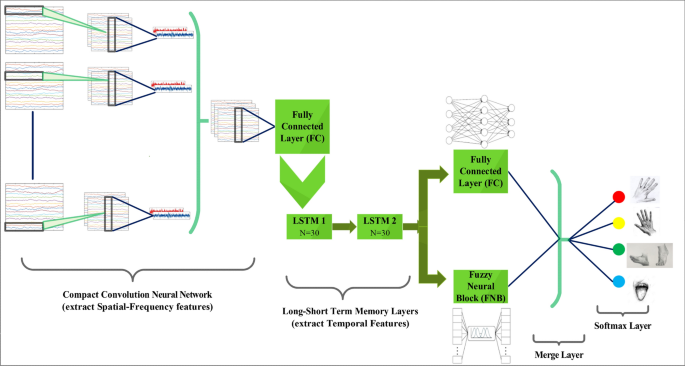

Neural network architecture specialized for processing temporal data patterns
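To make the idea of "patterns across extended time periods" a little more concrete, here is a minimal sketch of the causal dilated convolution at the heart of a TCN, written in plain Python. The function names are my own, and the receptive-field formula assumes one convolution per layer with dilations doubling at each layer (1, 2, 4, ...); real TCN implementations vary.

```python
def receptive_field(kernel_size, num_layers):
    """Number of past time steps one output can see, assuming one
    convolution per layer and dilations 1, 2, 4, ... (an assumption)."""
    dilations = [2 ** i for i in range(num_layers)]
    return 1 + (kernel_size - 1) * sum(dilations)


def causal_dilated_conv(series, weights, dilation):
    """y[t] = sum_j weights[j] * series[t - j * dilation].

    Positions before the start of the series are treated as zero, so an
    output at time t never depends on values after t.
    """
    out = []
    for t in range(len(series)):
        acc = 0.0
        for j, w in enumerate(weights):
            idx = t - j * dilation
            if idx >= 0:
                acc += w * series[idx]
        out.append(acc)
    return out


# Ten years of daily data is roughly 3,650 steps; under the assumptions
# above, 11 layers with kernel size 3 already cover more than that.
steps_covered = receptive_field(kernel_size=3, num_layers=11)

# Toy example: each output adds the current value and the value 2 steps back.
smoothed = causal_dilated_conv([1.0, 2.0, 3.0, 4.0], [1.0, 1.0], dilation=2)
```

The point of the sketch is that the history a model can see grows exponentially with depth, which is why a fairly shallow stack can span a decade of daily observations.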

Distributed Computing Frameworks

When dealing with massive datasets spanning a decade, no single computer can handle the load efficiently. Distributed computing frameworks partition these enormous datasets across hundreds or thousands of machines, processing them in parallel and then aggregating the results.
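The partition, process, and aggregate pattern can be sketched on a single machine. In this illustration, threads stand in for worker machines and the data is a made-up list of (year, value) pairs; a production system would rely on a distributed framework rather than this hand-rolled version.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_by_year(records):
    """Group (year, value) records into one partition per year."""
    partitions = defaultdict(list)
    for year, value in records:
        partitions[year].append(value)
    return partitions


def summarize(values):
    """Partial aggregate for one partition: (count, sum)."""
    return len(values), sum(values)


def parallel_mean(records, max_workers=4):
    """Overall mean, combined from per-partition partial aggregates."""
    partitions = partition_by_year(records)
    # Threads stand in for separate worker machines in this sketch.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(summarize, partitions.values()))
    total_count = sum(count for count, _ in partials)
    total_sum = sum(subtotal for _, subtotal in partials)
    return total_sum / total_count


# Hypothetical toy data: 1,000 measurements spread over ten years.
records = [(2014 + i % 10, float(i)) for i in range(1000)]
overall_mean = parallel_mean(records)
```

Each worker returns only a small (count, sum) pair, so the final aggregation step stays cheap no matter how large the individual partitions are.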

Memory-Efficient Algorithms

One of the biggest challenges with decade-long datasets is memory management. Traditional approaches would require loading enormous amounts of data into memory, creating computational bottlenecks. Modern AI agents use sophisticated streaming algorithms, incremental learning techniques, and feature hashing to process data efficiently without excessive memory requirements.
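Two of these techniques are easy to illustrate. The sketch below shows a streaming mean and variance (Welford's algorithm), which sees each value once and keeps only three numbers in memory, and a simple form of feature hashing, which maps an unbounded set of categorical tokens into a fixed-size vector. The class, function, and token names are illustrative assumptions, not taken from any particular product.

```python
import hashlib


class RunningStats:
    """Streaming mean and sample variance using Welford's algorithm."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0


def hash_features(tokens, n_buckets=16):
    """Count tokens into a fixed number of buckets chosen by a hash."""
    vector = [0] * n_buckets
    for token in tokens:
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") % n_buckets
        vector[bucket] += 1
    return vector


# Values arrive one at a time; nothing is stored except the running state.
stats = RunningStats()
for reading in (2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0):
    stats.update(reading)

# Hypothetical categorical tokens mapped to a 16-slot vector.
vector = hash_features(["region=emea", "sku=1234", "channel=web"])
```

Memory use is constant in both cases: the statistics object never grows, and the hashed vector has the same length whether the dataset contains ten distinct tokens or ten million.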

The integration of business intelligence AI creates meaningful connections across disparate long-term datasets. These systems can identify relationships between seemingly unrelated data points collected years apart, providing context that would be virtually impossible for human analysts to discover manually.

flowchart TD

subgraph "Core Technologies"

A[Advanced Neural Networks]

B[Distributed Computing]

C[Memory-Efficient Algorithms]

D[Business Intelligence AI]

end

A --> E[Pattern Recognition]

B --> F[Parallel Processing]

C --> G[Streaming Analysis]

D --> H[Cross-Dataset Connections]

E & F & G & H --> I[Real-Time Insights]

style A fill:#f9f9f9,stroke:#FF8000,stroke-width:2px

style B fill:#f9f9f9,stroke:#FF8000,stroke-width:2px

style C fill:#f9f9f9,stroke:#FF8000,stroke-width:2px

style D fill:#f9f9f9,stroke:#FF8000,stroke-width:2px

style I fill:#FF8000,stroke:#333,stroke-width:1px

Practical Applications Across Industries

The ability to analyze decade-long datasets in real-time is transforming industries across the board. I've seen firsthand how these capabilities are creating breakthrough insights in several key sectors:

Healthcare

Analyzing patient outcomes and treatment efficacy across 10-year longitudinal studies in seconds instead of months. This enables medical researchers to identify subtle correlations between treatments and long-term outcomes that might otherwise remain hidden.

Finance

Identifying subtle market patterns and cyclical trends that only emerge when examining decade-long data. Financial institutions can now detect complex market cycles and correlations that operate on 7-10 year timeframes, providing unprecedented strategic advantages.

Manufacturing

Predicting equipment failures by correlating maintenance data across 10+ years of operational history. This enables predictive maintenance systems that can identify failure patterns that only become apparent when analyzing extended operational histories.

Climate Science

Processing environmental sensor data to model complex ecological changes with unprecedented speed. Climate scientists can now run complex simulations using decade-spanning datasets in minutes rather than weeks.

In each of these cases, the ability to process and analyze decade-long datasets in real-time isn't just an incremental improvement—it's enabling entirely new approaches and insights that were previously impossible. I'm particularly impressed by how these capabilities are transforming fields like healthcare, where the ability to rapidly analyze longitudinal studies could directly impact patient outcomes and treatment protocols.

From Data Overload to Actionable Intelligence

Having massive processing power is only valuable if it leads to actionable insights. In my experience, transforming complex temporal data into clear, decision-ready intelligence requires both technical prowess and thoughtful presentation.

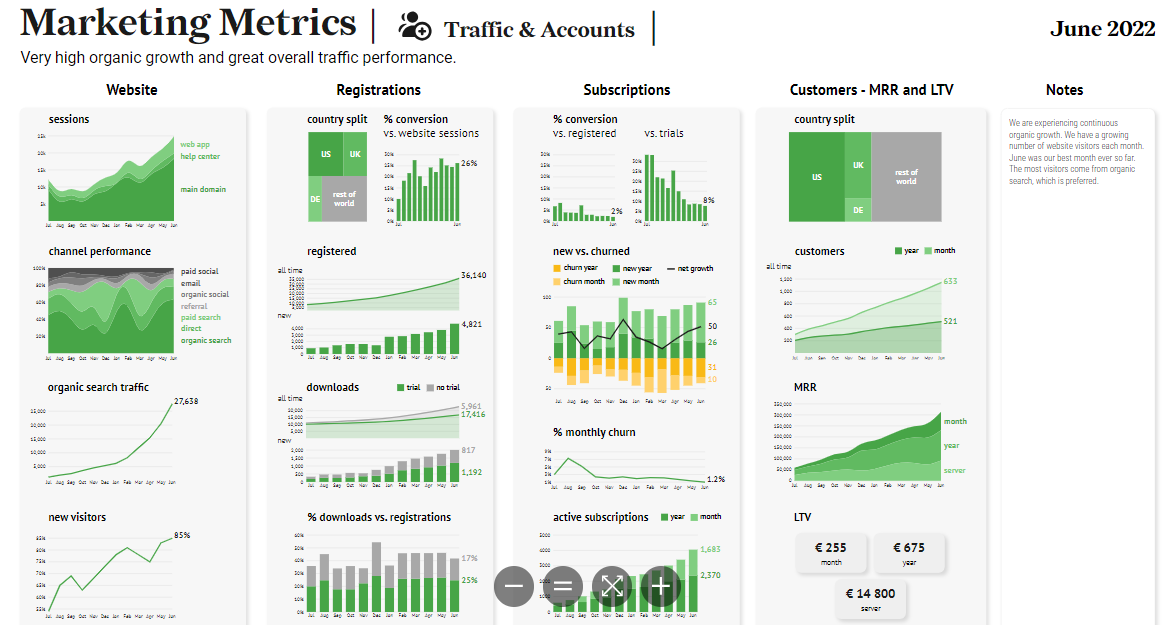

Example dashboard visualizing complex temporal relationships in financial market data

Visualizing Complex Temporal Relationships

When dealing with decade-spanning datasets, traditional visualization approaches often fall short. Modern techniques leverage interactive elements, dimensionality reduction, and hierarchical visualization to make complex time-series patterns comprehensible.

PageOn.ai's AI Blocks feature is particularly effective for transforming abstract time-series patterns into intuitive visual narratives. I've used this tool to create modular, interconnected visualizations that allow stakeholders to explore complex temporal relationships without getting overwhelmed by the underlying data complexity.

flowchart TD

A[Raw 10-Year Dataset] --> B[AI Processing Layer]

B --> C{Pattern Recognition}

C --> D[Time-Series Patterns]

C --> E[Anomaly Detection]

C --> F[Trend Identification]

C --> G[Cyclical Behaviors]

D & E & F & G --> H[PageOn.ai Visualization Layer]

H --> I[Interactive Dashboards]

H --> J[Visual Narratives]

H --> K[Decision Support Tools]

style B fill:#FF8000,stroke:#333,stroke-width:1px

style H fill:#FF8000,stroke:#333,stroke-width:1px

Strategies for Presenting Real-Time Insights

The key challenge when presenting insights from decade-long datasets is maintaining context while highlighting actionable information. I've found several approaches particularly effective:

- Progressive disclosure: Allowing users to start with high-level insights and drill down into specific time periods or data segments

- Comparative visualization: Placing current patterns alongside historical trends to provide immediate context

- Anomaly highlighting: Automatically drawing attention to deviations from long-term patterns

- Predictive overlays: Showing AI-generated forecasts alongside historical data
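As one concrete example, anomaly highlighting can be approximated with a trailing-window z-score. This is only a sketch: the window length, the threshold, and the sample series are all assumptions chosen for illustration, and production systems typically use more robust detectors.

```python
from statistics import mean, stdev


def flag_anomalies(series, window=12, threshold=3.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` points by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical ten years of monthly values with a repeating yearly pattern.
monthly = [100.0 + (i % 12) for i in range(120)]
monthly[90] = 500.0  # inject one obvious outlier

anomalies = flag_anomalies(monthly)
```

A dashboard could then render the flagged indices with a distinct marker, so viewers see deviations from the long-term pattern without scanning all 120 points.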

PageOn.ai's Deep Search functionality is particularly valuable when working with extensive historical datasets. This feature automatically integrates relevant historical context into current analysis, ensuring that insights are presented with appropriate background information and precedents from the full 10-year dataset.

Implementation Challenges and Solutions

While the benefits of real-time analysis of decade-long datasets are compelling, implementation isn't without challenges. In my work helping organizations deploy these systems, I've encountered several common obstacles—and developed strategies to overcome them.

Data Quality Issues in Long-Term Datasets

Decade-spanning datasets invariably contain inconsistencies, missing values, and changing data formats. These issues can significantly impact analysis quality if not properly addressed.

AI-Driven Solutions for Data Quality

- Automated anomaly detection to identify and flag suspicious data points

- Intelligent imputation methods that consider temporal context when filling missing values

- Format normalization tools that can reconcile changing data structures over time

- Confidence scoring that indicates the reliability of insights based on underlying data quality
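Two of these ideas can be sketched in a few lines. The first function below fills gaps by linear interpolation between the nearest observed neighbors in time; the second reports the fraction of observed values as a crude confidence score. Both are simplified stand-ins with names of my own choosing, not a description of how any specific tool works.

```python
def interpolate_missing(values):
    """Fill None gaps by linear interpolation between the nearest observed
    neighbors; gaps at either edge take the nearest observed value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = values[left] + frac * (values[right] - values[left])
    return filled


def completeness_score(values):
    """Fraction of observed (non-missing) points, as a rough confidence proxy."""
    return sum(v is not None for v in values) / len(values)


# Hypothetical yearly readings with gaps.
raw = [10.0, None, 20.0, None, None]
filled = interpolate_missing(raw)
score = completeness_score(raw)
```

Reporting the score alongside any insight derived from `filled` lets readers see how much of the underlying series was actually measured and how much was estimated.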

Computing Infrastructure Requirements

Real-time analysis of massive historical datasets demands substantial computing resources. Organizations must carefully plan their infrastructure to support these capabilities without excessive costs.

Successful AI implementation requires balancing technical capabilities with organizational needs. I've found that a phased approach often works best, starting with high-value use cases and expanding as the organization builds familiarity and confidence with the technology.

Training Considerations for Decade-Spanning Models

AI models working with extensive historical datasets require specialized training approaches to capture long-term dependencies without overfitting to specific time periods.

Effective Training Strategies

- Time-based cross-validation to ensure models perform consistently across different eras

- Hierarchical modeling that captures patterns at different time scales (days, months, years)

- Transfer learning to leverage pre-trained models for specific domains

- Continuous retraining pipelines that incorporate new data as it becomes available
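Time-based cross-validation is the easiest of these to show in code. The sketch below generates expanding-window splits in which the training data always precedes the test data, so a model is never evaluated on a period it has already seen. The function name and parameters are illustrative; libraries such as scikit-learn offer a comparable splitter (`TimeSeriesSplit`).

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_indices, test_indices) pairs where training data always
    comes before the test block and grows with each split."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start <= 0:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train = range(0, test_start)
        test = range(test_start, test_start + test_size)
        yield train, test


# Hypothetical setup: ten yearly blocks, evaluating on each of the last three.
splits = [
    (list(train), list(test))
    for train, test in expanding_window_splits(n_samples=10, n_splits=3, test_size=1)
]
```

With ten yearly blocks, the model is trained on years 0 to 6 and tested on year 7, then trained on years 0 to 7 and tested on year 8, and so on, which shows whether performance holds up across different eras.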

The Human-AI Partnership in Long-Term Data Analysis

As AI agents become increasingly capable of processing decade-long datasets in real-time, the role of human analysts is evolving rather than disappearing. In my experience, the most successful implementations create effective partnerships between human expertise and AI capabilities.

Visualization of the complementary roles of humans and AI in long-term data analysis

Evolving Role of Data Scientists

When AI agents can process 10 years of data in seconds, data scientists spend less of their time on data preparation and basic analysis and more on higher-value activities:

- Designing novel analytical approaches tailored to specific business questions

- Interpreting complex patterns identified by AI systems

- Developing hypotheses based on AI-identified correlations

- Creating custom visualizations for unique data relationships

- Building domain-specific AI models that incorporate specialized knowledge

Democratizing Access to Historical Insights

One of the most powerful aspects of modern AI systems is their ability to make complex historical data accessible to non-technical stakeholders. PageOn.ai's Vibe Creation feature exemplifies this approach, enabling users without data science backgrounds to interact with and derive insights from decade-spanning datasets.

Democratizing access to decade-long data insights across organizational hierarchies changes how decisions are made. When everyone from frontline employees to senior executives can easily explore historical patterns relevant to their work, organizations become more responsive and data-driven.

flowchart TD

A[10 Years of Raw Data] --> B[AI Processing Layer]

B --> C[Data Scientists]

B --> D[Business Analysts]

B --> E[Executive Decision Makers]

B --> F[Operational Teams]

C --> G[Advanced Model Development]

D --> H[Business Process Optimization]

E --> I[Strategic Decision Making]

F --> J[Day-to-Day Operations]

G & H & I & J --> K[Organizational Value]

style B fill:#FF8000,stroke:#333,stroke-width:1px

style K fill:#FF8000,stroke:#333,stroke-width:1px

Balancing Algorithmic Insights with Human Expertise

When interpreting long-term trends, I've found that the most valuable insights come from combining algorithmic pattern detection with human domain expertise. AI excels at identifying correlations and patterns in massive datasets, but humans provide crucial context about:

- External events that might explain data anomalies

- Industry-specific knowledge that affects interpretation

- Organizational history that provides context for trends

- Ethical and social considerations that pure data analysis might miss

Future Horizons: Beyond 10-Year Analysis

As impressive as today's capabilities are, I'm even more excited about what's on the horizon. The field is advancing rapidly, with several emerging trends that will further transform how we analyze long-term historical data.

Processing Even Longer Time Horizons

Current technologies are pushing beyond the 10-year mark, with emerging capabilities for processing 20+ years of data in real-time. These extended time horizons will enable organizations to identify ultra-long-term patterns and cycles that remain invisible even in decade-long analyses.

Integration of Multimodal Data Sources

Future systems will seamlessly integrate diverse data types spanning decades—text, images, video, sensor data, and more. This multimodal analysis will provide unprecedented context and insight, allowing organizations to correlate patterns across different types of information collected over extended periods.

Emerging Multimodal Applications

- Healthcare: Correlating patient imaging data with clinical notes and sensor readings across decades

- Urban planning: Analyzing satellite imagery, traffic patterns, and demographic data over 20+ years

- Customer behavior: Integrating purchase history, support interactions, and social media engagement across generations

- Scientific research: Combining experimental results, published literature, and raw data across research institutions

PageOn.ai's Agentic Capabilities

PageOn.ai's emerging Agentic capabilities represent the next frontier in historical data analysis. These autonomous AI systems will proactively explore massive historical datasets, identify potentially valuable patterns without explicit direction, and generate insights that humans might never think to look for.

I'm particularly excited about how these capabilities will transform the way organizations derive insights from historical data. Rather than requiring analysts to formulate specific questions, agentic systems will continuously monitor and analyze decades of information, surfacing insights as they emerge and evolve.

Ethical Considerations

As we develop increasingly powerful tools for analyzing decades of data, ethical considerations become even more important. Organizations must carefully consider:

- Privacy implications of retaining and analyzing personal data over extended periods

- Potential for historical biases to be amplified in long-term analyses

- Transparency requirements when making decisions based on patterns spanning decades

- Data governance policies that account for changing regulations and standards over time

The future of long-term data analysis will require thoughtful balancing of technological capabilities with ethical responsibilities. Organizations that navigate this balance successfully will gain tremendous value while maintaining trust and compliance.

Embracing the Future of Real-Time Historical Analysis

As we've explored throughout this guide, AI agents have fundamentally transformed our ability to analyze decade-long datasets in real-time. This capability isn't just an incremental improvement—it's enabling entirely new approaches to decision-making across industries.

I've seen firsthand how organizations that embrace these technologies gain significant competitive advantages. They can identify patterns that competitors miss, respond more quickly to emerging trends, and make decisions with the context that only long-term historical analysis can provide.

The future will bring even more powerful capabilities, with longer time horizons, multimodal data integration, and increasingly autonomous AI systems. Organizations that prepare now by building the right infrastructure, partnerships, and expertise will be positioned to capitalize on these advances.

Whether you're just beginning to explore AI-powered data analysis or looking to enhance your existing capabilities, tools like PageOn.ai provide accessible entry points for transforming how your organization leverages its historical data. The ability to instantly analyze a decade of information isn't just a technical achievement—it's a strategic advantage that can transform decision-making at every level of your organization.
