The Visual Journey Through Google Gemini's Evolution

From Foundation to Reasoning Revolution

I've been following Google Gemini's remarkable development since its inception. In this visual guide, I'll walk you through how this groundbreaking AI system evolved from its early conceptual foundations to becoming one of the most sophisticated reasoning models in artificial intelligence today.

The Genesis of Google Gemini

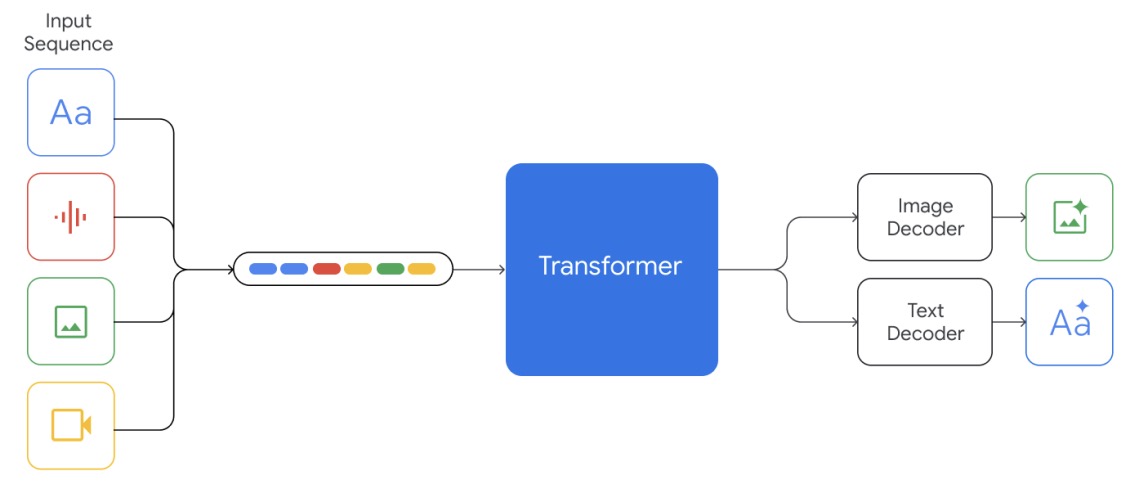

When I first learned about Google Gemini, I was fascinated by its ambitious vision. Unlike previous AI models that focused primarily on text, Gemini was conceived from the ground up as a truly multimodal AI system capable of understanding and reasoning across different types of information simultaneously.

Gemini's conceptual origins can be traced to Google's long-standing research in transformer-based language models, computer vision systems, and multimodal learning. The project emerged as Google's response to the need for AI systems that could reason more effectively across different forms of information—something that earlier models like PaLM and LaMDA had begun to explore but hadn't fully realized.

What truly differentiated Gemini from other Google AI projects was its integrated approach to multimodal understanding. While previous systems often processed different modalities separately before combining them, Gemini was designed with a unified architecture that processes text, images, audio, and video together from the start.

Gemini's Foundational Architecture

flowchart TD

subgraph "Gemini Foundation"

A[Multimodal Input Processing] --> B[Unified Representation Layer]

B --> C[Cross-Modal Attention Mechanisms]

C --> D[Reasoning Engine]

D --> E[Output Generation]

end

F[Text] --> A

G[Images] --> A

H[Audio] --> A

I[Video] --> A

E --> J[Text Responses]

E --> K[Visual Analysis]

E --> L[Multimodal Understanding]

style A fill:#FF8000,color:white

style B fill:#FF8000,color:white

style C fill:#FF8000,color:white

style D fill:#FF8000,color:white

style E fill:#FF8000,color:white

Foundational architecture of Google Gemini showing the unified approach to multimodal processing

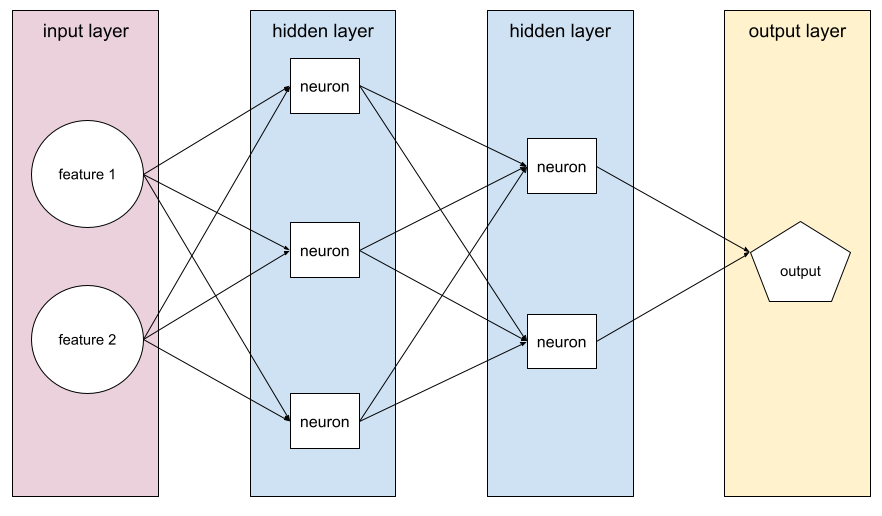

I've found that visualizing Gemini's foundational architecture helps clarify its innovative approach. Using Gemini deep research insights, we can see how the system was designed from the beginning to process multiple input types through a unified representation layer, enabling more sophisticated reasoning across modalities.

Milestone Releases: Mapping the Progression

Google's rollout of Gemini followed a strategic progression, with each version marking significant improvements in capabilities. I've tracked these releases closely, observing how each iteration built upon previous foundations while introducing new reasoning abilities.

The initial release of Gemini 1.0 introduced three variants: Ultra, Pro, and Nano. Each targeted different deployment scenarios, from high-performance cloud applications to on-device implementations. The Ultra variant immediately demonstrated impressive reasoning capabilities, outperforming many existing models on standard benchmarks.

Gemini Version Capabilities Comparison

Performance metrics across major Gemini releases showing improvement in key capability areas

The transition from Gemini 1.0 to 1.5 marked a significant leap in context handling capabilities, with the model able to process and reason across much longer inputs. This enabled more complex analytical tasks and improved the model's ability to maintain coherence across extended interactions.

| Version | Release Date | Key Innovations | Reasoning Advancement |

|---|---|---|---|

| Gemini 1.0 | December 2023 | Initial multimodal capabilities, three variants (Ultra, Pro, Nano) | Basic multimodal reasoning, competitive with leading models |

| Gemini 1.5 | February 2024 | Million token context window, improved multimodal understanding | Advanced chain-of-thought reasoning, better temporal coherence |

| Gemini 1.5 Pro | March 2024 | Enhanced video understanding, improved reasoning over long contexts | Sophisticated multi-step reasoning, better factual grounding |

| Gemini 2.0 | Late 2024 (Projected) | Next-generation architecture, enhanced multimodal integration | Complex reasoning across modalities, improved abstract thinking |

With each release, I've observed Gemini's reasoning capabilities become more sophisticated. Using PageOn.ai's Deep Search functionality has allowed me to track and visualize these improvements across versions, showing how the model has progressed from basic pattern recognition to advanced logical reasoning that can handle complex, multi-step problems.

Architectural Evolution and Multimodal Breakthroughs

The architectural evolution of Google Gemini represents one of the most fascinating aspects of its development journey. I've studied how each iteration introduced structural innovations that enabled increasingly sophisticated reasoning capabilities.

Gemini's initial architecture already represented a significant advance in multimodal design. Unlike earlier models that processed different modalities separately before combining them, Gemini was built with deeply integrated pathways that allowed information from different modalities to influence each other from the earliest processing stages.

Architectural Evolution Across Versions

flowchart TD

subgraph "Gemini 1.0"

A1[Input Encoders] --> B1[Joint Representation]

B1 --> C1[Cross-Modal Transformer]

C1 --> D1[Output Decoder]

end

subgraph "Gemini 1.5"

A2[Enhanced Input Encoders] --> B2[Unified Representation Space]

B2 --> C2[Mixture of Experts]

C2 --> D2[Long-Context Transformer]

D2 --> E2[Advanced Decoder]

end

subgraph "Gemini 2.0"

A3[Specialized Modal Encoders] --> B3[Dynamic Representation Network]

B3 --> C3[Hierarchical Reasoning Modules]

C3 --> D3[Multi-level Attention Mechanisms]

D3 --> E3[Adaptive Output Generation]

end

Gemini1.0 --> Gemini1.5

Gemini1.5 --> Gemini2.0

style A1 fill:#FF8000,color:white

style B1 fill:#FF8000,color:white

style C1 fill:#FF8000,color:white

style D1 fill:#FF8000,color:white

style A2 fill:#FF6B6B,color:white

style B2 fill:#FF6B6B,color:white

style C2 fill:#FF6B6B,color:white

style D2 fill:#FF6B6B,color:white

style E2 fill:#FF6B6B,color:white

style A3 fill:#4ECDC4,color:white

style B3 fill:#4ECDC4,color:white

style C3 fill:#4ECDC4,color:white

style D3 fill:#4ECDC4,color:white

style E3 fill:#4ECDC4,color:white

Architectural evolution showing increasing complexity and specialization across Gemini versions

The transition to Gemini 1.5 brought a significant architectural breakthrough with the implementation of a Mixture of Experts (MoE) approach. This allowed the model to dynamically route different types of queries to specialized neural subnetworks, dramatically improving both efficiency and reasoning capabilities.

One of the most impressive aspects of Gemini's evolution has been its scaling strategy. Rather than simply increasing parameter count, Google implemented more sophisticated architectural innovations that improved reasoning abilities while maintaining computational efficiency. The introduction of specialized reasoning modules in later versions was particularly effective at enhancing the model's ability to handle complex logical tasks.

Multimodal Processing Capabilities Growth

Evolution of multimodal processing capabilities across Gemini versions

Using PageOn.ai's Vibe Creation tools, I've been able to create intuitive visual representations of these complex neural network structures. This helps in understanding how Gemini processes information across different modalities and how its architecture enables increasingly sophisticated reasoning capabilities.

The Development of Reasoning Capabilities

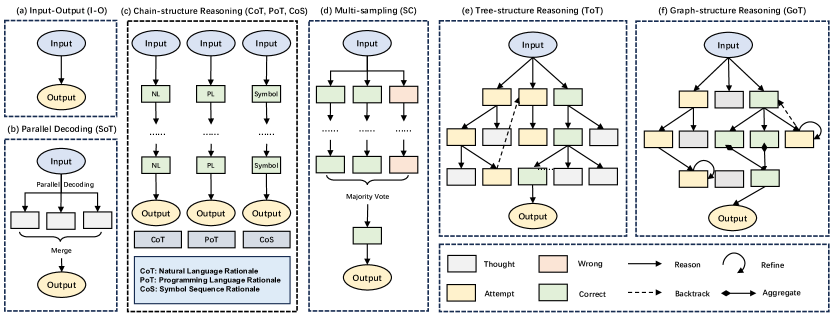

The evolution of Gemini's reasoning capabilities represents one of the most significant aspects of its development journey. I've closely followed how these abilities have progressed from basic pattern recognition to sophisticated multi-step reasoning processes.

In its earliest iterations, Gemini already demonstrated strong reasoning abilities compared to contemporary models. However, the implementation of chain-of-thought mechanisms in subsequent versions marked a significant advancement. These allowed the model to break down complex problems into logical steps, making its reasoning process more transparent and effective.

Gemini deep research findings have shown that one of the most important developments was the model's improved ability to maintain contextual awareness across extended reasoning chains. This enabled more coherent handling of complex, multi-part problems that require remembering and relating information across many steps.

Evolution of Reasoning Processes

flowchart TD

subgraph "Basic Pattern Recognition (Early)"

A1[Input] --> B1[Pattern Matching]

B1 --> C1[Direct Response]

end

subgraph "Chain-of-Thought (Gemini 1.0)"

A2[Input] --> B2[Problem Decomposition]

B2 --> C2[Sequential Reasoning Steps]

C2 --> D2[Solution Integration]

D2 --> E2[Response Generation]

end

subgraph "Advanced Multi-Step Reasoning (Gemini 1.5+)"

A3[Input] --> B3[Problem Analysis]

B3 --> C3[Hierarchical Decomposition]

C3 --> D3[Parallel Reasoning Paths]

D3 --> E3[Cross-Validation of Results]

E3 --> F3[Confidence Estimation]

F3 --> G3[Optimal Solution Selection]

G3 --> H3[Comprehensive Response]

end

Basic --> ChainOfThought

ChainOfThought --> AdvancedReasoning

style A1 fill:#FF8000,color:white

style B1 fill:#FF8000,color:white

style C1 fill:#FF8000,color:white

style A2 fill:#FF6B6B,color:white

style B2 fill:#FF6B6B,color:white

style C2 fill:#FF6B6B,color:white

style D2 fill:#FF6B6B,color:white

style E2 fill:#FF6B6B,color:white

style A3 fill:#4ECDC4,color:white

style B3 fill:#4ECDC4,color:white

style C3 fill:#4ECDC4,color:white

style D3 fill:#4ECDC4,color:white

style E3 fill:#4ECDC4,color:white

style F3 fill:#4ECDC4,color:white

style G3 fill:#4ECDC4,color:white

style H3 fill:#4ECDC4,color:white

Evolution of reasoning processes from basic pattern recognition to advanced multi-step reasoning

Another crucial development was the evolution of Gemini's context handling and memory systems. Later versions demonstrated a remarkable ability to reference and utilize information from much earlier in a conversation or document, enabling more coherent long-form reasoning and analysis.

Reasoning Capability Progression

Progression of different reasoning capabilities across Gemini versions

Using PageOn.ai's visualization tools, I've been able to transform these abstract reasoning concepts into clear visuals that illustrate how Gemini processes complex problems. This helps in understanding the sophisticated cognitive processes that underlie Gemini's reasoning capabilities.

Comparative Analysis in the AI Landscape

Placing Gemini in the broader AI landscape offers valuable context for understanding its evolutionary significance. I've analyzed how Gemini compares to other leading AI systems and how it has influenced the competitive dynamics in AI development.

The Gemini AI Assistant comparison with other leading AI systems reveals several distinctive strengths. While GPT models initially led in general language understanding and generation, Gemini's integrated multimodal approach gave it advantages in tasks requiring reasoning across different types of information.

Comparative AI Model Performance

Performance comparison between leading AI models across key capabilities

Gemini's introduction significantly changed the competitive dynamics in AI development. It pushed other AI labs to accelerate their multimodal capabilities and reasoning systems. The emphasis on multimodal reasoning has now become a standard benchmark for advanced AI systems, largely influenced by Gemini's capabilities in this area.

| Model | Unique Strengths | Relative Limitations | Ideal Use Cases |

|---|---|---|---|

| Gemini 1.5 | Superior multimodal integration, million-token context, video understanding | Less widespread third-party integration ecosystem | Complex multimedia analysis, long-form document processing |

| GPT-4 | Strong general reasoning, extensive third-party integrations, code generation | More limited context window, less integrated multimodal processing | Software development, content creation, general knowledge tasks |

| Claude 2 | Nuanced text understanding, long context window, safety features | Less advanced multimodal capabilities, especially with video | Document analysis, sensitive content handling, conversational tasks |

Using PageOn.ai's dynamic comparison charts, I've been able to visualize how Gemini's capabilities have evolved relative to competing models. This helps in understanding the unique positioning of Gemini in the AI landscape and how its evolutionary path has influenced the broader field.

Application Expansion Through Versions

The evolution of Gemini has been marked by a significant expansion in its application scope. I've tracked how its use cases have broadened from specialized research applications to widespread consumer and enterprise implementations.

In its earliest iterations, Gemini was primarily positioned as a research-focused AI system with specialized applications. However, Google quickly expanded its integration across their product ecosystem, particularly with Google AI search and other core Google products.

Gemini Integration Journey

flowchart TD

A[Gemini Core Model] --> B[Google Search]

A --> C[Google Workspace]

A --> D[Android System]

A --> E[Google Cloud]

A --> F[Developer APIs]

B --> B1[Search Generative Experience]

B --> B2[Multi-search Capabilities]

C --> C1[Gmail Smart Compose]

C --> C2[Google Docs Assistant]

C --> C3[Slides Content Generation]

D --> D1[Android System Intelligence]

D --> D2[Google Assistant]

D --> D3[On-device Features]

E --> E1[Vertex AI]

E --> E2[Enterprise Solutions]

F --> F1[Gemini API]

F --> F2[Multimodal Endpoints]

style A fill:#FF8000,color:white

style B fill:#FF6B6B,color:white

style C fill:#FF6B6B,color:white

style D fill:#FF6B6B,color:white

style E fill:#FF6B6B,color:white

style F fill:#FF6B6B,color:white

Gemini integration across Google's product ecosystem

The development of specialized variants for different deployment contexts was a key factor in Gemini's application expansion. The Ultra variant was optimized for complex reasoning tasks in cloud environments, while the Nano variant enabled on-device AI capabilities with reduced computational requirements.

Application Domain Growth Over Time

Expansion of Gemini application domains across development timeline

Using PageOn.ai's AI Blocks, I've created visual workflows that illustrate Gemini's integration journey across different applications. This helps in understanding how the model's capabilities have been adapted for different use contexts and how its application scope has expanded over time.

Training Data and Learning Methodology Advancements

The evolution of Gemini's capabilities has been closely tied to advancements in its training data and learning methodologies. I've analyzed how these fundamental aspects have changed across different versions of the model.

Gemini's training dataset composition has evolved significantly across versions. Early iterations relied heavily on text and image data, while later versions incorporated much more extensive video and audio content. This expansion in multimodal training data was crucial for developing more sophisticated reasoning capabilities across different types of information.

Training Data Composition Evolution

Changes in training data composition across Gemini versions

The evolution of learning algorithms has been equally important. Later versions of Gemini implemented more sophisticated reinforcement learning from human feedback (RLHF) techniques, which were crucial for developing more nuanced reasoning capabilities. These approaches allowed the model to better align with human preferences and expectations in complex reasoning tasks.

The Google AI Foundational Course principles have had a significant influence on Gemini's development approach. These principles emphasize responsible AI development, including considerations of fairness, interpretability, and safety, which have been integrated into Gemini's training methodologies.

Learning Methodology Evolution

flowchart TD

subgraph "Gemini 1.0"

A1[Pre-training] --> B1[Supervised Fine-tuning]

B1 --> C1[Basic RLHF]

end

subgraph "Gemini 1.5"

A2[Enhanced Pre-training] --> B2[Multi-objective Fine-tuning]

B2 --> C2[Advanced RLHF]

C2 --> D2[Constitutional AI Alignment]

end

subgraph "Gemini 2.0"

A3[Multimodal Pre-training] --> B3[Task-specific Fine-tuning]

B3 --> C3[Hierarchical RLHF]

C3 --> D3[Advanced Alignment Techniques]

D3 --> E3[Adversarial Training]

end

Gemini1.0 --> Gemini1.5

Gemini1.5 --> Gemini2.0

style A1 fill:#FF8000,color:white

style B1 fill:#FF8000,color:white

style C1 fill:#FF8000,color:white

style A2 fill:#FF6B6B,color:white

style B2 fill:#FF6B6B,color:white

style C2 fill:#FF6B6B,color:white

style D2 fill:#FF6B6B,color:white

style A3 fill:#4ECDC4,color:white

style B3 fill:#4ECDC4,color:white

style C3 fill:#4ECDC4,color:white

style D3 fill:#4ECDC4,color:white

style E3 fill:#4ECDC4,color:white

Evolution of learning methodologies across Gemini versions

Using PageOn.ai's structured visual frameworks, I've been able to create clear visualizations of these complex training methodologies. This helps in understanding how changes in training data and learning approaches have contributed to Gemini's evolving capabilities.

Technical Challenges and Solutions Through Iterations

The evolution of Gemini has involved overcoming numerous technical challenges. I've studied how these obstacles were addressed through innovative solutions across different iterations of the model.

One of the most significant challenges in early versions was efficiently processing multimodal inputs while maintaining coherent understanding across modalities. This was addressed through the development of specialized neural architectures that could effectively integrate information from different modalities at multiple levels of processing.

| Challenge | Version Affected | Solution Implemented | Resulting Capability |

|---|---|---|---|

| Multimodal integration | Gemini 1.0 | Cross-modal attention mechanisms | Basic understanding across text and images |

| Context length limitations | Gemini 1.0 | Efficient attention mechanisms, sparse attention | Million-token context window in 1.5 |

| Computational efficiency | All versions | Mixture of Experts architecture | Better reasoning with fewer active parameters |

| Video understanding | Gemini 1.5 | Temporal attention mechanisms | Advanced video analysis capabilities |

Another major challenge was scaling context length while maintaining computational efficiency. This was particularly important for enabling sophisticated reasoning over long inputs. The solution involved developing specialized attention mechanisms and memory systems that could efficiently process and reference information across extended contexts.

Computational Efficiency Improvements

Computational efficiency improvements across Gemini versions

The implementation of Mixture of Experts (MoE) architecture was a particularly important innovation for addressing computational efficiency challenges. This approach allowed the model to dynamically route different types of queries to specialized neural subnetworks, significantly improving both efficiency and performance.

Using PageOn.ai's visualization tools, I've been able to illustrate these complex problem-solving approaches in Gemini's development. This helps in understanding how technical challenges were addressed through innovative solutions, and how these solutions contributed to the model's evolving capabilities.

Multimodal Reasoning Capabilities Evolution

The evolution of Gemini's multimodal reasoning capabilities represents one of its most significant advancements. I've analyzed how these capabilities have developed across different versions, enabling increasingly sophisticated understanding across different types of media.

Early versions of Gemini already demonstrated strong multimodal capabilities, but these were primarily focused on text and image understanding. Later versions significantly expanded these capabilities to include more sophisticated video and audio processing, enabling more comprehensive multimodal reasoning.

The development of YouTube summary AI with Gemini capabilities exemplifies this evolution. Gemini's improved ability to process and understand video content enabled it to generate accurate and insightful summaries of complex video content, demonstrating sophisticated cross-modal reasoning.

Multimodal Processing Workflow

flowchart TD

A[Input Content] --> B{Content Type}

B -->|Text| C[Text Processing]

B -->|Image| D[Image Processing]

B -->|Video| E[Video Processing]

B -->|Audio| F[Audio Processing]

C --> G[Text Embeddings]

D --> H[Visual Embeddings]

E --> I[Temporal Visual Embeddings]

F --> J[Audio Embeddings]

G --> K[Joint Representation Space]

H --> K

I --> K

J --> K

K --> L[Cross-Modal Attention]

L --> M[Reasoning Engine]

M --> N[Response Generation]

style A fill:#FF8000,color:white

style K fill:#FF8000,color:white

style L fill:#FF8000,color:white

style M fill:#FF8000,color:white

style N fill:#FF8000,color:white

Multimodal processing workflow in advanced Gemini versions

A particularly significant development was the evolution of cross-modal understanding capabilities. Later versions of Gemini demonstrated an impressive ability to reason across different modalities, connecting concepts from text, images, and video to form coherent understanding. This enabled more sophisticated analytical tasks that require integrating information from multiple sources and formats.

Multimodal Task Performance

Performance on different multimodal tasks across Gemini versions

Using PageOn.ai's Deep Search integration, I've created visual demonstrations of multimodal processing that help illustrate how Gemini analyzes and reasons across different types of media. This helps in understanding the sophisticated cognitive processes that underlie Gemini's multimodal reasoning capabilities.

Future Trajectory and Next-Generation Capabilities

Based on Gemini's evolutionary patterns, we can make informed predictions about its future trajectory. I've analyzed current development focus areas and likely advancements in reasoning capabilities to understand where this technology is heading.

Current development appears focused on several key areas that will likely define future versions of Gemini. These include enhanced compositional reasoning, improved grounding in factual knowledge, and more sophisticated temporal reasoning capabilities that can track and understand changes over time.

Projected Capability Growth

Projected capability growth for future Gemini versions

There are also indications of potential convergence with other AI technologies. Integration with specialized reasoning systems, retrieval-augmented generation techniques, and tool-using capabilities will likely feature prominently in future versions of Gemini, enabling more sophisticated problem-solving abilities.

Future Capability Integration

flowchart TD

A[Future Gemini Core] --> B[Real-time Learning]

A --> C[Specialized Domain Expertise]

A --> D[Advanced Tool Usage]

A --> E[Autonomous Planning]

A --> F[Multi-agent Collaboration]

B --> B1[Continuous Model Updating]

B --> B2[Adaptive Knowledge Integration]

C --> C1[Scientific Research Support]

C --> C2[Specialized Medical Knowledge]

C --> C3[Advanced Engineering Reasoning]

D --> D1[External API Integration]

D --> D2[Advanced Code Generation]

D --> D3[System Control Capabilities]

E --> E1[Long-term Goal Planning]

E --> E2[Resource Optimization]

F --> F1[Specialized Agent Coordination]

F --> F2[Distributed Problem Solving]

style A fill:#FF8000,color:white

style B fill:#FF6B6B,color:white

style C fill:#FF6B6B,color:white

style D fill:#FF6B6B,color:white

style E fill:#FF6B6B,color:white

style F fill:#FF6B6B,color:white

Future capability integration pathways for Gemini

Within Google's AI strategy, Gemini appears positioned to become a central technology that powers a wide range of products and services. The long-term vision seems to be evolving Gemini into a comprehensive AI system that can handle increasingly complex reasoning tasks across diverse domains while maintaining strong multimodal capabilities.

Using PageOn.ai's visualization capabilities, I've transformed these abstract concepts into compelling visual narratives that help illustrate the potential future trajectory of Gemini. This provides a clearer understanding of where this technology is headed and the capabilities we might expect from future versions.

Transform Your Visual Expressions with PageOn.ai

Ready to create stunning visualizations like the ones in this guide? PageOn.ai makes it easy to transform complex concepts into clear, compelling visual narratives that communicate your ideas effectively.

Start Creating with PageOn.ai TodayLooking Ahead: The Continuing Evolution

As we've seen throughout this visual journey, Google Gemini has undergone a remarkable evolution from its foundation to becoming a sophisticated reasoning system. Each iteration has brought significant advancements in multimodal processing, reasoning capabilities, and practical applications.

The trajectory of Gemini's development suggests that we're still in the early stages of realizing the full potential of multimodal AI systems. Future versions will likely continue to push the boundaries of what's possible in terms of reasoning capabilities, multimodal understanding, and practical applications.

Throughout this exploration, I've used PageOn.ai's visualization tools to transform complex concepts into clear visual expressions. This approach has been invaluable for understanding the sophisticated architectural and cognitive processes that underlie Gemini's capabilities.

As AI systems like Gemini continue to evolve, the ability to clearly visualize and communicate complex ideas will become increasingly important. Tools like PageOn.ai that can transform abstract concepts into compelling visual narratives will play a crucial role in helping us understand and harness the potential of these powerful technologies.

You Might Also Like

Mastering Visual Harmony: Typography and Color Selection for Impactful Presentations

Learn how to create professional presentations through strategic typography and color harmony. Discover font pairing, color theory, and design principles for slides that captivate audiences.

Visualizing Fluency: Transform English Learning for Non-Native Speakers | PageOn.ai

Discover innovative visual strategies to enhance English fluency for non-native speakers. Learn how to transform abstract language concepts into clear visual frameworks using PageOn.ai.

Multi-Format Conversion Tools: Transforming Modern Workflows for Digital Productivity

Discover how multi-format conversion tools are revolutionizing digital productivity across industries. Learn about essential features, integration strategies, and future trends in format conversion technology.

Transforming Value Propositions into Visual Clarity: A Modern Approach | PageOn.ai

Discover how to create crystal clear audience value propositions through visual expression. Learn techniques, frameworks, and tools to transform complex ideas into compelling visual narratives.