The Ultimate Visual Guide to AI Data Privacy & Security

From Foundational Principles to Practical Implementation

I've created this comprehensive guide to help you navigate the complex intersection of artificial intelligence, data privacy, and security. Through visual frameworks and practical strategies, we'll explore how to protect sensitive data while maximizing AI's potential.

The Evolving Landscape of AI Data Privacy & Security

I've observed that AI advancements present us with a fascinating paradox: while we're witnessing unprecedented capabilities in data processing and analysis, we're simultaneously facing heightened privacy concerns. This double-edged nature of AI technology requires us to be increasingly vigilant about how we handle sensitive information.

The dual nature of AI advancement: capabilities versus concerns

Current Regulatory Frameworks

Today's AI applications must navigate a complex web of regulations. The General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA) in the US, and domain-specific rules such as HIPAA for US healthcare data all impose strict requirements on how personal data, including data used by AI systems, may be collected, processed, and stored.

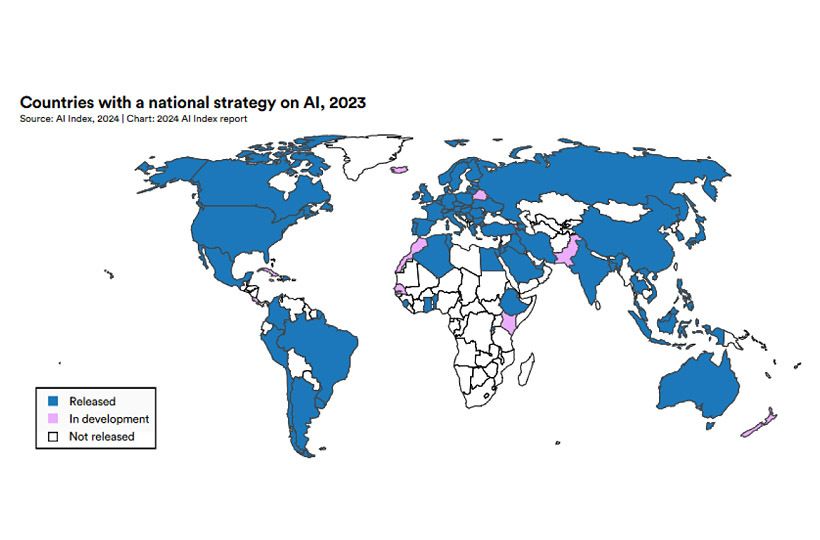

Global AI Privacy Regulation Coverage

Comparison of major regulatory frameworks and their scope of protection

Impact of Security Breaches

The business impact of AI security breaches has been substantial in recent years. Organizations experiencing AI-related data breaches face not only financial penalties under regulations like GDPR (up to €20 million or 4% of total worldwide annual turnover, whichever is higher) but also significant reputational damage and loss of consumer trust.
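Under GDPR Article 83(5), the higher tier of fines is capped at the greater of two figures: EUR 20 million, or 4% of total worldwide annual turnover for the preceding financial year. The sketch below shows only that arithmetic, and the function name is hypothetical. It returns a legal ceiling, not a prediction of what a regulator would actually impose.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Statutory ceiling for the higher tier of GDPR fines (Art. 83(5)).

    The ceiling is EUR 20 million or 4% of total worldwide annual turnover
    of the preceding financial year, whichever is higher. It is an upper
    bound only; actual fines are set case by case.
    """
    fixed_cap = 20_000_000.0
    turnover_cap = worldwide_annual_turnover_eur * 4 / 100
    return max(fixed_cap, turnover_cap)


# EUR 100 million turnover: 4% is EUR 4 million, so the fixed EUR 20 million cap applies.
print(gdpr_max_fine_eur(100_000_000))    # 20000000.0
# EUR 2 billion turnover: 4% is EUR 80 million, which exceeds the fixed cap.
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000.0
```

The fixed amount effectively acts as a floor on the cap for smaller organizations. The turnover-based figure takes over only once annual turnover exceeds EUR 500 million.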

AI Security Breach Impact (2020-2023)

Average costs by industry sector in millions USD

I've found that visualization tools like PageOn.ai are invaluable in helping teams better understand complex privacy requirements. By creating clear visual maps of data flows, regulatory touchpoints, and security protocols, organizations can transform abstract compliance concepts into actionable frameworks that all team members can comprehend.

Foundational Principles of AI Data Privacy

In my experience, successful AI privacy strategies always begin with strong foundational principles. These principles serve as the bedrock upon which all technical implementations and governance structures are built.

Privacy-by-Design Framework

The interconnected principles of privacy-by-design for AI systems:

```mermaid
flowchart TD
    A[Privacy by Design] --> B[Data Minimization]
    A --> C[Purpose Limitation]
    A --> D[Storage Limitation]
    A --> E[User Control]
    A --> F[Default Protection]
    B --> G[Collection Phase]
    C --> H[Processing Phase]
    D --> I[Retention Phase]
    E --> J[User Interface]
    F --> K[System Design]
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    class A orange
```

Privacy-by-Design Approach

I always advocate for embedding privacy considerations from the very inception of AI projects. Rather than treating privacy as an afterthought or compliance checkbox, privacy-by-design integrates data protection into the architecture of AI systems from the beginning. This approach not only ensures regulatory compliance but also builds user trust.

Data Minimization Strategies

One of the most effective privacy principles I've implemented is data minimization: collecting only the data that is actually necessary for the AI system to function. Limiting collection to essential elements reduces both privacy risk and storage costs. Smaller, well-defined datasets are also easier to secure and to process.

Key Data Minimization Tactics

- Identify the minimum data points required for each AI function

- Implement automated data filtering at collection points

- Regularly audit data stores to eliminate unnecessary information

- Create clear data necessity justification processes
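The "automated data filtering at collection points" tactic can be sketched as an allowlist per AI function. Any field without a recorded justification is dropped before storage. This is a minimal illustration: the function names, field names, and the `REQUIRED_FIELDS` table are hypothetical. A real system would load the allowlists from its documented data-necessity justifications.

```python
from typing import Any, Dict, FrozenSet, Mapping

# Hypothetical allowlists: the minimum fields each AI function has a documented need for.
REQUIRED_FIELDS: Dict[str, FrozenSet[str]] = {
    "churn_model": frozenset({"account_age_days", "plan", "monthly_usage"}),
    "support_router": frozenset({"ticket_text", "product"}),
}


def minimize(record: Mapping[str, Any], ai_function: str) -> Dict[str, Any]:
    """Drop every field the given AI function is not justified to receive."""
    allowed = REQUIRED_FIELDS.get(ai_function)
    if allowed is None:
        # Fail closed: no recorded justification means no data is passed on.
        raise KeyError(f"no data-necessity justification for {ai_function!r}")
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "email": "jane@example.com",
    "date_of_birth": "1990-04-01",
    "account_age_days": 412,
    "plan": "pro",
    "monthly_usage": 37.5,
}
print(minimize(raw, "churn_model"))
# {'account_age_days': 412, 'plan': 'pro', 'monthly_usage': 37.5}
```

Failing closed for unknown functions keeps the justification process and the filter in sync. A new AI feature receives no data until someone records why it needs it.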

Purpose Limitation

I've found that clearly defining and visualizing data usage boundaries is crucial. Purpose limitation ensures that data collected for one specific purpose isn't repurposed for unrelated functions without explicit consent. This principle helps prevent function creep and maintains user trust in how their data is being utilized.
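One way to enforce purpose limitation in code is to attach the permitted purposes to data when it is collected. Every later use then has to name its purpose. The sketch below assumes this tagging approach; `TaggedData`, `use_for`, and `PurposeViolation` are hypothetical names, not a specific library.

```python
from dataclasses import dataclass
from typing import FrozenSet


class PurposeViolation(PermissionError):
    """Raised when data is requested for a purpose it was not collected for."""


@dataclass(frozen=True)
class TaggedData:
    value: object
    purposes: FrozenSet[str]  # purposes disclosed (or consented to) at collection time


def use_for(data: TaggedData, purpose: str) -> object:
    """Release the value only for a purpose recorded at collection time."""
    if purpose not in data.purposes:
        raise PurposeViolation(f"not collected for purpose {purpose!r}")
    return data.value


email = TaggedData("jane@example.com", frozenset({"order_confirmation"}))
print(use_for(email, "order_confirmation"))  # jane@example.com
try:
    use_for(email, "model_training")
except PurposeViolation as err:
    print("blocked:", err)  # blocked: not collected for purpose 'model_training'
```

Because the tag travels with the value, repurposing data (for example, reusing it for model training) fails loudly. It cannot happen silently, which is exactly the function creep the principle is meant to prevent.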

Storage Limitation

Implementing effective data lifecycle management is another principle I strongly advocate for. By establishing clear retention periods and automated deletion processes, organizations can ensure that data doesn't persist indefinitely, reducing both privacy risks and storage costs.

Data Lifecycle Management

Optimal retention periods by data sensitivity level
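An automated deletion process like the one described above can start as a periodic sweep. The sweep compares each record's age with the retention period for its sensitivity level. The retention periods below are purely hypothetical placeholders, not recommendations. Real values come from legal and business requirements.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple

# Hypothetical retention periods; real values come from legal and business requirements.
RETENTION: Dict[str, timedelta] = {
    "high": timedelta(days=30),
    "medium": timedelta(days=180),
    "low": timedelta(days=730),
}


def partition_by_retention(records: List[dict], now: datetime) -> Tuple[List[dict], List[dict]]:
    """Split records into (keep, delete) based on age and sensitivity level."""
    keep, delete = [], []
    for record in records:
        # Unknown sensitivity falls back to the shortest (most protective) period.
        period = RETENTION.get(record["sensitivity"], RETENTION["high"])
        if now - record["collected_at"] > period:
            delete.append(record)
        else:
            keep.append(record)
    return keep, delete


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": "a", "sensitivity": "high", "collected_at": datetime(2024, 4, 1, tzinfo=timezone.utc)},
    {"id": "b", "sensitivity": "low", "collected_at": datetime(2024, 4, 1, tzinfo=timezone.utc)},
    {"id": "c", "sensitivity": "unlabeled", "collected_at": datetime(2024, 5, 25, tzinfo=timezone.utc)},
]
keep, delete = partition_by_retention(records, now)
print([r["id"] for r in keep], [r["id"] for r in delete])  # ['b', 'c'] ['a']
```

Defaulting unlabeled records to the shortest period is a deliberate design choice. Missing metadata then errs toward deletion rather than indefinite retention.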

I've successfully used PageOn.ai's AI Blocks to create clear visual representations of data flows and privacy touchpoints. These visualizations help teams understand complex data processes and identify potential privacy vulnerabilities that might otherwise go unnoticed in text-heavy documentation.

Visual representation of data flows with privacy touchpoints created using PageOn.ai

Building a Comprehensive AI Security Framework

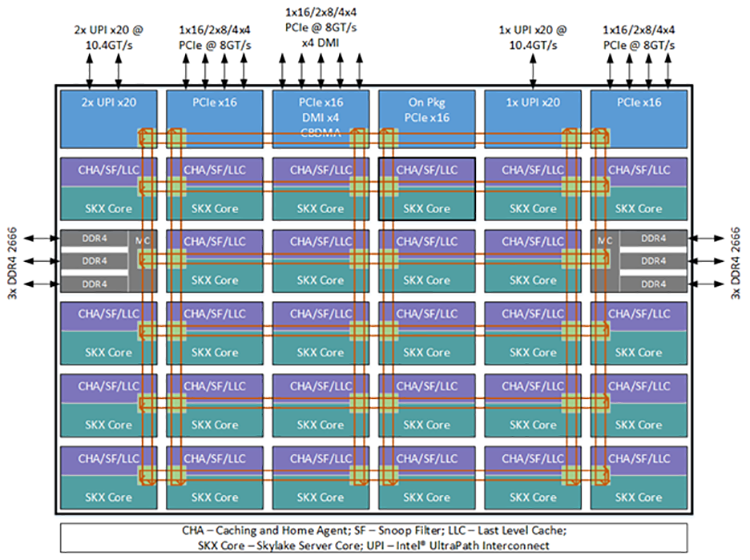

Based on my experience, AI systems require specialized security approaches that address their unique vulnerabilities. A multi-layered security framework provides the most robust protection for AI applications and the sensitive data they process.

Multi-Layered AI Security Framework

The interconnected layers of comprehensive AI protection:

```mermaid
flowchart TD
    A[AI Security Framework] --> B[Data Layer]
    A --> C[Model Layer]
    A --> D[Application Layer]
    A --> E[Infrastructure Layer]
    A --> F[Governance Layer]
    B --> B1[Encryption]
    B --> B2[Access Controls]
    B --> B3[Data Validation]
    C --> C1[Model Hardening]
    C --> C2[Adversarial Testing]
    C --> C3[Output Filtering]
    D --> D1[API Security]
    D --> D2[Authentication]
    D --> D3[Rate Limiting]
    E --> E1[Network Security]
    E --> E2[Container Security]
    E --> E3[Cloud Security]
    F --> F1[Policies]
    F --> F2[Monitoring]
    F --> F3[Incident Response]
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    class A orange
```

Multi-layered Security Approaches

I've found that effective AI security requires multiple defensive layers. This approach ensures that if one security control fails, others remain in place to protect the system. From data encryption to model security to infrastructure protection, each layer addresses specific vulnerabilities in the AI pipeline.
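The layering idea can be sketched as a chain of independent checks. A request reaches the model only if every layer accepts it on its own terms. This is a toy illustration: the API keys, scopes, prompt limit, and layer functions are all hypothetical, and real layers would live in separate components (gateway, identity service, input validator) rather than one module.

```python
from typing import Callable, List, Optional, Tuple

Request = dict
# Each layer returns a rejection reason, or None if the request passes that layer.
Layer = Callable[[Request], Optional[str]]

# Hypothetical API keys and their permitted scopes.
API_KEYS = {"key-analytics": {"inference"}, "key-admin": {"inference", "export"}}
MAX_PROMPT_CHARS = 4000  # hypothetical input limit


def authentication_layer(req: Request) -> Optional[str]:
    return None if req.get("api_key") in API_KEYS else "unknown API key"


def authorization_layer(req: Request) -> Optional[str]:
    scopes = API_KEYS.get(req.get("api_key"), set())
    return None if req.get("action") in scopes else "action not permitted for this key"


def input_validation_layer(req: Request) -> Optional[str]:
    prompt = req.get("prompt")
    if not isinstance(prompt, str) or len(prompt) > MAX_PROMPT_CHARS:
        return "invalid or oversized prompt"
    return None


LAYERS: List[Layer] = [authentication_layer, authorization_layer, input_validation_layer]


def admit(req: Request) -> Tuple[bool, str]:
    """A request is admitted only if every layer independently accepts it."""
    for layer in LAYERS:
        reason = layer(req)
        if reason is not None:
            return False, f"{layer.__name__}: {reason}"
    return True, "admitted"


print(admit({"api_key": "key-analytics", "action": "inference", "prompt": "Summarize Q3"}))
# (True, 'admitted')
print(admit({"api_key": "key-analytics", "action": "export", "prompt": "Dump all records"}))
# (False, 'authorization_layer: action not permitted for this key')
```

Each layer makes its own decision without trusting the others. A valid but over-privileged key is still stopped by authorization, and an authorized caller is still subject to input validation.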

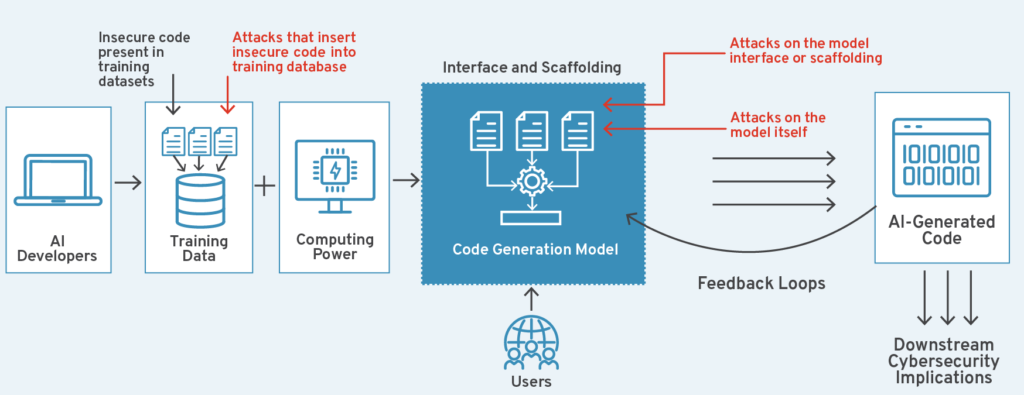

Secure AI Agents

Secure AI agents play a crucial role in maintaining data integrity throughout AI systems. These specialized components monitor data flows, detect anomalies, and enforce security policies autonomously. I've implemented these agents as an essential part of modern AI security architecture to provide continuous protection.

Architecture of secure AI agents monitoring system components
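The anomaly-detection part of such an agent can be as simple as comparing today's data-access volume per principal against its own history. The sketch below uses a z-score for this, which is an assumption on my part; production agents often use richer models. The function name, threshold, and sample data are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List


def flag_anomalous_access(
    baseline: Dict[str, List[int]],
    today: Dict[str, int],
    z_threshold: float = 3.0,
) -> List[str]:
    """Return principals whose record-access count today is unusually high.

    baseline maps each principal (user or service account) to its recent daily
    access counts; a z-score above z_threshold is flagged for review.
    """
    flagged = []
    for principal, count in today.items():
        history = baseline.get(principal, [])
        if len(history) < 2:
            # Not enough history to judge; a real agent might apply a default cap instead.
            continue
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            if count > mu:
                flagged.append(principal)
        elif (count - mu) / sigma > z_threshold:
            flagged.append(principal)
    return flagged


baseline = {"svc-train": [100, 110, 95, 105, 90], "analyst-7": [20, 25, 22, 18, 24]}
print(flag_anomalous_access(baseline, {"svc-train": 108, "analyst-7": 400}))  # ['analyst-7']
```

A flagged principal would typically trigger an alert or a temporary access restriction. That enforcement step is what turns this detector into the policy-enforcing agent described above.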

Visual Framework for AI Safety

Creating a visual framework for AI safety has proven invaluable in my work with organizations. These frameworks help teams identify vulnerabilities that might otherwise remain abstract or overlooked. By mapping potential attack vectors and security controls visually, teams can more effectively prioritize security investments.

Visual AI Risk Assessment

I recommend implementing visual AI risk assessment frameworks for ongoing security monitoring. These tools allow teams to continually evaluate emerging threats and vulnerabilities in a format that's accessible to both technical and non-technical stakeholders.

AI Security Risk Assessment Matrix

Visualization of risk severity by likelihood and impact
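A likelihood-by-impact matrix usually reduces to a simple score: likelihood times impact on 1 to 5 scales, bucketed into bands. The sketch below assumes that common 5x5 convention. The band thresholds and the example threat ratings are hypothetical and illustrative only.

```python
from typing import Dict, List, Tuple


def risk_score(likelihood: int, impact: int) -> int:
    """Classic 5x5 matrix: both axes rated 1 (lowest) to 5 (highest)."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


def risk_band(score: int) -> str:
    # Hypothetical band thresholds; organizations calibrate these to their risk appetite.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def rank_threats(threats: Dict[str, Tuple[int, int]]) -> List[Tuple[str, int, str]]:
    scored = [(name, risk_score(likelihood, impact)) for name, (likelihood, impact) in threats.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(name, score, risk_band(score)) for name, score in scored]


# Illustrative ratings only, not an assessment of any real system.
threats = {
    "prompt injection": (4, 4),
    "training data poisoning": (2, 5),
    "model inversion": (2, 3),
}
for row in rank_threats(threats):
    print(row)
# ('prompt injection', 16, 'high')
# ('training data poisoning', 10, 'medium')
# ('model inversion', 6, 'low')
```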

I've successfully used PageOn.ai to visualize threat models, making abstract security concepts tangible for cross-functional teams. These visualizations have proven particularly valuable when communicating security requirements between technical and non-technical stakeholders, creating a shared understanding of security objectives.

Technical Implementation of AI Privacy Safeguards

In my experience implementing AI privacy safeguards, the technical approaches we choose can dramatically impact both security posture and system performance. Here are the key technical strategies I've found most effective.

Encryption Methodologies

I've implemented specialized encryption methodologies suited to AI training data. Some of them, notably homomorphic encryption, go beyond standard encryption by allowing certain computations to be performed on encrypted data without decrypting it, which keeps the data protected throughout the AI pipeline.
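To make "computing on encrypted data" concrete, the sketch below implements a textbook version of the Paillier cryptosystem. Paillier is a swapped-in illustration: it is only *additively* homomorphic (you can add ciphertexts and multiply them by public constants), unlike the fully homomorphic schemes used for heavier ML workloads. The fixed Mersenne primes are far too small and structured for real use. Production systems should use a vetted library with randomly generated keys of adequate size.

```python
import math
import secrets
from typing import Tuple

# Toy primes (the Mersenne primes 2**31 - 1 and 2**61 - 1) chosen only so the
# example runs instantly. They are far too small and structured for real use.
P = 2**31 - 1
Q = 2**61 - 1


def keygen(p: int = P, q: int = Q) -> Tuple[int, Tuple[int, int, int]]:
    """Return (public modulus n, private key (lambda, mu, n)) using g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, mu is simply lambda^-1 mod n
    return n, (lam, mu, n)


def encrypt(n: int, m: int) -> int:
    if not 0 <= m < n:
        raise ValueError("plaintext must be in [0, n)")
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # fresh randomness per ciphertext
        if math.gcd(r, n) == 1:
            break
    # g^m mod n^2 equals 1 + m*n when g = n + 1
    return (1 + m * n) * pow(r, n, n2) % n2


def decrypt(private_key: Tuple[int, int, int], c: int) -> int:
    lam, mu, n = private_key
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return c1 * c2 % (n * n)


def scale_encrypted(n: int, c: int, k: int) -> int:
    """Raising a ciphertext to k multiplies the plaintext by the public constant k."""
    return pow(c, k, n * n)


n, private_key = keygen()
# A processor sums two encrypted transaction amounts (in cents) and scales the
# result without ever seeing the plaintext values.
c_total = add_encrypted(n, encrypt(n, 120_000), encrypt(n, 34_500))
c_scaled = scale_encrypted(n, c_total, 3)
print(decrypt(private_key, c_total))   # 154500
print(decrypt(private_key, c_scaled))  # 463500
```

Only the holder of `private_key` can read the results. The party doing the arithmetic needs nothing but the public modulus `n`.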

AI-Specific Encryption Approaches

Comparison of encryption methods for AI data protection:

```mermaid
flowchart TD
    A[AI Data Encryption] --> B[Homomorphic Encryption]
    A --> C[Secure Enclaves]
    A --> D[Tokenization]
    A --> E[Functional Encryption]
    B --> B1[Allows computation on encrypted data]
    B --> B2[High computational overhead]
    C --> C1[Hardware-based isolation]
    C --> C2[Trusted execution environments]
    D --> D1[Replaces sensitive data with tokens]
    D --> D2[Maintains data utility]
    E --> E1[Selective data revelation]
    E --> E2[Fine-grained access control]
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    class A orange
```
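Tokenization "maintains data utility" when the same input always maps to the same token, because joins, counts, and deduplication still work on tokenized columns. The sketch below derives tokens with a keyed HMAC-SHA256 and keeps a lookup table for authorized detokenization. The `TokenVault` class is hypothetical, and the in-memory dict stands in for what would be a separately secured store.

```python
import hashlib
import hmac
import secrets
from typing import Dict


class TokenVault:
    """Keyed, deterministic tokenization with a lookup table for authorized detokenization."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self._vault: Dict[str, str] = {}  # token -> original value; store separately and restrict access

    def tokenize(self, value: str) -> str:
        digest = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        token = "tok_" + digest[:32]
        self._vault[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._vault[token]


vault = TokenVault(secrets.token_bytes(32))
t1 = vault.tokenize("4111 1111 1111 1111")
t2 = vault.tokenize("4111 1111 1111 1111")
t3 = vault.tokenize("5500 0000 0000 0004")
print(t1 == t2, t1 == t3)  # True False
print(vault.detokenize(t1))  # 4111 1111 1111 1111
```

Using a secret key (rather than a plain hash) matters. Without the key, an attacker cannot rebuild tokens for guessed values, which is feasible for low-entropy data such as card or phone numbers.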

Differential Privacy Techniques

I've found differential privacy to be an essential technique for balancing utility and anonymization in AI systems. These methods add carefully calibrated noise to data or query results. This provides a mathematical guarantee that any single individual's record has only a limited, quantifiable effect on the output, which bounds the risk of re-identification while preserving the statistical utility of the dataset.

Privacy-Utility Tradeoff with Differential Privacy

Impact of privacy budget (epsilon) on model accuracy
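The role of epsilon is easiest to see with the Laplace mechanism on a counting query. Adding or removing one person changes a count by at most 1, so noise drawn from Laplace(0, 1/epsilon) yields epsilon-differential privacy for that single query. This is a teaching sketch: Python's `random` module is not cryptographically secure, and naive floating-point noise has known weaknesses. Production work should rely on a vetted library such as OpenDP. The function name and sample data are hypothetical.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def dp_count(
    values: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Laplace mechanism for a counting query.

    Adding or removing one person's record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two independent Exponential(mean=scale) draws is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


ages = [34, 51, 29, 62, 45, 38, 70, 55]
rng = random.Random(7)
for eps in (0.1, 1.0, 10.0):
    # Smaller epsilon means stronger privacy and noisier answers (true count is 4).
    print(eps, round(dp_count(ages, lambda a: a >= 50, eps, rng), 2))
```

The average magnitude of the noise equals 1/epsilon. This is the privacy-utility tradeoff in its simplest form: halving epsilon doubles the typical error.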

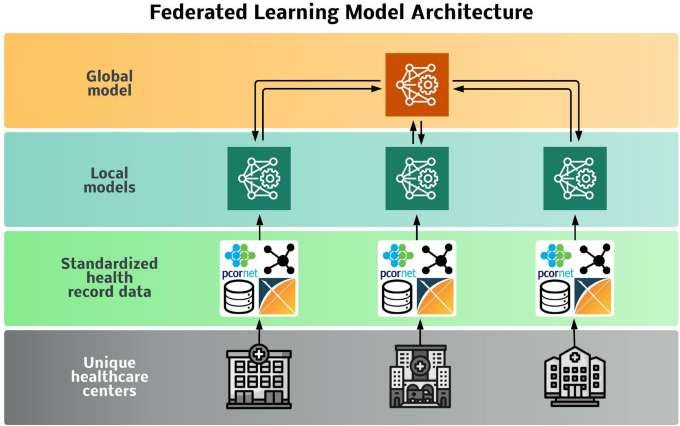

Federated Learning Approaches

One of the most promising privacy-preserving techniques I've implemented is federated learning, which keeps sensitive data localized while still enabling collaborative model training. Rather than centralizing all training data, the model travels to the data, with only model updates being shared.

Federated learning architecture keeping sensitive data on local devices
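The "model travels to the data" loop can be illustrated with federated averaging (FedAvg). In each round, every client trains on its own data. The server then averages the returned weights, weighting each client by its sample count. To stay self-contained, this sketch assumes a tiny 1-D linear model trained with plain gradient descent. The learning rate, round count, and client datasets are hypothetical values chosen so the example converges.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]  # (intercept, slope) of a 1-D linear model
Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_train(global_w: Weights, data: Dataset, lr: float = 0.5, epochs: int = 20) -> Weights:
    """Full-batch gradient descent on squared error, run on the client's own data."""
    w0, w1 = global_w
    n = len(data)
    for _ in range(epochs):
        g0 = sum((w0 + w1 * x) - y for x, y in data) / n
        g1 = sum(((w0 + w1 * x) - y) * x for x, y in data) / n
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return w0, w1


def fed_avg(results: List[Tuple[Weights, int]]) -> Weights:
    """Server step: average client weights, weighted by each client's sample count."""
    total = sum(count for _, count in results)
    w0 = sum(w[0] * count for w, count in results) / total
    w1 = sum(w[1] * count for w, count in results) / total
    return w0, w1


def train_federated(clients: List[Dataset], rounds: int = 50) -> Weights:
    w: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Only the updated weights and a sample count are sent back to the server.
        results = [(local_train(w, data), len(data)) for data in clients]
        w = fed_avg(results)
    return w


# Three "hospitals" hold different slices of data generated by y = 2x + 1.
clients = [
    [(x / 10, 2 * x / 10 + 1) for x in range(0, 5)],
    [(x / 10, 2 * x / 10 + 1) for x in range(5, 10)],
    [(0.2, 1.4), (0.6, 2.2), (1.0, 3.0)],
]
w0, w1 = train_federated(clients)
print(round(w0, 2), round(w1, 2))  # 1.0 2.0
```

Note that model updates can still leak information about the training data. Real deployments therefore often combine federated learning with secure aggregation or differential privacy.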

Secure Multi-party Computation

For collaborative AI development involving multiple organizations, I've successfully implemented secure multi-party computation (MPC) protocols. These sophisticated cryptographic techniques allow multiple parties to jointly compute functions over their inputs while keeping those inputs private from other participants.
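A simple MPC building block is additive secret sharing. Each party splits its private input into random shares that sum to the input modulo a public prime. Every party adds up the shares it receives, and only those partial sums are published. The sketch below simulates all parties in one process, assumes honest-but-curious participants and private channels, and uses hypothetical names and inputs. Real protocols add authentication and protection against malicious parties.

```python
import secrets
from typing import List

# Public prime modulus; inputs and their total must stay below it.
MODULUS = 2**61 - 1


def share(secret: int, n_parties: int) -> List[int]:
    """Split a secret into n additive shares; any n - 1 of them look uniformly random."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % MODULUS


def secure_sum(private_inputs: List[int]) -> int:
    """Simulates n parties jointly computing the sum of their private inputs."""
    n = len(private_inputs)
    # Party i splits its input and sends share j to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received; this partial sum alone reveals nothing.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals only the total.
    return reconstruct(partial_sums)


# Three organizations learn their combined count without revealing their own.
print(secure_sum([120, 45, 300]))  # 465
```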

Technical Implementation Checklist

- Evaluate data sensitivity to select appropriate encryption methods

- Determine appropriate epsilon values for differential privacy based on use case

- Assess network conditions for feasibility of federated learning deployment

- Consider computational overhead of secure MPC protocols against privacy benefits

- Implement secure key management infrastructure for all cryptographic operations

I've utilized PageOn.ai's Deep Search to integrate relevant security visualizations and technical diagrams into my implementation documentation. This capability has been invaluable for creating comprehensive technical specifications that clearly communicate complex security architectures to development teams.

Ethical Considerations in AI Data Usage

Throughout my work with AI systems, I've found that technical safeguards must be complemented by strong ethical frameworks. These ethical considerations help ensure that privacy protections align with human values and societal expectations.

AI Ethics Framework

Core ethical principles for responsible AI data usage:

```mermaid
flowchart TD
    A[AI Ethics Framework] --> B[Transparency]
    A --> C[Fairness]
    A --> D[Human Autonomy]
    A --> E[Accountability]
    A --> F[Privacy]
    B --> B1[Explainability]
    B --> B2[Disclosure]
    C --> C1[Non-discrimination]
    C --> C2[Equal treatment]
    D --> D1[Human oversight]
    D --> D2[Meaningful consent]
    E --> E1[Responsibility]
    E --> E2[Auditability]
    F --> F1[Data protection]
    F --> F2[Minimization]
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    class A orange
```

Transparency Obligations

I believe that explaining data usage to stakeholders is not just a regulatory requirement but an ethical imperative. Transparent AI systems build trust by clearly communicating what data is collected, how it's used, and what influences algorithmic decisions. This transparency should be accessible to both technical and non-technical audiences.

Fairness in AI

In my experience, preventing discriminatory outcomes requires proper data governance from the earliest stages of AI development. This includes diverse training data, regular bias audits, and fairness metrics that are monitored throughout the AI lifecycle. Without these measures, AI systems can inadvertently perpetuate or amplify societal biases.

AI Fairness Assessment Methods

Effectiveness comparison of different fairness evaluation approaches
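Two widely used fairness metrics that can be monitored throughout the lifecycle are the demographic parity difference and the disparate impact ratio. The first is the gap between the highest and lowest group selection rates. The second is the lowest rate divided by the highest, often compared against the "four-fifths" (0.8) heuristic. The sketch below computes both from binary predictions. The group labels and data are hypothetical, and these metrics are only one lens on fairness.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive predictions (1) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any positive outcome, treat the groups as equal.
    return 1.0 if highest == 0 else min(rates.values()) / highest


preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))                   # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))     # 0.5
print(round(disparate_impact_ratio(preds, groups), 3))  # 0.333
```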

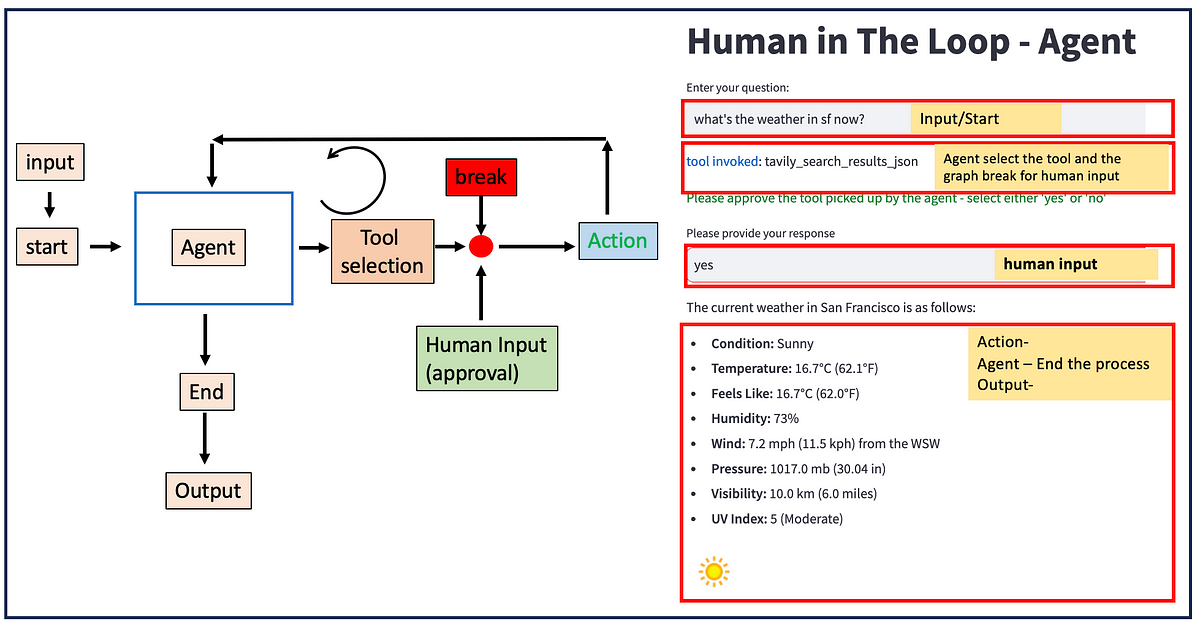

Human Oversight Requirements

I strongly advocate for keeping humans meaningfully involved in AI systems, especially those making consequential decisions. This human-in-the-loop approach ensures that automated processes can be monitored, questioned, and overridden when necessary. Effective oversight requires both technical mechanisms and organizational processes.

Human oversight integration points in AI decision processes
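A common way to wire in human oversight is to route decisions by stakes and model confidence. Confident, low-stakes outcomes are applied automatically. Everything else goes to a review queue, where a person's decision always wins. In the sketch below, the threshold, function names, and queue structure are hypothetical; real systems also log every override for auditability.

```python
from dataclasses import dataclass
from typing import List, Tuple

REVIEW_THRESHOLD = 0.9  # hypothetical minimum confidence for automatic decisions


@dataclass
class Decision:
    outcome: str
    confidence: float
    decided_by: str  # "model" or "human"


def route(model_outcome: str, confidence: float, high_stakes: bool,
          review_queue: List[Tuple[str, float]]) -> Decision:
    """Auto-apply confident, low-stakes outcomes; send everything else to a person."""
    if high_stakes or confidence < REVIEW_THRESHOLD:
        review_queue.append((model_outcome, confidence))
        return Decision("pending_human_review", confidence, "human")
    return Decision(model_outcome, confidence, "model")


def human_override(decision: Decision, reviewer_outcome: str) -> Decision:
    """A reviewer's outcome always replaces the model's suggestion."""
    return Decision(reviewer_outcome, decision.confidence, "human")


queue: List[Tuple[str, float]] = []
print(route("approve", 0.97, high_stakes=False, review_queue=queue).decided_by)  # model
print(route("deny", 0.62, high_stakes=False, review_queue=queue).outcome)       # pending_human_review
print(route("approve", 0.99, high_stakes=True, review_queue=queue).outcome)     # pending_human_review
print(len(queue))  # 2
```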

Visualizing Ethical Frameworks

I've used PageOn.ai to create visual representations of ethical frameworks, which has proven invaluable for creating organizational alignment. These visualizations transform abstract ethical principles into concrete decision trees and workflows that teams can follow consistently.

Addressing Emerging Ethical Concerns

As AI assistants and autonomous systems become more prevalent, new ethical challenges continue to emerge. I've found that regular ethical reviews and stakeholder consultations are essential to identify and address these evolving concerns before they become problematic.

Compliance Documentation & Visualization Strategies

In my experience, effective compliance isn't just about meeting requirements—it's about making those requirements accessible and actionable for everyone in the organization. Visual documentation strategies have proven particularly effective in this regard.

Visual Data Mapping Tools

I've created visual data mapping tools that clearly show how data flows through AI systems, where it's stored, and which privacy controls are applied at each stage. These visual maps have proven invaluable for both regulatory compliance and internal governance.

AI Data Flow Compliance Map

Visualization of data movement with compliance checkpoints:

```mermaid
flowchart TD
    A[Data Collection] -->|Consent Validation| B[Data Storage]
    B -->|Access Controls| C[Data Processing]
    C -->|Purpose Validation| D[AI Training]
    D -->|Model Validation| E[Inference Engine]
    E -->|Output Filtering| F[User Interface]
    A1[GDPR Art. 6] -.-> A
    A2[CCPA Notice] -.-> A
    B1[GDPR Art. 32] -.-> B
    B2[ISO 27001] -.-> B
    C1["GDPR Art. 5(1)(b)"] -.-> C
    C2[HIPAA Processing] -.-> C
    D1[Bias Audit] -.-> D
    D2[Documentation] -.-> D
    E1[Explainability] -.-> E
    E2[Monitoring] -.-> E
    F1[User Rights] -.-> F
    F2[Transparency] -.-> F
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    classDef blue fill:#4299e1,stroke:#4299e1,color:white
    class A,B,C,D,E,F blue
    class A1,A2,B1,B2,C1,C2,D1,D2,E1,E2,F1,F2 orange
```

Data Processing Agreements

I've developed data processing agreements with visual components that clearly delineate responsibilities and requirements between data controllers and processors. These visual elements help ensure all parties understand their compliance obligations in AI partnerships.

Visual data processing agreement showing responsibility allocation

Intuitive Privacy Notices

I believe that privacy notices should be clear and accessible to all users. I've designed intuitive privacy notices with visual components that explain complex data practices in ways that average users can understand, increasing transparency and building trust.

Automated Compliance Monitoring

To ensure ongoing compliance, I've implemented automated compliance monitoring dashboards that provide real-time visibility into privacy metrics and potential issues. These tools help organizations move from periodic compliance checks to continuous monitoring.

Compliance Monitoring Dashboard

Key metrics for ongoing privacy compliance tracking
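A typical dashboard metric is how well data subject requests (access, deletion, and similar) are answered within their legal deadlines. GDPR Article 12(3) requires a response within one month, and CCPA allows 45 days. Both permit extensions, which the sketch below does not model, and "one month" is simplified to 30 days. The record layout and function name are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Approximate response deadlines. GDPR Art. 12(3) says "within one month" (30 days is
# a simplification); CCPA allows 45 days. Both permit extensions not modeled here.
DEADLINES: Dict[str, timedelta] = {"GDPR": timedelta(days=30), "CCPA": timedelta(days=45)}


def dsar_metrics(requests: List[dict], now: datetime) -> Dict[str, float]:
    """Count open requests past their deadline and the on-time rate of closed ones."""
    open_overdue = 0
    closed = 0
    closed_on_time = 0
    for req in requests:
        deadline = DEADLINES[req["regime"]]
        if req["closed_at"] is None:
            if now - req["received_at"] > deadline:
                open_overdue += 1
        else:
            closed += 1
            if req["closed_at"] - req["received_at"] <= deadline:
                closed_on_time += 1
    return {
        "open_overdue": open_overdue,
        "on_time_rate": closed_on_time / closed if closed else 1.0,
    }


now = datetime(2024, 6, 1)
requests = [
    {"regime": "GDPR", "received_at": datetime(2024, 4, 20), "closed_at": None},
    {"regime": "CCPA", "received_at": datetime(2024, 4, 20), "closed_at": None},
    {"regime": "GDPR", "received_at": datetime(2024, 3, 1), "closed_at": datetime(2024, 3, 20)},
    {"regime": "GDPR", "received_at": datetime(2024, 3, 1), "closed_at": datetime(2024, 4, 15)},
]
print(dsar_metrics(requests, now))  # {'open_overdue': 1, 'on_time_rate': 0.5}
```

Running a check like this on a schedule, rather than during periodic audits, is what turns compliance review into the continuous monitoring described above.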

I've used PageOn.ai to transform complex compliance requirements into clear visual guides for teams. These visualizations help bridge the gap between legal requirements and practical implementation, making compliance more achievable across the organization.

Future-Proofing Your AI Privacy & Security Strategy

Based on my experience, AI privacy and security is a rapidly evolving field. Organizations need forward-looking strategies that anticipate emerging challenges and regulatory changes rather than merely reacting to them.

Emerging AI Tool Trends

Looking ahead to AI tool trends for 2025, I anticipate several developments with significant privacy implications. These include more sophisticated synthetic data generation, enhanced federated learning capabilities, and AI-powered privacy compliance tools that can automatically detect and remediate potential issues.

Emerging AI Privacy Technologies (2023-2026)

Adoption timeline and privacy impact assessment

Evolving Regulatory Landscapes

I've been closely monitoring regulatory developments across global markets, and it's clear that AI-specific regulations are rapidly evolving. Organizations should prepare for more stringent requirements around algorithmic transparency, automated decision-making, and data rights. A modular privacy framework that can adapt to these changes will be essential.

Global AI regulatory landscape and implementation timeline

Adaptive Security Frameworks

I recommend building adaptive security frameworks that evolve with AI capabilities. These frameworks should incorporate continuous monitoring, regular security assessments, and agile response mechanisms that can quickly adapt to new threats as they emerge.

Privacy-Preserving Innovation

In my work with organizations, I've helped develop privacy-preserving AI innovation strategies that make privacy a competitive advantage rather than a constraint. By embedding privacy considerations into the R&D process, organizations can develop innovative AI solutions that respect user privacy by design.

Future-Proofing Checklist

- Establish a privacy regulatory monitoring system

- Develop modular privacy frameworks that can adapt to new requirements

- Invest in privacy-enhancing technologies research

- Create cross-functional privacy innovation teams

- Build scenario planning capabilities for emerging privacy challenges

I've utilized PageOn.ai's Agentic capabilities to model future privacy scenarios and create preparedness visualizations. These tools help organizations anticipate potential challenges and develop proactive strategies rather than reactive responses.

Case Studies: AI Privacy & Security Success Stories

Throughout my career, I've had the opportunity to work with organizations across various industries implementing robust AI privacy and security measures. These case studies highlight practical applications of the principles and techniques discussed in this guide.

Healthcare: Secure AI Diagnostics

I worked with a healthcare provider to implement secure AI diagnostics while protecting sensitive patient data. By using federated learning, the diagnostic models were trained across multiple hospitals without centralizing patient data. This approach supported HIPAA compliance while still enabling highly accurate diagnostic capabilities.

Healthcare AI Privacy Architecture

Federated learning implementation for patient data protection:

```mermaid
flowchart TD
    A[Central Server] -->|Send Global Model| B[Hospital 1]
    A -->|Send Global Model| C[Hospital 2]
    A -->|Send Global Model| D[Hospital 3]
    B -->|Return Model Updates Only| A
    C -->|Return Model Updates Only| A
    D -->|Return Model Updates Only| A
    B1[Patient Data] -->|Local Training| B
    C1[Patient Data] -->|Local Training| C
    D1[Patient Data] -->|Local Training| D
    A -->|Deployment| E[Secure Inference Service]
    classDef orange fill:#FF8000,stroke:#FF8000,color:white
    classDef blue fill:#4299e1,stroke:#4299e1,color:white
    classDef green fill:#48bb78,stroke:#48bb78,color:white
    class A orange
    class B,C,D blue
    class B1,C1,D1 green
    class E orange
```

Finance: Privacy-Preserving Fraud Detection

I helped a financial institution implement AI-powered fraud detection while maintaining strict privacy protections. Using homomorphic encryption techniques, the system could analyze transaction patterns for fraud indicators without decrypting sensitive customer financial data, balancing security needs with privacy requirements.

Financial Fraud Detection Results

Performance comparison before and after privacy-preserving implementation

Smart Cities: Balancing Surveillance and Privacy

I consulted on a smart city project that needed to balance surveillance capabilities with citizen privacy. We implemented privacy-by-design principles including automated anonymization of facial features in public camera feeds, strict purpose limitations, and transparent public dashboards showing what data was being collected and how it was being used.

E-commerce: Personalization without Privacy Compromise

I worked with an e-commerce platform to implement personalization capabilities without compromising customer privacy. By using on-device processing and differential privacy techniques, the platform could provide highly relevant recommendations while minimizing the collection and centralization of personal data.

E-commerce personalization architecture with privacy-preserving components

For each of these case studies, I used PageOn.ai to create compelling visual narratives that demonstrated best practices. These visualizations were instrumental in helping stakeholders understand complex privacy and security architectures and their business benefits.

Transform Your AI Privacy & Security Visualization

Ready to create clear, compelling visual representations of your AI privacy and security frameworks? PageOn.ai helps you transform complex privacy concepts into intuitive visual guides that everyone in your organization can understand and implement.

Conclusion: Building a Privacy-First AI Future

Throughout this guide, I've shared the strategies and techniques I've found most effective for implementing robust AI data privacy and security. From foundational principles to technical implementations to future-proofing strategies, these approaches help organizations harness AI's power while respecting privacy and building trust.

As AI continues to evolve, so too will privacy and security challenges. By adopting a proactive, visual approach to these challenges, organizations can build AI systems that not only comply with regulations but also align with human values and expectations.

I encourage you to use the frameworks and visualization strategies outlined in this guide as a starting point for your own AI privacy and security journey. With tools like PageOn.ai, you can transform complex privacy concepts into clear visual representations that drive understanding and action across your organization.
