Architecting Secure AI Systems: A Visual Framework for Permission Management and Risk Mitigation

A comprehensive approach to balancing AI autonomy with robust security controls

As AI systems become increasingly autonomous and integrated into critical workflows, the need for sophisticated security frameworks has never been more pressing. I've developed this guide to help you navigate the complex landscape of AI security, with a special focus on permission management and risk visualization techniques that keep your automated systems both powerful and protected.

The Evolving Landscape of AI Security Challenges

I've been monitoring the AI security landscape for years, and what I'm seeing now is unprecedented. As AI systems gain more autonomy and access to critical systems, they introduce unique security vulnerabilities that traditional frameworks simply weren't designed to address. The core challenge we face is what I call the "permission paradox" – how do we grant AI agents enough freedom to be useful while implementing strict security controls to prevent misuse?

The growing complexity of AI security threats requires new approaches to visualization and management

Recent incidents have highlighted the urgency of this challenge. In 2023 alone, we saw several high-profile cases where inadequately secured AI agents inadvertently exposed sensitive data or made unauthorized system changes. These incidents typically share a common pattern: AI systems with overly broad permissions operating without proper monitoring or guardrails.

The Evolution of AI Security Threats

As AI capabilities expand, security threats evolve in complexity and potential impact:

flowchart TD

A[Traditional Security Threats] --> B[AI-Enhanced Threats]

B --> C[Autonomous AI Threats]

A --> D[Known Attack Patterns]

A --> E[Limited Scope]

A --> F[Human-Driven]

B --> G[Learning & Adaptation]

B --> H[Broader Attack Surface]

B --> I[Human-AI Collaboration]

C --> J[Self-Modification]

C --> K[Novel Attack Vectors]

C --> L[System Manipulation]

style A fill:#f5f5f5,stroke:#333

style B fill:#fff0e6,stroke:#FF8000

style C fill:#ffe0cc,stroke:#FF8000,stroke-width:2px

style J fill:#ffe0cc,stroke:#FF8000,stroke-width:2px

style K fill:#ffe0cc,stroke:#FF8000,stroke-width:2px

style L fill:#ffe0cc,stroke:#FF8000,stroke-width:2px

What makes these security challenges particularly hard is the rising complexity of multi-agent AI environments. When multiple AI systems interact, they create emergent behaviors that are difficult to predict and secure. Traditional security frameworks that rely on static rules and predefined threat models struggle to keep pace with intelligent, adaptive systems.

In my experience working with enterprise AI deployments, I've found that organizations often underestimate how fundamentally different AI security needs to be from conventional cybersecurity approaches. The dynamic nature of agentic workflows requires us to rethink security from the ground up.

Core Components of an Effective AI Security Framework

Through my work implementing AI security frameworks across various industries, I've identified several essential components that form the foundation of any robust approach. Let's examine these critical elements and how they work together to create comprehensive protection.

Permission Structures for AI Systems

Designing effective permission structures for AI agents requires a fundamentally different approach than traditional user-based access control. I've found that the most successful implementations use role-based access control systems that are specifically optimized for AI agents.
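
As a minimal sketch of role-based access control applied to agents rather than human users, the example below maps roles to permission strings and checks an agent's request against them. The role names, permission strings, and the `Agent` and `is_allowed` names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permission strings, for illustration only.
ROLE_PERMISSIONS = {
    "report_reader": {"reports:read"},
    "ticket_triager": {"tickets:read", "tickets:update"},
}


@dataclass(frozen=True)
class Agent:
    name: str
    roles: frozenset = field(default_factory=frozenset)


def is_allowed(agent: Agent, permission: str) -> bool:
    """Return True only if one of the agent's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in agent.roles
    )


triager = Agent("triage-bot", frozenset({"ticket_triager"}))
```

Unknown roles grant nothing, so a misconfigured agent is denied by default instead of being given broad access.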

A visual permission map clarifies AI agent capabilities and security boundaries

The key innovation I recommend is implementing just-in-time permission granting protocols. Rather than giving AI agents broad permissions at initialization, these systems request specific permissions only when needed and with appropriate verification. This approach dramatically reduces the attack surface and potential for misuse.

Creating comprehensive audit trails is equally important. By capturing permission usage patterns and analyzing them for anomalies, we can detect potential security incidents before they escalate. PageOn.ai's Deep Search functionality can be invaluable here, helping to integrate relevant security standards and compliance requirements directly into your visual security framework.
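
One simple way to analyze permission usage for anomalies is to compare each new event against a baseline of known-good behavior. The sketch below assumes a hypothetical `AuditTrail` class and treats "a permission this agent has never used before" as the anomaly signal. Real deployments would likely use richer signals such as frequency, time of day, or target resource.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    agent: str
    permission: str
    resource: str


class AuditTrail:
    """Append-only log that flags permissions an agent has not used before."""

    def __init__(self, baseline):
        # baseline: iterable of AuditEvent from a period of known-good operation
        self.events = []
        self._seen = defaultdict(set)
        for event in baseline:
            self._seen[event.agent].add(event.permission)

    def record(self, event: AuditEvent) -> bool:
        """Store the event; return True if it deviates from the baseline."""
        self.events.append(event)
        return event.permission not in self._seen[event.agent]
```

Every event is stored whether or not it is flagged, so the trail stays complete for later review.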

Just-in-Time Permission Flow

How permissions are granted only when needed and with appropriate verification:

sequenceDiagram

participant User

participant AI Agent

participant Auth Service

participant Resource

AI Agent->>Auth Service: Request permission for task

Auth Service->>Auth Service: Evaluate request context

alt High-risk operation

Auth Service->>User: Request explicit approval

User->>Auth Service: Grant approval

else Low-risk operation

Auth Service->>Auth Service: Automatic approval

end

Auth Service->>AI Agent: Issue time-limited token

AI Agent->>Resource: Access with token

Resource->>Resource: Verify token & scope

Resource->>AI Agent: Return results

AI Agent->>Auth Service: Release permission
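
The flow above can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not a production authorization service: `AuthService`, `Token`, and `HIGH_RISK_SCOPES` are hypothetical names, the user's approval is represented by a callback, and the clock is injected so expiry can be tested.

```python
import secrets
from dataclasses import dataclass

# Hypothetical scopes that require explicit human approval.
HIGH_RISK_SCOPES = {"db:write", "payments:execute"}


@dataclass
class Token:
    value: str
    scope: str
    expires_at: float


class AuthService:
    def __init__(self, clock, approve_high_risk, ttl_seconds=60.0):
        self._clock = clock                # callable returning the current time in seconds
        self._approve = approve_high_risk  # callable(agent, scope) -> bool, stands in for the user
        self._ttl = ttl_seconds
        self._active = {}

    def request(self, agent, scope):
        """Issue a time-limited token, asking for approval on high-risk scopes."""
        if scope in HIGH_RISK_SCOPES and not self._approve(agent, scope):
            raise PermissionError(f"{agent} was denied scope {scope}")
        token = Token(secrets.token_hex(16), scope, self._clock() + self._ttl)
        self._active[token.value] = token
        return token

    def verify(self, token_value, scope):
        """Resource-side check: token must exist, match the scope, and be unexpired."""
        token = self._active.get(token_value)
        return (
            token is not None
            and token.scope == scope
            and self._clock() < token.expires_at
        )

    def release(self, token_value):
        """Called by the agent when the task is finished."""
        self._active.pop(token_value, None)
```

Because each token carries a single scope and a short lifetime, a leaked token is useful only for one kind of operation and only briefly.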

When designing these permission structures, I've found that using a visual framework for AI safety greatly enhances team understanding and implementation accuracy. Visualizing complex permission relationships makes it much easier to identify potential security gaps before they can be exploited.

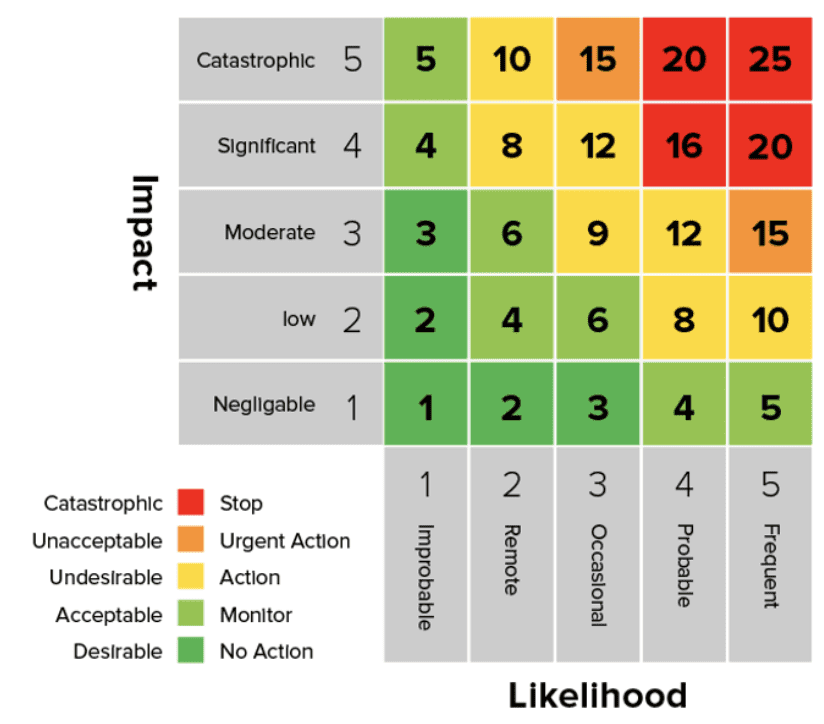

Visualizing Risk in Complex AI Workflows

One of the most challenging aspects of AI security is making abstract risks tangible and actionable. I've developed several techniques for transforming complex security concepts into clear visual representations that help teams understand and mitigate risks effectively.

Risk heatmap highlighting vulnerable components in an AI workflow

Risk visualization dashboards serve as the command center for AI security operations. By creating intuitive risk heatmaps, we can immediately highlight the most vulnerable components in our workflows. This visual approach helps prioritize security efforts where they'll have the greatest impact.
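
A heatmap needs a score behind each cell. A common convention, assumed here and not taken from any specific standard, is to rate likelihood and impact from 1 to 5 and multiply them. The thresholds, color bands, and component names below are illustrative assumptions.

```python
def risk_level(likelihood: int, impact: int) -> str:
    """Bucket a likelihood x impact score (each rated 1-5) into a heatmap band."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    score = likelihood * impact
    if score >= 15:
        return "red"
    if score >= 6:
        return "amber"
    return "green"


def rank_components(components: dict) -> list:
    """Sort component names from highest to lowest risk score."""
    return sorted(
        components,
        key=lambda name: components[name][0] * components[name][1],
        reverse=True,
    )


# Hypothetical workflow components mapped to (likelihood, impact).
workflow = {
    "prompt_intake": (3, 3),
    "tool_executor": (4, 5),
    "report_renderer": (1, 2),
}
```

Ranking the components gives the dashboard a natural ordering, so the most exposed part of the workflow appears first.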

Real-time Security Monitoring Visuals

Effective security monitoring for AI systems requires dynamic dashboards that adapt to emerging threats. I've implemented systems that create visual alerts prioritized by severity, making it immediately obvious when critical security incidents require attention.

Security Incident Response Workflow

Visual decision tree for automated security response protocols:

flowchart TD

A[Security Incident Detected] --> B{Severity Assessment}

B -->|Critical| C[Immediate System Isolation]

B -->|High| D[Alert Security Team]

B -->|Medium| E[Log & Monitor]

B -->|Low| F[Automated Resolution]

C --> G[Forensic Analysis]

D --> G

E --> H{Escalation Needed?}

F --> I[Update Security Rules]

H -->|Yes| D

H -->|No| I

G --> J[Containment & Recovery]

J --> K[Post-Incident Review]

K --> I

style A fill:#f5f5f5,stroke:#333

style B fill:#fff0e6,stroke:#FF8000

style C fill:#ffe0cc,stroke:#FF8000

style D fill:#ffe0cc,stroke:#FF8000
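
The decision tree above translates directly into a routing function. In this sketch the step names are taken from the diagram's node labels, while the function name `response_plan` and the `escalate` flag (standing in for the "Escalation Needed?" decision) are assumptions.

```python
def response_plan(severity: str, escalate: bool = False) -> list:
    """Return the ordered response steps for an incident of the given severity."""
    # Shared tail for any path that reaches forensic analysis.
    investigate = [
        "forensic_analysis",
        "containment_and_recovery",
        "post_incident_review",
        "update_security_rules",
    ]
    if severity == "critical":
        return ["immediate_system_isolation"] + investigate
    if severity == "high":
        return ["alert_security_team"] + investigate
    if severity == "medium":
        if escalate:
            return ["log_and_monitor", "alert_security_team"] + investigate
        return ["log_and_monitor", "update_security_rules"]
    if severity == "low":
        return ["automated_resolution", "update_security_rules"]
    raise ValueError(f"unknown severity: {severity}")
```

Every path ends in `update_security_rules`, which mirrors the diagram: each incident, however minor, feeds back into the rule set.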

Pattern recognition plays a crucial role in detecting unusual AI behavior before it becomes problematic. By visualizing normal operational patterns, security teams can quickly spot anomalies that might indicate a security breach or malfunction.
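
One basic way to compare behavior against a normal operational pattern is a z-score test: flag any observation that sits far from the historical mean. The sketch below assumes a single numeric metric (API calls per minute) and a threshold of three standard deviations. Both are illustrative choices, and real agent behavior may need more sophisticated models.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, threshold=3.0):
    """Flag an observation more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to define "normal"
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return observed != mu  # perfectly constant baseline: any change is unusual
    return abs(observed - mu) / sigma > threshold


# Hypothetical baseline: API calls per minute made by one agent.
calls_per_minute = [10, 12, 11, 9, 10, 11, 12, 10]
```

With this baseline, a reading of 11 calls per minute passes, while a sudden burst of 40 is flagged.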

In my security practice, I've found that PageOn.ai's Vibe Creation feature is particularly valuable for transforming complex security concepts into accessible visual frameworks. This allows both technical and non-technical stakeholders to understand security risks and contribute to mitigation strategies. When working with AI work assistants, clear visualization of security boundaries becomes even more critical.

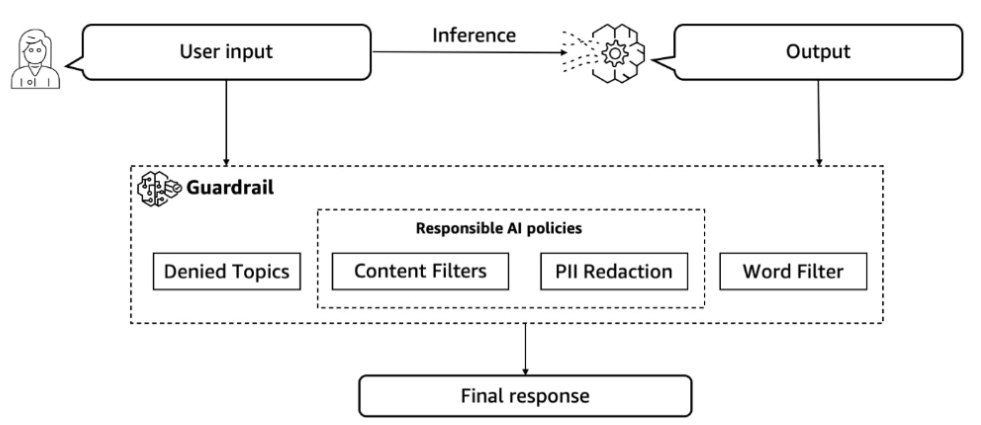

Implementing Guardrails in Automated AI Workflows

My experience implementing AI systems across various industries has taught me that effective guardrails are essential for maintaining security without sacrificing functionality. These safety mechanisms act as boundaries that prevent AI systems from taking potentially harmful actions.

Conceptual visualization of multiple guardrail layers protecting an AI system

Embedding security checkpoints within AI decision processes is one of the most effective guardrail strategies I've implemented. These checkpoints verify that actions align with security policies before execution, creating a proactive defense against potential misuse.
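
A checkpoint of this kind can be sketched as a decorator that consults policy before the wrapped action runs. The `checkpoint` decorator, the `PolicyViolation` exception, and the blocked action names are hypothetical. A real policy engine would evaluate much more context than an action name.

```python
import functools

# Hypothetical policy: actions an agent may never perform automatically.
BLOCKED_ACTIONS = {"delete_database", "disable_logging"}


class PolicyViolation(Exception):
    """Raised when an action is rejected at a checkpoint."""


def checkpoint(action_name):
    """Decorator that checks the action against policy before execution."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if action_name in BLOCKED_ACTIONS:
                raise PolicyViolation(f"action '{action_name}' blocked by policy")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@checkpoint("send_report")
def send_report(recipient):
    return f"report sent to {recipient}"


@checkpoint("delete_database")
def delete_database(name):
    return f"{name} deleted"
```

The check runs on every call, not once at decoration time, so a change to the policy set takes effect on the next invocation.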

Secure AI Agent Design Patterns

When designing AI agents with security as a priority, I follow several architectural patterns that have proven effective across different use cases. The principle of least privilege is foundational – each agent should have access only to the specific tools and data required for its tasks, nothing more.

Secure AI Agent Architecture

Core components and security boundaries of a secure AI agent design:

flowchart TD

subgraph "Security Boundary"

A[Input Validation Layer]

B[Intent Analysis Engine]

C[Permission Manager]

D[Execution Sandbox]

E[Output Filtering]

F[Audit Logger]

end

G[External Request] --> A

A --> B

B --> C

C --> D

D --> E

E --> H[Secure Response]

A --> F

B --> F

C --> F

D --> F

E --> F

I[Tool Access] -.-> D

C -.-> I

style A fill:#fff0e6,stroke:#FF8000

style C fill:#fff0e6,stroke:#FF8000

style D fill:#fff0e6,stroke:#FF8000

style E fill:#fff0e6,stroke:#FF8000

style F fill:#fff0e6,stroke:#FF8000
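
To make the architecture above concrete, here is a deliberately small sketch that chains the stages in order and logs each one. Everything in it is a stand-in: the tool registry, the allowlist, the "tool argument" intent parser, and the card-number filter are assumptions for illustration, and the "sandbox" is only a dictionary lookup, not real isolation.

```python
import re

AUDIT_LOG = []                      # stand-in for the Audit Logger
ALLOWED_TOOLS = {"lookup_order"}    # tools the Permission Manager exposes (hypothetical)
FAKE_CARD = "4" + "1" * 15          # 16-digit test value, not a real card number
TOOLS = {"lookup_order": lambda arg: f"order {arg}: shipped, card {FAKE_CARD}"}


def audit(stage, detail):
    AUDIT_LOG.append((stage, detail))


def handle_request(text):
    # Input validation: reject oversized or non-printable input.
    if len(text) > 200 or not text.isprintable():
        audit("input_validation", "rejected")
        raise ValueError("invalid input")
    audit("input_validation", "ok")

    # Intent analysis: a trivial "<tool> <argument>" parser stands in for a real engine.
    tool, _, arg = text.partition(" ")
    audit("intent_analysis", tool)

    # Permission manager: only allowlisted tools may run (least privilege).
    if tool not in ALLOWED_TOOLS:
        audit("permission_manager", "denied")
        raise PermissionError(f"tool not permitted: {tool}")
    audit("permission_manager", "granted")

    # Execution sandbox: here just a registry lookup and call.
    raw = TOOLS[tool](arg)
    audit("execution_sandbox", "done")

    # Output filtering: mask anything that looks like a 16-digit card number.
    filtered = re.sub(r"\b\d{16}\b", "[REDACTED]", raw)
    audit("output_filtering", "done")
    return filtered
```

A denied request still leaves audit entries for the stages it reached, which is what makes later investigation possible.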

Creating secure communication channels between collaborative AI agents is another critical aspect of my security framework. When multiple AI agent tool chains work together, each interaction point presents a potential security vulnerability that must be protected.
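
One building block for protecting an interaction point is message authentication, so that a receiving agent can detect tampering or an unknown sender. The sketch below uses HMAC-SHA256 from Python's standard library with a pre-shared key. The function names and message layout are assumptions, and this covers integrity only: it does not encrypt the payload or prevent replay, which a real channel would also need to address.

```python
import hashlib
import hmac
import json


def sign_message(shared_key: bytes, sender: str, payload: dict) -> dict:
    """Serialize the message deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps({"sender": sender, "payload": payload}, sort_keys=True)
    tag = hmac.new(shared_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(shared_key: bytes, message: dict) -> dict:
    """Return the decoded body if the tag matches; raise ValueError otherwise."""
    expected = hmac.new(
        shared_key, message["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("message failed integrity check")
    return json.loads(message["body"])
```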

I've found that designing fail-safe mechanisms that gracefully handle security exceptions is essential for maintaining system stability. These mechanisms ensure that when security violations occur, the system responds in a controlled manner rather than failing catastrophically. Using PageOn.ai's AI Blocks has been instrumental in helping me visualize these agent interaction patterns and security boundaries clearly.
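
A fail-safe of this kind is often described as "failing closed": when a security check raises, the system returns a controlled denial and records the event instead of crashing or continuing. The sketch below assumes a hypothetical `SecurityException` type and a caller-supplied `on_violation` callback. Exceptions of other types are intentionally left to propagate.

```python
class SecurityException(Exception):
    """Raised by any component that detects a policy violation."""


def run_fail_closed(action, *, on_violation):
    """Run `action`; on a security exception, return a safe denial result."""
    try:
        return {"status": "ok", "result": action()}
    except SecurityException as exc:
        on_violation(str(exc))  # e.g. forward to the audit logger
        return {"status": "denied", "result": None}
```

The caller always receives a well-formed result, so downstream agents can handle a denial as a normal outcome.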

Multi-Layer Defense Strategies for AI Systems

In my security practice, I've found that defense-in-depth approaches are particularly effective for AI workflows. By implementing multiple layers of security controls, we create a system where a breach of any single layer doesn't compromise the entire security posture.

Multi-layered defense architecture for AI systems showing security controls at each level

Runtime security monitoring for AI agent activities forms a crucial layer in this approach. By continuously analyzing agent behaviors and comparing them against expected patterns, we can detect anomalies that might indicate security breaches or unexpected emergent behaviors.

Adaptive security policies that evolve with AI capabilities represent the next frontier in AI security. I've designed systems that automatically adjust security controls based on an AI agent's learning and capability expansion, ensuring that security measures remain appropriate as the system develops.

Defense-in-Depth Strategy

Layered security controls protecting AI workflows:

flowchart TB

subgraph "Layer 1: Perimeter"

A1[Network Segmentation]

A2[API Gateways]

A3[Traffic Filtering]

end

subgraph "Layer 2: Authentication"

B1[Identity Verification]

B2[MFA]

B3[Token Validation]

end

subgraph "Layer 3: Authorization"

C1[Permission Management]

C2[Role-Based Access]

C3[Just-in-Time Access]

end

subgraph "Layer 4: Runtime"

D1[Behavior Monitoring]

D2[Anomaly Detection]

D3[Resource Limits]

end

subgraph "Layer 5: Data"

E1[Encryption]

E2[Data Minimization]

E3[Secure Storage]

end

A1 --> B1

A2 --> B2

A3 --> B3

B1 --> C1

B2 --> C2

B3 --> C3

C1 --> D1

C2 --> D2

C3 --> D3

D1 --> E1

D2 --> E2

D3 --> E3

style A1 fill:#fff0e6,stroke:#FF8000

style B1 fill:#fff0e6,stroke:#FF8000

style C1 fill:#fff0e6,stroke:#FF8000

style D1 fill:#fff0e6,stroke:#FF8000

style E1 fill:#fff0e6,stroke:#FF8000
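
The layering above can be modeled as an ordered list of independent checks, all of which must pass. The layer names follow the diagram. The individual checks and request fields are simplistic, hypothetical stand-ins for real controls.

```python
# Each layer is a (name, check) pair; the checks are deliberately simplistic.
LAYERS = [
    ("perimeter", lambda req: req.get("source_network") == "internal"),
    ("authentication", lambda req: req.get("token_valid") is True),
    ("authorization", lambda req: req.get("action") in req.get("granted_actions", ())),
    ("runtime", lambda req: req.get("calls_last_minute", 0) <= 100),
    ("data", lambda req: req.get("encrypted_channel") is True),
]


def evaluate(request: dict):
    """Return (allowed, failed_layer); the request must pass every layer in order."""
    for name, check in LAYERS:
        if not check(request):
            return False, name
    return True, None


good_request = {
    "source_network": "internal",
    "token_valid": True,
    "action": "read_report",
    "granted_actions": ["read_report"],
    "calls_last_minute": 3,
    "encrypted_channel": True,
}
```

Because each check is independent, a request that slips past one layer, for example with a stolen but valid token, can still be stopped by authorization or runtime limits.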

Secure data handling protocols for AI training and inference are essential components of a comprehensive security strategy. I've implemented systems that enforce data minimization principles, ensuring AI agents only access the specific data needed for their tasks and nothing more. Using PageOn.ai's Agentic capabilities has been invaluable in modeling potential attack vectors and developing appropriate defensive responses.
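
Data minimization can be enforced mechanically by filtering each record down to the fields a task needs before the agent ever sees it. The task names and field lists below are hypothetical.

```python
# Hypothetical mapping from task to the only fields that task needs.
TASK_FIELDS = {
    "appointment_reminder": {"patient_id", "next_visit"},
    "billing_summary": {"patient_id", "balance"},
}


def minimize(record: dict, task: str) -> dict:
    """Return a copy of the record containing only the fields the task requires."""
    allowed = TASK_FIELDS.get(task, set())  # unknown tasks receive nothing
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "patient_id": "p-17",
    "next_visit": "2025-03-01",
    "balance": 120.0,
    "diagnosis": "confidential",
}
```

The original record is never modified, and any field not listed for the task, such as `diagnosis` here, is simply absent from what the agent receives.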

Case Studies: Successful AI Security Frameworks in Action

Throughout my career implementing AI security frameworks, I've seen firsthand how different industries adapt these principles to their unique requirements. Let's explore some real-world examples that demonstrate effective security approaches across diverse sectors.

Financial Sector: Investment Analysis AI

A major investment firm implemented a secure AI agent framework for analyzing market trends and making investment recommendations. Their approach featured:

- Granular permission controls for different data sensitivity levels

- Just-in-time access for trading capabilities

- Comprehensive audit trails for regulatory compliance

- Circuit breakers to prevent excessive trading activity

Result: Zero security incidents over 18 months while maintaining high performance.
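
The case study does not describe how the firm's circuit breakers were built. As a general illustration of the idea, the sketch below trips when too many trades are attempted inside a sliding time window and then stays open until someone resets it. The class name, limits, and injected clock are all assumptions.

```python
from collections import deque


class TradeCircuitBreaker:
    """Blocks trading once `max_trades` have occurred within `window_seconds`."""

    def __init__(self, max_trades, window_seconds, clock):
        self.max_trades = max_trades
        self.window = window_seconds
        self._clock = clock      # callable returning the current time in seconds
        self._times = deque()    # timestamps of recently allowed trades
        self.tripped = False

    def allow(self) -> bool:
        """Return True if a trade may proceed; once tripped, deny until reset()."""
        if self.tripped:
            return False
        now = self._clock()
        # Drop timestamps that have aged out of the window.
        while self._times and now - self._times[0] >= self.window:
            self._times.popleft()
        if len(self._times) >= self.max_trades:
            self.tripped = True
            return False
        self._times.append(now)
        return True

    def reset(self):
        """Manual reset, for example after human review."""
        self._times.clear()
        self.tripped = False
```

Requiring a manual reset is a design choice: it forces a human to look at why the limit was hit before the agent can trade again.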

Healthcare: Patient Data Analysis

A healthcare network deployed AI agents to analyze patient data while maintaining strict HIPAA compliance:

- Data anonymization at multiple processing stages

- Role-based access control aligned with clinical roles

- Secure sandboxes for testing new analytical models

- Visual dashboards showing data access patterns

Result: Improved diagnostic insights while enhancing patient data protection.

In the manufacturing sector, I worked with a company that implemented secure human-AI collaborative environments for their production facilities. By clearly defining operational boundaries and implementing visual security indicators, they created a system where human workers and AI systems could safely collaborate while maintaining strict security controls.

Government applications present some of the most challenging security requirements. I've helped agencies develop frameworks that balance stringent security controls with the need for AI innovation. The key to success in these environments has been creating clear visual representations of security boundaries and permissions, making complex security requirements accessible to all stakeholders. Many organizations have used PageOn.ai to visualize and strengthen their AI security posture, making abstract security concepts tangible and actionable.

Future-Proofing Your AI Security Framework

As AI capabilities continue to advance rapidly, security frameworks must evolve accordingly. I've developed strategies for future-proofing security approaches that can adapt to emerging challenges and technologies.

Next-generation adaptive security framework responding to evolving AI capabilities

Emerging standards and best practices in AI security governance provide a foundation for future-ready security frameworks. Organizations like NIST and ISO have already published AI-specific guidance, such as the NIST AI Risk Management Framework and ISO/IEC 42001, and further standards are likely to shape the regulatory landscape in coming years. Staying ahead of these developments is essential for maintaining robust security postures.

Evolution of AI Security Capabilities

Projected development of security approaches for advanced AI systems:

timeline

title AI Security Evolution Timeline

section Current

Static Permission Models : Rule-based access control

Reactive Monitoring : Alert-based detection

Manual Incident Response : Human-driven remediation

section Near Future (1-2 years)

Adaptive Permissions : Context-sensitive access

Predictive Monitoring : Pattern-based detection

Semi-Automated Response : Human-supervised remediation

section Mid Future (3-5 years)

Intent-Based Security : Purpose-driven access control

Cognitive Threat Detection : Behavior anomaly analysis

Autonomous Response : Self-healing systems

section Long Future (5+ years)

Self-Governing AI : Ethical self-constraint

Collaborative Defense : AI security collectives

Preventative Security : Threat anticipation

Building adaptable security frameworks that evolve with AI technology is perhaps the most important strategy for future-proofing. Rather than creating rigid security rules, I design systems with flexible security policies that can be adjusted as new capabilities and threats emerge.

Creating organizational readiness for AI security incidents is equally important. By developing response playbooks and conducting regular tabletop exercises, teams can prepare for security challenges before they occur. This proactive approach dramatically improves response effectiveness when incidents do happen.

I've found PageOn.ai to be an invaluable tool for modeling future security scenarios and response strategies. By creating visual representations of potential threats and mitigation approaches, teams can better prepare for emerging security challenges and ensure their AI systems remain secure even as capabilities advance.

Conclusion: Building a Secure AI Future

Throughout this guide, I've shared strategies and frameworks for securing AI systems that balance powerful capabilities with robust protection. As AI becomes increasingly integrated into critical workflows, the importance of thoughtful security architecture only grows.

The most successful AI security implementations I've seen share common characteristics: they use visual frameworks to make complex security concepts accessible, they implement defense-in-depth approaches with multiple protection layers, and they remain adaptable as AI capabilities evolve.

By applying the principles and techniques we've explored, you can create AI systems that are both powerful and secure, enabling innovation while protecting critical assets. Remember that security is never a finished state but an ongoing process of adaptation and improvement. With the right visualization tools and security mindset, you can build AI systems that earn and maintain trust through robust, transparent security practices.
